Speech synthesis method based on generative adversarial network

A speech synthesis and generative technology, applied in speech synthesis, biological neural network model, speech analysis, etc., can solve the problems of no significant improvement in synthesis speed, slow speed, difficult application, etc., and achieve small model parameters and fast speed , to ensure the effect of clarity

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0048] Specific embodiments of the present invention will be further described in detail below.

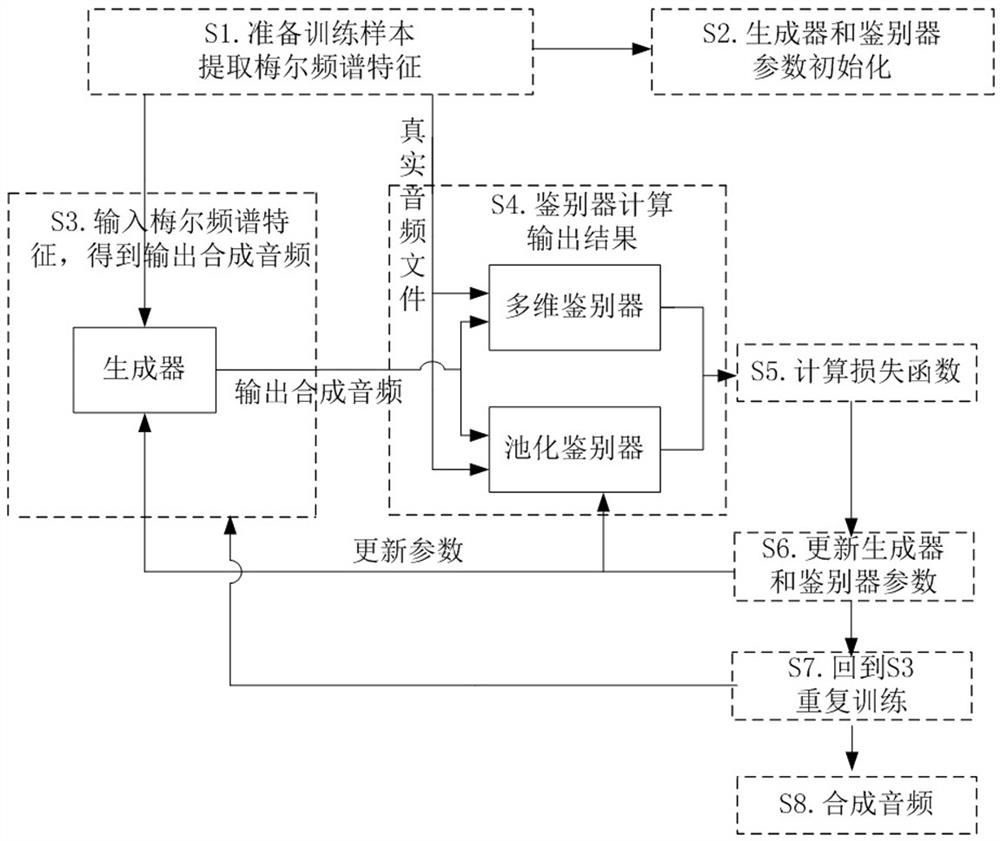

[0049] The speech synthesis method based on the generative confrontation network of the present invention comprises the following steps:

[0050] S1. Prepare training samples, including real audio data, and extract the Mel spectrum features of the real audio data;

[0051]S2. According to the extraction method and sampling rate of Mel spectral features, set the initialized generator parameter group, including setting one-dimensional deconvolution parameters and one-dimensional convolution parameters; set the initialized discriminator parameter group, including multi-dimensional discriminator and The parameters of the pooling discriminator;

[0052] S3. Input the Mel spectrum feature to the generator, and the corresponding output synthetic audio is obtained by the generator;

[0053] S4. Correspondingly input the real audio data in S1 and the output synthetic audio obtained by S3...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com