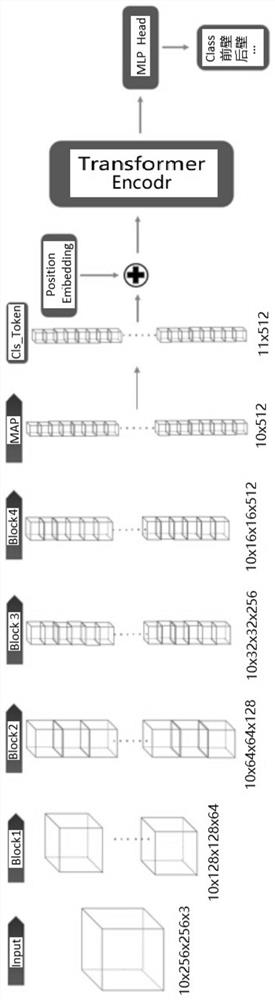

Transformer-based gastroscope video part recognition network structure

A network structure, applied in the field of video recognition, that addresses problems such as poor recognition accuracy. It improves classification accuracy and can assist image capture and diagnosis.


Embodiment Construction

[0011] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments. This embodiment is implemented on the basis of the technical solution of the present invention, and a detailed implementation and a specific operating procedure are given, but the protection scope of the present invention is not limited to the following embodiments.

[0012] Figure 1 shows a schematic diagram of the Transformer-based gastroscope video part recognition network structure. Video images are collected in real time and input to the recognition network, where they first enter feature extraction. The feature extraction part follows a CNN structure: a 2D convolution kernel extracts features independently from each frame, that is, the kernel slides over each frame image. The frames pass through four convolutional layers (Block1~Block4), and finally dimensionality reduction feature extraction is performed th...
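The per-frame feature extraction described above can be illustrated with a minimal pure-Python sketch. It is not the patented implementation: the source only states that a 2D convolution kernel slides over each frame independently and that the frames pass through four convolutional layers (Block1~Block4). The names `conv2d`, `extract_frame_features`, and `extract_video_features`, the single-channel frames, the 3x3 averaging kernels, the stride of 1, the absence of padding, and the ReLU activation are all assumptions made for illustration.

```python
from typing import List

Frame = List[List[float]]  # one single-channel frame, H x W (assumed layout)


def conv2d(frame: Frame, kernel: Frame) -> Frame:
    """Slide one 2D kernel over one frame (stride 1, no padding; assumed)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(frame), len(frame[0])
    out: Frame = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += frame[i + di][j + dj] * kernel[di][dj]
            row.append(max(acc, 0.0))  # ReLU activation (assumption)
        out.append(row)
    return out


def extract_frame_features(frame: Frame, kernels: List[Frame]) -> Frame:
    """Pass one frame through the blocks in order (Block1..Block4)."""
    x = frame
    for kernel in kernels:
        x = conv2d(x, kernel)
    return x


def extract_video_features(video: List[Frame], kernels: List[Frame]) -> List[Frame]:
    """Apply the same 2D blocks to every frame independently."""
    return [extract_frame_features(frame, kernels) for frame in video]


# Hypothetical input: two 9x9 frames and four 3x3 averaging kernels.
avg_kernel = [[1.0 / 9.0] * 3 for _ in range(3)]
kernels = [avg_kernel] * 4  # stand-ins for Block1..Block4
video = [
    [[1.0] * 9 for _ in range(9)],  # frame of ones
    [[0.0] * 9 for _ in range(9)],  # frame of zeros
]
features = extract_video_features(video, kernels)
```

Because each frame is processed on its own, the features of one frame do not depend on its neighbours in this stage. With the sizes assumed here, each block shrinks the map by two pixels per side, so a 9x9 frame ends as a single value after four blocks.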
