Multi-scale filtering target tracking method based on adaptive feature fusion

A feature-fusion and target-tracking technology, applied in the field of target tracking, that addresses problems such as motion blur and improves tracking accuracy and success rate.

Embodiment Construction

[0033] Hereinafter, embodiments of the present invention will be described with reference to the drawings.

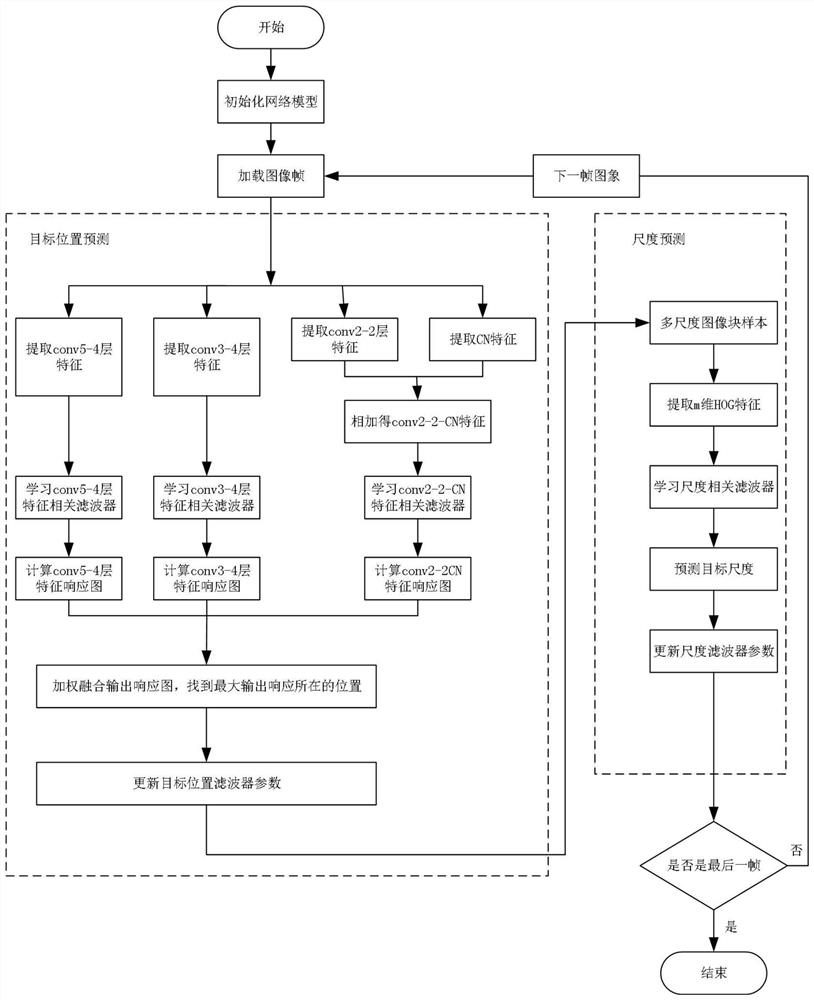

[0034] As shown in Figure 1, the method of the present invention comprises the following steps:

[0035] Step 1. Read and process the video:

[0036] First, initialize the network module. Then read the network video and obtain Q image frames from the video stream. Convert each RGB image to grayscale and extract infrared features and depth features to form a three-channel multi-modal image, which serves as the template. Then crop a search window of size n×n centered on the position estimated in the previous frame.
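The preprocessing in Step 1 can be illustrated with a minimal NumPy sketch. It is not the patented implementation: it assumes the infrared and depth data are already available as single-channel arrays aligned with the RGB frame, uses the common BT.601 luma weights for the grayscale conversion, and replicates edge pixels when the window extends past the frame. The function names `rgb_to_gray`, `build_multimodal`, and `crop_search_window` are hypothetical.

```python
import numpy as np

def rgb_to_gray(rgb):
    """Convert an H x W x 3 RGB image to H x W grayscale (BT.601 luma weights, an assumption)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights

def build_multimodal(rgb, infrared, depth):
    """Stack grayscale, infrared, and depth planes into one H x W x 3 image.

    Assumes infrared and depth are H x W arrays already aligned with the RGB frame.
    """
    gray = rgb_to_gray(rgb)
    return np.stack(
        [gray, infrared.astype(np.float64), depth.astype(np.float64)], axis=-1
    )

def crop_search_window(image, center, n):
    """Crop an n x n window centered on (row, col).

    Indices that fall outside the frame are clamped, which replicates edge pixels
    (a border-handling choice made for this sketch only).
    """
    row, col = center
    half = n // 2
    rows = np.clip(np.arange(row - half, row - half + n), 0, image.shape[0] - 1)
    cols = np.clip(np.arange(col - half, col - half + n), 0, image.shape[1] - 1)
    return image[np.ix_(rows, cols)]

# Usage with placeholder data: a 120 x 160 frame and a window near the border.
rgb = np.zeros((120, 160, 3), dtype=np.uint8)
infrared = np.zeros((120, 160))
depth = np.zeros((120, 160))
frame = build_multimodal(rgb, infrared, depth)
window = crop_search_window(frame, center=(5, 150), n=64)
print(window.shape)  # (64, 64, 3)
```

Clamping the indices keeps the window at exactly n×n even when the previous position estimate lies near the frame boundary, so later stages always receive a fixed-size input.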

[0037] Step 2. Extract features and fuse them. The specific steps are as follows:

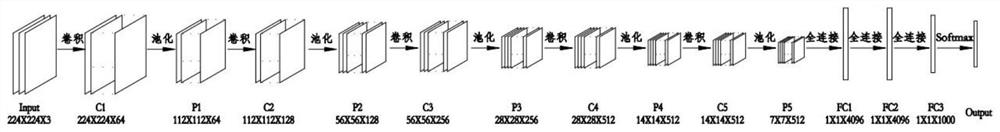

[0038] Step 2.1 uses the VGG19 network shown in Figure 2, pre-trained on ImageNet, for feature extraction. For the image frame of a given search window of size n×n, a spatial size is set, which is used to adjust the feature size of each convolutional layer. First, delete the fully connected layers, and use the out...
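The idea of adjusting the feature maps of different convolutional layers to one common spatial size can be sketched as follows. This is only an illustration under several assumptions: the target size is not recoverable from the text, so 32×32 is a hypothetical value; bilinear interpolation is one plausible resizing method, not necessarily the one used in the patent; and the random arrays stand in for real VGG19 activations, with channel counts and resolutions chosen arbitrarily.

```python
import numpy as np

def resize_bilinear(feat, out_h, out_w):
    """Bilinearly resize a C x H x W feature map to C x out_h x out_w.

    Sample positions span the input grid from corner to corner.
    """
    c, h, w = feat.shape
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]  # vertical interpolation weights
    wx = (xs - x0)[None, None, :]  # horizontal interpolation weights
    top = feat[:, y0][:, :, x0] * (1 - wx) + feat[:, y0][:, :, x1] * wx
    bottom = feat[:, y1][:, :, x0] * (1 - wx) + feat[:, y1][:, :, x1] * wx
    return top * (1 - wy) + bottom * wy

# Stand-in feature maps at three resolutions (placeholders, not real VGG19 outputs).
rng = np.random.default_rng(0)
layer_feats = [
    rng.standard_normal((4, 56, 56)),
    rng.standard_normal((8, 28, 28)),
    rng.standard_normal((8, 14, 14)),
]

TARGET = 32  # hypothetical common spatial size; the source value is missing
resized = [resize_bilinear(f, TARGET, TARGET) for f in layer_feats]
print([r.shape for r in resized])  # [(4, 32, 32), (8, 32, 32), (8, 32, 32)]
```

Once every layer's output shares the same height and width, the maps can be compared or combined position by position, which is what a later fusion step would require.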
