Neural network-based automatic skinning method and device for character mesh models

A technique combining neural networks and mesh models, applied in the fields of biological neural network models, neural learning methods, and neural architectures. It addresses the problem of unsatisfactory deformation in joint areas and improves quality.

Examples

Embodiment 1

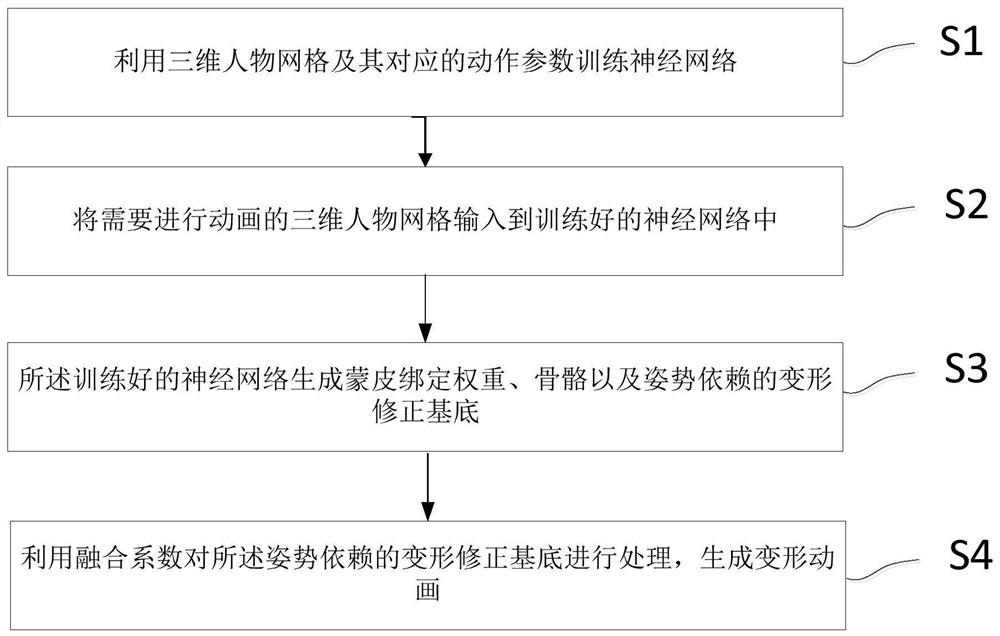

[0039] This embodiment implements a neural network-based automatic skinning method for a character mesh model, as shown in Figure 1, including the following steps:

[0040] S1. Train the neural network using the 3D character mesh and its corresponding action parameters;

[0041] S2. Input the 3D character mesh to be animated into the trained neural network;

[0042] S3. The trained neural network generates skin binding weights, bones, and pose-dependent deformation correction bases;

[0043] S4. Process the pose-dependent deformation correction bases with fusion coefficients to generate the deformation animation.
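Step S4 can be illustrated with a minimal sketch. The text above does not say how the fusion coefficients are applied, so this assumes the common blend-shape convention: each correction base is a set of per-vertex offsets, and the corrected mesh is the rest mesh plus the coefficient-weighted sum of those offsets. The function name `apply_correction_bases` and all sample values are hypothetical.

```python
from typing import List, Sequence

Vec3 = Sequence[float]


def apply_correction_bases(
    rest_vertices: Sequence[Vec3],
    bases: Sequence[Sequence[Vec3]],
    coeffs: Sequence[float],
) -> List[List[float]]:
    """Add a coefficient-weighted sum of per-vertex offsets to the rest mesh.

    Assumption: bases[k][i] is the offset that base k applies to vertex i,
    and coeffs[k] is the fusion coefficient of base k.
    """
    if len(bases) != len(coeffs):
        raise ValueError("need exactly one coefficient per correction base")
    corrected = [list(v) for v in rest_vertices]
    for basis, c in zip(bases, coeffs):
        if len(basis) != len(corrected):
            raise ValueError("each base needs one offset per vertex")
        for i, offset in enumerate(basis):
            for axis in range(3):
                corrected[i][axis] += c * offset[axis]
    return corrected


# Two vertices and two correction bases (made-up numbers).
rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
bases = [
    [(0.0, 0.1, 0.0), (0.0, 0.0, 0.0)],  # base 0 moves vertex 0 along y
    [(0.0, 0.0, 0.0), (0.0, 0.0, 0.2)],  # base 1 moves vertex 1 along z
]
coeffs = [0.5, 1.0]
corrected = apply_correction_bases(rest, bases, coeffs)
```

Under this assumption the correction is linear in the coefficients, so changing the coefficients from pose to pose yields a different residual for each frame of the animation.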

[0044] Preferably, the action parameters comprise joint rotations.

[0045] Specifically, the neural network includes a mesh convolutional neural network, a bone-aware convolutional neural network, and a multi-layer perceptron.

[0046] Preferably, the 3D character mesh to be animated is in a T-pose.

[0047] Preferably, there are nine posture-...

Embodiment 2

[0057] This embodiment implements a neural network-based automatic skinning method for a character mesh model, including the following steps:

[0058] Use the 3D character mesh and its corresponding action parameters to train the neural network;

[0059] Input the 3D character mesh that needs to be animated into the trained neural network;

[0060] The trained neural network generates skin binding weights, bones, and pose-dependent deformation correction bases;

[0061] The pose-dependent deformation correction bases are processed using fusion coefficients to generate the deformation animation.

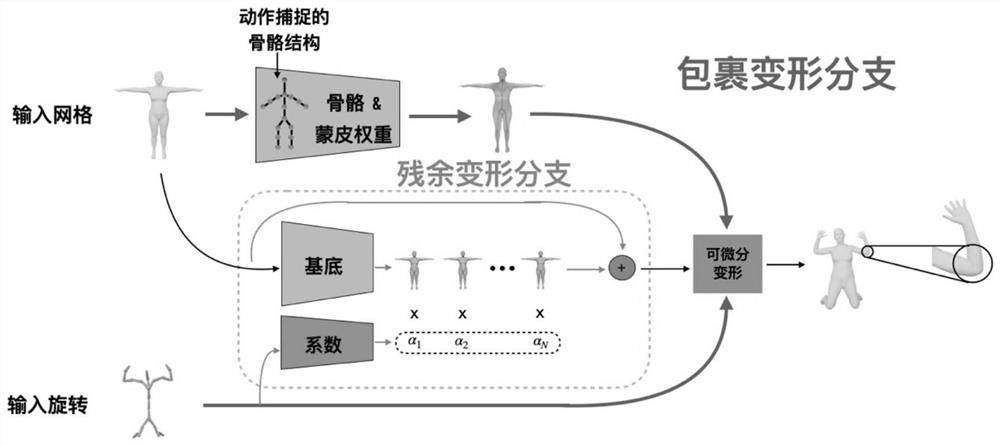

[0062] Training the neural network involves two branches, as shown in Figure 2: a wrap deformation branch and a residual deformation branch. The wrap deformation branch predicts the corresponding skin binding weights and bones, and deforms the input model using differentiable deformations. At the same time, the residual deformation br...
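The paragraph above does not name the deformation used by the first branch. As an illustration only, the sketch below assumes linear blend skinning, a standard choice in which each deformed vertex is a weighted sum of the vertex as moved by each bone. Bone motion is simplified to a rotation about the z axis around a joint pivot, given directly in world space; `rotate_z_about`, `linear_blend_skinning`, and the sample data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rotate_z_about(point: Vec3, pivot: Vec3, angle: float) -> Vec3:
    """Rotate `point` by `angle` radians about a z-parallel axis through `pivot`."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = (point[0] - pivot[0], point[1] - pivot[1], point[2] - pivot[2])
    return (c * x - s * y + pivot[0], s * x + c * y + pivot[1], z + pivot[2])


def linear_blend_skinning(
    vertices: Sequence[Vec3],
    weights: Sequence[Sequence[float]],
    pivots: Sequence[Vec3],
    angles: Sequence[float],
) -> List[Vec3]:
    """Blend per-bone rigid motions with per-vertex skin weights.

    weights[i][j] is the influence of bone j on vertex i; each row is
    assumed to sum to 1.
    """
    deformed = []
    for v, w_row in zip(vertices, weights):
        acc = [0.0, 0.0, 0.0]
        for w, pivot, angle in zip(w_row, pivots, angles):
            moved = rotate_z_about(v, pivot, angle)
            for axis in range(3):
                acc[axis] += w * moved[axis]
        deformed.append((acc[0], acc[1], acc[2]))
    return deformed


# A two-bone "arm" along x: bone 0 stays fixed, bone 1 bends 90 degrees
# around the joint at x = 1.
vertices = [(0.5, 0.0, 0.0), (1.5, 0.0, 0.0), (2.0, 0.0, 0.0)]
weights = [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]
pivots = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
angles = [0.0, math.pi / 2]
posed = linear_blend_skinning(vertices, weights, pivots, angles)
```

Every operation here is a sum or product of the weights and the rotated positions, so in an automatic-differentiation framework gradients could flow back to the predicted weights and bones. The middle vertex, shared equally by both bones, ends up partway between the two rigid results; artifacts of this kind near joints are what a residual correction is typically meant to address.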

Embodiment 3

[0065] This embodiment implements a neural network-based automatic skinning method for a character mesh model, including the following steps:

[0066] Use the 3D character mesh and its corresponding action parameters to train the neural network;

[0067] Input the 3D character mesh that needs to be animated into the trained neural network;

[0068] The trained neural network generates skin binding weights, bones, and pose-dependent deformation correction bases;

[0069] The pose-dependent deformation correction bases are processed using fusion coefficients to generate the deformation animation.

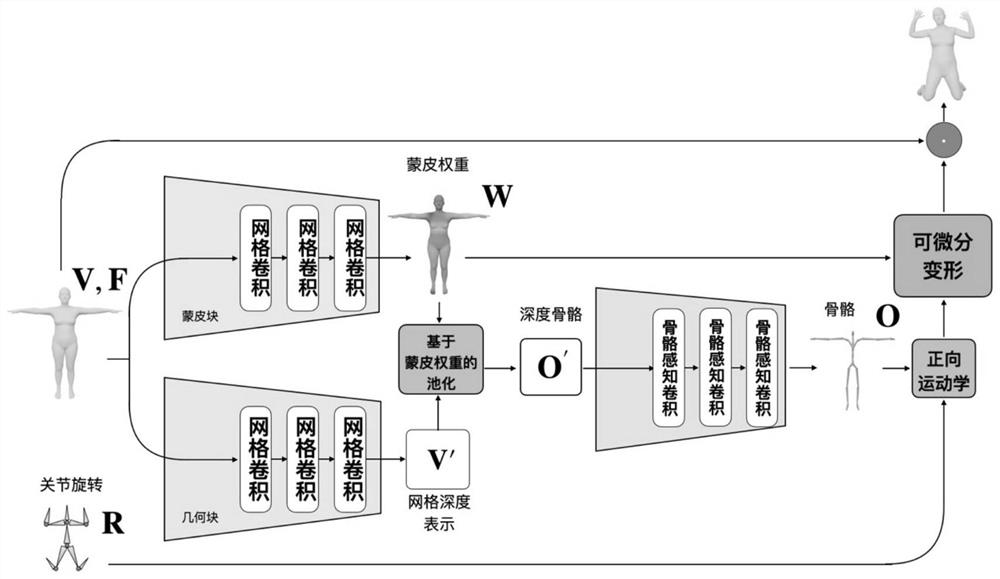

[0070] The 3D character mesh to be animated is in a T-pose. Specifically, the T-pose 3D character mesh is input into both a first mesh convolutional neural network and a second mesh convolutional neural network. The first mesh convolutional neural network generates the skin binding weights, and the second mesh convolutional neural network calculates ...
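To make the first network's output concrete, the toy sketch below shows one way per-vertex features could become skin binding weights. It is not the architecture described above: the "convolution" is plain averaging over each vertex's one-ring neighbors with no learned parameters, and the softmax normalization (so that each vertex's weights over the bones sum to 1) is an assumption. The names `mesh_conv_mean` and `softmax`, and all values, are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def mesh_conv_mean(
    features: Sequence[Sequence[float]],
    neighbors: Dict[int, List[int]],
) -> List[List[float]]:
    """Average each vertex's feature vector with those of its one-ring neighbors."""
    out = []
    for i, feat in enumerate(features):
        ring = [feat] + [features[j] for j in neighbors[i]]
        out.append([sum(column) / len(ring) for column in zip(*ring)])
    return out


def softmax(row: Sequence[float]) -> List[float]:
    """Map raw per-bone scores to positive weights that sum to 1."""
    peak = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


# A chain of three vertices and two bones; scores[i][j] is a made-up raw
# score for the influence of bone j on vertex i.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
scores = [[2.0, 0.0], [1.0, 1.0], [0.0, 2.0]]

smoothed = mesh_conv_mean(scores, neighbors)
skin_weights = [softmax(row) for row in smoothed]
```

Normalizing per vertex keeps every blended position a convex combination of the per-bone results, which is the usual requirement for skinning weights.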
