Animation character facial expression generation method and system based on facial expression recognition

A facial expression recognition and expression generation technology, applied to the acquisition and recognition of facial features, animation production, neural learning methods, and related fields. It aims to improve the user experience, reduce production cost, and improve the capture effect.

Embodiment 1

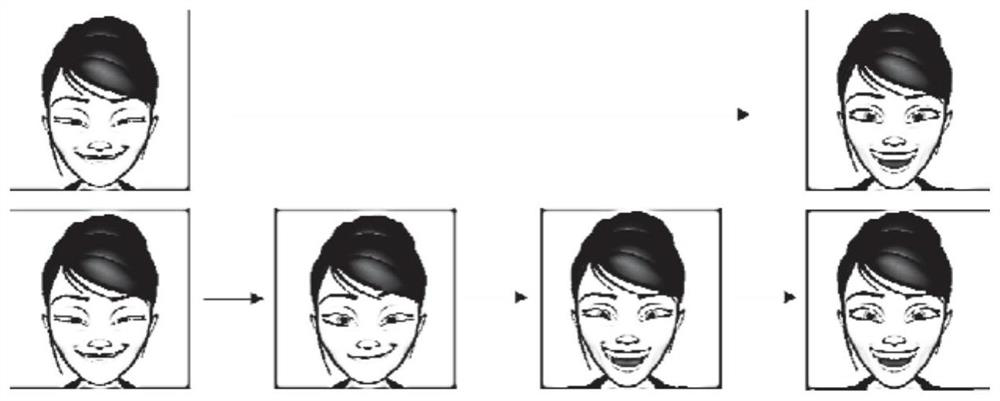

[0045] A method for generating facial expressions of animated characters based on facial expression recognition, comprising the following steps:

[0046] S1: the expressions of faces and animated characters in the face data set and the animation data set are recognized through an emotion recognition network, and face pictures are matched with animation pictures;
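The matching part of step S1 can be illustrated with a minimal sketch. It assumes the emotion recognition network has already produced one expression label per picture; the function name `match_by_expression`, the picture identifiers, and the round-robin pairing rule are illustrative assumptions, not details given in the source.

```python
from collections import defaultdict
from itertools import cycle


def match_by_expression(face_labels, anim_labels):
    """Pair each face picture with an animation picture of the same label.

    face_labels / anim_labels: dict mapping picture id -> predicted label
    (hypothetical inputs; the labels would come from the recognition network).
    Returns a list of (face_id, anim_id) pairs.
    """
    # Group animation pictures by their predicted expression label.
    anim_by_label = defaultdict(list)
    for anim_id, label in anim_labels.items():
        anim_by_label[label].append(anim_id)

    # One round-robin iterator per label, so animation pictures are reused evenly.
    pools = {label: cycle(ids) for label, ids in anim_by_label.items()}

    pairs = []
    for face_id, label in face_labels.items():
        if label in pools:  # faces without a same-label animation picture are skipped
            pairs.append((face_id, next(pools[label])))
    return pairs
```

Pairing on the predicted label alone is the simplest possible rule; a real pipeline might also compare expression intensity or network features before accepting a pair.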

[0047] The face data sets used in this embodiment are CK+, DISFA, KDEF and MMI. From each data set, frontal face pictures are selected under seven labels: six basic emotions plus neutral. Rotation, scaling and other operations are applied to the pictures under each label for data augmentation, so that the number of pictures under each label is equal; about 10,000 pictures are obtained in total. The illustrations in this embodiment and the pictures showing the experimental results all come from these data sets.
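The label balancing described above can be sketched as a planning step that decides how many augmented copies each label needs. The function name `plan_augmentation`, the angle and zoom ranges, and the choice to bring every label up to the size of the largest one are assumptions made for illustration; the source only states that rotation and zooming are used to equalize the label counts.

```python
import random
from collections import Counter


def plan_augmentation(labels, max_angle=15.0, zoom_range=(0.9, 1.1), seed=0):
    """Plan rotate/zoom jobs so that every label ends up with the same count.

    labels: list with one expression label per picture.
    Returns a list of (source_index, angle_degrees, zoom_factor) jobs.
    The parameter ranges are illustrative defaults, not values from the source.
    """
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())  # assumed target: size of the largest label

    # Collect the picture indices that belong to each label.
    by_label = {}
    for index, label in enumerate(labels):
        by_label.setdefault(label, []).append(index)

    jobs = []
    for label, indices in by_label.items():
        # Create exactly as many augmented copies as this label is missing.
        for _ in range(target - counts[label]):
            source = rng.choice(indices)
            angle = rng.uniform(-max_angle, max_angle)
            zoom = rng.uniform(*zoom_range)
            jobs.append((source, angle, zoom))
    return jobs
```

Each job would then be handed to an image library to produce the rotated and zoomed copy; keeping the plan separate from the pixel operations makes the balancing logic easy to check on its own.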

[0048] The 3D animation data set used in this embodiment comes from the FERG-3D-DB data set, including four characters of...

Embodiment 2

[0076] With reference to Figure 1 to Figure 5, a facial expression generation system for animated characters based on facial expression recognition includes:

[0077] The data preprocessing module recognizes the expressions of faces and animated characters in the face data sets and animation data sets through deep convolutional networks, and matches face pictures with animation pictures;

[0078] The offline training module obtains the mapping relationship between face pictures with the same expression and character bone parameters through deep learning;

[0079] The online generation module first inputs the key frames of the human face to the offline training module to obtain the bone parameters of the character; then interpolates the obtained bone parameters using the relationship between the preceding and following key frames; then performs three-dimensional reconstruction to obtain the motion parameters of the character; and finally combines the geometric information of the face picture to opti...
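The key-frame interpolation step of the online generation module can be illustrated as follows. The source does not say which interpolation scheme is used, so this sketch assumes plain linear interpolation between neighbouring key frames; the function name `interpolate_bone_params` and the flat list-of-floats representation of bone parameters are also assumptions.

```python
from bisect import bisect_right


def interpolate_bone_params(keyframes, num_frames):
    """Fill in bone parameters for every frame from sparse key frames.

    keyframes: list of (frame_index, params), sorted by strictly increasing
    frame_index, where params is a list of floats (hypothetical layout).
    Returns a list with one parameter list per frame 0..num_frames-1.
    Frames outside the key-frame range hold the nearest key frame's values.
    """
    frame_indices = [frame for frame, _ in keyframes]
    result = []
    for t in range(num_frames):
        k = bisect_right(frame_indices, t)  # number of key frames at or before t
        if k == 0:
            result.append(list(keyframes[0][1]))
        elif k == len(keyframes):
            result.append(list(keyframes[-1][1]))
        else:
            (f0, p0), (f1, p1) = keyframes[k - 1], keyframes[k]
            w = (t - f0) / (f1 - f0)  # position of t between the two key frames
            result.append([a + w * (b - a) for a, b in zip(p0, p1)])
    return result
```

Linear blending is adequate for translation-like parameters; if the bone parameters are rotations, a rotation-aware scheme such as spherical interpolation would be the more usual choice.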
