Traffic scene sound classification method and system based on deep learning

A deep-learning-based method for classifying sounds in traffic scenes, applied in the field of traffic scene sound classification. It addresses the problem that existing capture systems cannot classify sounds, which leads to missed captures, false alarms, and misjudgments. The stated effects are higher accuracy and capture rate and fewer false positives.

Embodiment Construction

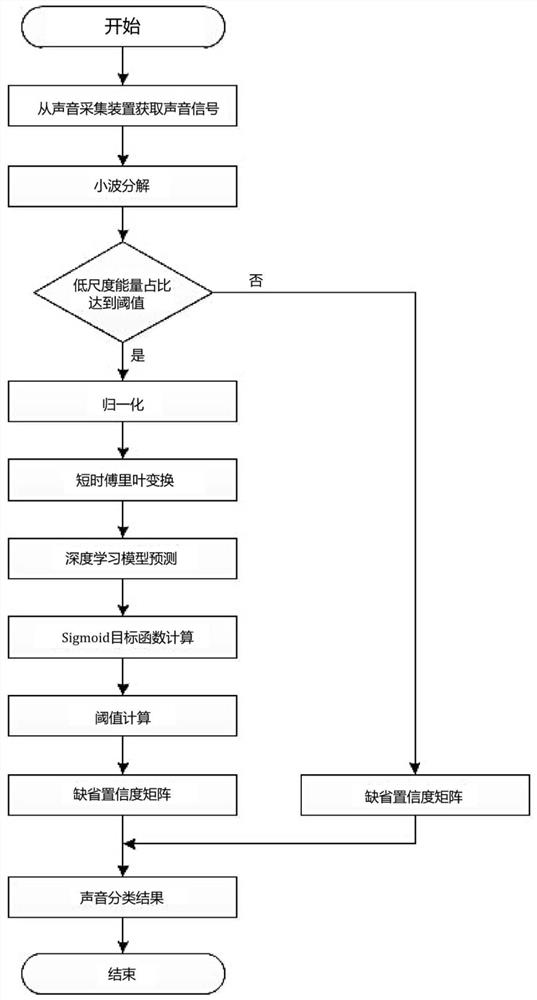

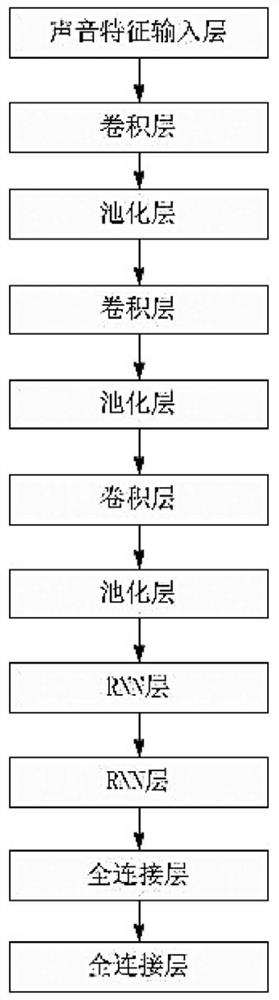

[0026] As shown in Figure 1, this embodiment relates to a deep-learning-based traffic scene sound classification method. A sound signal is acquired in real time from the sound collection device and screened. The signal is then normalized and its short-time Fourier transform (STFT) is computed to obtain sound features. A pre-trained deep learning model analyzes these features and outputs a predicted classification matrix, which an objective function converts into a confidence matrix. From the confidence matrix, all sound event categories present in the signal and the time intervals in which those events occur are determined. The specific steps are as follows:
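Paragraph [0026] names the processing stages but not their parameters, so the following is only a minimal sketch under stated assumptions: peak normalization, a Hann-windowed magnitude STFT with 1024-sample frames and a 512-sample hop, a sigmoid standing in for the unspecified "objective function" that maps the predicted classification matrix (frames × classes) to a confidence matrix, and a fixed 0.5 threshold for reading off event categories and time intervals. The pre-trained model is not described, so a hand-written logit matrix can stand in for its output. All function names and parameter values are hypothetical.

```python
import numpy as np


def normalize(signal):
    """Peak-normalize to [-1, 1] (assumed normalization scheme)."""
    signal = np.asarray(signal, dtype=float)
    peak = np.max(np.abs(signal))
    return signal / peak if peak > 0 else signal


def stft_magnitude(signal, frame_len=1024, hop=512):
    """Hann-windowed magnitude STFT; returns an array of shape (frames, frame_len // 2 + 1)."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack(
        [signal[i * hop: i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1))


def to_confidence(pred):
    """Map raw model outputs (frames x classes) to [0, 1] with a sigmoid (assumed objective function)."""
    return 1.0 / (1.0 + np.exp(-pred))


def detect_events(conf, hop_seconds, threshold=0.5):
    """Return (class_index, start_s, end_s) for each run of frames whose confidence meets the threshold."""
    events = []
    active = conf >= threshold
    for c in range(conf.shape[1]):
        start = None
        for t, on in enumerate(active[:, c]):
            if on and start is None:
                start = t  # an event of class c begins at frame t
            elif not on and start is not None:
                events.append((c, start * hop_seconds, t * hop_seconds))
                start = None
        if start is not None:  # event still active at the last frame
            events.append((c, start * hop_seconds, len(active) * hop_seconds))
    return events
```

For example, with a hop of 0.5 s, a class whose logits are positive only in frames 1 and 2 yields one event spanning 0.5 s to 1.5 s. A sigmoid is used here because it scores each class independently, which lets overlapping traffic sounds be reported in the same frame; a softmax would instead force the classes to compete.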

[0027] Step 1) Screen the real-time sound signal obtained from the sound collection device: decompose the signal with a wavelet packet transform, using the db4 wavelet as the analysis wavelet, and calculate the proportion of...
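Step 1 is truncated at "the proportion of...", so the quantity the method actually computes is not recoverable here. The sketch below only illustrates a full wavelet packet decomposition with the db4 analysis wavelet. As a purely hypothetical example of a "proportion", it reports each terminal sub-band's share of the total energy. The decomposition depth (level 3), the periodic boundary handling, the power-of-two signal length, and all function names are assumptions, not details from the source.

```python
import numpy as np

# db4 (8-tap Daubechies) decomposition low-pass filter coefficients.
DB4_LO = np.array([
    -0.010597401784997278, 0.032883011666982945,
    0.030841381835986965, -0.18703481171888114,
    -0.02798376941698385, 0.6308807679295904,
    0.7148465705525415, 0.23037781330885523,
])
# Matching high-pass filter via the quadrature-mirror relation g[k] = (-1)^k * h[L-1-k].
DB4_HI = np.array([(-1) ** k * DB4_LO[::-1][k] for k in range(len(DB4_LO))])


def _analysis_step(x, filt):
    """Filter with periodic extension and keep every second sample (assumes len(x) is even)."""
    n = len(x)
    out = np.zeros(n // 2)
    for i in range(n // 2):
        idx = (2 * i + np.arange(len(filt))) % n  # wrap around the signal end
        out[i] = np.dot(filt, x[idx])
    return out


def wavelet_packet_leaves(x, level):
    """Split every node into low/high bands at each level; return the 2**level leaves in natural order."""
    nodes = [np.asarray(x, dtype=float)]
    for _ in range(level):
        children = []
        for node in nodes:
            children.append(_analysis_step(node, DB4_LO))
            children.append(_analysis_step(node, DB4_HI))
        nodes = children
    return nodes


def band_energy_proportions(x, level=3):
    """Hypothetical screening statistic: each leaf sub-band's share of total energy."""
    energies = np.array([np.sum(c ** 2) for c in wavelet_packet_leaves(x, level)])
    return energies / energies.sum()
```

Because db4 is an orthonormal wavelet, this periodic decomposition preserves signal energy, so the leaf energies sum to the energy of the input and the proportions sum to one. Leaf 0 is always the lowest-frequency band; the remaining leaves are in natural (not frequency) order.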
