Method for detecting character interaction in image based on multi-feature fusion

This multi-feature fusion interaction detection technique, applied in the field of visual relationship detection and understanding, addresses problems such as difficulty in determining the correlation between human and object instances, low detection accuracy, and wrong associations.

Examples

Embodiment 1

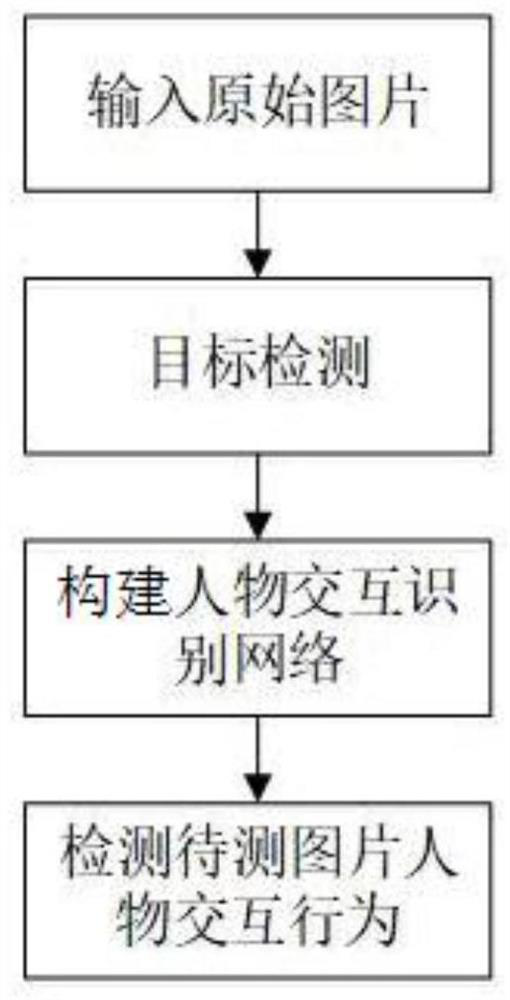

[0043] In this embodiment, referring to Figure 1, a method for detecting human-object interaction in images based on multi-feature fusion comprises the following steps:

[0044] Step 1: Input the original picture;

[0045] Step 2: Perform target detection;

[0046] Step 3: Build a human-object interaction recognition network;

[0047] Step 4: Detect the human-object interactions in the picture to be tested.

[0048] In step 2, a target detection algorithm detects all instance information in the picture, including the position of each human body and the position and category of each object. This information is then input into the trained human-object interaction recognition network to detect the interactions between the human-object pairs in the picture to be tested.

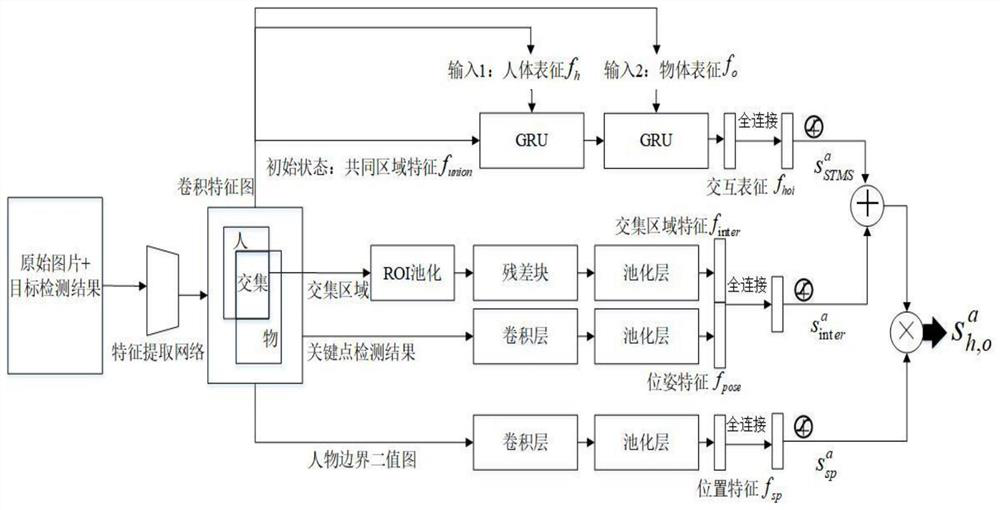

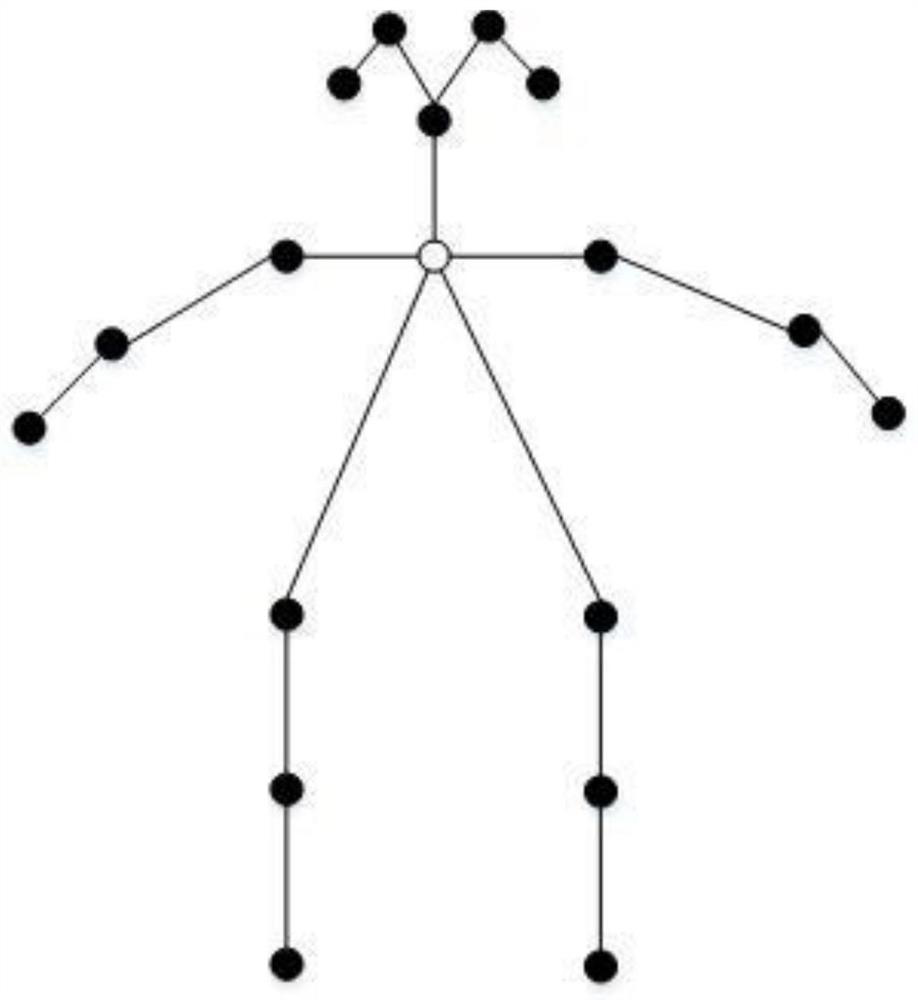

[0049] In step 3, the human-object interaction recognition network adopts a multi-branch neural network structure, including a paired branch, an intersection branch and a short-term me...

Embodiment 2

[0052] This embodiment is substantially the same as Embodiment 1, with the following particular features:

[0053] In this embodiment, target detection in step 2 proceeds as follows:

[0054] Use the trained target detector to perform target detection on the input picture, obtaining the human candidate box $b_h$ with human confidence $s_h$, and the object candidate box $b_o$ with object confidence $s_o$. The subscript $h$ denotes the human body and $o$ denotes the object.
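As a minimal sketch of how the detector output described above could be organised, the following Python snippet separates raw detections into human candidates ($b_h$, $s_h$) and object candidates ($b_o$, $s_o$). The `Detection` record, the `"person"` label and both confidence thresholds are illustrative assumptions, not values taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


@dataclass(frozen=True)
class Detection:
    box: Box        # candidate box b
    score: float    # detector confidence s
    category: str   # class label predicted by the detector


def split_detections(
    detections: List[Detection],
    human_thresh: float = 0.5,   # assumed threshold, not from the source
    object_thresh: float = 0.3,  # assumed threshold, not from the source
) -> Tuple[List[Detection], List[Detection]]:
    """Return (humans, objects), dropping low-confidence candidates."""
    humans: List[Detection] = []
    objects: List[Detection] = []
    for det in detections:
        if det.category == "person":
            if det.score >= human_thresh:
                humans.append(det)      # contributes (b_h, s_h)
        elif det.score >= object_thresh:
            objects.append(det)         # contributes (b_o, s_o) plus category
    return humans, objects


# Hypothetical detector output for one picture.
raw = [
    Detection((10, 20, 110, 300), 0.98, "person"),
    Detection((90, 150, 200, 260), 0.85, "bicycle"),
    Detection((300, 40, 340, 90), 0.10, "cup"),
]
humans, objects = split_detections(raw)
```

Here the low-confidence `"cup"` detection is discarded, leaving one human and one object candidate for the later stages.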

[0055] In this embodiment, constructing the human-object interaction recognition network in step 3 includes the following steps:

[0056] 1) Extract the convolutional features of the entire image:

[0057] Use the classic residual network ResNet-50 to extract convolutional features from the original input image, obtaining the global convolutional feature map $F$ of the entire image. $F$, the human body position $b_h$ and the object position $b_o$ from the target detection result together serve as the input of the human-object inte...
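Because a convolutional feature map such as $F$ is spatially smaller than the input image, box positions like $b_h$ and $b_o$ generally have to be rescaled before features can be read out at those locations. The sketch below illustrates only that coordinate mapping. The stride of 16 is an assumption (this excerpt does not state which ResNet-50 stage produces $F$), and `box_to_feature_coords` is a hypothetical helper, not a function named in the patent.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def box_to_feature_coords(
    box: Box, feat_h: int, feat_w: int, stride: int = 16  # stride is assumed
) -> Tuple[int, int, int, int]:
    """Map an image-space box to a half-open cell range on the feature map."""
    x1, y1, x2, y2 = box
    # Round outward so the cell range fully covers the box, then clamp.
    fx1 = min(max(0, math.floor(x1 / stride)), feat_w - 1)
    fy1 = min(max(0, math.floor(y1 / stride)), feat_h - 1)
    fx2 = min(feat_w, math.ceil(x2 / stride))
    fy2 = min(feat_h, math.ceil(y2 / stride))
    # Guarantee that at least one feature cell is selected.
    fx2 = max(fx2, fx1 + 1)
    fy2 = max(fy2, fy1 + 1)
    return fx1, fy1, fx2, fy2


# A 640x480 picture with stride 16 gives a feature map 40 cells wide, 30 high.
human_cells = box_to_feature_coords((10, 20, 110, 300), feat_h=30, feat_w=40)
object_cells = box_to_feature_coords((90, 150, 200, 260), feat_h=30, feat_w=40)
```

With these inputs, `human_cells` is `(0, 1, 7, 19)` and `object_cells` is `(5, 9, 13, 17)`; a real implementation would more likely use a sub-cell pooling operator rather than integer cropping.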

Embodiment 3

[0075] This embodiment is substantially the same as the above embodiments, with the following particular features:

[0076] In this embodiment, as shown in Figure 1, a method for human-object interaction detection in images based on multi-feature fusion comprises the following specific steps:

[0077] Step 1: Perform target detection on the picture to obtain all instance information, including human body positions and object positions and categories. Pair the detected people and objects into human-object instances and input them into the human-object interaction recognition network for interaction detection.
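One way to picture the pairing in Step 1 is as the Cartesian product of the detected humans and the detected objects, with each pair becoming one input to the recognition network. The sketch below assumes that exhaustive pairing (the excerpt does not say whether pairs are pruned) and uses hypothetical record names such as `HOPair`.

```python
from itertools import product
from typing import List, NamedTuple, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


class Human(NamedTuple):
    box: Box


class Obj(NamedTuple):
    box: Box
    category: str


class HOPair(NamedTuple):
    human_box: Box
    object_box: Box
    object_category: str


def build_pairs(humans: List[Human], objects: List[Obj]) -> List[HOPair]:
    """Pair every detected human with every detected object (assumed rule)."""
    return [
        HOPair(h.box, o.box, o.category)
        for h, o in product(humans, objects)
    ]


# Hypothetical detections: two people and two objects give four candidate pairs.
humans = [Human((10, 20, 110, 300)), Human((400, 30, 480, 310))]
objects = [Obj((90, 150, 200, 260), "bicycle"), Obj((300, 40, 340, 90), "cup")]
pairs = build_pairs(humans, objects)
```

Each `HOPair` carries the human position, the object position and the object category, which is the instance information the step lists.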

[0078] Step 2: Construct a human-object interaction recognition network that uses a multi-branch neural network structure to learn various features of the instances in the picture. The network includes a paired branch, an intersection branch and a short-term memory selection branch. Each branch extracts different feature information to detect the interaction between human-object pairs. With reference to Figure 2, the i...
