Three-dimensional target sensing method in vehicle-mounted edge scene

A three-dimensional target and scene technology, applied in the field of three-dimensional target perception, that addresses problems such as poor generalization, easily missed target information, and long processing time, and achieves reduced point cloud processing time and improved real-time performance.

Embodiment Construction

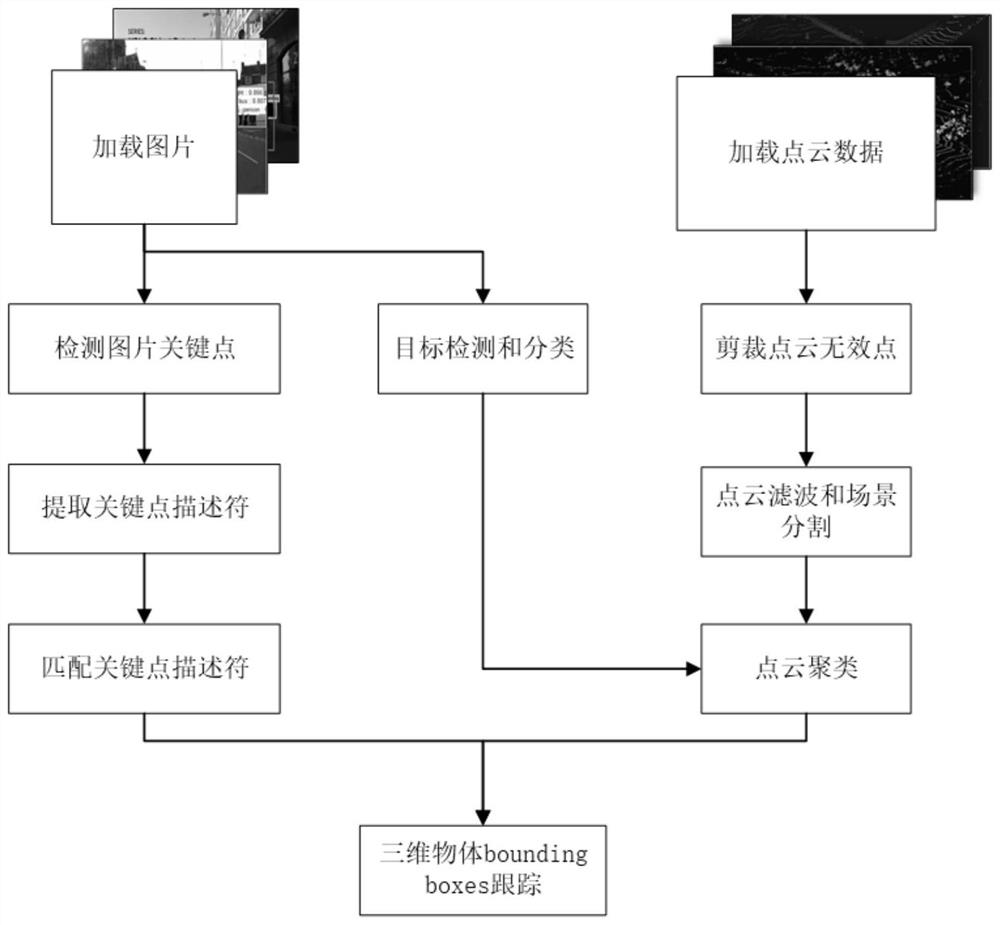

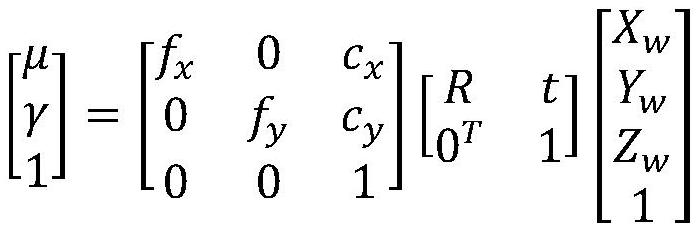

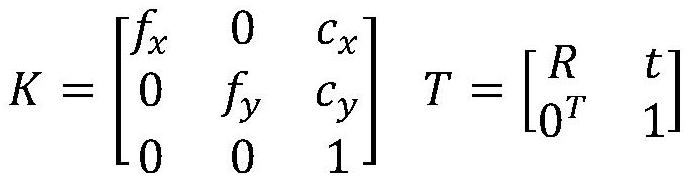

[0043] The present invention provides a three-dimensional target perception method for a vehicle-mounted edge scene, which performs three-dimensional target perception and tracking in a vehicle system by fusing point cloud projection with two-dimensional images. With its algorithms optimized through parallel computing, the method first filters and segments the point cloud data, then classifies the point cloud and extracts feature values, then projects the point cloud onto the two-dimensional image for clustering, and finally combines data from the preceding and following frames to match information points and link targets, achieving target matching and tracking. The method also addresses how to combine the lidar data with the image returned by the camera for target recognition, and how to deploy the result on a smaller terminal device. After the method of the present inven...
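The projection step described above can be illustrated with a minimal sketch. It is not the patented implementation: it assumes a simple pinhole camera model, assumes the lidar points have already been transformed into the camera coordinate frame, and uses hypothetical names (`project_points`, `points_in_box`, the intrinsics `fx`, `fy`, `cx`, `cy`) that do not appear in the source. Grouping projected points by a 2D box is only one possible way to relate points to an image region.

```python
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (u_min, v_min, u_max, v_max)


def project_points(
    points: List[Point3D],
    fx: float, fy: float, cx: float, cy: float,
    width: int, height: int,
) -> List[Optional[Pixel]]:
    """Project camera-frame 3D points onto the image plane (pinhole model).

    Returns one entry per input point: (u, v) pixel coordinates, or None
    when the point is behind the camera or falls outside the image.
    Assumption: points are already expressed in the camera frame.
    """
    pixels: List[Optional[Pixel]] = []
    for x, y, z in points:
        if z <= 0.0:  # at or behind the camera plane: cannot be projected
            pixels.append(None)
            continue
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            pixels.append((u, v))
        else:
            pixels.append(None)
    return pixels


def points_in_box(pixels: List[Optional[Pixel]], box: Box) -> List[int]:
    """Return indices of projected points that fall inside a 2D box."""
    u_min, v_min, u_max, v_max = box
    return [
        i for i, p in enumerate(pixels)
        if p is not None and u_min <= p[0] <= u_max and v_min <= p[1] <= v_max
    ]


# Hypothetical example values, chosen only for illustration.
example_points = [(0.0, 0.0, 10.0), (1.0, 0.0, 10.0), (0.0, 0.0, -5.0), (100.0, 0.0, 1.0)]
example_pixels = project_points(example_points, 100.0, 100.0, 320.0, 240.0, 640, 480)
example_hits = points_in_box(example_pixels, (300.0, 200.0, 325.0, 260.0))
```

In this sketch, points behind the camera or outside the image bounds are kept as `None` so that indices stay aligned with the original point cloud, which makes it straightforward to map image-space groupings back to 3D points.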
