Video behavior recognition method based on weighted fusion of multiple image tasks

A weighted-fusion recognition method, applied in fields such as character and pattern recognition, instruments, and computer components. It addresses the problem of time-consuming and labor-intensive work and achieves the effect of expanding and reducing the distance.

Embodiment

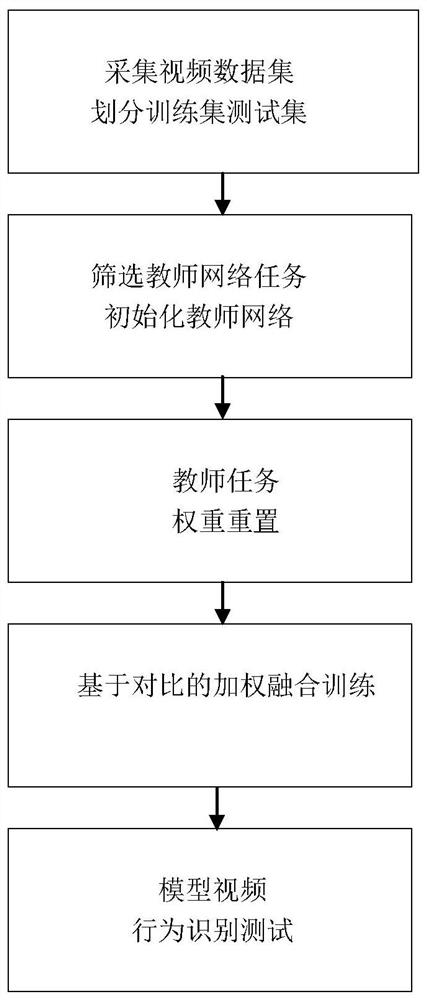

[0052] Step 1. Collect a video dataset of human activity, segment the videos according to the category of human behavior they show, and assign category labels. Perform frame extraction and normalization on the video data, then divide it into a training set and a test set. The specific method is as follows:

[0053] Step 1.1. Video data is collected either by building a dataset in-house or by using an existing public dataset. First, download the relevant dataset files from the official website. The specific dataset is HMDB51, a video behavior recognition dataset with 51 action labels. It contains 6849 videos in total, and each action contains at least 51 videos. The actions mainly include: facial actions such as smiling, chewing, and talking; facial interactions with objects such as smoking, eating, and drinking; body actions such as clapping, climbing, jumping, and running; actions that interact with objects such as combing hair, dribbling, and playing golf; and interactions b...
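The preprocessing outlined in Step 1 (frame extraction, normalization, and a training/test split) might be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the helper names `sample_frame_indices`, `normalize_frame`, and `split_by_label` are hypothetical, and the uniform sampling strategy, the scaling of 8-bit pixels to [0, 1], and the default split fraction are all assumed, since the text shown here does not specify them.

```python
import random
from collections import defaultdict


def sample_frame_indices(num_frames, num_samples):
    """Pick frame indices spread evenly across a video (assumed strategy)."""
    if num_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= num_frames:
        return list(range(num_frames))
    step = num_frames / num_samples
    # Take the middle frame of each of the num_samples equal-length segments.
    return [int(step * i + step / 2) for i in range(num_samples)]


def normalize_frame(frame):
    """Scale 8-bit pixel values to the range [0, 1] (assumed normalization)."""
    return [[pixel / 255.0 for pixel in row] for row in frame]


def split_by_label(samples, train_fraction=0.7, seed=0):
    """Split (video_id, label) pairs into training and test sets per label."""
    by_label = defaultdict(list)
    for video_id, label in samples:
        by_label[label].append(video_id)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label, ids in sorted(by_label.items()):
        rng.shuffle(ids)
        cut = int(len(ids) * train_fraction)
        train += [(v, label) for v in ids[:cut]]
        test += [(v, label) for v in ids[cut:]]
    return train, test
```

Splitting within each label keeps every behavior category represented in both sets, which matters when some categories have far fewer videos than others. Reading the frames themselves from video files would need a decoding library and is left out of this sketch.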
