Humanoid target segmentation method based on convolutional neural network

This convolutional-neural-network-based humanoid target segmentation method, applied in the field of computer vision recognition, addresses the problems of inaccurate humanoid target segmentation and slow humanoid target recognition. It avoids reductions in detection efficiency and accuracy, reduces the time spent, and improves segmentation speed.

Embodiment Construction

[0028] In order to make the object, technical solution and technical effect of the present invention clearer, the present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

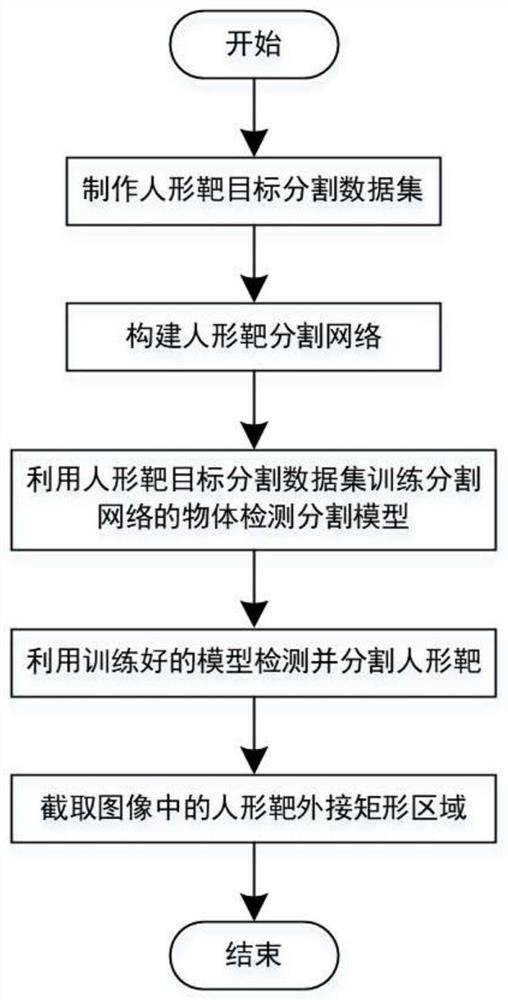

[0029] As shown in Figures 1-2, in this embodiment of the present invention, the humanoid target segmentation method based on the convolutional neural network is implemented as follows:

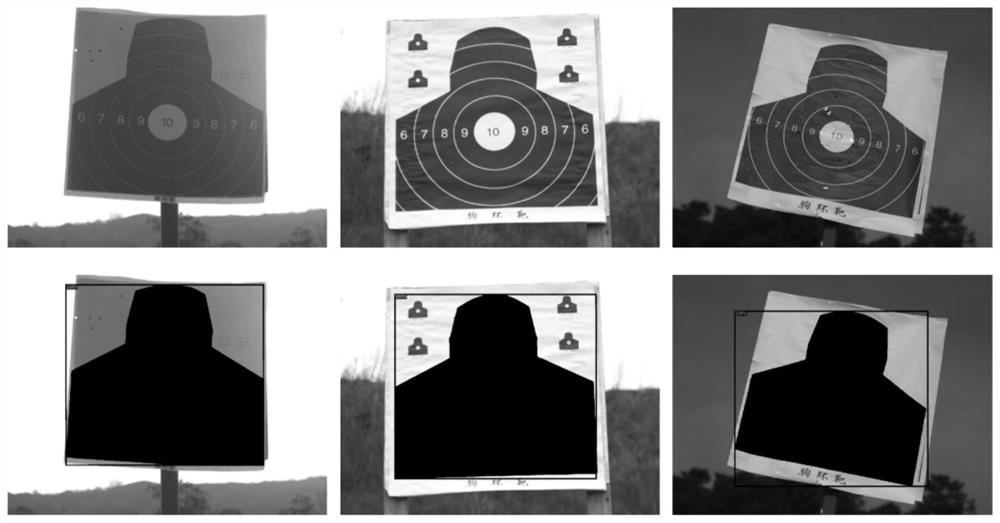

[0030] Step 1: Construct a humanoid target segmentation data set. Collect target surface images in the actual shooting environment as the original input data, manually label the original input data, and divide it into a training set, a validation set and a test set;
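
The data set division in Step 1 can be sketched as follows. This is a minimal illustration only: the text does not state the split ratios, so the 80/10/10 fractions, the fixed seed, the file names, and the helper name `split_dataset` are all assumptions made for this example.

```python
import random


def split_dataset(items, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle labelled samples and split them into train/val/test lists.

    The fractions and the seed are hypothetical defaults; the source text
    does not specify how the data set is divided.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for the test set")

    shuffled = list(items)                 # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for a reproducible split

    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains goes to the test set
    return train, val, test


# Hypothetical (image, manual label mask) pairs standing in for the collected data.
samples = [(f"img_{i:04d}.png", f"mask_{i:04d}.png") for i in range(100)]
train, val, test = split_dataset(samples)
```

Keeping each image paired with its manually labelled mask before shuffling ensures that an image and its annotation always land in the same subset.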

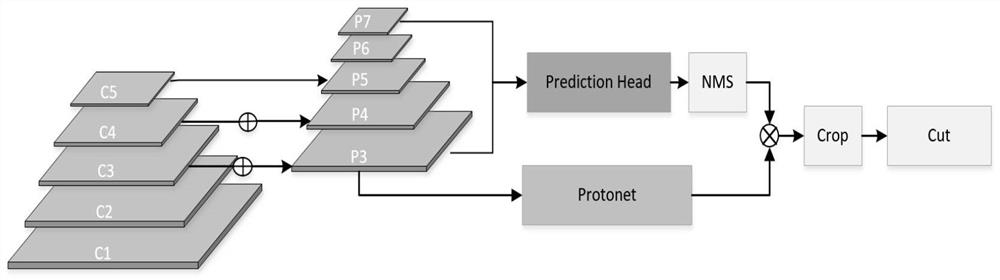

[0031] Step 2: Input the image into a deep convolutional network with ResNet101 as the backbone, and extract the image feature layers C1, C2, C3, C4 and C5 in sequence, where C1 is the feature layer obtained by ResNet through the conv1 convolution module, and C2 is the feature layer obtained by ResNet ...
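
To give a feel for the scale of the five feature layers named in Step 2, the sketch below computes their shapes for a given input size. The text above is truncated, so this is not the patent's definition: it assumes the commonly used ResNet-101 layout (a 7x7 stride-2 conv1, a 3x3 stride-2 max pool, then four residual stages with 256, 512, 1024 and 2048 output channels), and it assumes C1 is taken directly after conv1, before the max pool. The function names and the 512x512 input size are hypothetical.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution or pooling layer along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def resnet101_feature_shapes(height, width):
    """Return (channels, height, width) for C1..C5 under the assumed layout."""
    shapes = {}

    # conv1: 7x7 kernel, stride 2, padding 3, 64 output channels (assumed C1).
    h, w = conv_out(height, 7, 2, 3), conv_out(width, 7, 2, 3)
    shapes["C1"] = (64, h, w)

    # 3x3 max pool, stride 2, padding 1; the first residual stage keeps this size.
    h, w = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)
    shapes["C2"] = (256, h, w)

    # Each later stage halves the resolution once and widens the channels.
    for name, channels in (("C3", 512), ("C4", 1024), ("C5", 2048)):
        h, w = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)
        shapes[name] = (channels, h, w)

    return shapes


shapes = resnet101_feature_shapes(512, 512)
for name, shape in shapes.items():
    print(name, shape)
```

Under these assumptions each successive layer halves the spatial resolution, so C5 is 1/32 of the input size while carrying the most channels.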
