Distributed caching method for massive small files oriented to AI (Artificial Intelligence) training

A technology for the distributed caching of massive numbers of small files, applied in the fields of file systems, file access structures, storage systems, etc. It addresses problems such as degraded data access rates, long waiting times, and cache misses, and thereby improves the data access rate, increases the cache hit rate, and solves the random access problem.

Embodiment Construction

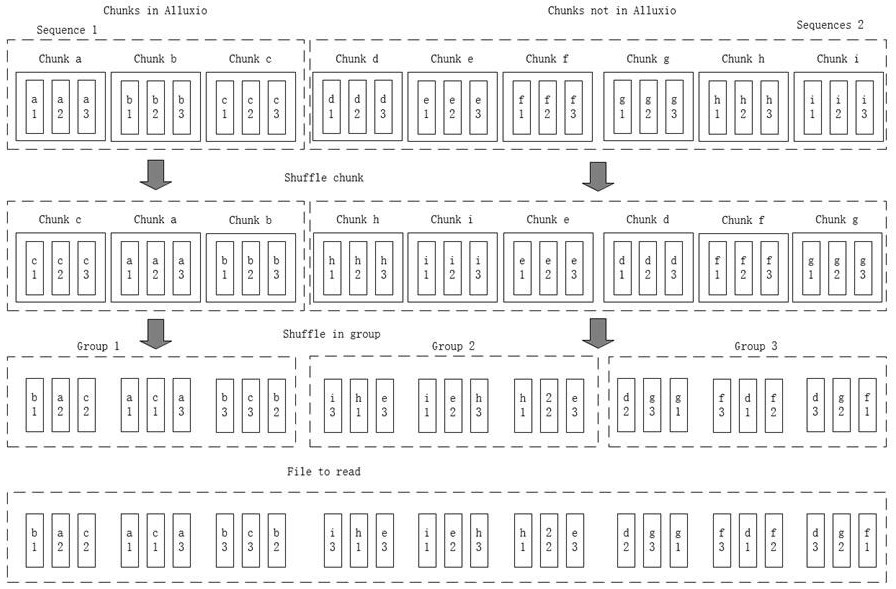

[0018] Specific embodiments of the present invention are described in further detail below with reference to the accompanying drawings.

[0019] The invention includes the following steps:

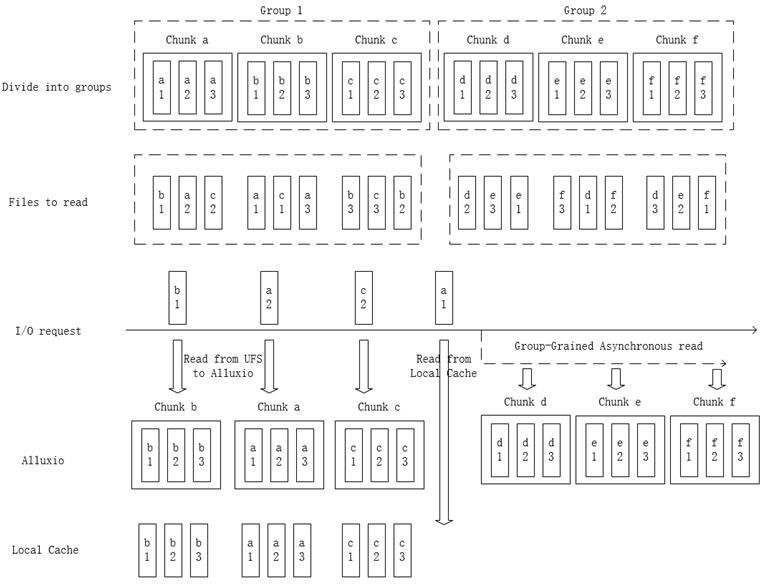

[0020] Step 1: Create the Local Cache and the Alluxio cache.

[0021] Create a key-value store as the Local Cache on the client, and create the Alluxio cache on the distributed storage devices.

[0022] Alluxio Cache: Alluxio supports block-level caching and mainly stores the merged data chunks. Whenever an application accesses a chunk that is not in the Alluxio cache, the chunk is fetched from the underlying storage and stored in the Alluxio cache for subsequent accesses.
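
The read-through behaviour described for the Alluxio tier can be sketched as follows. This is a minimal illustration under stated assumptions, not the Alluxio client API: the class name `AlluxioChunkCache` and the `load_from_underlying` callback are hypothetical, chunks are held in an in-memory dict, and the capacity limits and eviction that a real Alluxio deployment manages are left out.

```python
from typing import Callable, Dict


class AlluxioChunkCache:
    """Hypothetical stand-in for the Alluxio chunk tier (not the Alluxio API).

    Chunks are kept in an in-memory dict; capacity limits and eviction
    are deliberately omitted from this sketch.
    """

    def __init__(self, load_from_underlying: Callable[[str], bytes]) -> None:
        # Callback that reads a whole chunk from the underlying storage.
        self._load = load_from_underlying
        self._chunks: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get_chunk(self, chunk_id: str) -> bytes:
        if chunk_id in self._chunks:
            # Hit: serve the chunk directly from the cache.
            self.hits += 1
            return self._chunks[chunk_id]
        # Miss: fetch the chunk from the underlying storage and keep it
        # so that subsequent accesses are served from the cache.
        self.misses += 1
        data = self._load(chunk_id)
        self._chunks[chunk_id] = data
        return data
```

Passing the underlying-storage read as a callback keeps the sketch independent of any particular storage backend; a dict lookup such as `storage.__getitem__` is enough to exercise it.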

[0023] Local Cache: the Local Cache is a key-value store on the client that mainly stores all the small files parsed from chunks. Whenever a chunk is fetched from the Alluxio cache, the small files in that chunk are parsed and stored in the Local Cache.
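
A minimal sketch of the Local Cache tier follows. It assumes a hypothetical length-prefixed chunk layout, because the excerpt does not specify how small files are laid out inside a chunk. `pack_chunk`, `parse_chunk`, `LocalCache`, and the `fetch_chunk` callback are illustrative names, and the caller is assumed to already know which chunk holds each file. On a miss, every small file in the fetched chunk is parsed into the key-value store, which lets later reads of files from the same chunk be served locally.

```python
import struct
from typing import Callable, Dict, Iterable, Tuple


def pack_chunk(files: Iterable[Tuple[str, bytes]]) -> bytes:
    """Pack (name, data) pairs into one chunk using a hypothetical layout:
    [4-byte name length][name][4-byte data length][data], repeated."""
    out = bytearray()
    for name, data in files:
        encoded = name.encode("utf-8")
        out += struct.pack(">I", len(encoded)) + encoded
        out += struct.pack(">I", len(data)) + data
    return bytes(out)


def parse_chunk(chunk: bytes) -> Dict[str, bytes]:
    """Split a chunk produced by pack_chunk back into its small files."""
    files: Dict[str, bytes] = {}
    offset = 0
    while offset < len(chunk):
        (name_len,) = struct.unpack_from(">I", chunk, offset)
        offset += 4
        name = chunk[offset:offset + name_len].decode("utf-8")
        offset += name_len
        (data_len,) = struct.unpack_from(">I", chunk, offset)
        offset += 4
        files[name] = chunk[offset:offset + data_len]
        offset += data_len
    return files


class LocalCache:
    """Client-side key-value store of small files (file name -> bytes)."""

    def __init__(self, fetch_chunk: Callable[[str], bytes]) -> None:
        # fetch_chunk reads a whole chunk from the chunk tier (e.g. Alluxio).
        self._fetch_chunk = fetch_chunk
        self._store: Dict[str, bytes] = {}

    def read(self, name: str, chunk_id: str) -> bytes:
        if name not in self._store:
            # Miss: fetch the chunk and parse all of its small files into
            # the store, so reads of its neighbours later hit locally.
            self._store.update(parse_chunk(self._fetch_chunk(chunk_id)))
        return self._store[name]
```

Because the whole chunk is parsed on the first miss, reading `b.jpg` right after `a.jpg` from the same chunk does not trigger a second chunk fetch.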

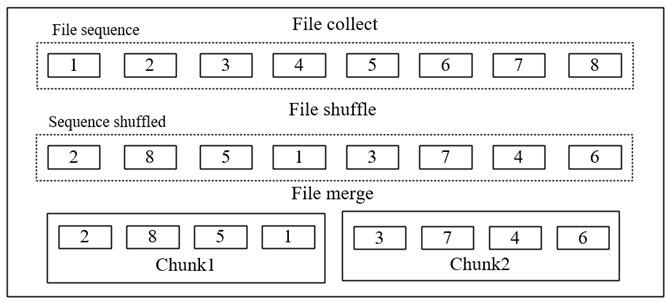

[0024] Step 2: In the AI training data storage stage, a chunk merge operation is performed on the data set based on the Batch Size fitting c...
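
The paragraph above is cut off before it states how the Batch Size determines the chunk size, so the sketch below does not reproduce that rule. It only shows the general shape of a merge step under a stated assumption: small files are grouped in dataset order into chunks of at most `files_per_chunk` files, where `files_per_chunk` is a hypothetical parameter, and an index from file name to chunk id is built so that a client knows which chunk to fetch. Both function names are illustrative.

```python
from typing import Dict, List, Sequence, Tuple


def merge_into_chunks(
    file_names: Sequence[str], files_per_chunk: int
) -> List[Tuple[str, List[str]]]:
    """Group small files, in dataset order, into chunks of at most
    files_per_chunk files. files_per_chunk is a hypothetical parameter;
    the Batch Size-based sizing rule is not given in this excerpt."""
    if files_per_chunk <= 0:
        raise ValueError("files_per_chunk must be positive")
    chunks: List[Tuple[str, List[str]]] = []
    for start in range(0, len(file_names), files_per_chunk):
        chunk_id = f"chunk-{start // files_per_chunk}"
        chunks.append((chunk_id, list(file_names[start:start + files_per_chunk])))
    return chunks


def build_index(chunks: List[Tuple[str, List[str]]]) -> Dict[str, str]:
    """Map each small file name to the id of the chunk that contains it."""
    return {name: chunk_id for chunk_id, names in chunks for name in names}
```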

© 2025 PatSnap. All rights reserved.