Student experiment classroom behavior identification method based on top vision

A technology for student experiments, applied in biometrics, character and pattern recognition, computer components, etc. It addresses problems such as low pertinence, a small number of cameras, and incompleteness, and achieves the effects of avoiding the collection of students' facial information, improving privacy security, and reducing positioning deviation.

Embodiment Construction

[0021] Embodiments of the present invention will be further described in detail below in conjunction with the accompanying drawings.

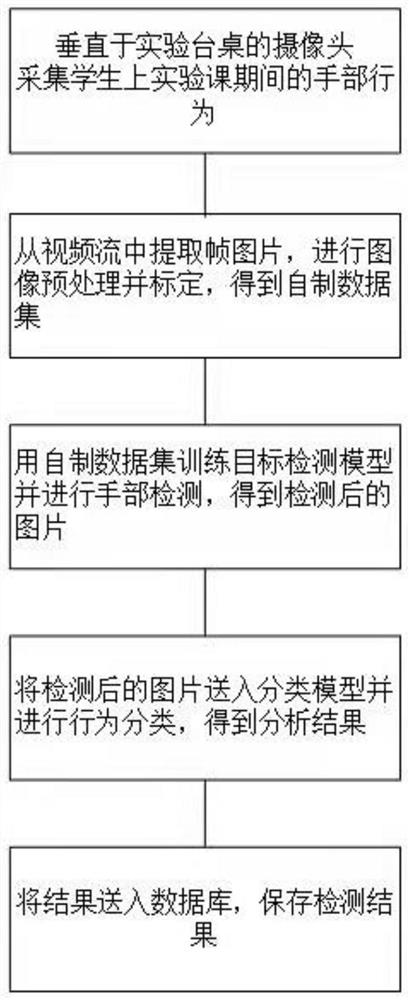

[0022] Referring to Figure 1, the implementation steps of the present invention are as follows:

[0023] Step 1: install cameras in the laboratory.

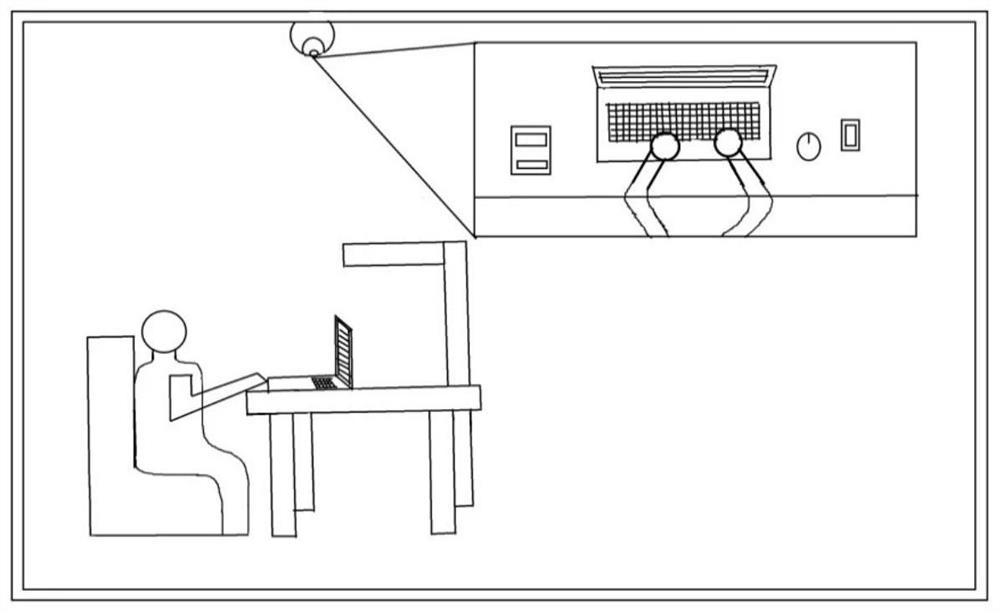

[0024] Referring to Figure 2, install a camera vertically above the tabletop of each experimental table, and adjust the shooting angle so that it is aimed at the area of the tabletop where the students' hands move.

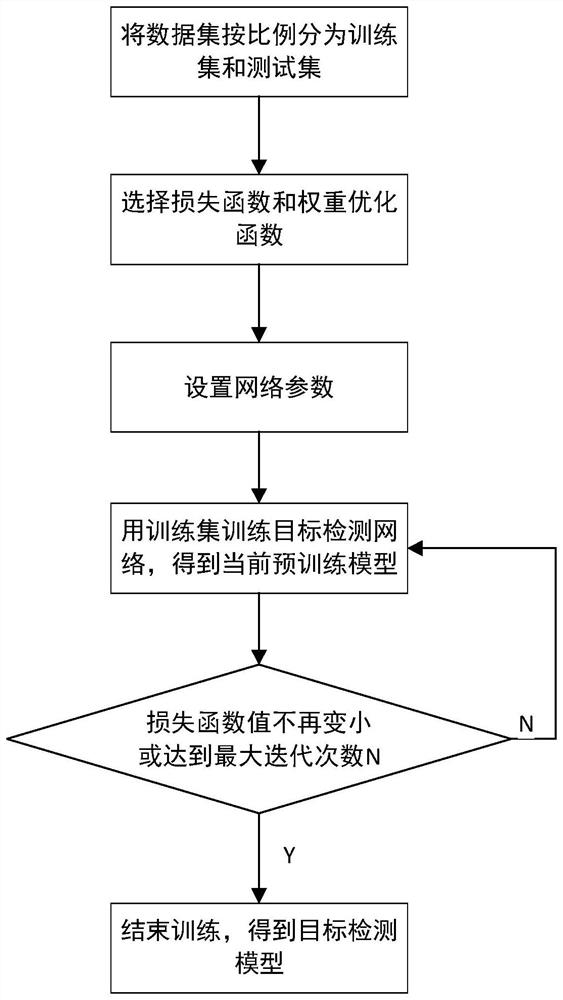

[0025] Step 2: build a dataset.

[0026] 2.1) Use all cameras simultaneously to record video of the students' hand behavior during the experiment, and extract frames from the video streams to obtain the original pictures;

[0027] 2.2) Enhance each original picture using any of horizontal flipping, vertical flipping, proportional scaling, cropping, expansion, and rotation to increase the number of pictures, and perform calibration to obtain a data ...
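As a rough illustration of the frame extraction in 2.1, the sketch below chooses which frame indices to keep from a recording. The function name `sample_frame_indices` and the idea of a fixed sampling interval are assumptions made for illustration; the source does not state how often frames are extracted.

```python
from typing import List


def sample_frame_indices(total_frames: int, fps: float, every_seconds: float) -> List[int]:
    """Return the indices of frames to keep, one every `every_seconds` seconds.

    Hypothetical helper: the sampling interval is an assumption, not a value
    taken from the patent text.
    """
    if total_frames < 0 or fps <= 0 or every_seconds <= 0:
        raise ValueError("total_frames must be >= 0; fps and every_seconds must be > 0")
    # Convert the time interval to a whole number of frames, never below 1.
    step = max(1, round(fps * every_seconds))
    return list(range(0, total_frames, step))


if __name__ == "__main__":
    # A 4-second clip at 25 frames per second, sampled once per second.
    print(sample_frame_indices(100, 25, 1.0))
```

If all cameras start together and record at the same frame rate, reusing one index list for every recording keeps the extracted pictures aligned in time across experimental tables.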
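The enhancement operations listed in 2.2 can be sketched on a tiny image stored as nested lists of pixel values. This is a minimal illustration, not the patent's implementation: the function names are hypothetical, "expansion" is assumed here to mean padding the border, scaling uses integer nearest-neighbour repetition, and rotation is limited to 90 degrees. A real pipeline would normally use an image library instead.

```python
from typing import Dict, List

Image = List[List[int]]  # rows of pixel values


def hflip(img: Image) -> Image:
    """Mirror left to right."""
    return [row[::-1] for row in img]


def vflip(img: Image) -> Image:
    """Mirror top to bottom."""
    return [row[:] for row in img[::-1]]


def rotate90(img: Image) -> Image:
    """Rotate 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def scale(img: Image, k: int) -> Image:
    """Proportional scaling by integer factor k (nearest neighbour)."""
    return [[p for p in row for _ in range(k)] for row in img for _ in range(k)]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Keep the height x width window whose corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def pad(img: Image, border: int, fill: int = 0) -> Image:
    """Assumed meaning of 'expansion': add a border of `fill` pixels."""
    width = len(img[0]) + 2 * border
    edge = [[fill] * width for _ in range(border)]
    body = [[fill] * border + row + [fill] * border for row in img]
    return edge + body + [[fill] * width for _ in range(border)]


def augment(img: Image) -> Dict[str, Image]:
    """Produce one variant per operation so the picture count grows."""
    h, w = len(img), len(img[0])
    return {
        "hflip": hflip(img),
        "vflip": vflip(img),
        "rotate90": rotate90(img),
        "scale2x": scale(img, 2),
        "crop": crop(img, 0, 0, max(1, h // 2), max(1, w // 2)),
        "pad1": pad(img, 1),
    }


if __name__ == "__main__":
    for name, variant in augment([[1, 2], [3, 4]]).items():
        print(name, variant)
```

When the pictures carry position annotations such as boxes around hands, the same geometric transform has to be applied to those annotations so they still match the enhanced picture.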
