Video motion amplification method based on improved self-encoding network

Applied in the field of video motion amplification, this method combines an improved autoencoder network with motion amplification technology. It addresses image texture and color loss, artifacts, contour deformation, and checkerboard effects, with the stated effects of maintaining effectiveness, improving performance, and low demand.

Embodiment Construction

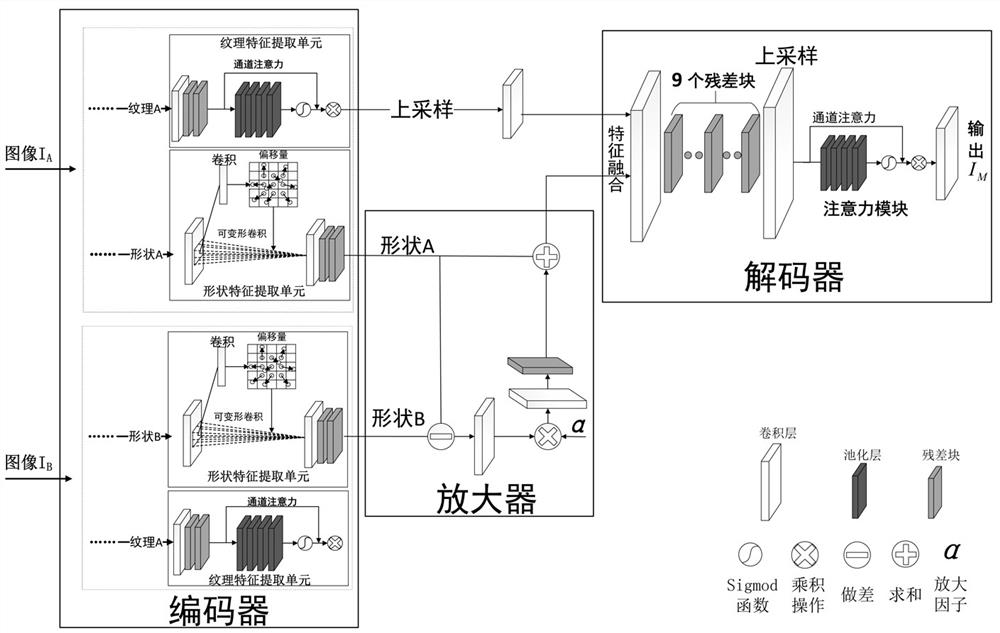

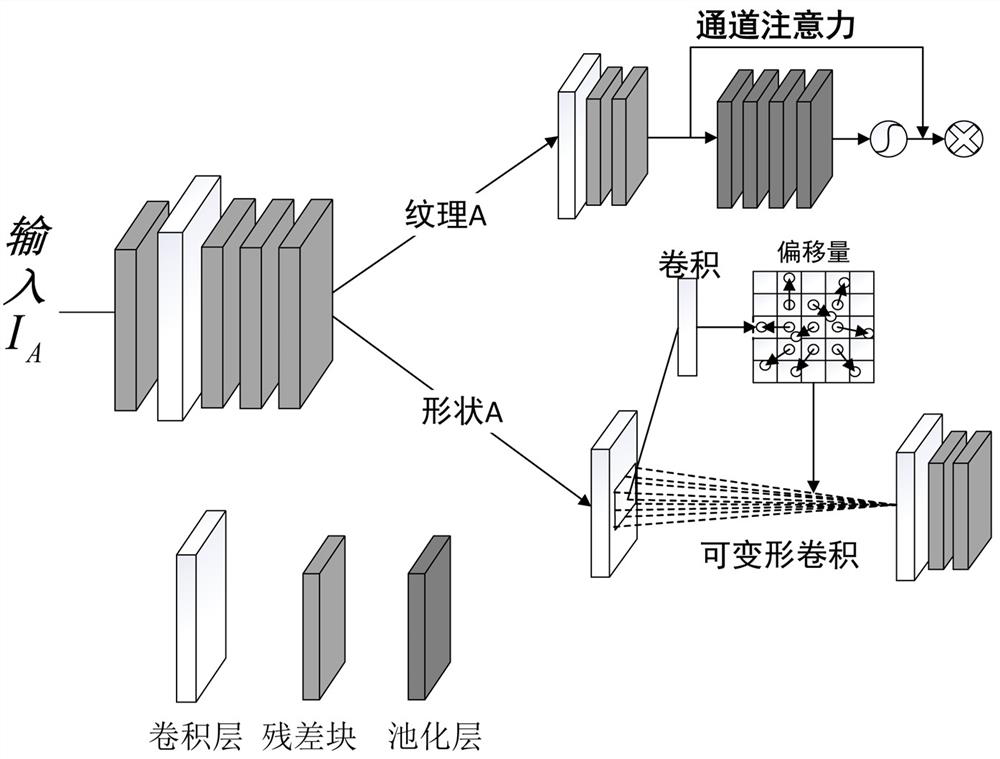

[0036] As shown in Figure 1, the improved autoencoder network of this embodiment comprises an encoder, an amplifier, and a decoder. The encoder comprises a texture feature extraction unit and a shape feature extraction unit. The texture feature extraction unit adopts a channel attention mechanism, and the shape feature extraction unit comprises a convolutional layer, a deformable convolution, and a residual block, as shown in Figure 2.
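The encoder and amplifier described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the channel attention is assumed to follow the common squeeze-and-excitation form (global average pooling, a bottleneck of two fully connected layers, and a sigmoid gate), and the amplifier is replaced by a simple linear rule that scales the shape-feature difference between frames A and B by a factor `alpha`. The function names, the reduction ratio `r`, and the random weights are all hypothetical.

```python
import numpy as np

def channel_attention(x, w1, w2):
    """Squeeze-and-excitation style channel attention (assumed form).

    x:  feature map of shape (C, H, W)
    w1: (C // r, C) and w2: (C, C // r) are hypothetical FC weights (no biases).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = x.mean(axis=(1, 2))                    # (C,)
    # Excitation: bottleneck FC -> ReLU -> FC -> sigmoid.
    s = np.maximum(w1 @ z, 0.0)                # (C // r,)
    a = 1.0 / (1.0 + np.exp(-(w2 @ s)))        # (C,), each weight in (0, 1)
    # Rescale every channel by its attention weight.
    return x * a[:, None, None]

def amplify_shape(shape_a, shape_b, alpha):
    """Linear stand-in for the amplifier (assumption): scale the shape-feature
    difference between frames A and B by alpha and add it back to frame A."""
    return shape_a + alpha * (shape_b - shape_a)

rng = np.random.default_rng(0)
C, r = 8, 4
texture = channel_attention(rng.standard_normal((C, 16, 16)),
                            rng.standard_normal((C // r, C)),
                            rng.standard_normal((C, C // r)))
s_a = rng.standard_normal((C, 16, 16))
s_b = s_a + 0.01                     # tiny simulated motion between frames
s_mag = amplify_shape(s_a, s_b, alpha=10.0)
print(texture.shape, np.allclose(s_mag - s_a, 0.1))
```

Separating texture from shape is what lets an amplifier of this kind act only on the motion-bearing features; a learned amplifier would typically insert convolutions around the scaled difference rather than use the purely linear rule shown here.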

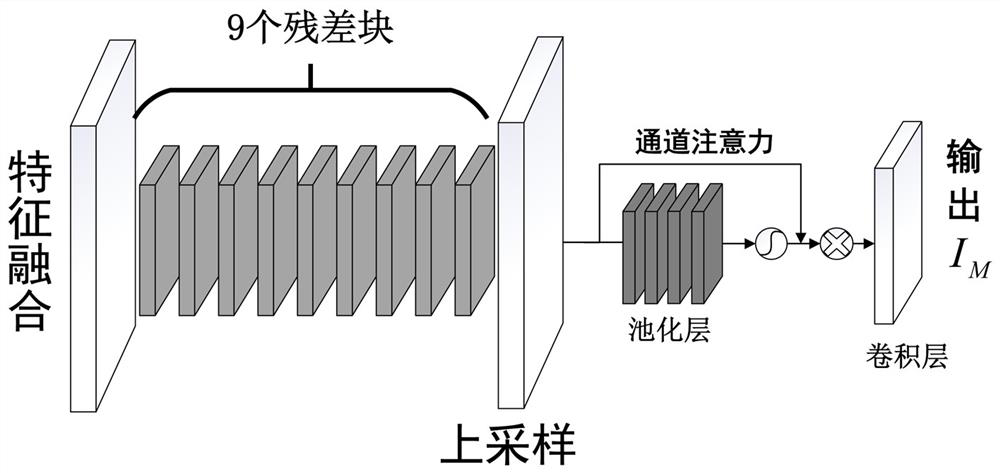

[0037] As shown in Figure 3, the decoder of the improved autoencoder network consists of the following components connected in sequence: a feature fusion layer, 9 residual blocks, an upsampling layer, a channel attention unit, and a convolutional layer.
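The decoder's layer order can be traced with a short NumPy sketch. Several details are assumptions not stated in the text: fusion is done by channel concatenation followed by a convolution, every convolution is reduced to a 1x1 channel-mixing operation, the upsampling factor is 2, and the upsampling is nearest-neighbour resizing. Resize-then-convolve upsampling is a common way to avoid the checkerboard artifacts associated with transposed convolution, but the source does not say which upsampling the patent uses. All weights are random placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, w):
    """1x1 convolution: mixes channels at every pixel. x: (C_in, H, W), w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """x + conv(relu(conv(x))); 1x1 convs stand in for the real kernels."""
    return x + conv1x1(relu(conv1x1(x, w1)), w2)

def upsample_nearest(x, factor=2):
    """Nearest-neighbour upsampling by repeating rows and columns."""
    return x.repeat(factor, axis=1).repeat(factor, axis=2)

def channel_attention(x, w1, w2):
    """Squeeze-and-excitation style gate (assumed form)."""
    z = x.mean(axis=(1, 2))
    a = 1.0 / (1.0 + np.exp(-(w2 @ relu(w1 @ z))))
    return x * a[:, None, None]

def decode(texture, shape, C=16, n_res=9):
    # 1. Feature fusion: concatenate texture and (amplified) shape features, then mix.
    fused = np.concatenate([texture, shape], axis=0)
    x = conv1x1(fused, rng.standard_normal((C, fused.shape[0])) * 0.1)
    # 2. Nine residual blocks applied in sequence.
    for _ in range(n_res):
        x = residual_block(x, rng.standard_normal((C, C)) * 0.1,
                           rng.standard_normal((C, C)) * 0.1)
    # 3. Upsampling back toward the input resolution.
    x = upsample_nearest(x, 2)
    # 4. Channel attention on the upsampled features.
    x = channel_attention(x, rng.standard_normal((C // 4, C)) * 0.1,
                          rng.standard_normal((C, C // 4)) * 0.1)
    # 5. Final convolution to a 3-channel image.
    return conv1x1(x, rng.standard_normal((3, C)) * 0.1)

out = decode(rng.standard_normal((8, 32, 32)), rng.standard_normal((8, 32, 32)))
print(out.shape)  # (3, 64, 64)
```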

[0038] The video motion amplification method based on the improved autoencoder network includes the following steps:

[0039] Step 1: Decompose the video data into frames and use two decomposed consecutive frames $I_A$ and $I_B$ as the input to the encoder, where $I_A$ denotes the first frame image of two consecutive fram...
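Step 1 can be illustrated by splitting a decoded clip into consecutive pairs $(I_A, I_B)$. This is a sketch under assumptions: the video is taken to be already decoded into an array of shape `(T, H, W, C)` (in practice a library such as OpenCV's `cv2.VideoCapture` could supply the frames), and every overlapping pair is used, which the truncated text does not confirm. The helper name `consecutive_pairs` is hypothetical.

```python
import numpy as np

def consecutive_pairs(frames):
    """Yield (I_A, I_B) for every pair of consecutive frames.

    frames: array of shape (T, H, W, C) holding the decomposed video.
    I_A is the earlier frame of each pair, I_B the frame that follows it.
    """
    for t in range(len(frames) - 1):
        yield frames[t], frames[t + 1]

# Hypothetical 5-frame clip: every pixel of frame t holds the value t.
video = np.stack([np.full((4, 4, 3), t, dtype=np.float32) for t in range(5)])
pairs = list(consecutive_pairs(video))
print(len(pairs))  # 4
```

A clip of T frames yields T - 1 overlapping pairs, so each interior frame serves once as $I_B$ and once as $I_A$.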
