Adaptive scheduling with dynamic partition load balancing for fast partition compilation

A load-balancing and partitioning technique, applied in code compilation, program code conversion, instrumentation, and related areas, that addresses problems such as stalled compilation processing and inefficient use of computing resources.

Embodiment Construction

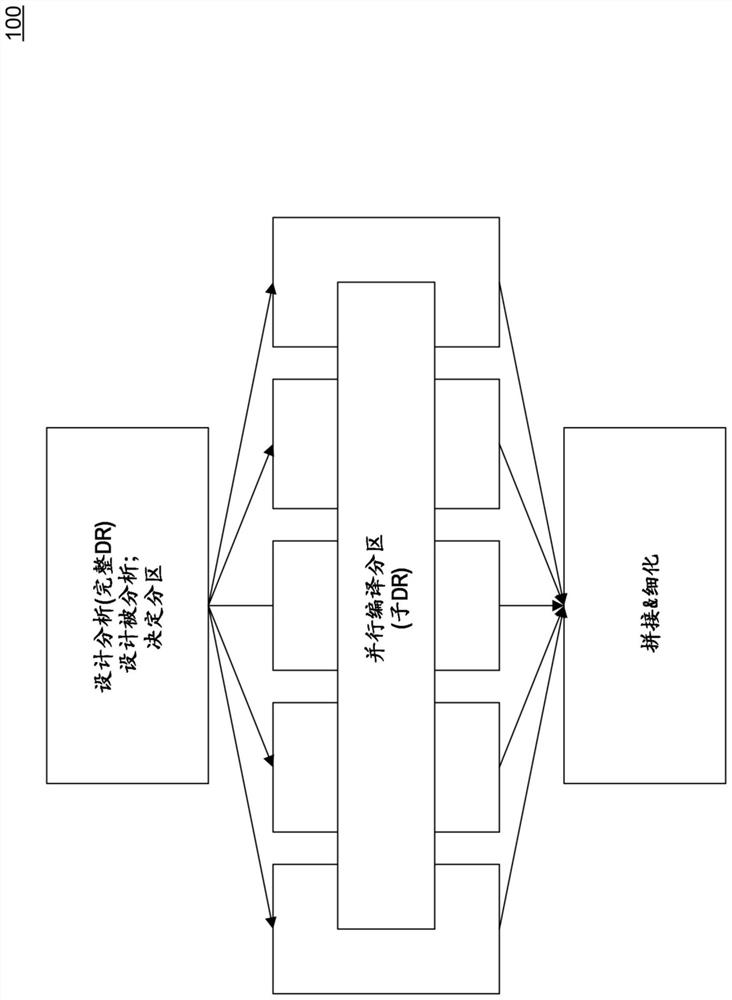

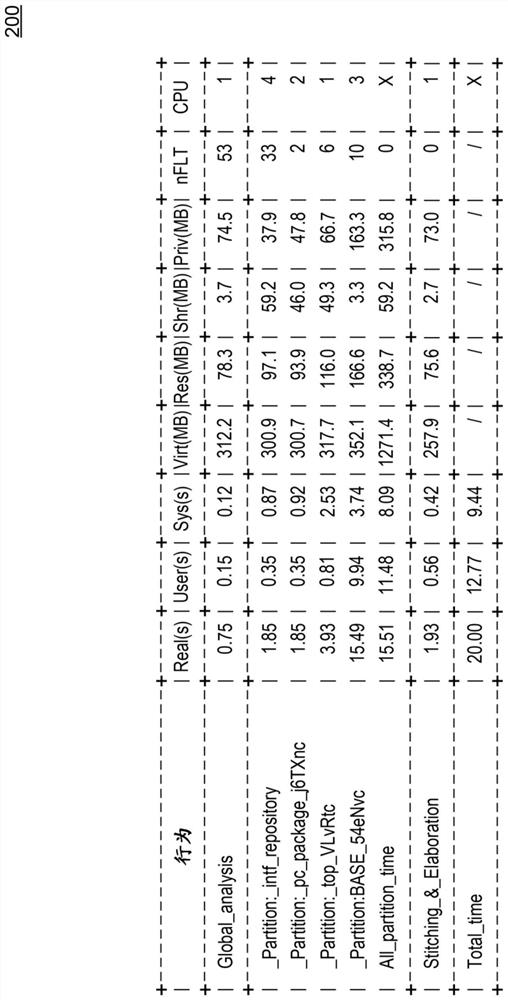

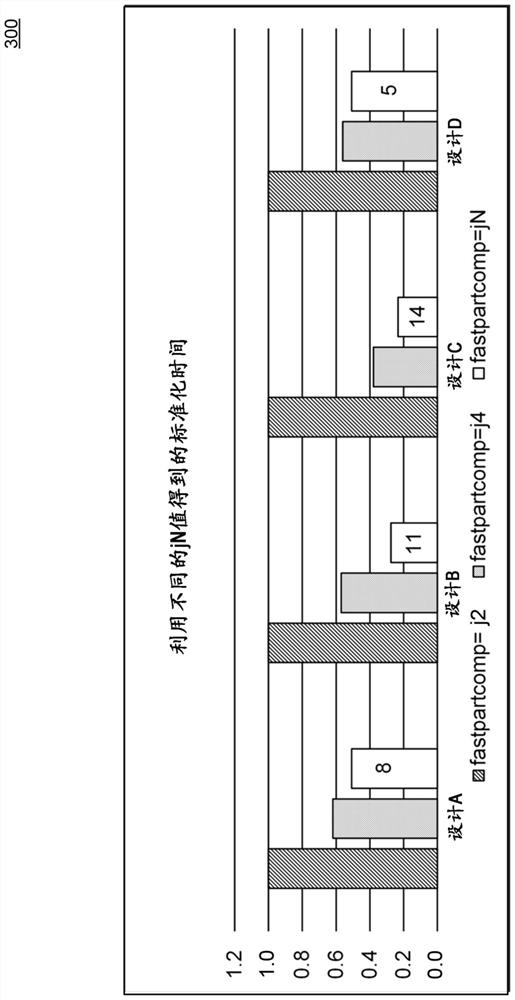

[0025] Aspects of this disclosure relate to adaptive scheduling with fast partition compilation. A further aspect of the disclosure relates to dynamic partition load balancing for fast partition compilation.

[0026] As mentioned above, a hardware design specification or description can be divided into multiple parts, which can be compiled separately. Portions of a hardware design specification or description may be referred to as partitions. Using multiple partitions allows EDA tools to manage complex and parallelized workloads. With traditional EDA tools, however, the resulting resource usage and overall performance can be problematic for designs that are relatively large or particularly complex. Additionally, under time constraints and/or resource constraints, compilations of large or complex designs may not complete in time, delaying schedules and project delivery.
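To make the idea of balancing separately compiled partitions concrete, the following is a minimal sketch and not the method claimed in this disclosure. It assumes each partition has a known estimated compile cost and uses a generic greedy heuristic (heaviest partition first, always onto the least-loaded worker). The function name `balance_partitions`, the partition names, and the cost values are all hypothetical.

```python
import heapq


def balance_partitions(costs, num_workers):
    """Greedy assignment of partitions to workers (illustrative only).

    costs: dict mapping a hypothetical partition name to an estimated
           compile cost (arbitrary units).
    Returns (assignment, loads), both keyed by worker index.
    """
    # Min-heap of (current load, worker index): the least-loaded worker is on top.
    heap = [(0, w) for w in range(num_workers)]
    heapq.heapify(heap)
    assignment = {w: [] for w in range(num_workers)}

    # Place the most expensive partitions first; ties are broken by name
    # so the result is deterministic.
    for name, cost in sorted(costs.items(), key=lambda kv: (-kv[1], kv[0])):
        load, worker = heapq.heappop(heap)
        assignment[worker].append(name)
        heapq.heappush(heap, (load + cost, worker))

    loads = {w: sum(costs[p] for p in parts) for w, parts in assignment.items()}
    return assignment, loads


# Hypothetical partition costs, for illustration only.
example_costs = {"p0": 8, "p1": 7, "p2": 6, "p3": 5, "p4": 4}
assignment, loads = balance_partitions(example_costs, num_workers=2)
print(assignment, loads)
```

This heuristic is static: it runs once before compilation starts. A dynamic scheme would also revise the assignment as actual compile times are observed, which this sketch does not attempt.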

[0027] Various aspe...
