495 results for patented technology related to "Load balancing (computing)"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

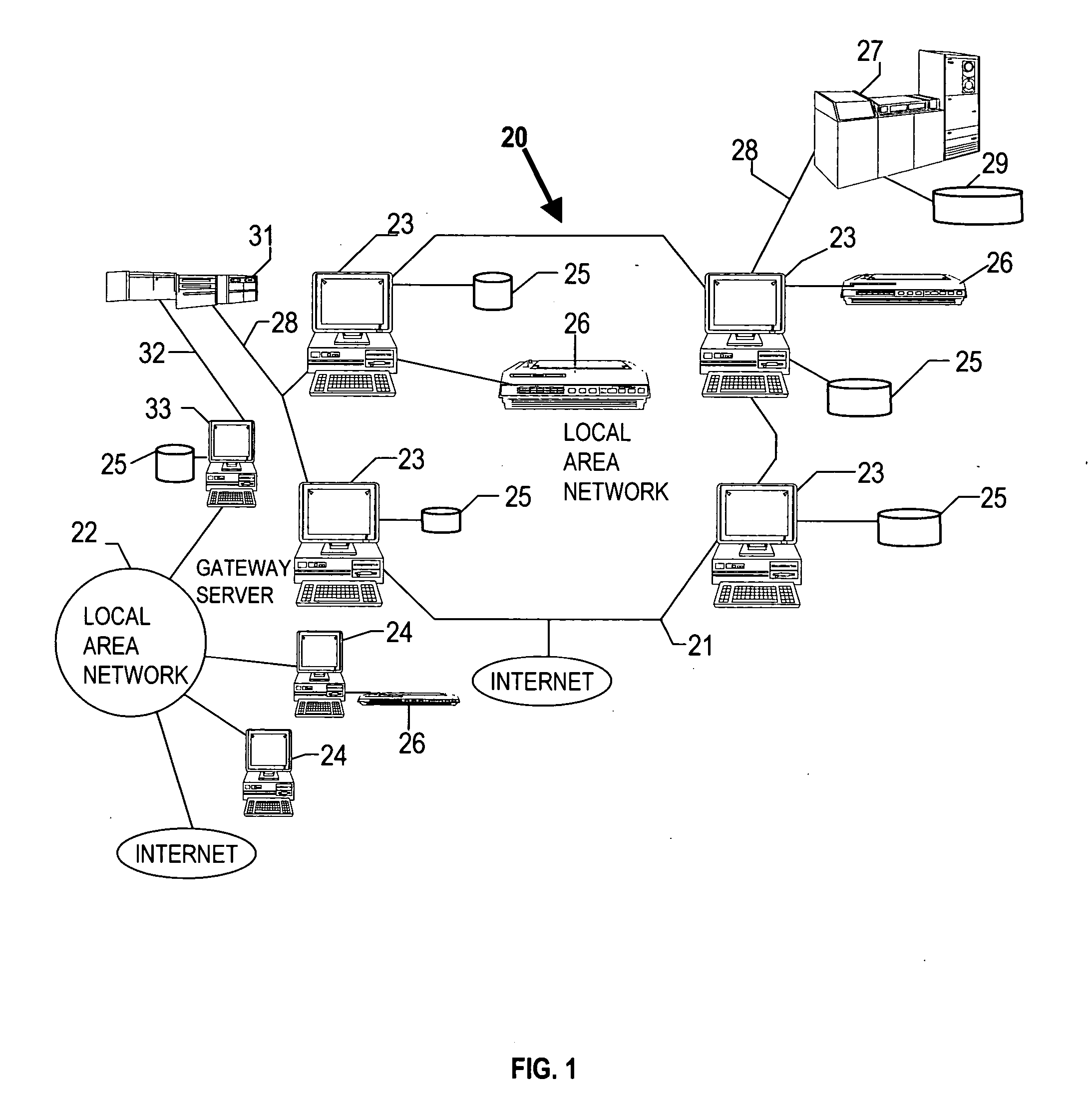

In computing, load balancing improves the distribution of workloads across multiple computing resources, such as computers, a computer cluster, network links, central processing units, or disk drives. Load balancing aims to optimize resource use, maximize throughput, minimize response time, and avoid overload of any single resource. Using multiple components with load balancing instead of a single component may increase reliability and availability through redundancy. Load balancing usually involves dedicated software or hardware, such as a multilayer switch or a Domain Name System server process.
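
As a minimal illustration of two common distribution policies, the sketch below implements round-robin and least-connections selection over a fixed set of backends. The `Backend` class and the backend names are hypothetical. Real balancers, such as multilayer switches or DNS-based balancers, also add health checks and weights, and those are omitted here.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Backend:
    name: str
    active_connections: int = 0  # current number of open connections


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._ring = cycle(backends)

    def pick(self):
        return next(self._ring)


class LeastConnectionsBalancer:
    """Sends each request to the backend with the fewest open connections."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self):
        # min() returns the first backend on ties, keeping the choice deterministic
        chosen = min(self.backends, key=lambda b: b.active_connections)
        chosen.active_connections += 1
        return chosen

    def release(self, backend):
        backend.active_connections -= 1


if __name__ == "__main__":
    servers = [Backend("a"), Backend("b"), Backend("c")]
    rr = RoundRobinBalancer(servers)
    print([rr.pick().name for _ in range(4)])  # ['a', 'b', 'c', 'a']
    lc = LeastConnectionsBalancer(servers)
    first = lc.pick()   # a (all tied at 0)
    second = lc.pick()  # b
    lc.release(first)
    print(lc.pick().name)  # a, because it is back to 0 open connections
```

Round-robin ignores current load. Least-connections reacts to load but needs the balancer to track when each request finishes, which is the job of `release`.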

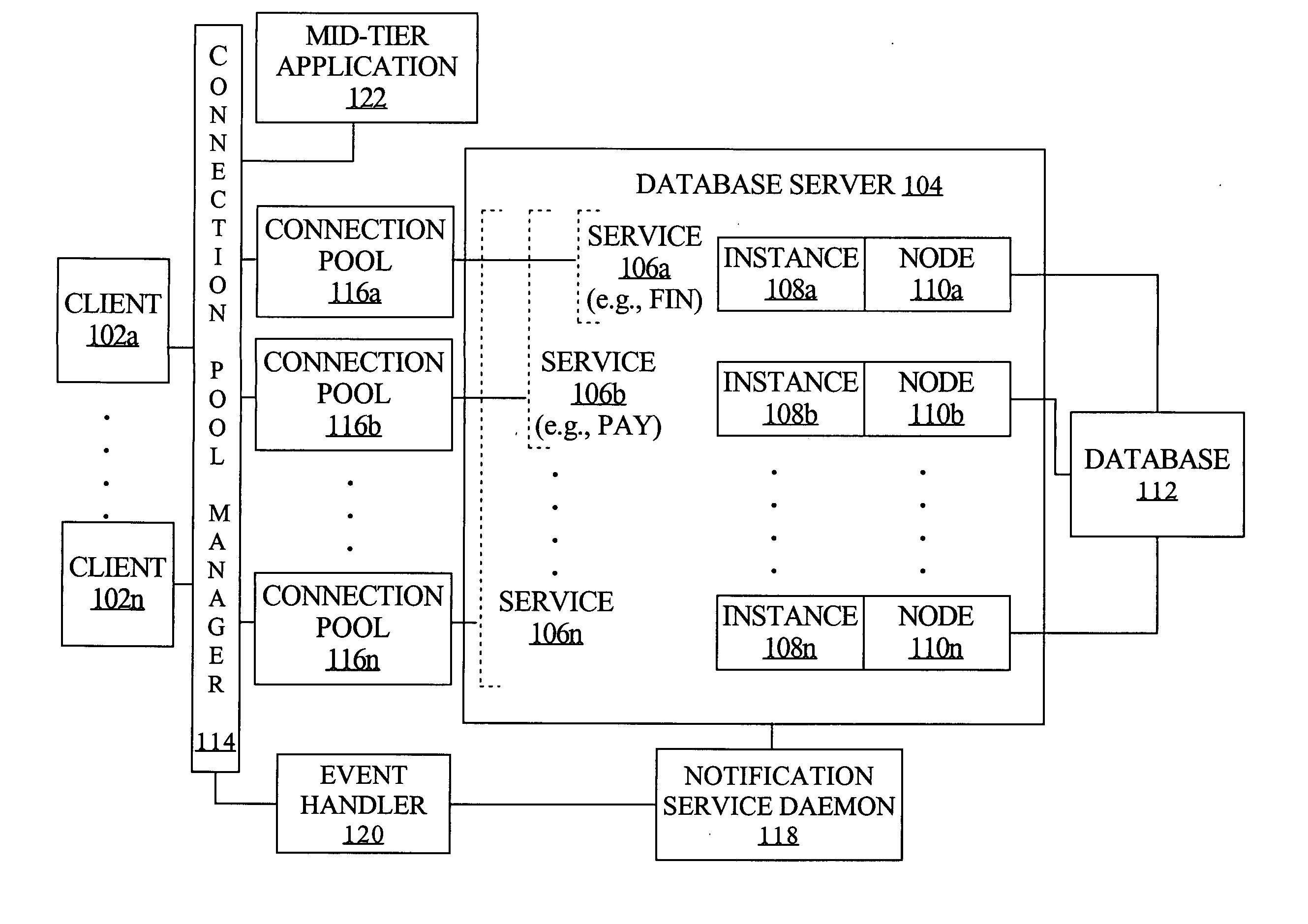

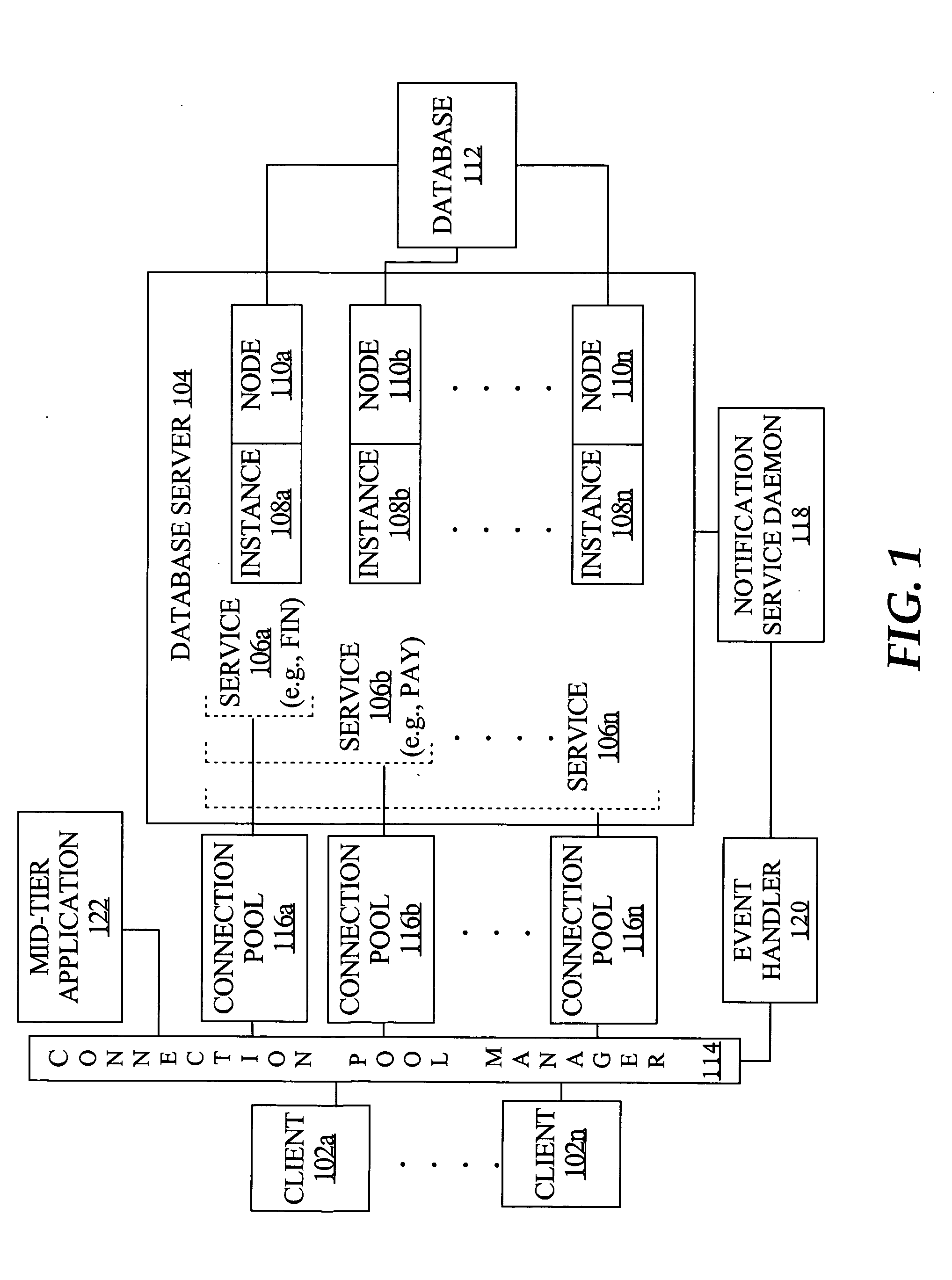

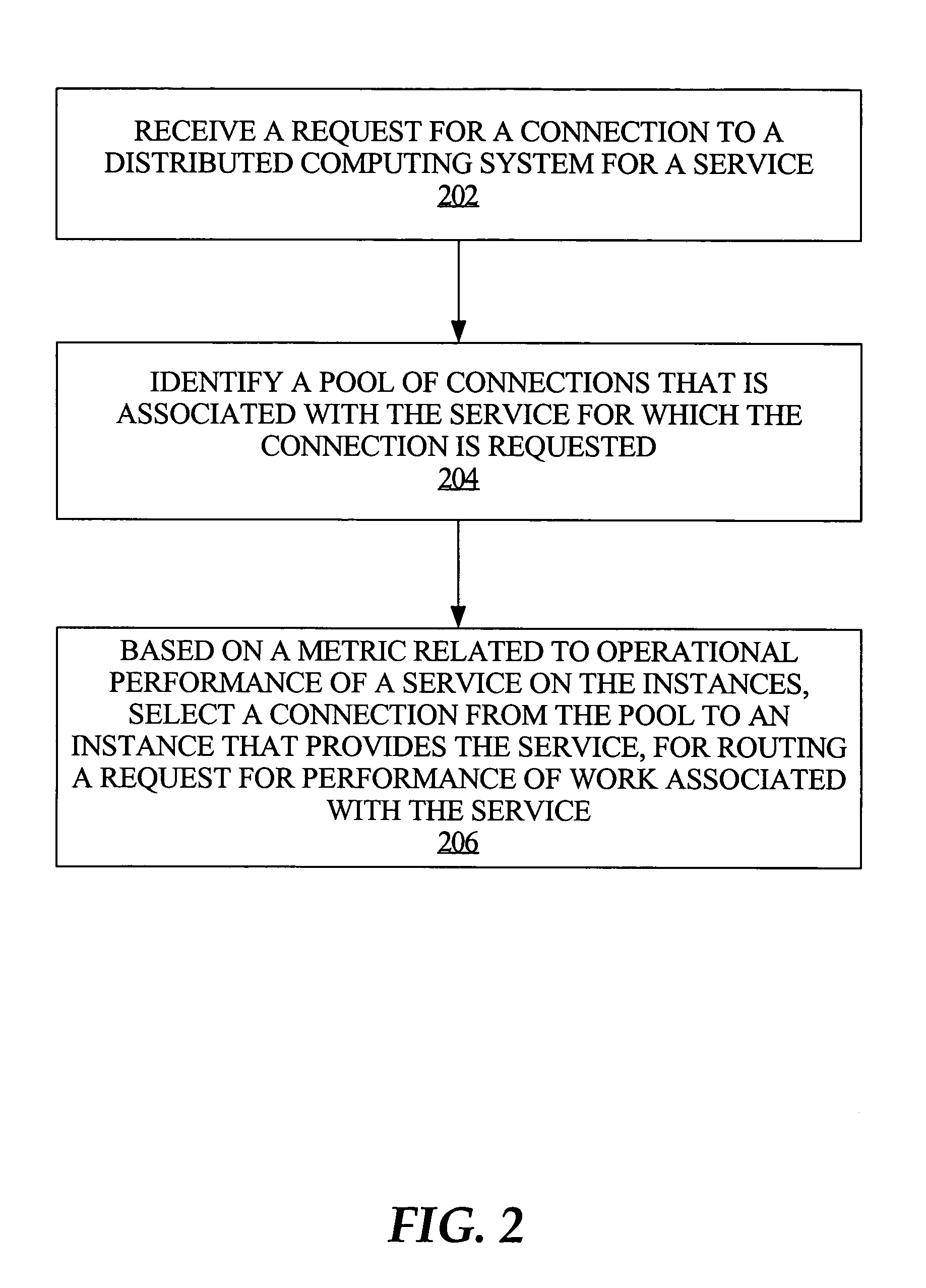

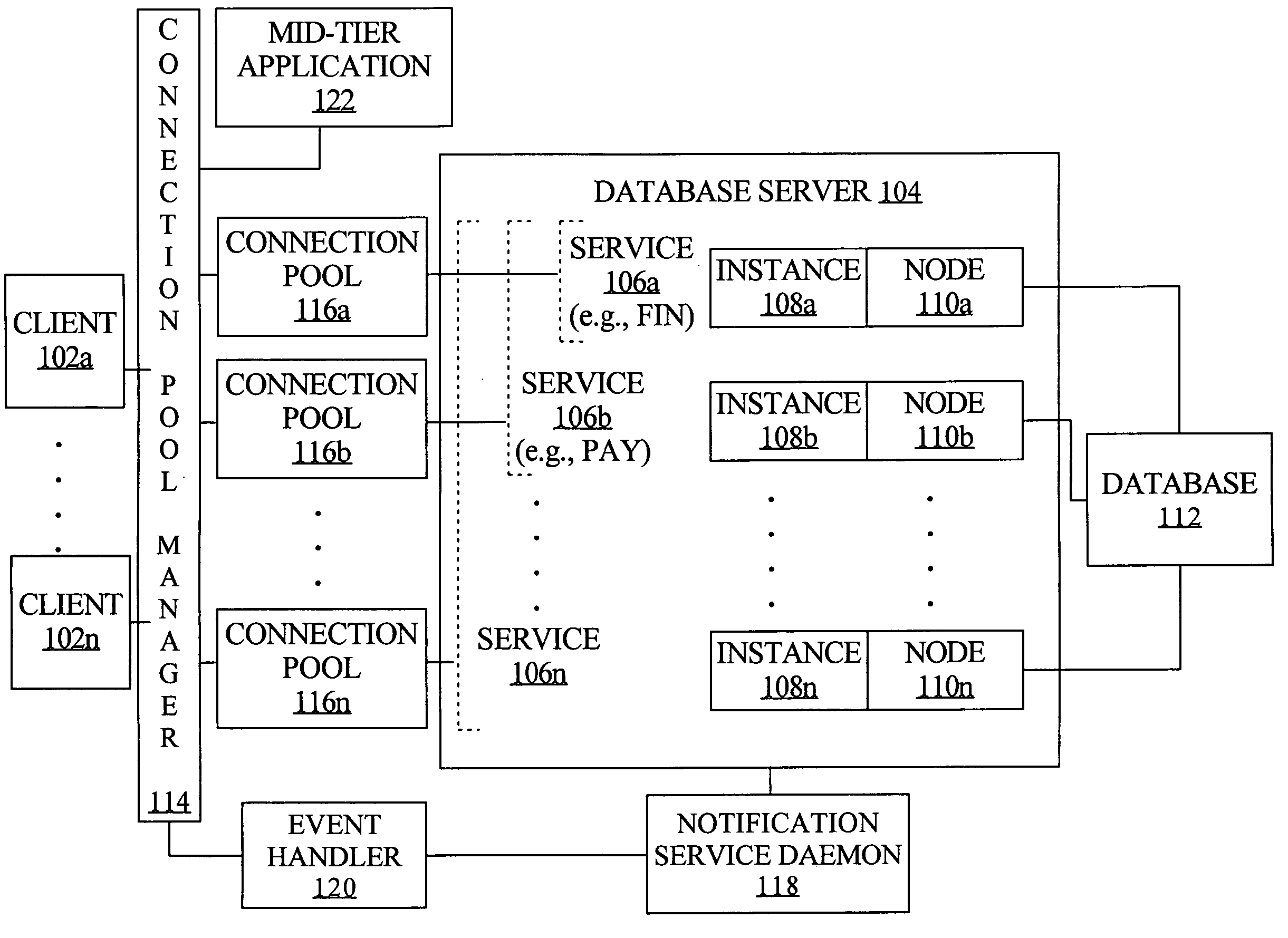

Connection pool use of runtime load balancing service performance advisories

Runtime connection load balancing of work across connections to a clustered computing system involves routing requests for a service based on the current operational performance of each instance that offers the service. A connection to an instance that provides the service is selected from an identified connection pool, and the work request is routed over that connection. The operational performance of the instances may be represented by performance information that characterizes the response time and/or throughput of the service provided by a particular instance on a respective node of the system, relative to the other instances that offer the same service.

Owner:ORACLE INT CORP
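
This is a minimal sketch of the routing step. It assumes the performance advisory arrives as a relative share of work per instance. `RuntimeLoadBalancedPool`, `update_advisory` and `get_connection` are hypothetical names for illustration, not Oracle's API. The sketch picks an instance by weighted random choice among instances that still have an idle connection, then hands out one of that instance's connections.

```python
import random
from collections import defaultdict


class RuntimeLoadBalancedPool:
    """Hypothetical pool that routes work using per-instance advisories.

    `advisory` maps instance name -> relative share of work, assumed to be
    derived from each instance's response time or throughput for the service.
    """

    def __init__(self, rng=None):
        self.idle = defaultdict(list)  # instance -> idle connection handles
        self.advisory = {}
        self.rng = rng or random.Random()

    def add_connection(self, instance, conn):
        self.idle[instance].append(conn)

    def update_advisory(self, advisory):
        self.advisory = dict(advisory)

    def get_connection(self):
        # Only consider instances with positive weight and an idle connection.
        candidates = [i for i, w in self.advisory.items() if w > 0 and self.idle[i]]
        if not candidates:
            raise RuntimeError("no idle connection to any advised instance")
        weights = [self.advisory[i] for i in candidates]
        instance = self.rng.choices(candidates, weights=weights, k=1)[0]
        return instance, self.idle[instance].pop()

    def release(self, instance, conn):
        self.idle[instance].append(conn)
```

Weighted random choice sends most work to the better-performing instances but still spreads requests across all of them, rather than flooding whichever instance scored highest.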

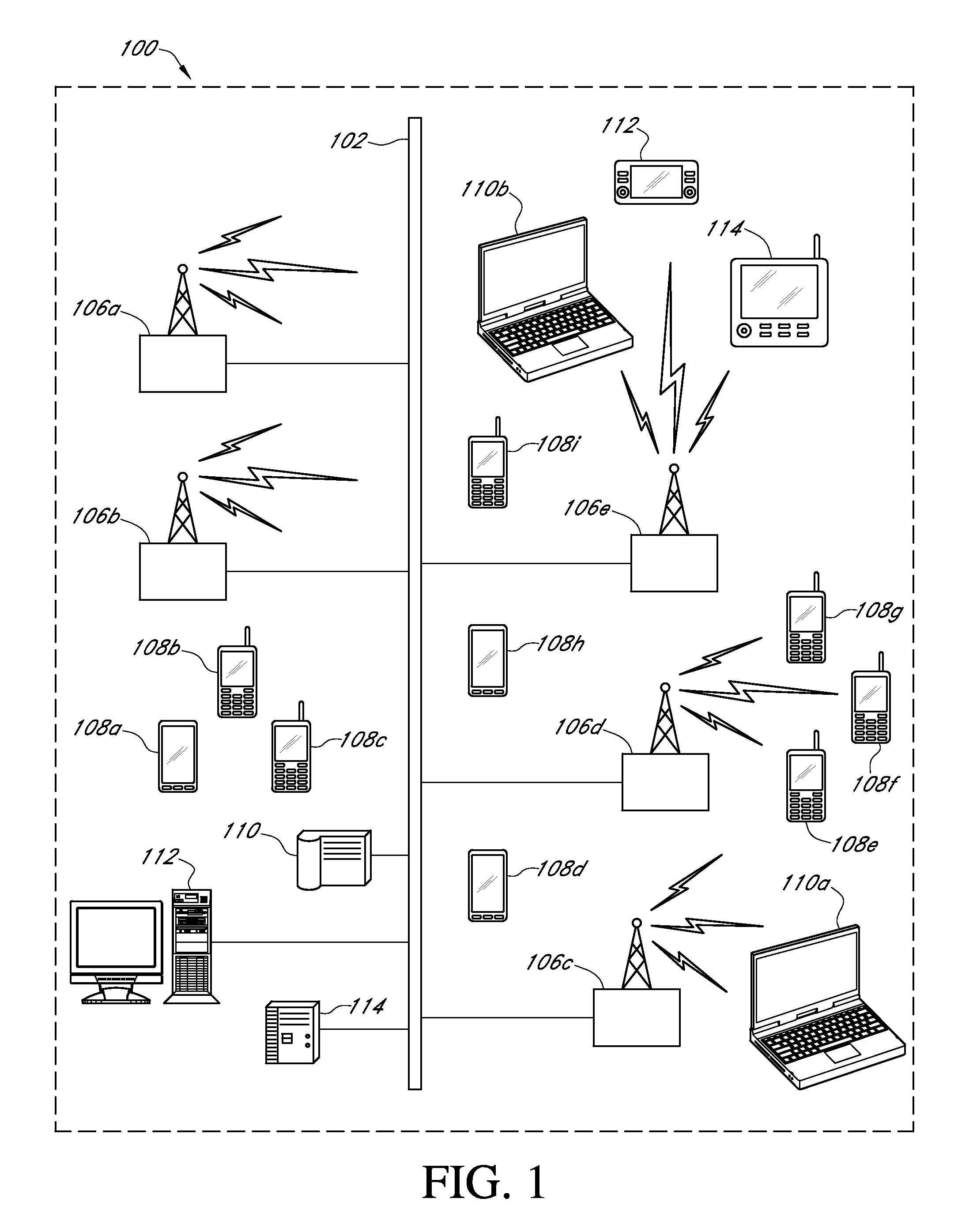

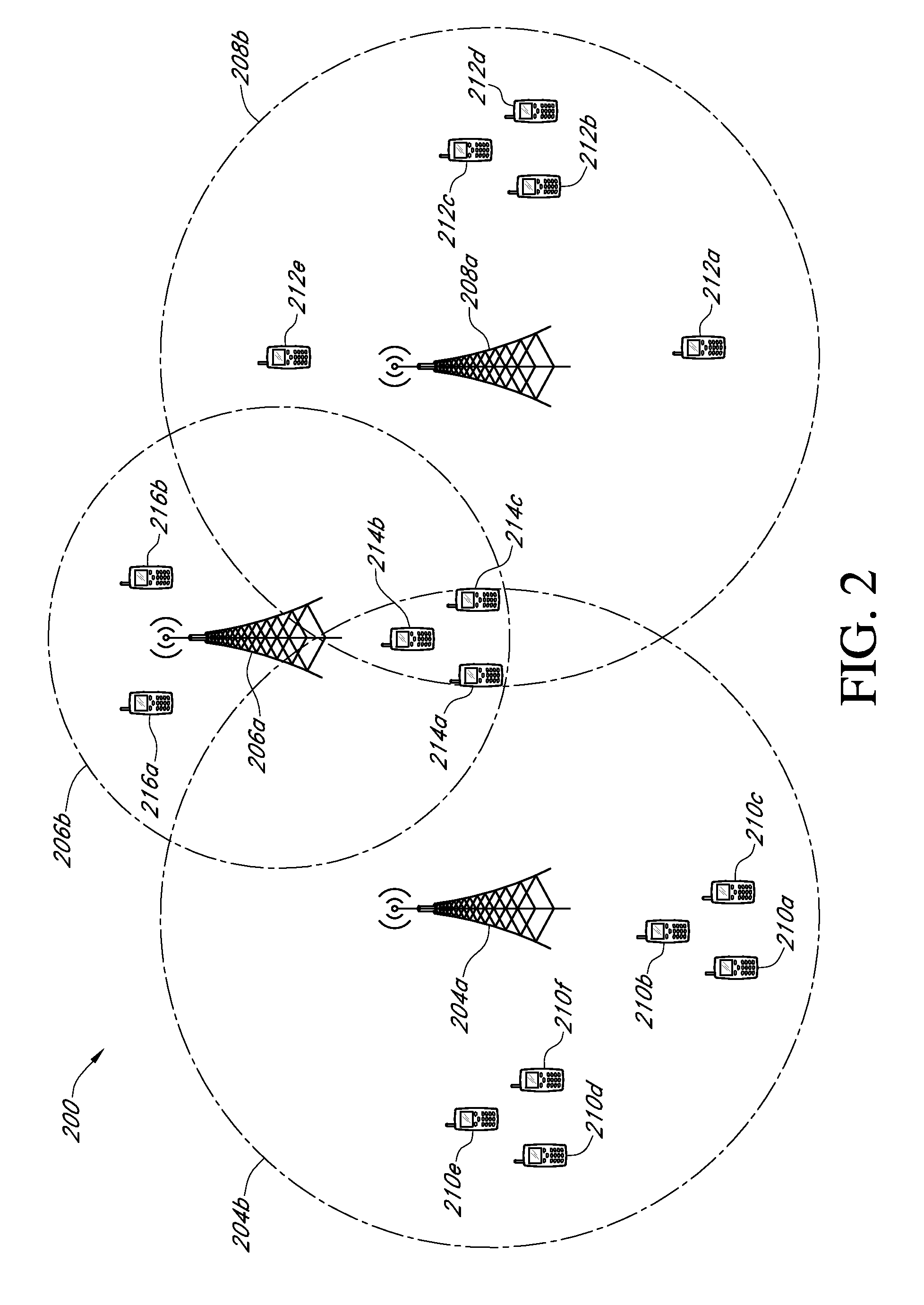

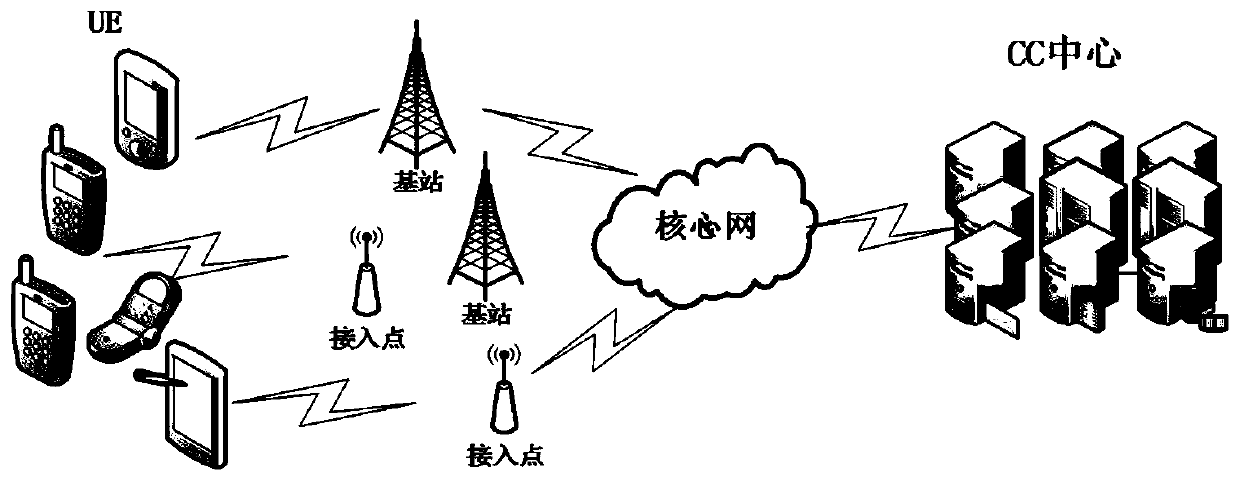

Systems and methods for autonomously determining network capacity and load balancing amongst multiple network cells

A networked computing system includes multiple network base stations, user equipment, a network resource controller (NRC), and a data communications network that carries communications among all devices of the system. The NRC determines the currently available radio channel capacity based on the user load of the user equipment in its region, and then forecasts a maximum radio channel capacity from that available capacity. The NRC may itself be a network base station, and it may determine how many additional user equipment it can support as part of the forecast maximum radio channel capacity. The NRC/base station may further be configured to set a handover threshold from the forecast maximum radio channel capacity. When its number of users exceeds that threshold, one or more user equipment may be handed over to a second network base station with better service capacity.

Owner:VIVO MOBILE COMM CO LTD
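
Below is a minimal sketch of the threshold-and-handover logic under loudly stated assumptions:
- Forecast capacity assumption: every user is taken to consume the cell's current average per-user load, and the forecast maximum is the current users plus the extra users that fit in the remaining capacity.
- Threshold assumption: the handover threshold is a hypothetical fraction (`ratio`) of that forecast.
- Naming: `BaseStation` and `rebalance` are illustrative names, not from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class BaseStation:
    name: str
    capacity: float                                 # total radio channel capacity (e.g. Mbps)
    user_loads: dict = field(default_factory=dict)  # user id -> load on this cell

    def available_capacity(self):
        return max(self.capacity - sum(self.user_loads.values()), 0.0)

    def forecast_max_users(self):
        """Current users plus extra users that fit at the current average load."""
        n = len(self.user_loads)
        if n == 0:
            return 0  # no load data to forecast from (assumption)
        avg = sum(self.user_loads.values()) / n
        extra = int(self.available_capacity() // avg) if avg > 0 else 0
        return n + extra


def rebalance(src, dst, ratio=0.8):
    """Hand users over from src to dst while src is above its threshold.

    The threshold (ratio * forecast maximum users) is a hypothetical choice.
    """
    threshold = int(src.forecast_max_users() * ratio)
    moved = []
    # Move the lightest users first, and only while dst can absorb their load.
    for user, load in sorted(src.user_loads.items(), key=lambda kv: kv[1]):
        if len(src.user_loads) <= threshold:
            break
        if dst.available_capacity() < load:
            break
        dst.user_loads[user] = src.user_loads.pop(user)
        moved.append(user)
    return moved
```

For example, a full cell with five users at 2 Mbps each on a 10 Mbps channel forecasts a maximum of five users. With `ratio=0.8` that gives a threshold of four, so one user is handed over.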

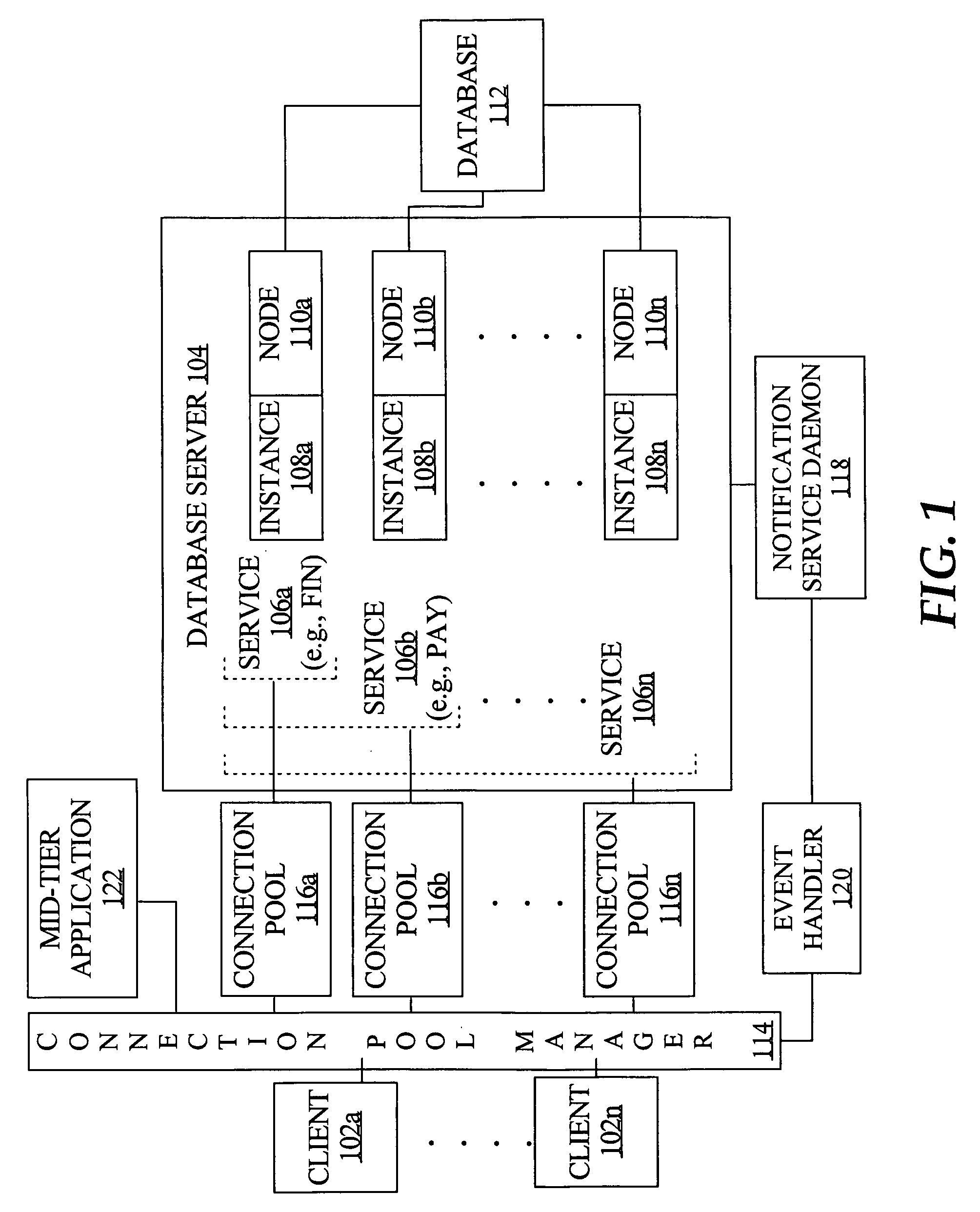

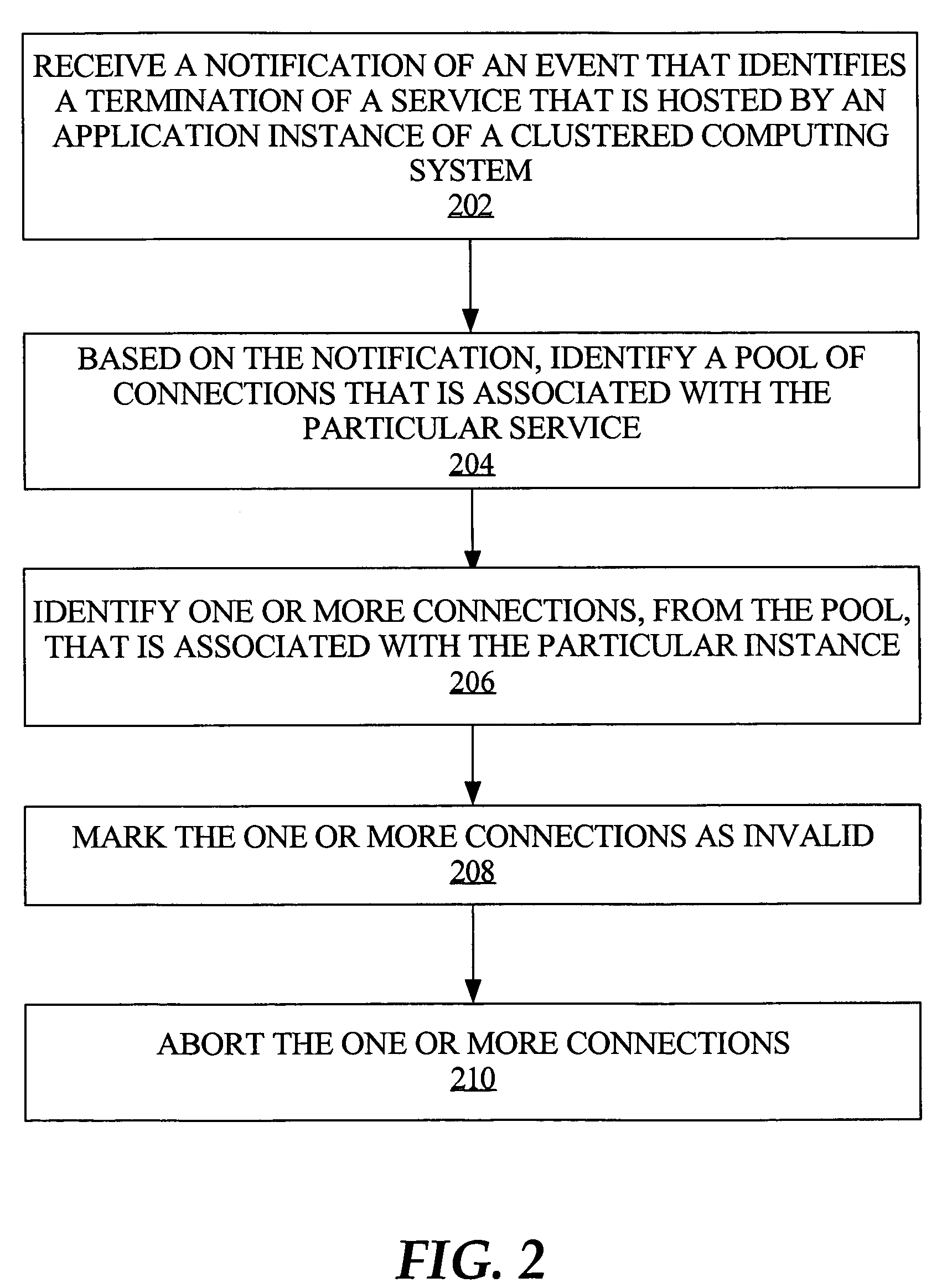

Fast reorganization of connections in response to an event in a clustered computing system

Status: Active | Publication: US20050038801A1 | Tags: Easy to handle, Rapid positioning, Error detection/correction, Digital data processing details, Parallel computing, Connection pool

Techniques for fast recovery and/or balancing of connections to a clustered computing system manage those connections by determining how many connections to load-balance across nodes and by triggering the creation of such connections. In one aspect, a connection pool manager receives a notification of an event concerning the clustered computing system. It then identifies a pool of connections to the system based on the notification and processes one or more connections from the pool in response to the event. According to an embodiment, the notification comprises the identities of the service, database, server instance, and machine that correspond to the event.

Owner:ORACLE INT CORP
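
This minimal sketch shows how a pool manager might react to such notifications. It assumes two hypothetical event kinds, `"UP"` and `"DOWN"`, and models each pool as connection counts per instance rather than real connection objects. `Event` and `ConnectionPoolManager` are illustrative names. On each event the manager re-spreads a target number of connections evenly over the live instances.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str        # "UP" or "DOWN" (hypothetical event types)
    service: str
    database: str
    instance: str
    host: str


class ConnectionPoolManager:
    def __init__(self, target_size):
        self.target_size = target_size
        self.pools = {}  # (service, database) -> {instance: connection count}

    def handle(self, event):
        pool = self.pools.setdefault((event.service, event.database), {})
        if event.kind == "DOWN":
            # Drop every connection to the failed instance at once instead of
            # waiting for each one to time out.
            pool.pop(event.instance, None)
        elif event.kind == "UP":
            pool.setdefault(event.instance, 0)
        self._rebalance(pool)
        return pool

    def _rebalance(self, pool):
        # Spread target_size connections as evenly as possible over live instances.
        if not pool:
            return
        base, extra = divmod(self.target_size, len(pool))
        for i, instance in enumerate(sorted(pool)):
            pool[instance] = base + (1 if i < extra else 0)
```

The point of the design is to act on the notification itself. Connections to a failed instance are cleaned up immediately, and new capacity is used as soon as an instance comes up.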

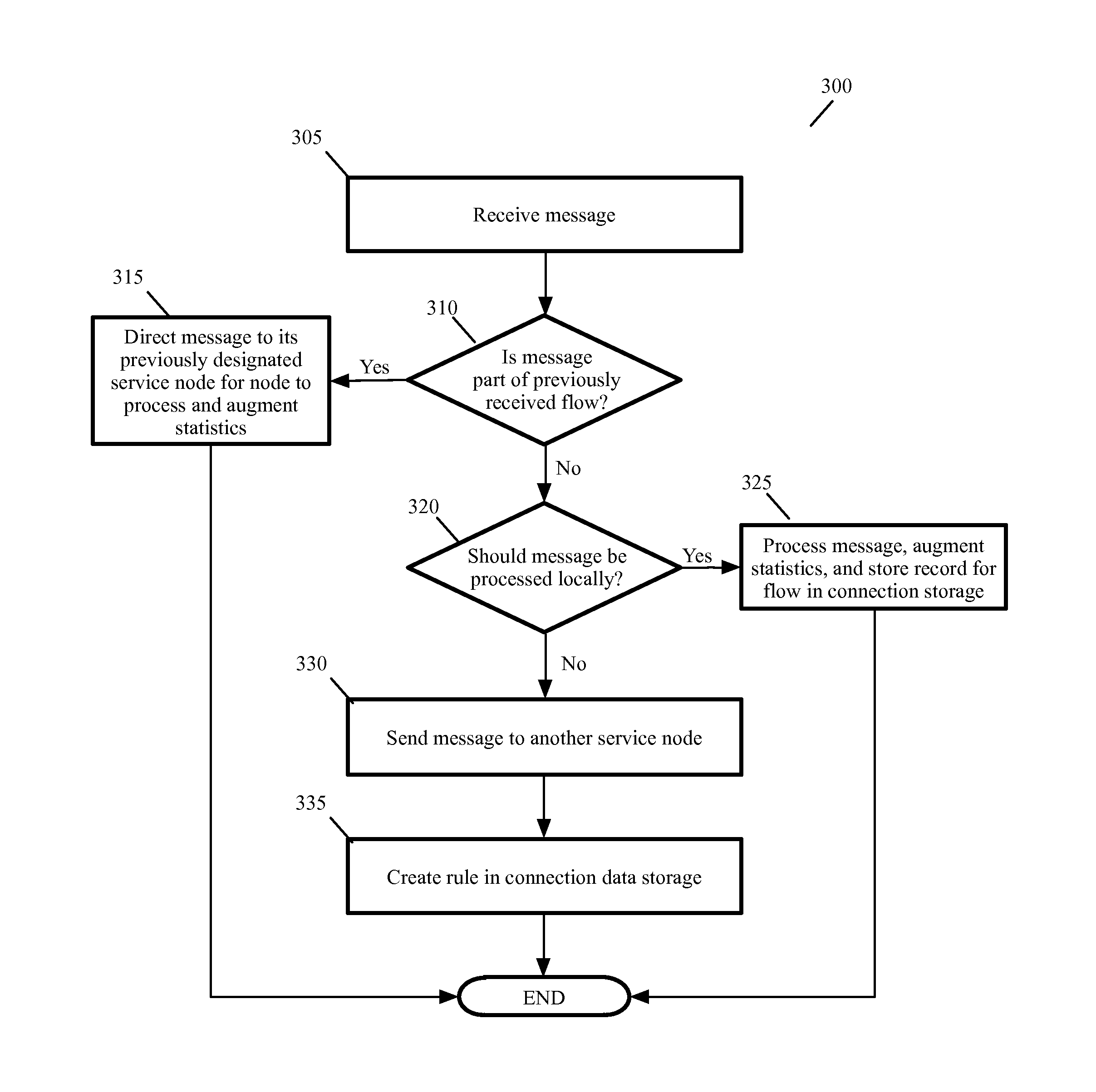

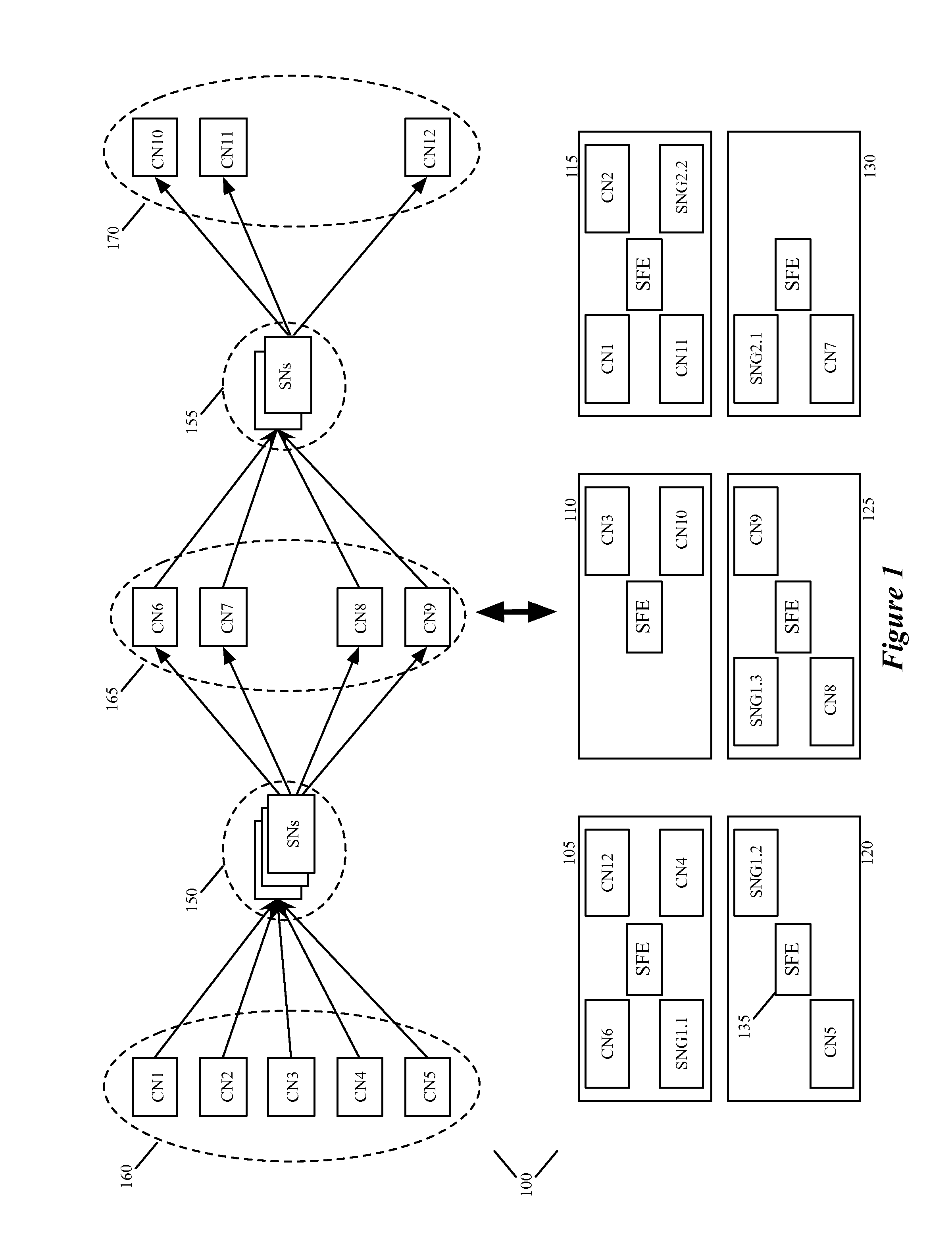

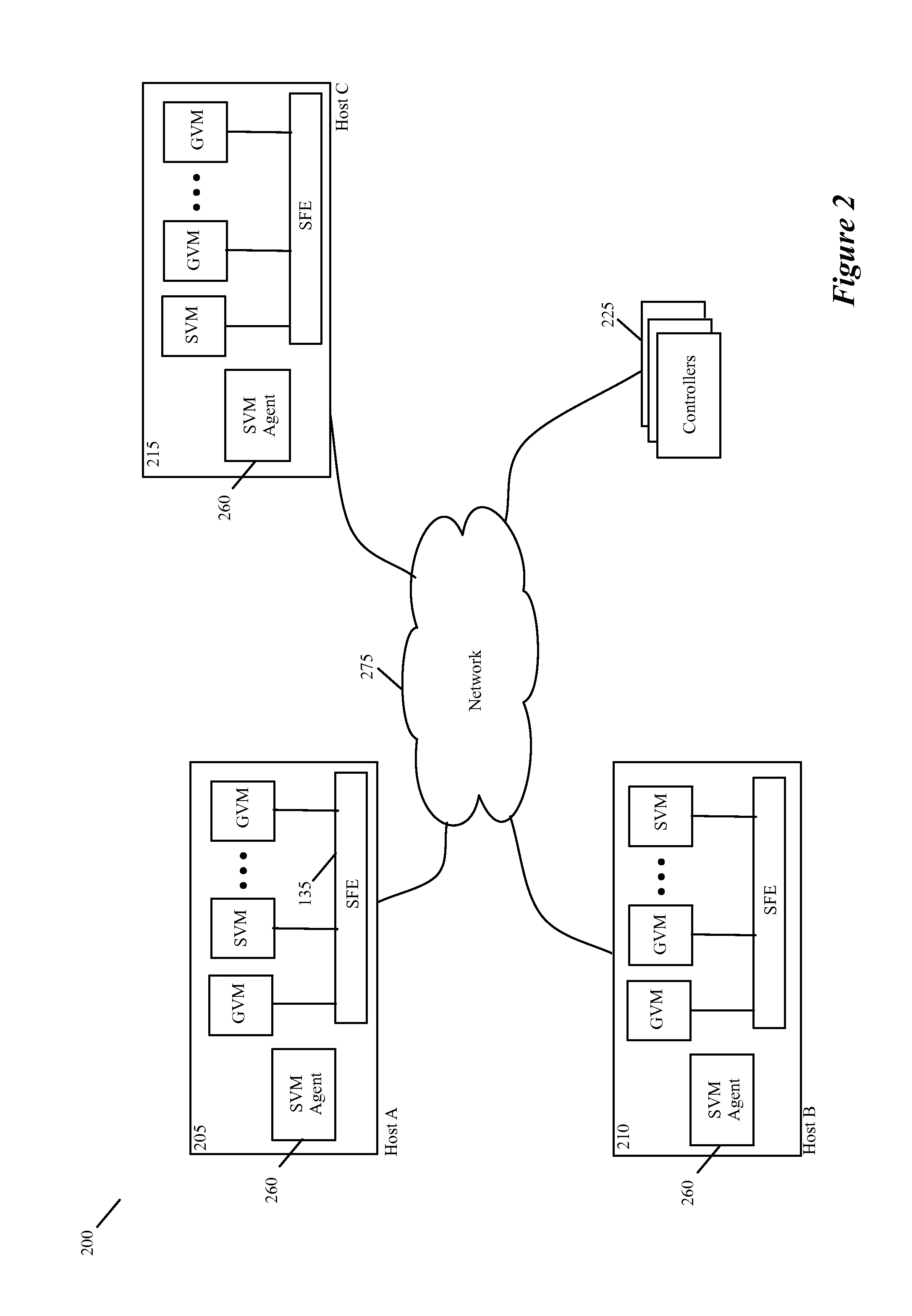

Method and apparatus for providing a service with a plurality of service nodes

Some embodiments provide an elastic architecture for providing a service in a computing system. To perform a service on the data messages, the service architecture uses a service node (SN) group that includes one primary service node (PSN) and zero or more secondary service nodes (SSNs). The service can be performed on a data message by either the PSN or one of the SSNs. However, in addition to performing the service, the PSN also performs a load balancing operation that assesses the load on each service node (i.e., on the PSN or each SSN), and based on this assessment, has the data messages distributed to the service node(s) in its SN group. Based on the assessed load, the PSN in some embodiments also has one or more SSNs added to or removed from its SN group. To add or remove an SSN to or from the service node group, the PSN in some embodiments directs a set of controllers to add (e.g., instantiate or allocate) or remove the SSN to or from the SN group. Also, to assess the load on the service nodes, the PSN in some embodiments receives message load data from the controller set, which collects such data from each service node. In other embodiments, the PSN receives such load data directly from the SSNs.

Owner:NICIRA
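
This is a minimal sketch of a PSN that both dispatches and resizes its group. It assumes loads are reported as fractions in [0, 1] and that the controller set exposes hypothetical `add_node()` and `remove_node(name)` methods. The high and low watermarks are assumed policy values, not taken from the patent.

```python
class PrimaryServiceNode:
    """Hypothetical PSN: serves, dispatches, and resizes its service-node group."""

    def __init__(self, controller, high=0.8, low=0.2):
        self.controller = controller    # object with add_node() / remove_node(name)
        self.loads = {"psn": 0.0}       # node name -> load in [0, 1]; PSN included
        self.high, self.low = high, low

    def update_loads(self, reported):
        # Load data may come from the controller set or directly from SSNs.
        self.loads.update(reported)

    def dispatch(self):
        # Pick the least-loaded member of the group for the next data message.
        return min(self.loads, key=self.loads.get)

    def resize(self):
        avg = sum(self.loads.values()) / len(self.loads)
        if avg > self.high:
            # Ask the controllers to instantiate or allocate a new SSN.
            name = self.controller.add_node()
            self.loads[name] = 0.0
        elif avg < self.low and len(self.loads) > 1:
            # Remove the least-loaded SSN; the PSN itself is never removed.
            ssns = [n for n in self.loads if n != "psn"]
            victim = min(ssns, key=self.loads.get)
            self.controller.remove_node(victim)
            del self.loads[victim]
```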

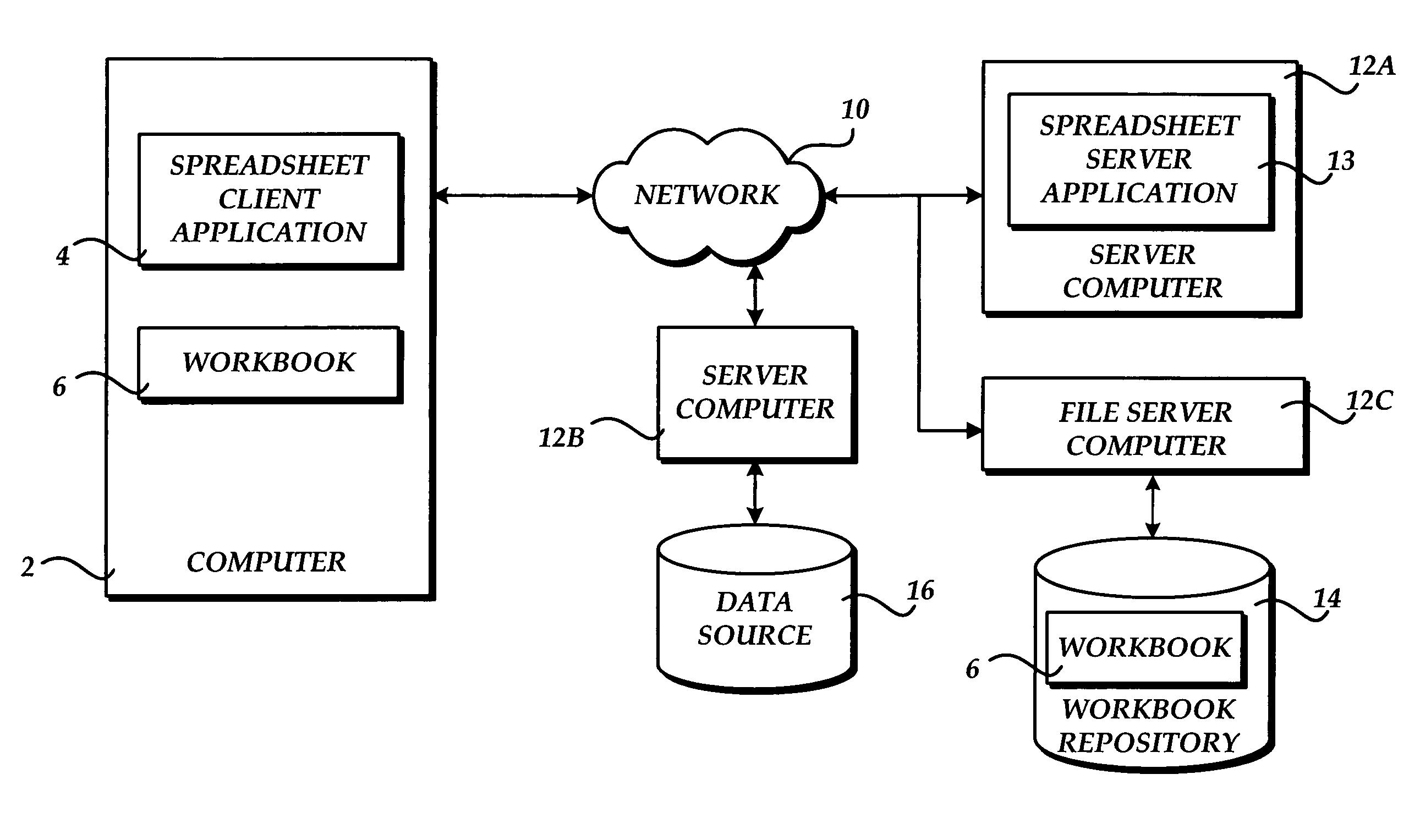

Load balancing based on cache content

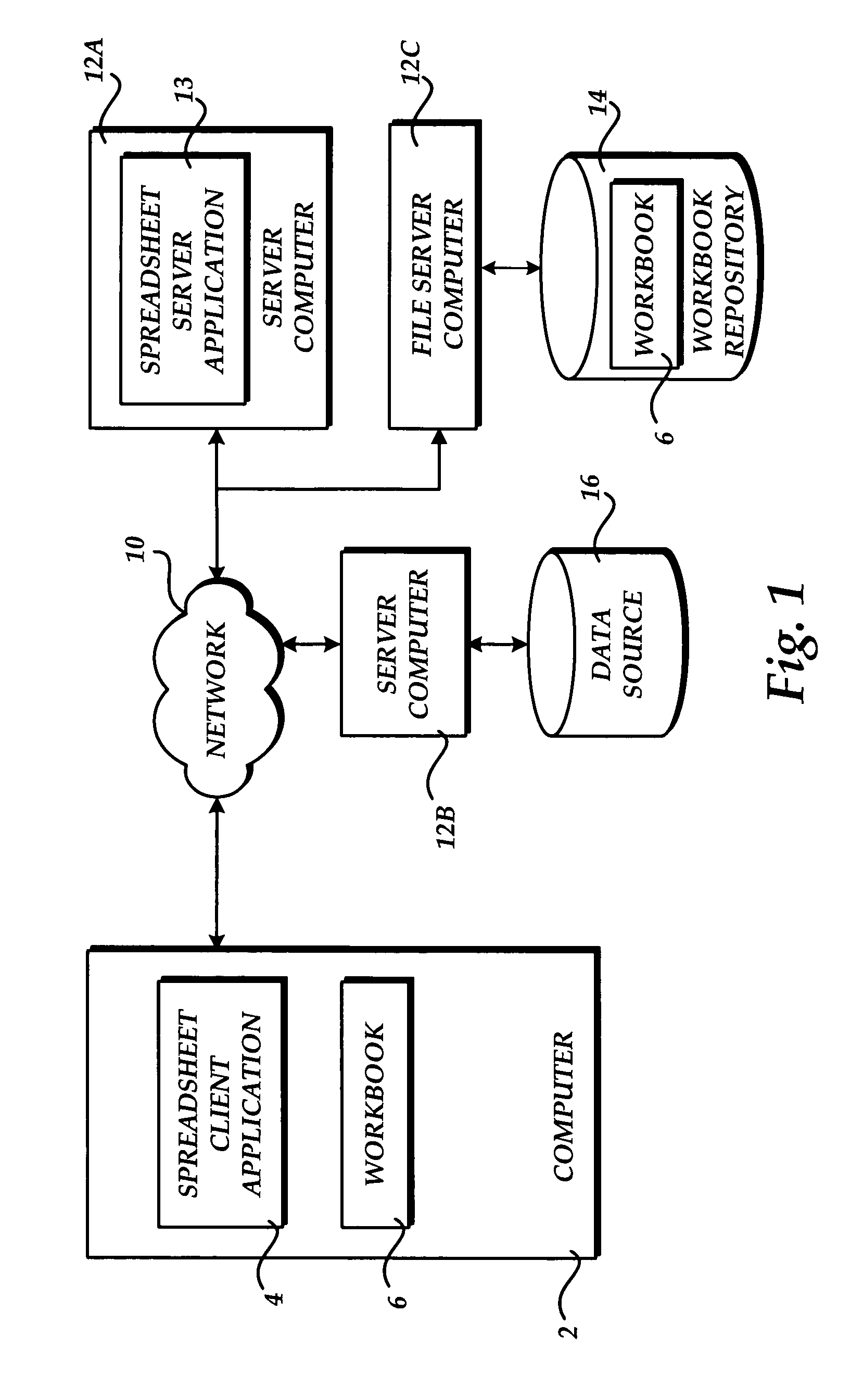

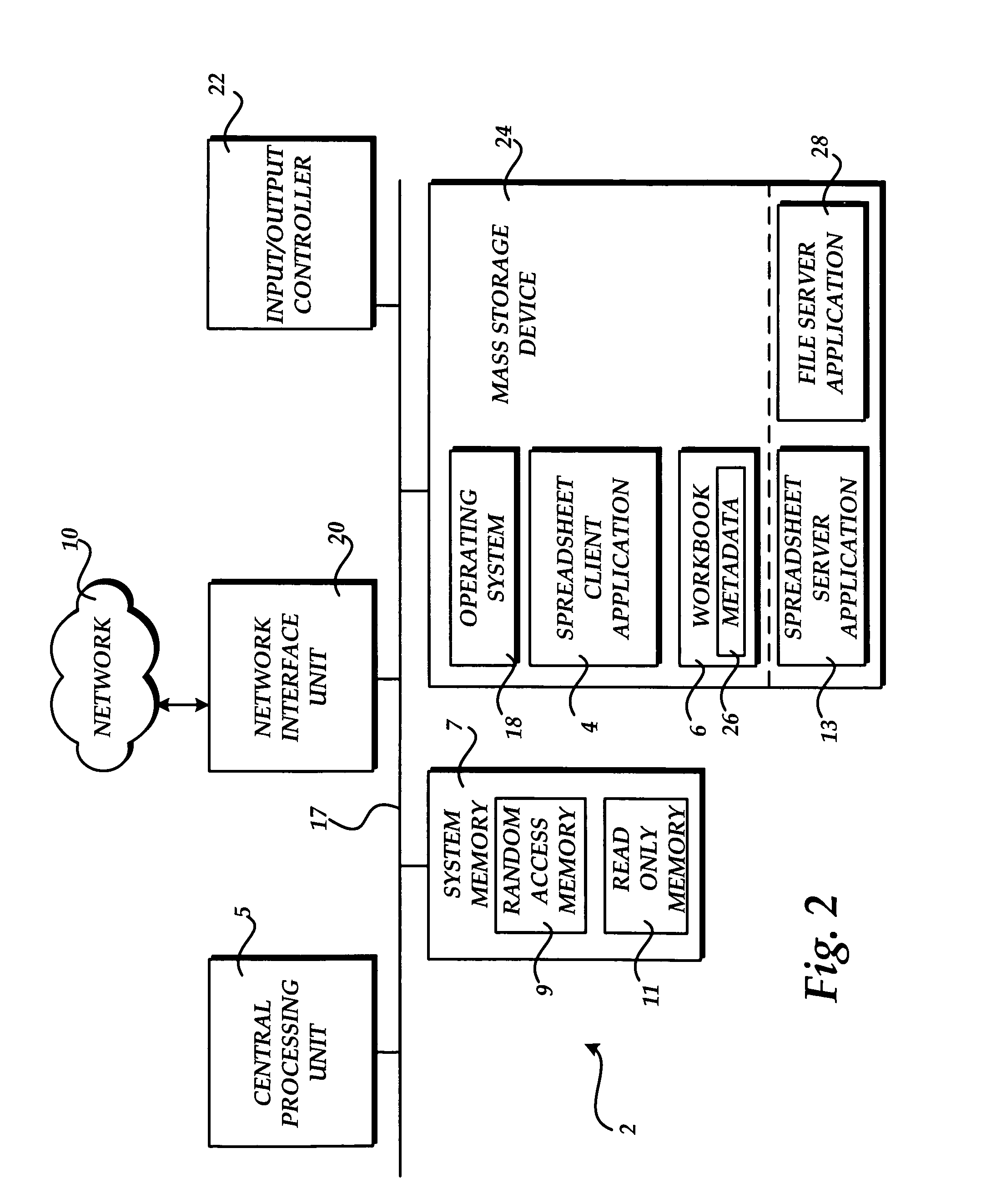

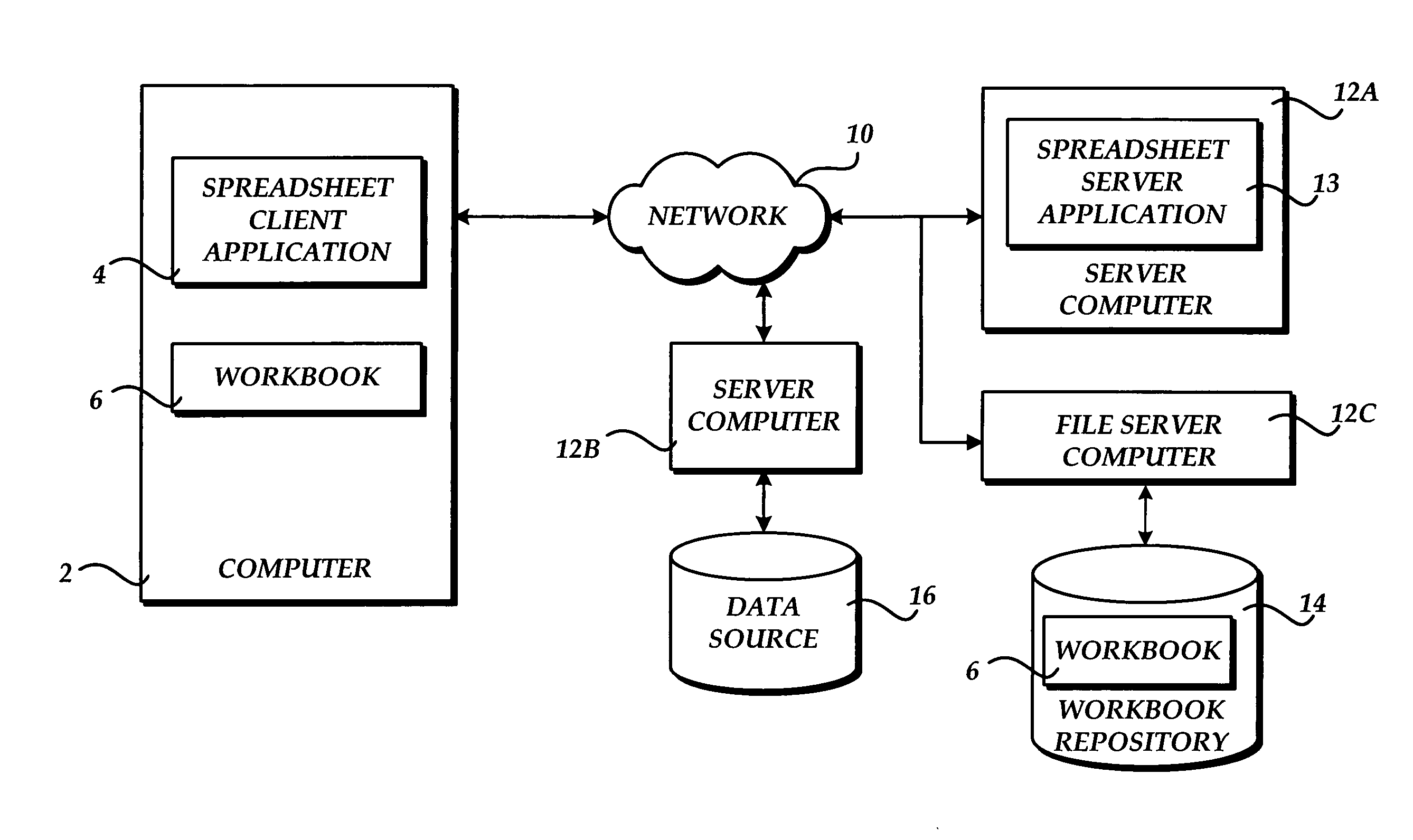

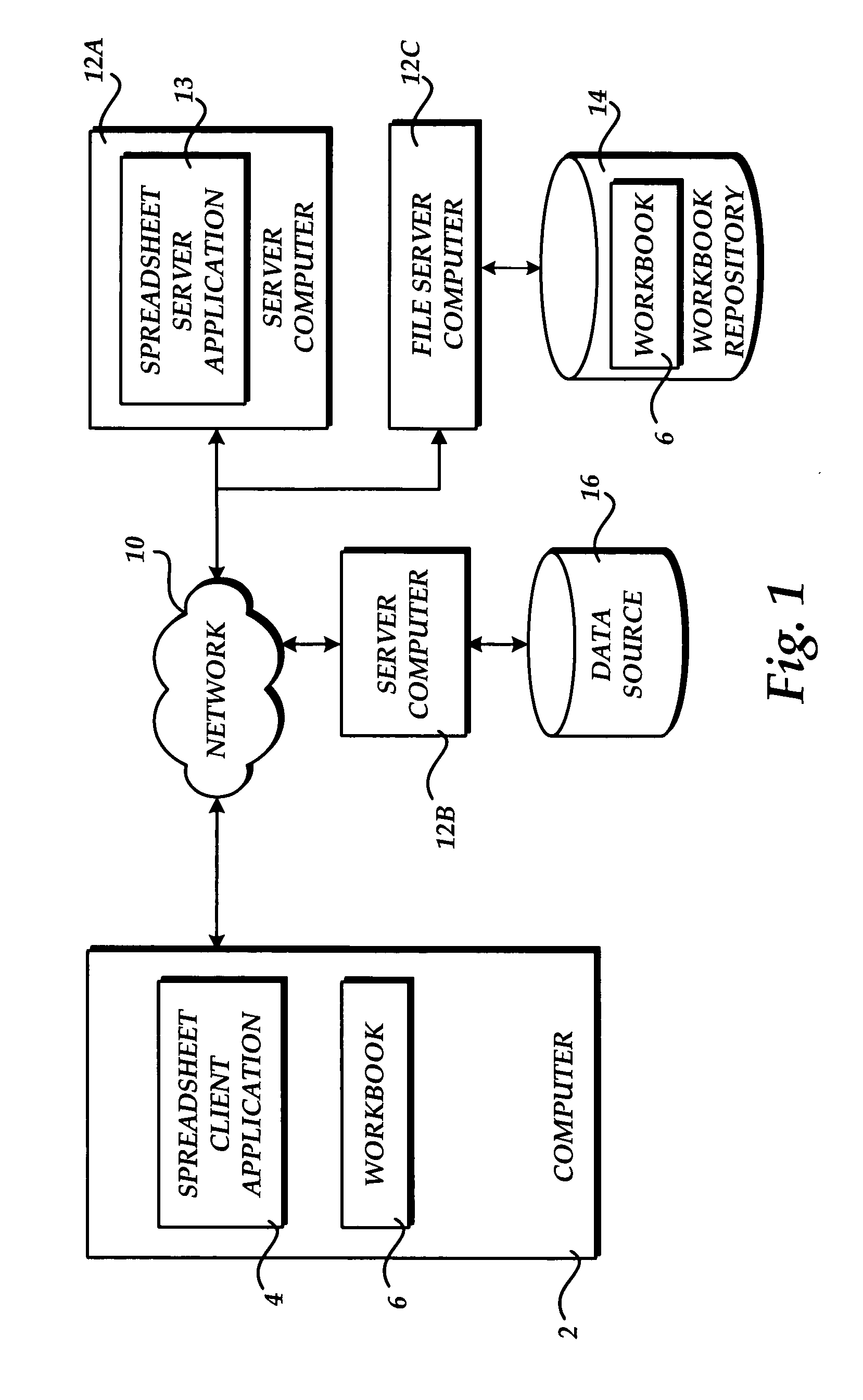

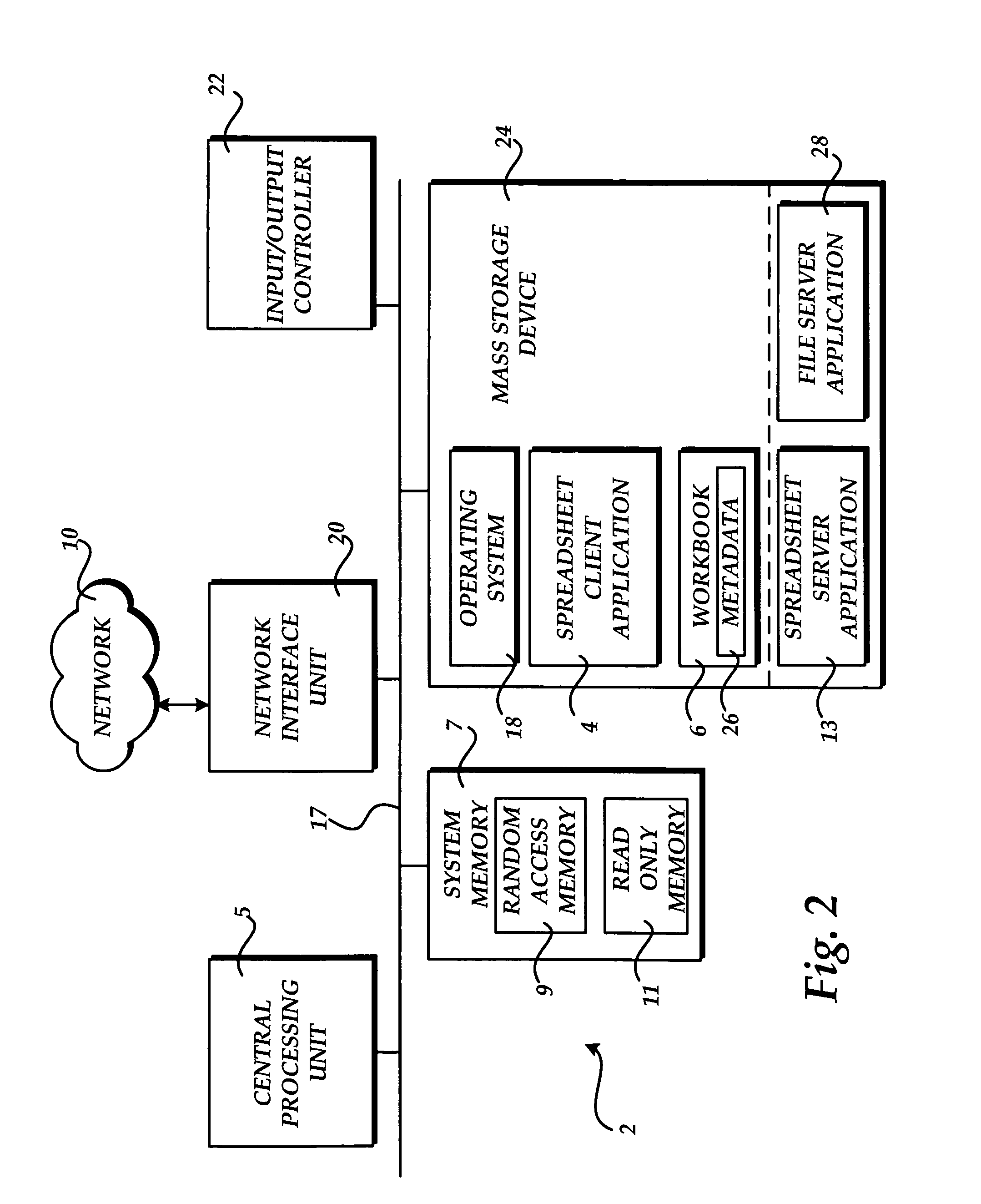

A method, schema, and computer-readable medium provide various means for load balancing computing devices in a multi-server environment. The method, schema, and computer-readable medium for load balancing computing devices in a multi-server environment may be utilized in a networked server environment, implementing a spreadsheet application for manipulating a workbook, for example. The method, schema, and computer-readable medium operate to load balance computing devices in a multi-server environment including determining whether a file, such as a spreadsheet application workbook, resides in the cache of a particular server, such as a calculation server. Upon meeting certain conditions, the user request may be directed to the particular server.

Owner:MICROSOFT TECH LICENSING LLC

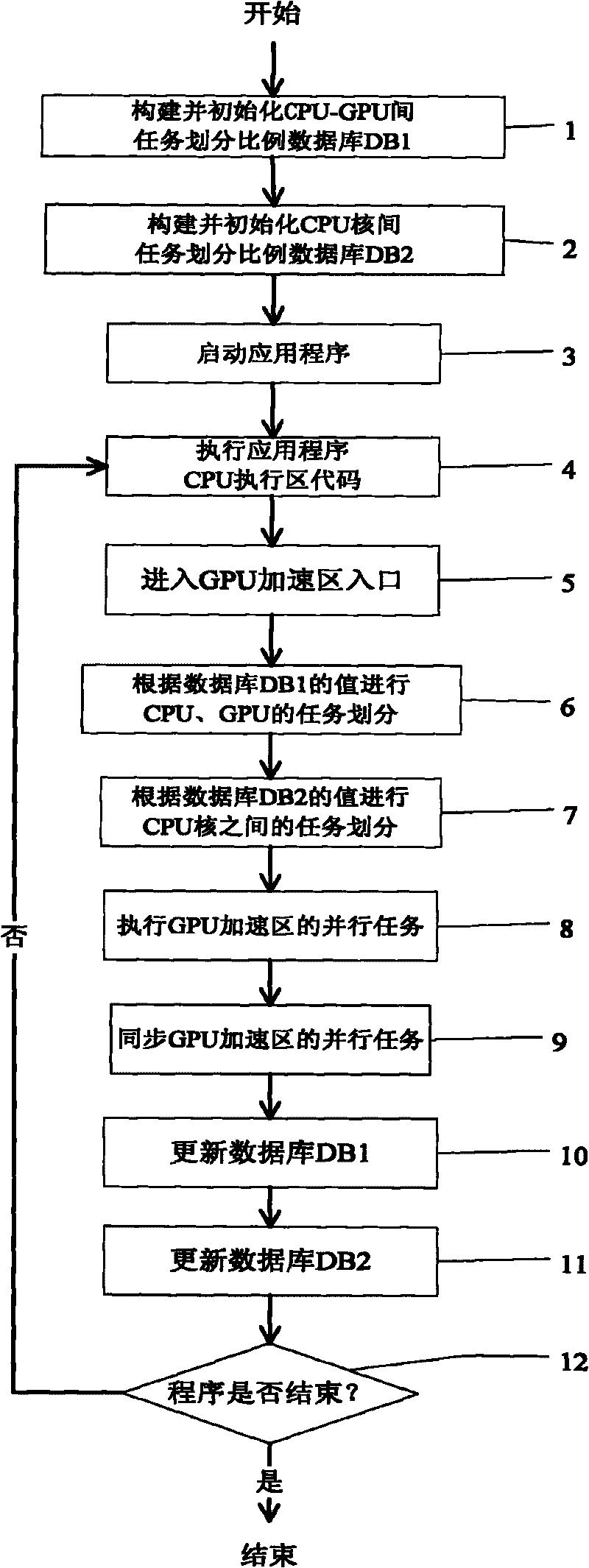

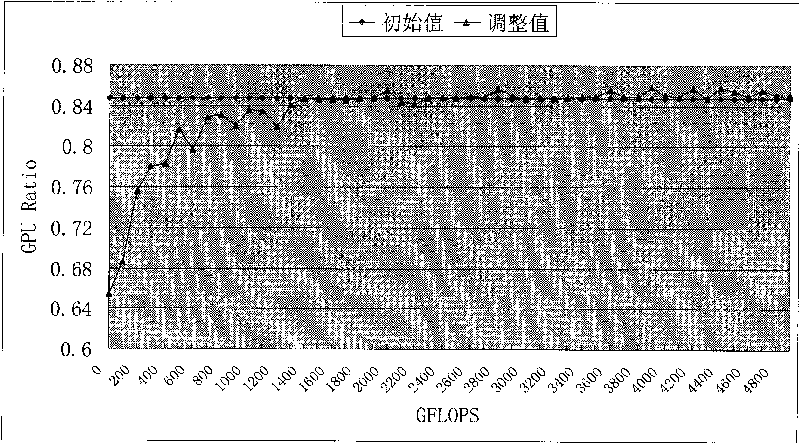

Method for dynamic partitioning of tasks between CPU and GPU based on load balancing

Status: Inactive | Publication: CN101706741A | Tags: Guaranteed task load balancing, Improve performance, Resource allocation, Application software, Real-time computing

Owner:NAT UNIV OF DEFENSE TECH

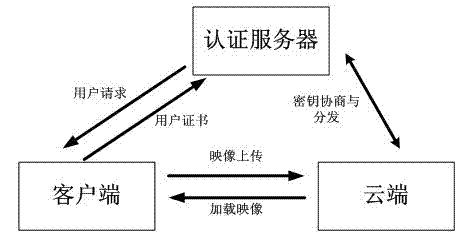

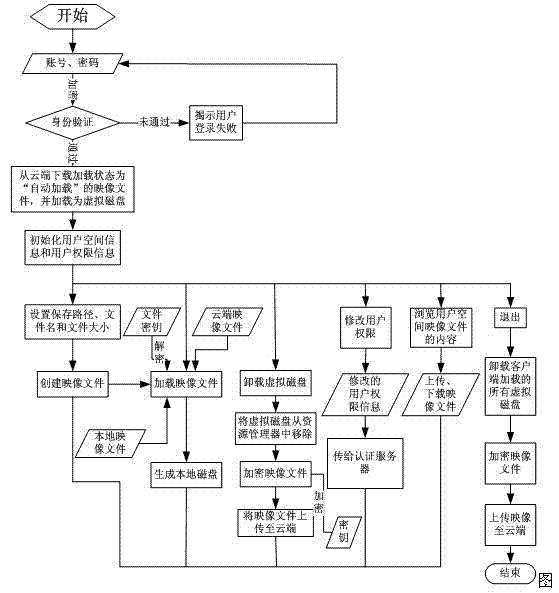

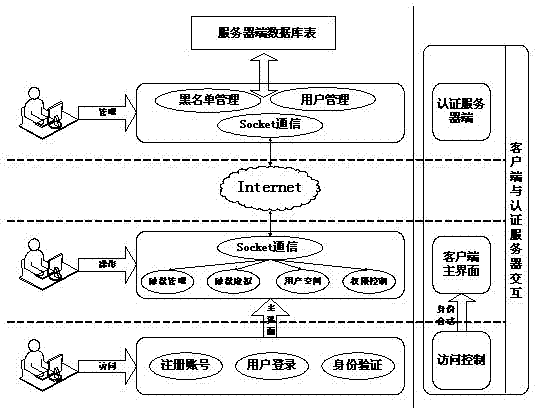

Network virtual disk file safety management method based on cloud computing

Status: Inactive | Publication: CN102394894A | Tags: Increase legitimacy, Improve security, Transmission, Cloud computing, Load balancing (computing)

The invention provides a cloud-computing-based security management method for network virtual disk files. It applies cloud computing ideas to achieve automatic load balancing and transparent expansion and shrinking of storage space. Public clouds cannot provide secure data isolation or authorized use. To overcome this, the method can use the Hadoop cloud platform as a base to build a seamless, transparently encrypted virtual disk environment that provides distributed storage, data isolation, secure data sharing, and other functions.

Owner:WUHAN UNIV

Load balancing based on cache content

Status: Inactive | Publication: US20060161577A1 | Tags: Data processing applications, Digital data processing details, Load Shedding, Electronic form

A method, schema, and computer-readable medium provide various means for load balancing computing devices in a multi-server environment. The method, schema, and computer-readable medium for load balancing computing devices in a multi-server environment may be utilized in a networked server environment, implementing a spreadsheet application for manipulating a workbook, for example. The method, schema, and computer-readable medium operate to load balance computing devices in a multi-server environment including determining whether a file, such as a spreadsheet application workbook, resides in the cache of a particular server, such as a calculation server. Upon meeting certain conditions, the user request may be directed to the particular server.

Owner:MICROSOFT TECH LICENSING LLC
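
This is a minimal sketch of cache-aware routing. The abstract leaves the "certain conditions" unspecified, so this sketch assumes a single one: the caching server's load is below a hypothetical `max_load`. Otherwise the request falls back to the least-loaded server, which then caches the workbook. `CalcServer` and `route` are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class CalcServer:
    name: str
    load: float                              # e.g. CPU utilization in [0, 1]
    cached: set = field(default_factory=set)  # workbook ids held in this server's cache


def route(workbook_id, servers, max_load=0.75):
    """Prefer a server that already caches the workbook, if it is not overloaded.

    The max_load condition is an assumed stand-in for the unspecified
    "certain conditions"; otherwise fall back to the least-loaded server.
    """
    warm = [s for s in servers if workbook_id in s.cached and s.load < max_load]
    if warm:
        return min(warm, key=lambda s: s.load)
    target = min(servers, key=lambda s: s.load)
    target.cached.add(workbook_id)  # the workbook will now be loaded into its cache
    return target
```

Routing to a warm cache avoids reloading and recalculating a large workbook. The load check keeps one popular workbook from pinning all of its users to a single overloaded server.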

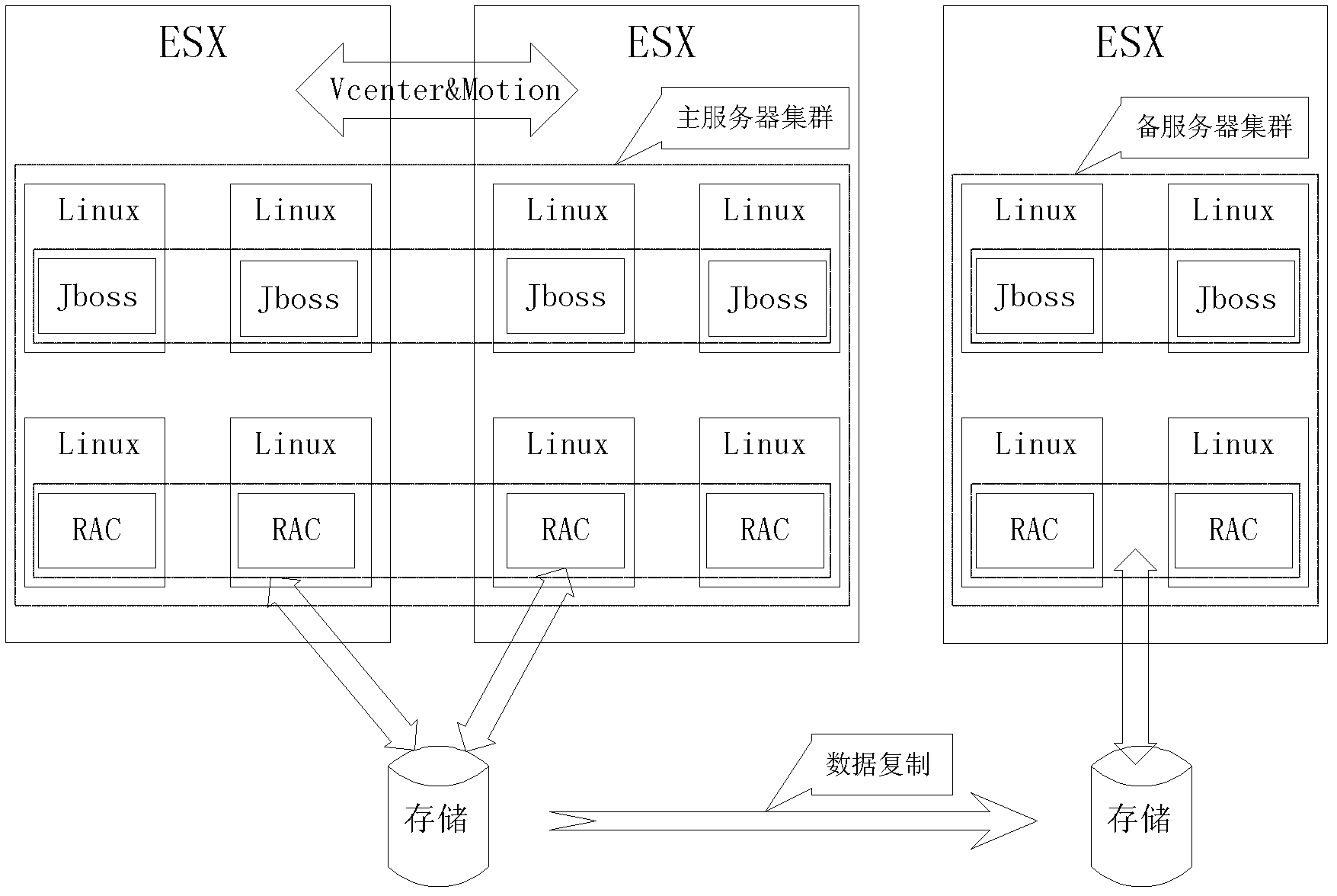

Cloud computing implementation method and system

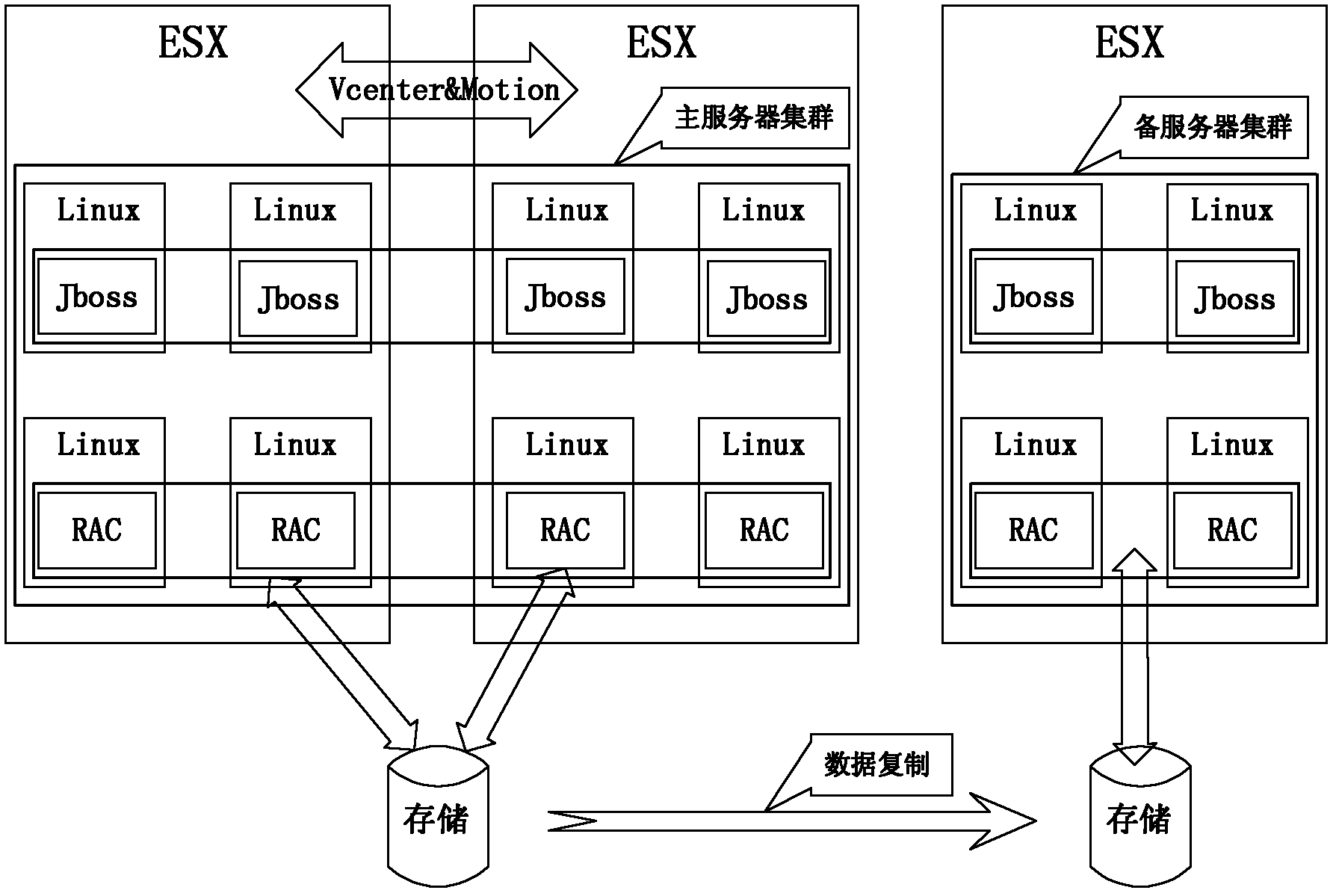

Status: Active | Publication: CN102325192A | Tags: Avoid pause, Guaranteed unobstructed, Transmission, Software simulation/interpretation/emulation, File system, Computer module

The invention discloses a cloud computing implementation method. Using a multilayer load balancing architecture, several virtual machines with identical functions are deployed in each of the application layer, middle layer, and database layer, and a fault monitoring module is deployed on each virtual machine. A virtual machine may suffer a fault such as its file system becoming read-only. When that happens, its fault monitoring module automatically shuts the virtual machine down, isolating that node, and reports the fault to a management center. The management center then redirects the faulty virtual machine's access requests to another corresponding virtual machine. A fault in, or deletion of, any main virtual machine does not interrupt the running service system. As a result, the failure of a single main virtual machine cannot affect the whole cloud computing platform, services stay uninterrupted, and both the user experience and the performance of the overall system improve. The invention also discloses a cloud computing system.

Owner:SHANGHAI BAOSIGHT SOFTWARE CO LTD +1

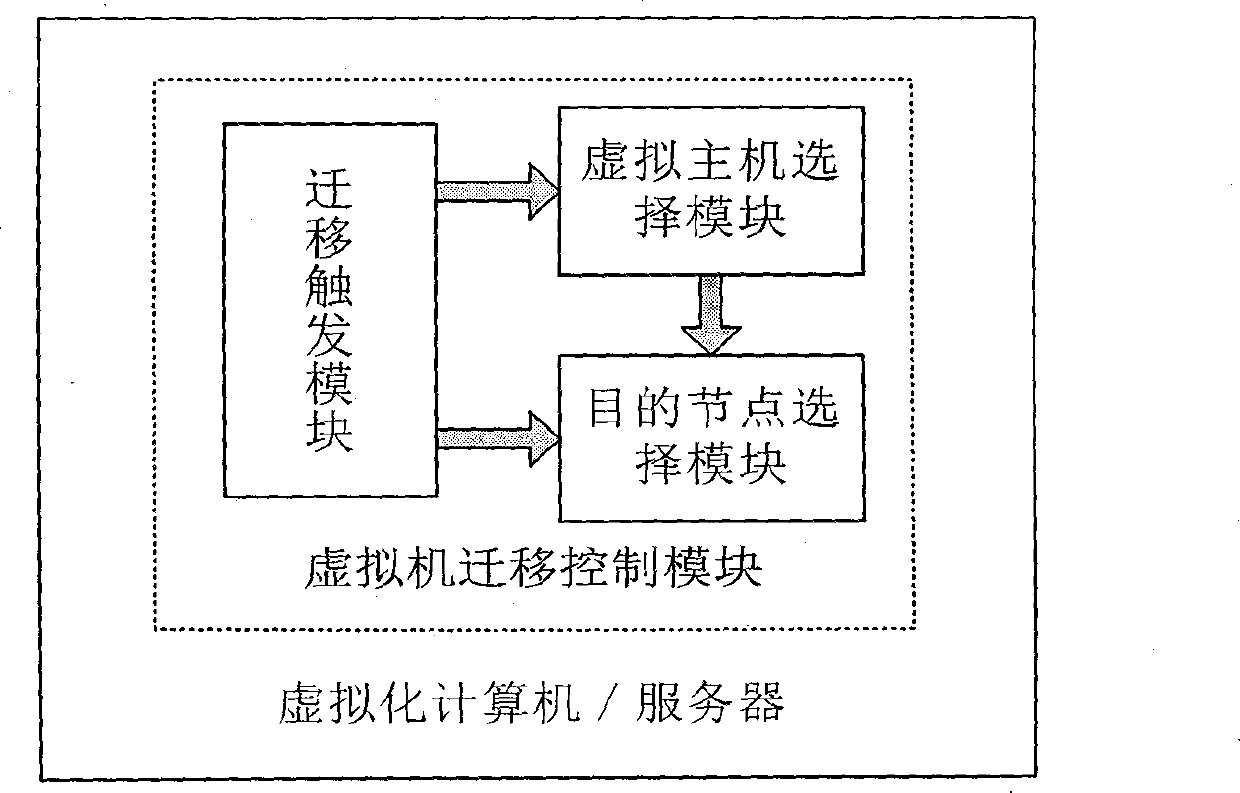

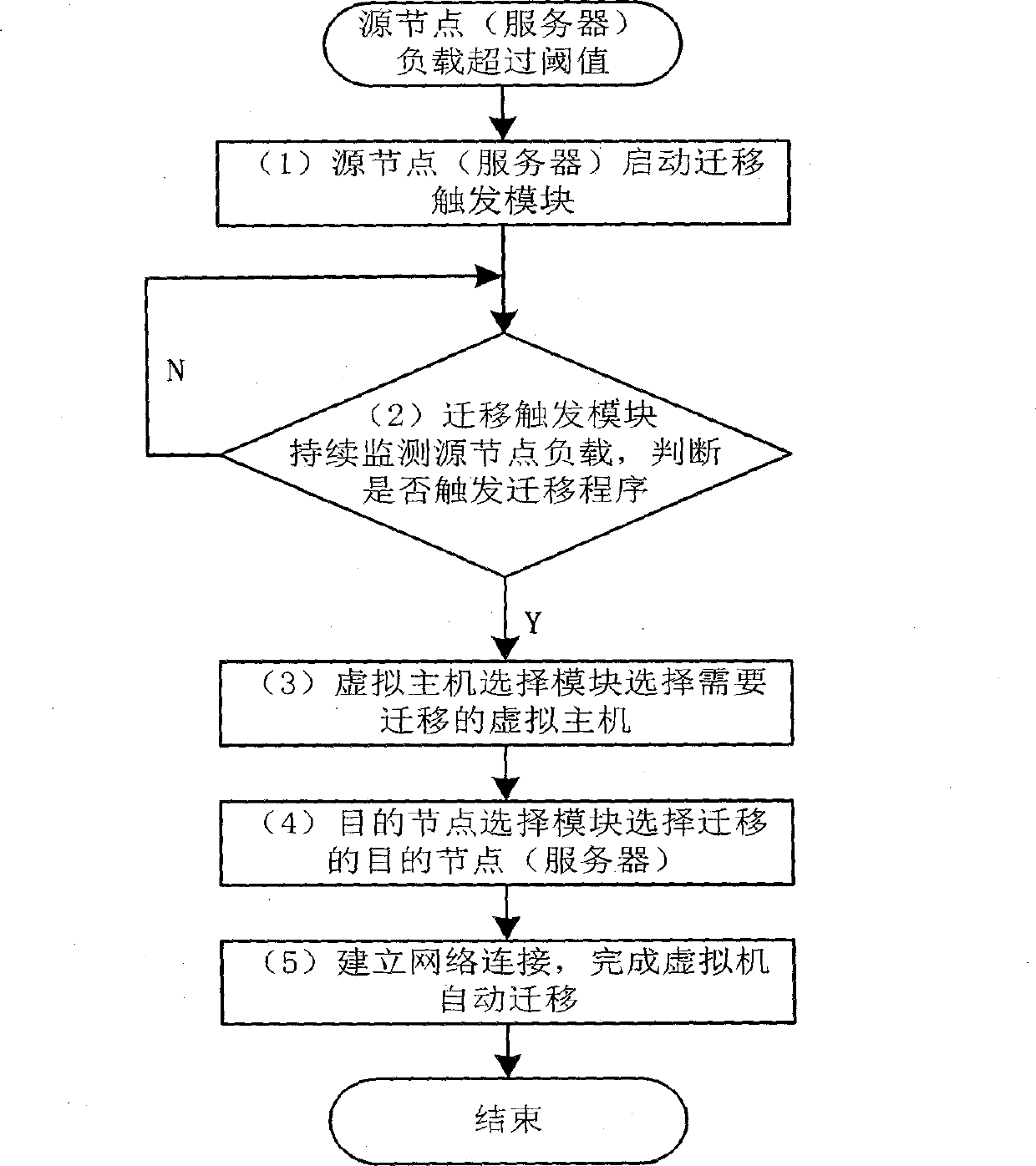

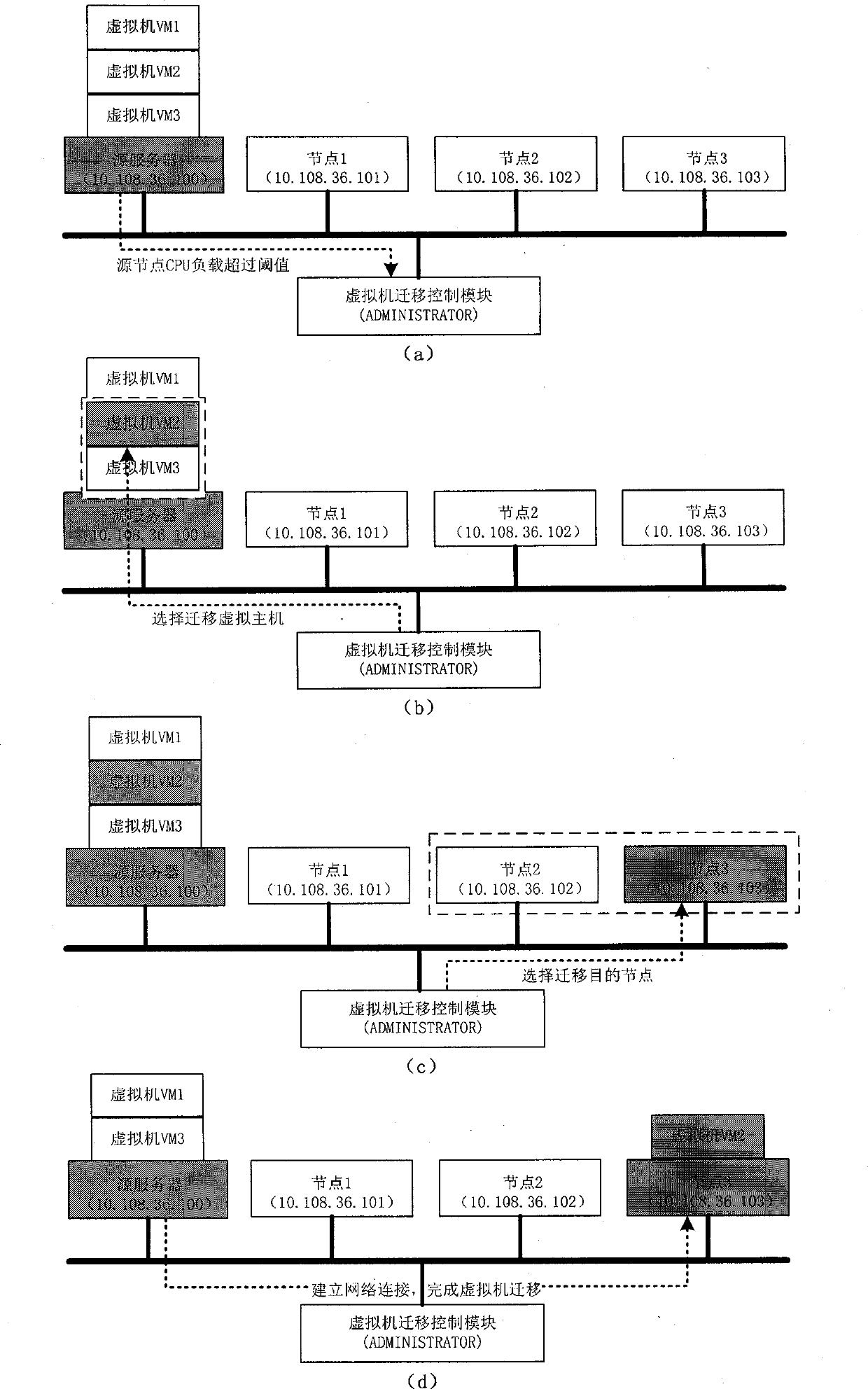

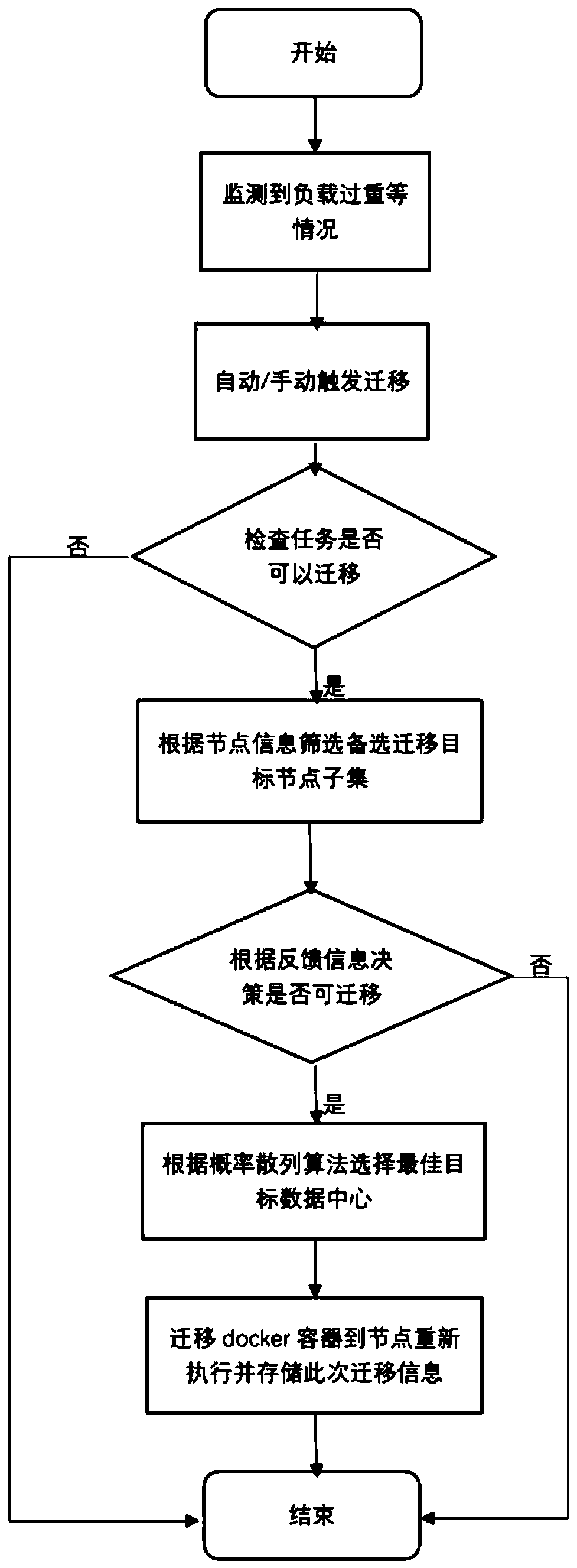

Decision method and control module for virtual machine migration in cloud computing

The invention provides a decision method and a control module for virtual machine migration in cloud computing. The control module consists of a migration trigger module, a virtual machine selection module, and a destination node selection module. The method works in three stages:

1. The migration trigger module sets the system's load threshold and uses load forecasting so that momentary load peaks do not trigger unnecessary virtual machine migrations.
2. The virtual machine selection module chooses the virtual machine to migrate according to a minimum-migration-cost strategy, minimizing migration cost to save system resources.
3. The destination node selection module chooses the destination node with a weighted probabilistic selection algorithm. This effectively avoids the aggregation collisions caused by migrating a large number of virtual machines.

Overall, the decision method achieves good load balancing and lets resources be used fairly and reasonably.

Owner:BEIJING UNIV OF POSTS & TELECOMM
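
This is a minimal sketch of the three decision stages. The patent does not specify the forecasting technique, cost model or weights, so each is a stand-in assumption:
- Forecast: a moving average over the last few load samples.
- Migration cost: the VM's memory size.
- Destination weight: each host's free capacity.

```python
import random


def should_migrate(load_history, threshold=0.8, window=3):
    """Trigger only if the forecast load (mean of the last `window` samples)
    exceeds the threshold, so a single spike does not cause a migration."""
    recent = load_history[-window:]
    return sum(recent) / len(recent) > threshold


def choose_vm(vms):
    """Pick the VM with the smallest assumed migration cost (memory in MB)."""
    return min(vms, key=lambda vm: vm["memory_mb"])


def choose_destination(hosts, rng=random):
    """Weighted random choice: hosts with more free capacity are likelier picks,
    which spreads simultaneous migrations instead of piling onto one host."""
    candidates = [h for h in hosts if h["free"] > 0]
    weights = [h["free"] for h in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]
```

Randomizing the destination matters when many VMs migrate at once. A deterministic "pick the emptiest host" rule would send all of them to the same node.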

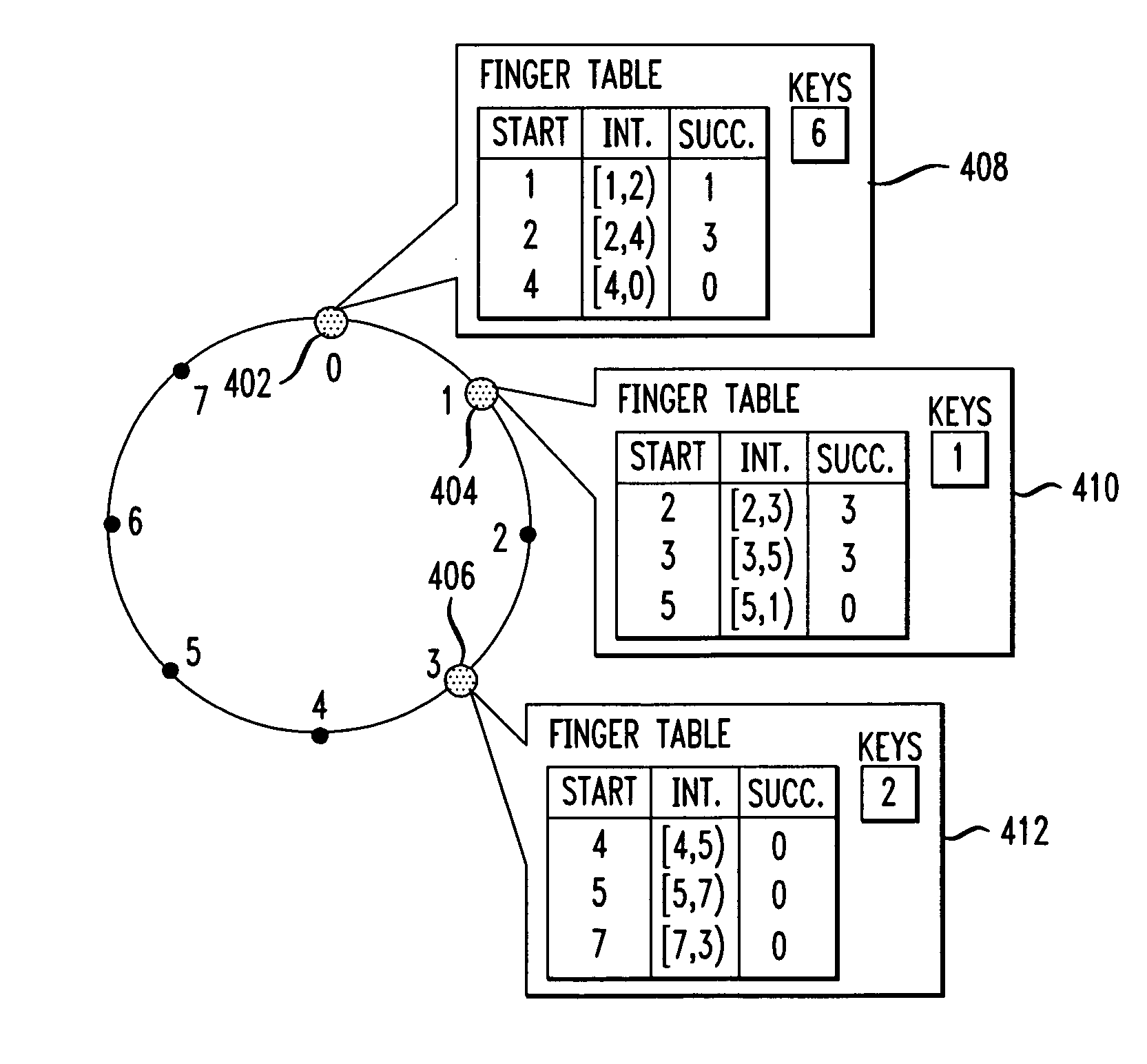

Method and apparatus for distributed indexing

Status: Inactive | Publication: US20070079004A1 | Tags: Improve technique, Digital computer details, Program control, Distributed computing, Grid computing

Disclosed is a method and apparatus for providing range based queries over distributed network nodes. Each of a plurality of distributed network nodes stores at least a portion of a logical index tree. The nodes of the logical index tree are mapped to the network nodes based on a hash function. Load balancing is addressed by replicating the logical index tree nodes in the distributed physical nodes in the network. In one embodiment the logical index tree comprises a plurality of logical nodes for indexing available resources in a grid computing system. The distributed network nodes are broker nodes for assigning grid computing resources to requesting users. Each of the distributed broker nodes stores at least a portion of the logical index tree.

Owner:NEC LAB AMERICA
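
This is a minimal sketch of the mapping and replication step only; range query processing is not shown.
- Hashing: SHA-256 stands in for the unspecified hash function.
- Replica placement: copies go on the following nodes of a ring.
- Lookups: these rotate across replicas to spread load.
- Naming: `DistributedIndexMap` is a hypothetical name.

```python
import hashlib
import itertools


class DistributedIndexMap:
    """Maps logical index-tree nodes to physical broker nodes by hashing,
    keeping `replicas` copies so lookups can be spread across them."""

    def __init__(self, physical_nodes, replicas=2):
        self.nodes = list(physical_nodes)
        self.replicas = min(replicas, len(self.nodes))
        self._rr = itertools.count()  # rotates lookups across replicas

    def _slot(self, logical_id):
        digest = hashlib.sha256(logical_id.encode()).digest()
        return int.from_bytes(digest[:8], "big") % len(self.nodes)

    def placement(self, logical_id):
        # Primary copy at the hashed slot, replicas on the following nodes.
        start = self._slot(logical_id)
        return [self.nodes[(start + i) % len(self.nodes)] for i in range(self.replicas)]

    def route_lookup(self, logical_id):
        holders = self.placement(logical_id)
        return holders[next(self._rr) % len(holders)]
```

Replication matters most for nodes near the root of the index tree, because almost every query passes through them.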

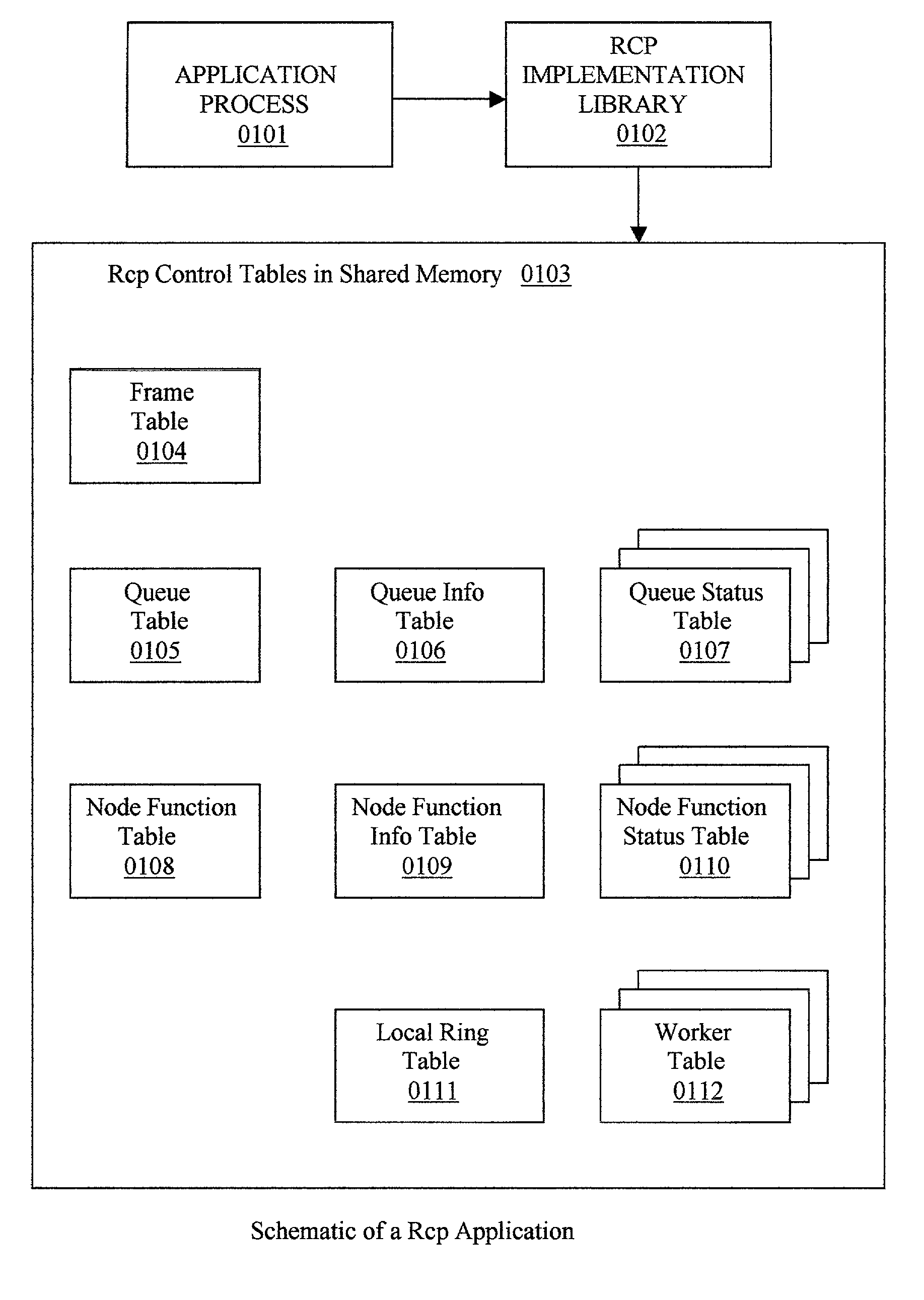

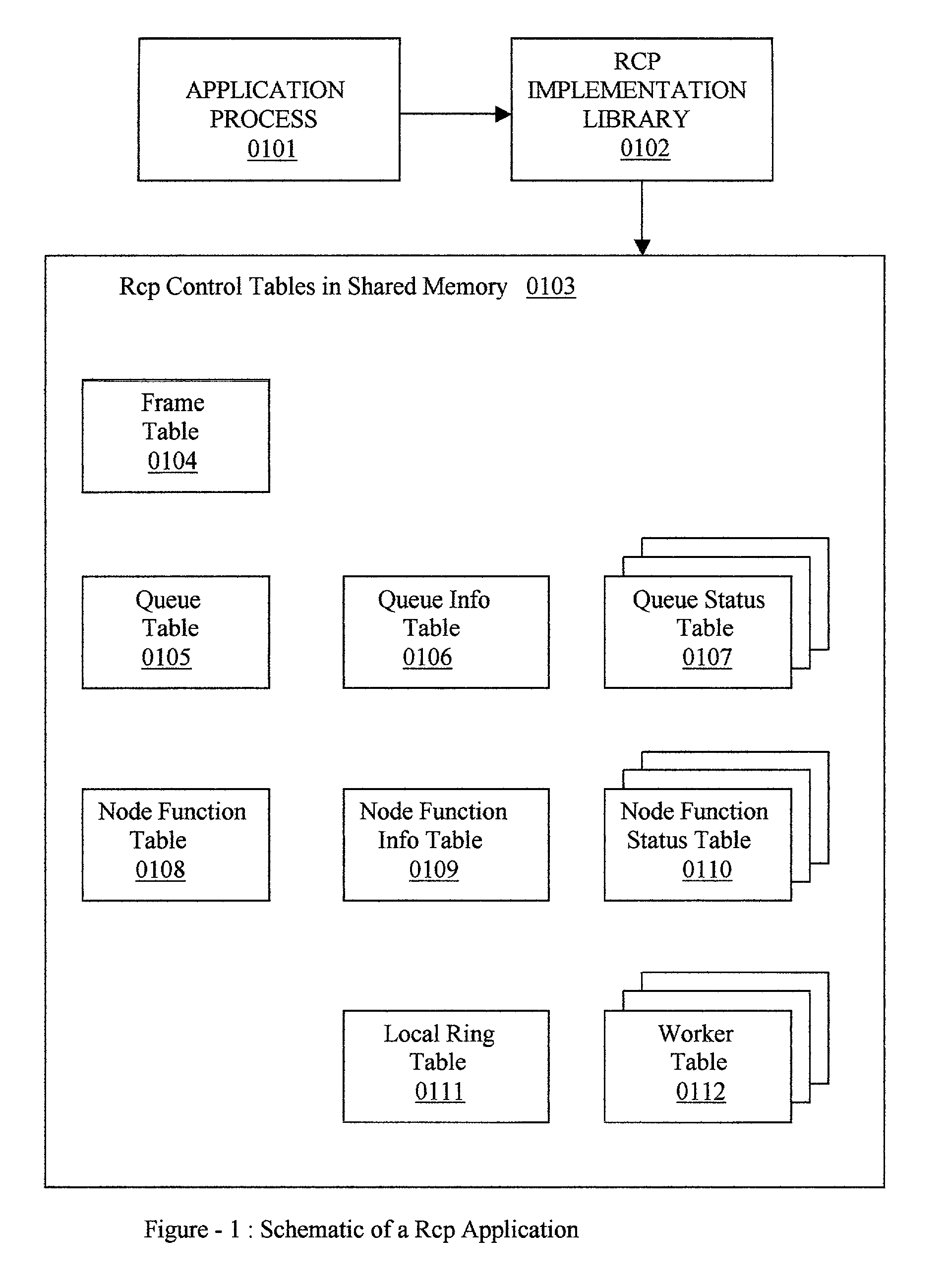

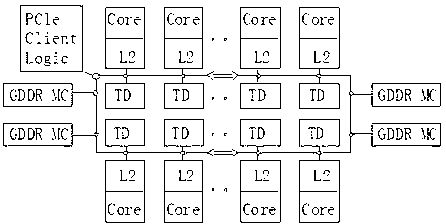

Parallel processing system design and architecture

Status: Inactive | Publication: US20030188300A1 | Tags: Resource allocation, Specific program execution arrangements, Virtualization, Computer architecture

Resource control programming (RCP) is an architecture and design for automating the development of multithreaded applications for computing machines equipped with multiple symmetric processors and shared memory. The Rcp runtime (0102) provides a special class of configurable software device called the Rcp Gate (0600). An Rcp Gate manages inputs, outputs, and user functions with a predefined signature, called node functions (0500). Each Rcp Gate manages one node function, and each node function can have one or more invocations. The inputs and outputs of the node functions are virtualized by means of virtual queues, and the real queues are bound to the node function invocations during execution. Each Rcp Gate computes its efficiency during execution, which determines the efficiency at which its node function invocations run. Depending on that efficiency, the Rcp Gate either schedules more node function invocations or throttles their scheduling. Automatic load balancing of the node functions is thus achieved without any prior knowledge of their load and without computing the time each node function takes.

Owner:PATRUDU PILLA G

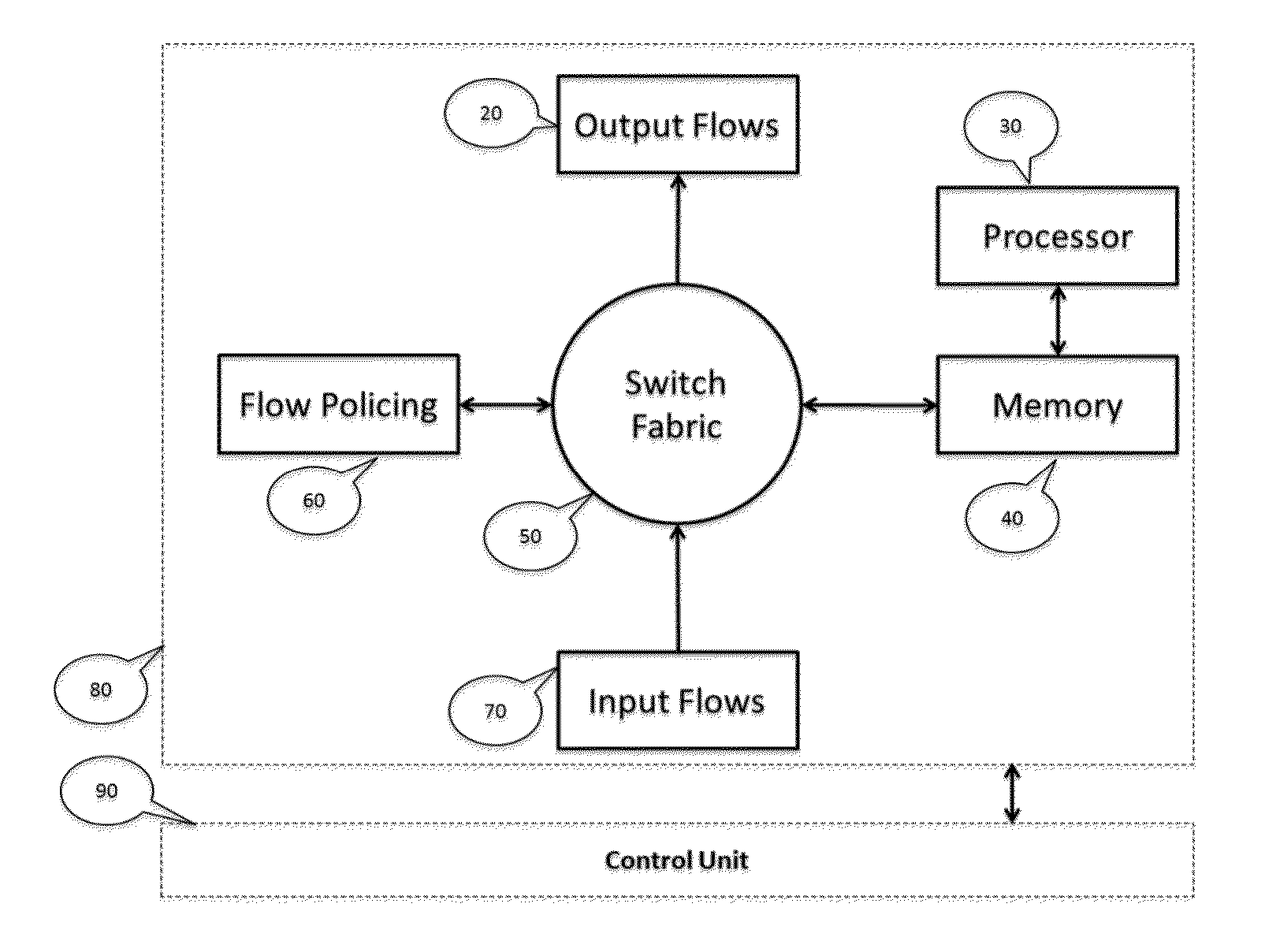

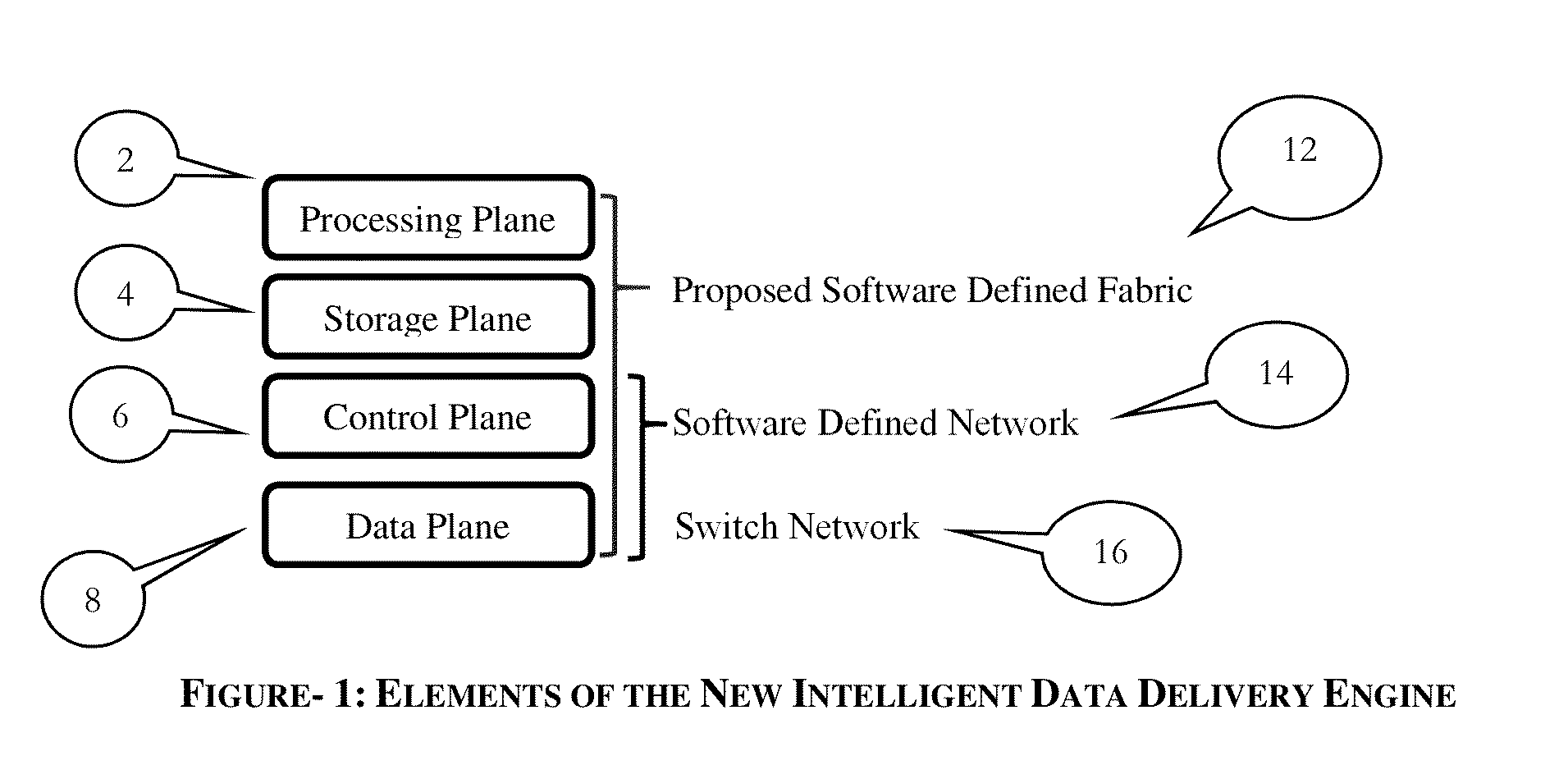

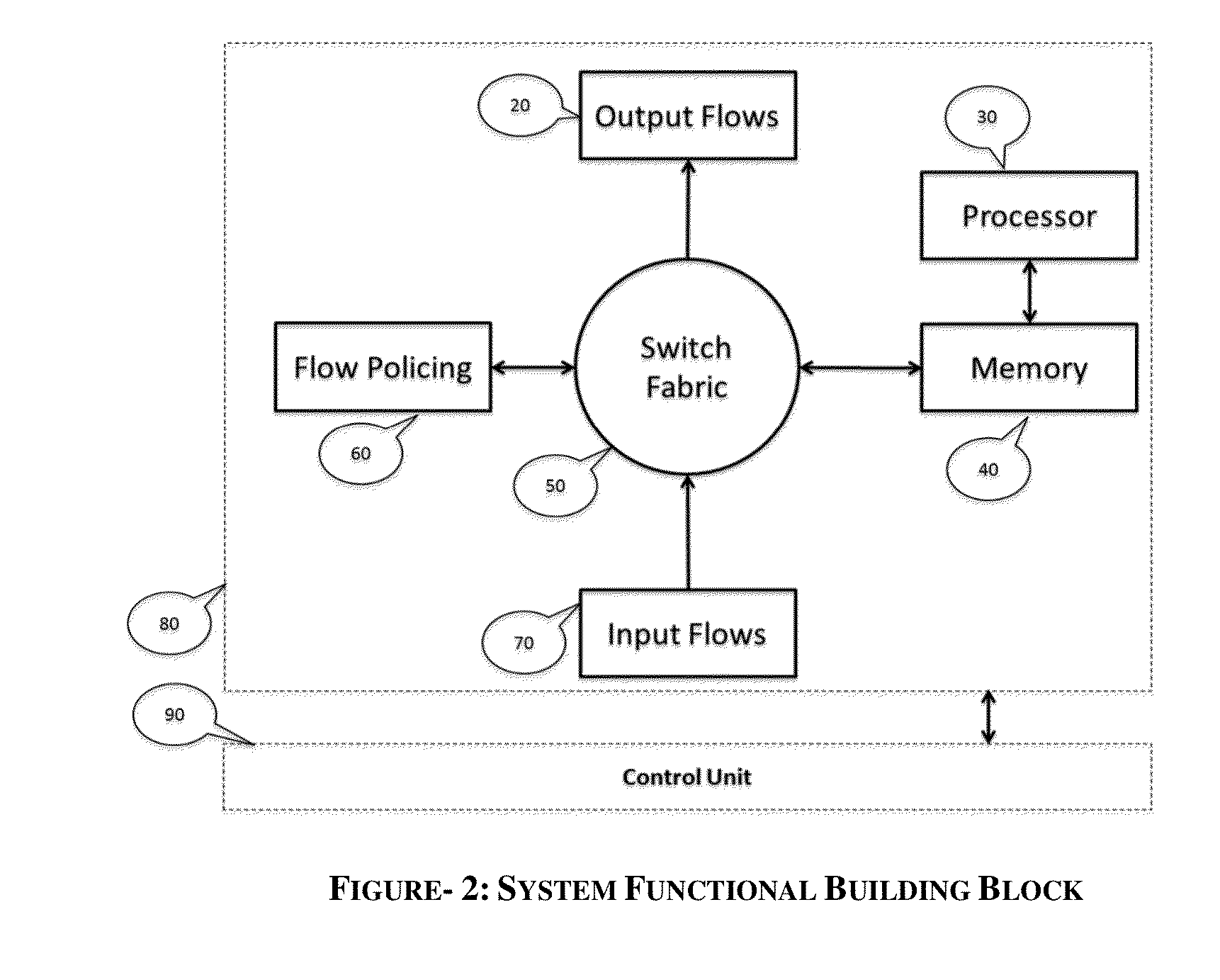

Programmable switching engine with storage, analytic and processing capabilities

Status: Inactive | Publication: US20150063349A1 | Tags: Good variety, Easy to manage, Multiplex system selection arrangements, Circuit switching systems, Structure of Management Information, Multi dimensional

An improvement over the prior art extends an intelligent solution beyond simple IP packet switching. It combines computing, analytics, and storage, and delivers diverse traffic efficiently and intelligently. The result is a flexible programmable network that can store, time-shift, deliver, process, analyze, map, optimize, and switch flows at hardware speed. The same node provides multi-layer functions, scaling for diversified data delivery, scheduling, storage, and processing at much lower cost. This enables multi-dimensional optimization, time-shifted delivery, protocol optimization, traffic profiling, load balancing, traffic classification, and traffic engineering. An integrated high-performance, flexible switching fabric combines computing, memory storage, programmable control, and self-organizing flow control and switching.

Owner:ARDALAN SHAHAB +1

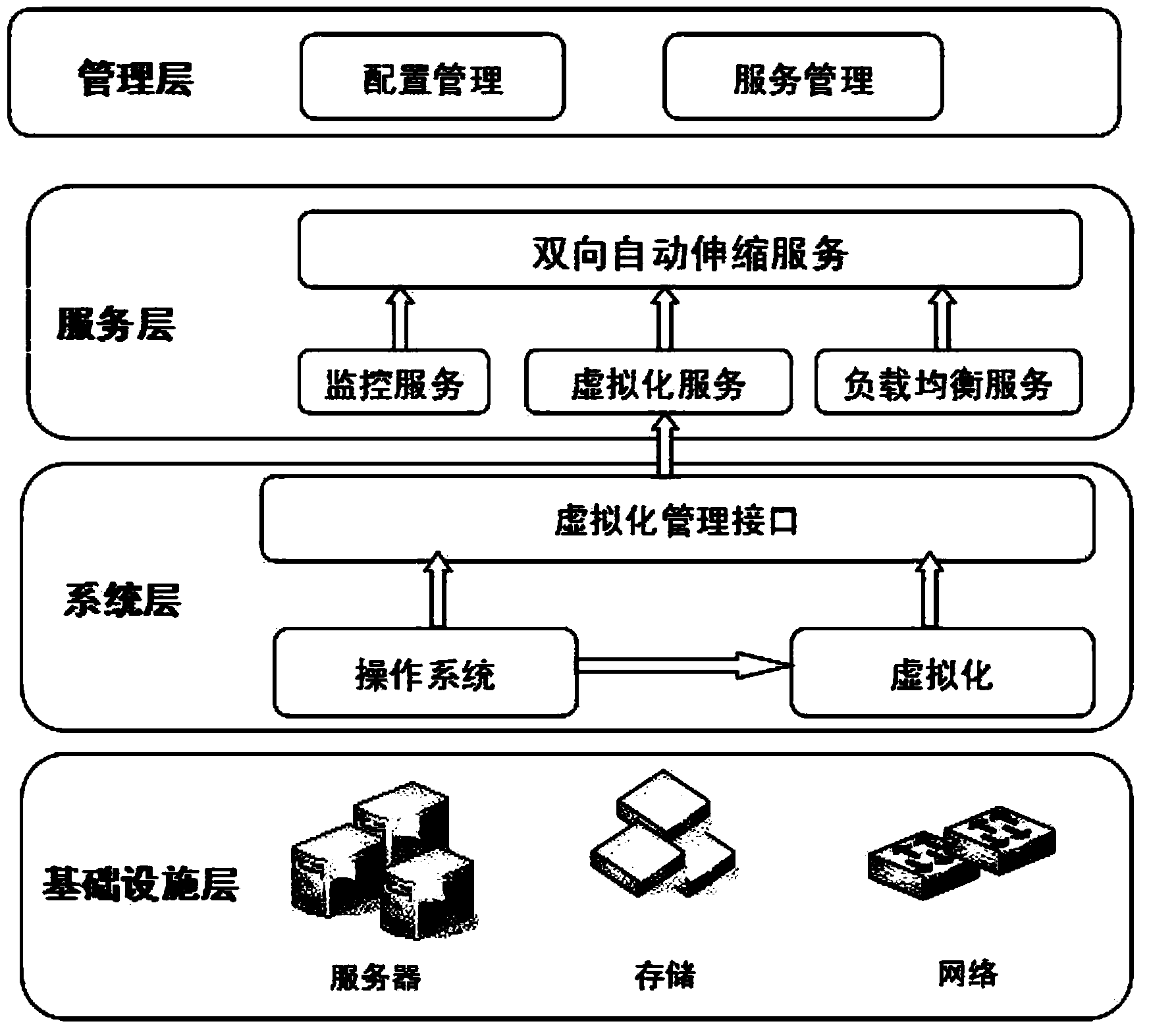

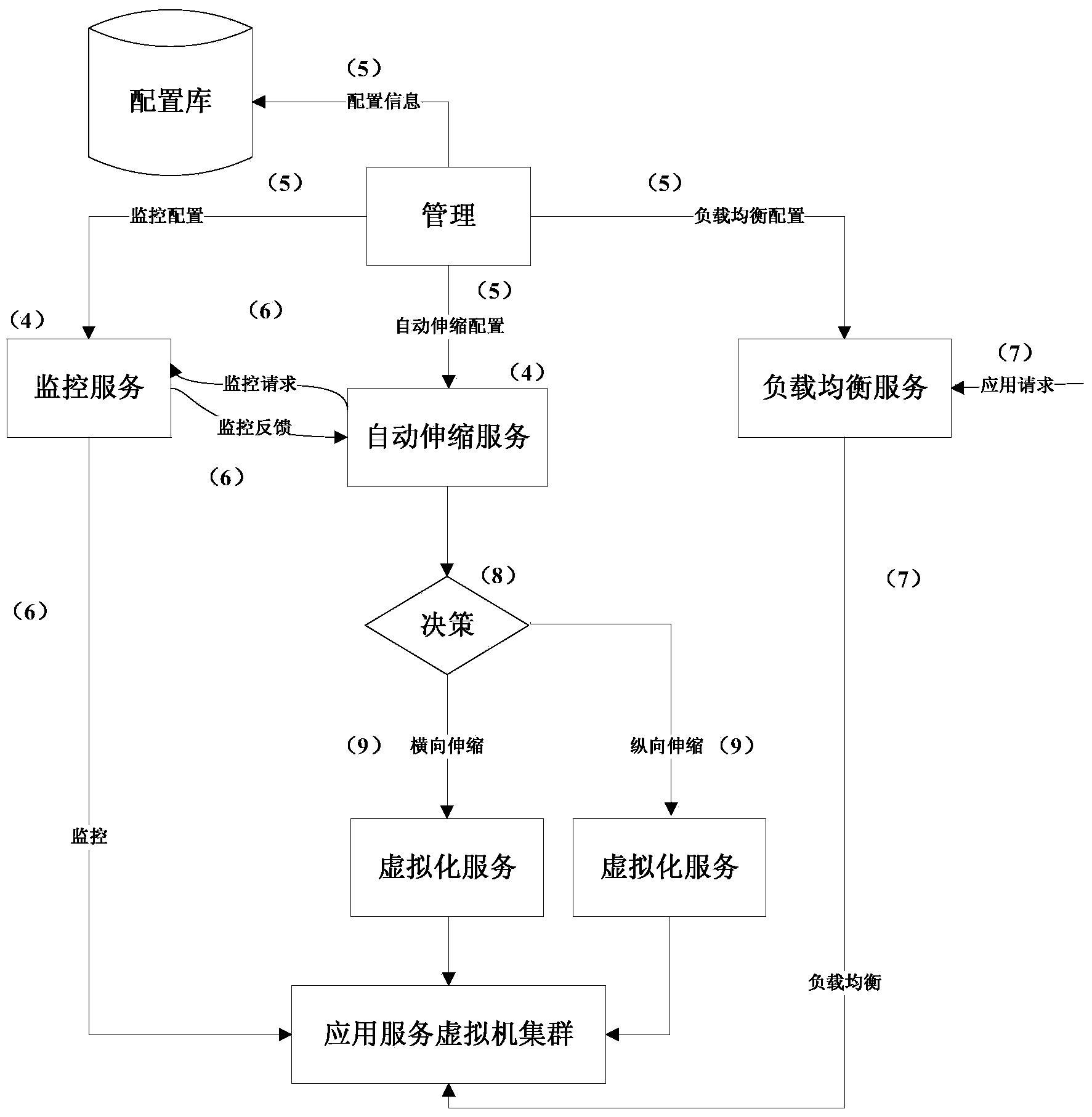

Method and system for implementing bidirectional auto scaling service of virtual machines

Status: Active | Publication: CN103559072A | Tags: Increase or decrease quantity, Quantity automatically adjusted, Transmission, Software simulation/interpretation/emulation, Virtualization, Start stop

The invention discloses a method and a system for implementing a bidirectional auto-scaling service for virtual machines. The method works as follows:

1. The bidirectional auto-scaling service requests monitoring data from a monitoring service, which monitors the clusters of application-service virtual machines and returns the data.
2. Meanwhile, a load balancing service distributes application requests across the application-service virtual machines.
3. Based on configuration parameters and the returned monitoring data, the auto-scaling service decides whether to scale the virtual machine cluster, and whether to do so in transverse (horizontal) or longitudinal (vertical) mode.
4. When scaling is required, it is performed by calling virtualization service interfaces. The transverse mode starts and stops virtual machines. The longitudinal mode sequentially and dynamically adjusts the computing and storage resources of the virtual machines in order of their serial numbers.

Through this bidirectional auto scaling, the method and system can automatically adjust both the resources of each virtual machine and the number of virtual machines. This makes them more flexible in serving applications and supporting their operation in every direction.

Owner:Wuxi Zhongke Fangde Software Co., Ltd. (无锡中科方德软件有限公司)
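
This is a minimal sketch of one bidirectional scaling decision. The abstract does not say how the service chooses between the transverse and longitudinal modes, so the sketch assumes a hypothetical policy:
- Scaling up: first scale vertically, adding a vCPU to running VMs in serial-number order until each reaches `max_vcpus`. Only then scale out by starting a stopped VM.
- Scaling down: follow the reverse order.
- Naming: the thresholds and the `VM`/`autoscale` names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class VM:
    serial: int
    vcpus: int
    running: bool = True


def autoscale(vms, avg_cpu, high=0.8, low=0.3, max_vcpus=8, min_vcpus=1):
    """Apply one scaling step and return a description of it (hypothetical policy)."""
    running = sorted((v for v in vms if v.running), key=lambda v: v.serial)
    stopped = sorted((v for v in vms if not v.running), key=lambda v: v.serial)
    if avg_cpu > high:
        # Longitudinal (vertical) first, in serial-number order.
        for vm in running:
            if vm.vcpus < max_vcpus:
                vm.vcpus += 1
                return f"vertical-up vm{vm.serial}"
        # Then transverse (horizontal): start a stopped VM.
        if stopped:
            stopped[0].running = True
            return f"horizontal-up vm{stopped[0].serial}"
    elif avg_cpu < low:
        # Reverse order: stop surplus VMs first, then shrink the survivor.
        if len(running) > 1:
            running[-1].running = False
            return f"horizontal-down vm{running[-1].serial}"
        for vm in reversed(running):
            if vm.vcpus > min_vcpus:
                vm.vcpus -= 1
                return f"vertical-down vm{vm.serial}"
    return "none"
```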

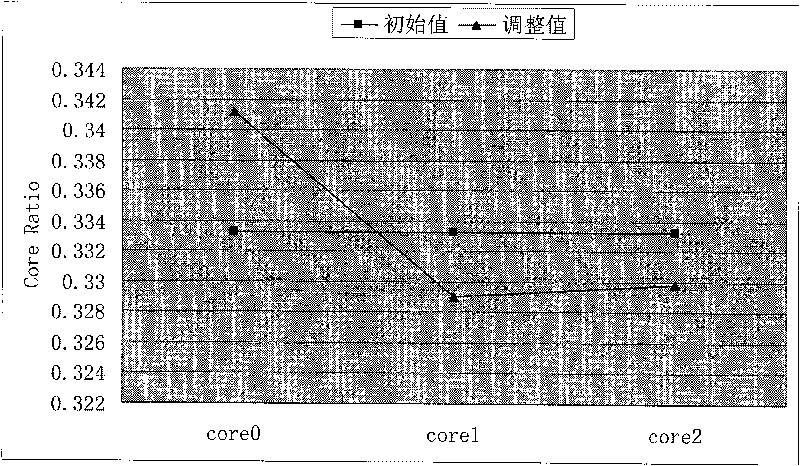

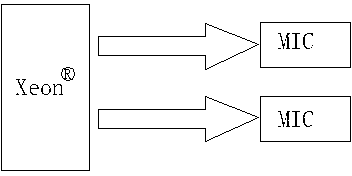

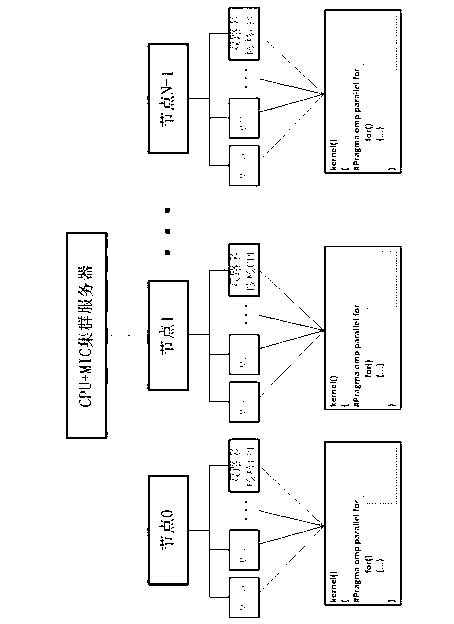

Load balancing optimization method based on CPU (central processing unit) and MIC (many integrated core) framework processor cooperative computing

Status: Inactive | Publication: CN103279391A | Tags: Improve computing efficiency, Improve performance, Resource allocation, Computer architecture, Thread scheduling

The invention provides a load balancing optimization method for cooperative computing with CPU (central processing unit) and MIC (many integrated core) architecture processors. It optimizes load balancing at three levels: among computing nodes, between the CPU and MIC computing devices within a computing node, and among the computing cores inside the CPU and MIC devices. The method specifically includes task partitioning optimization, process/thread scheduling optimization, thread affinity optimization, and the like. It applies to software optimization of CPU and MIC cooperative computing. It guides software developers to optimize and modify existing CPU/MIC cooperative-computing software for load balancing in a scientific, effective, and systematic way. As a result, the software makes maximal use of computing resources, and its computing efficiency and overall performance improve markedly.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

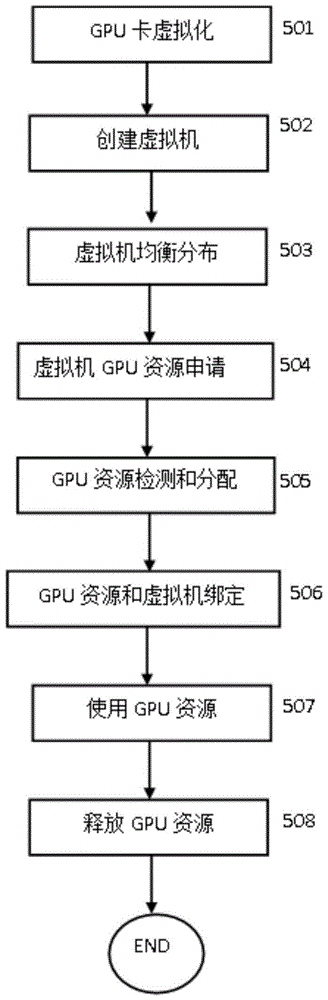

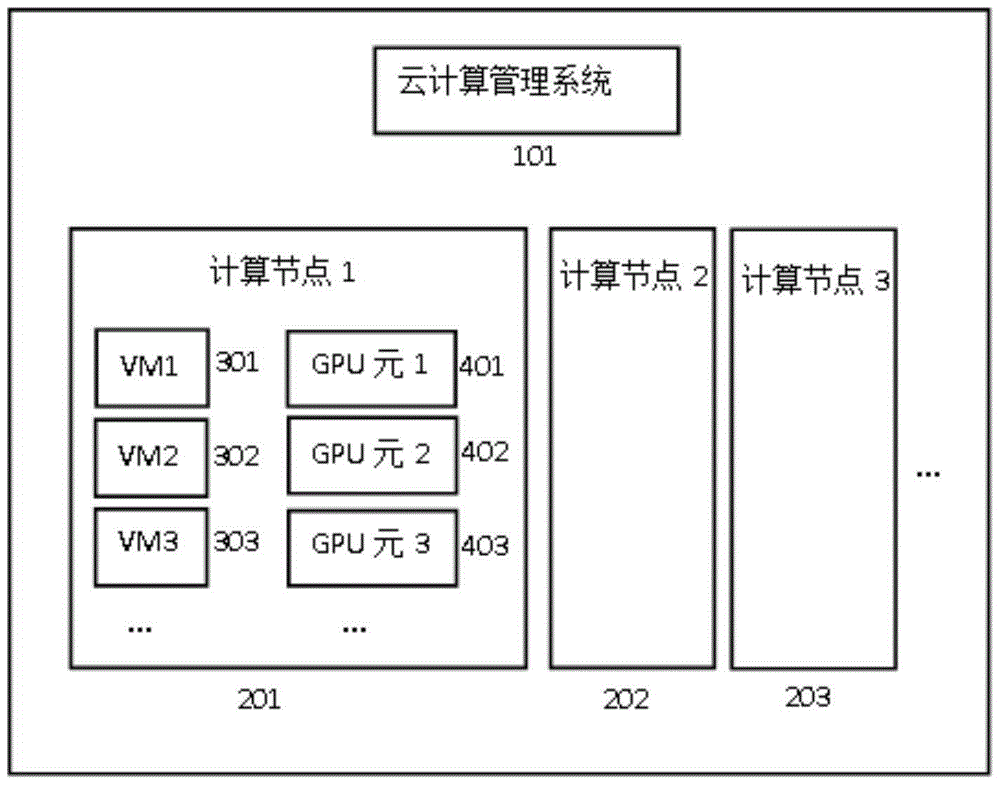

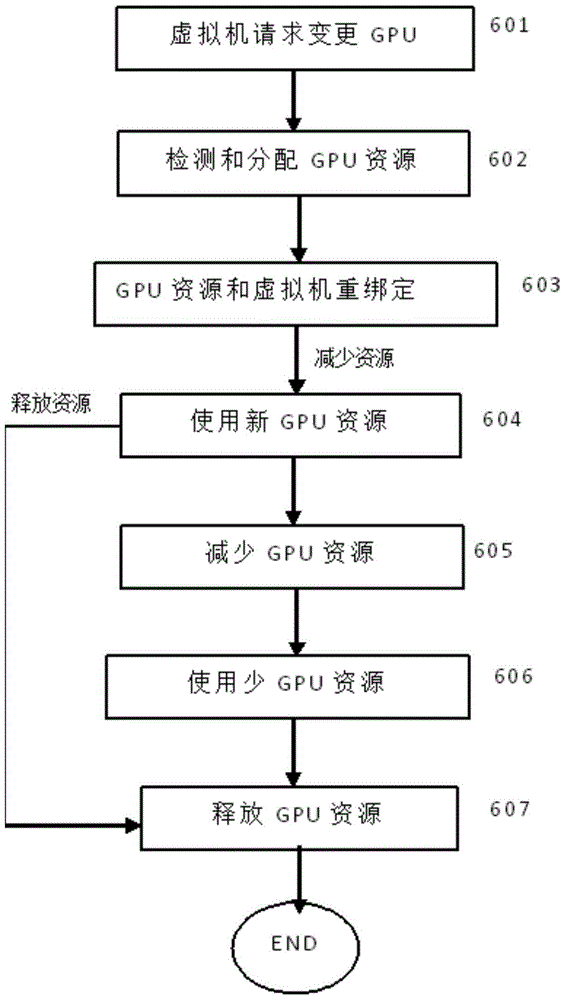

Method and system for cloud computing system to allocate GPU resources to virtual machine

Status: Inactive | Publication: CN105242957A | Tags: Easy to use, Load balancing, Resource allocation, Software simulation/interpretation/emulation, Resource pool, High-definition video

The present invention provides a method and a system for a cloud computing system to allocate GPU resources to a virtual machine. With this method, a virtual machine does not need to hold GPU resources permanently. When it needs to perform high-definition video processing or 3D rendering, it applies to the cloud computing management system for GPU resources. After the virtual machine finishes using them, the resources are released back to a GPU resource pool, which saves a large amount of GPU resources. The cloud computing management system distributes virtual machines that need GPU resources evenly across computing nodes with GPU cards, according to the GPU usage identifiers created for the virtual machines. This achieves load balancing and optimized GPU resource usage.

Owner:Guangzhou Yunzhuo Information Technology Co., Ltd. (广州云晫信息科技有限公司)

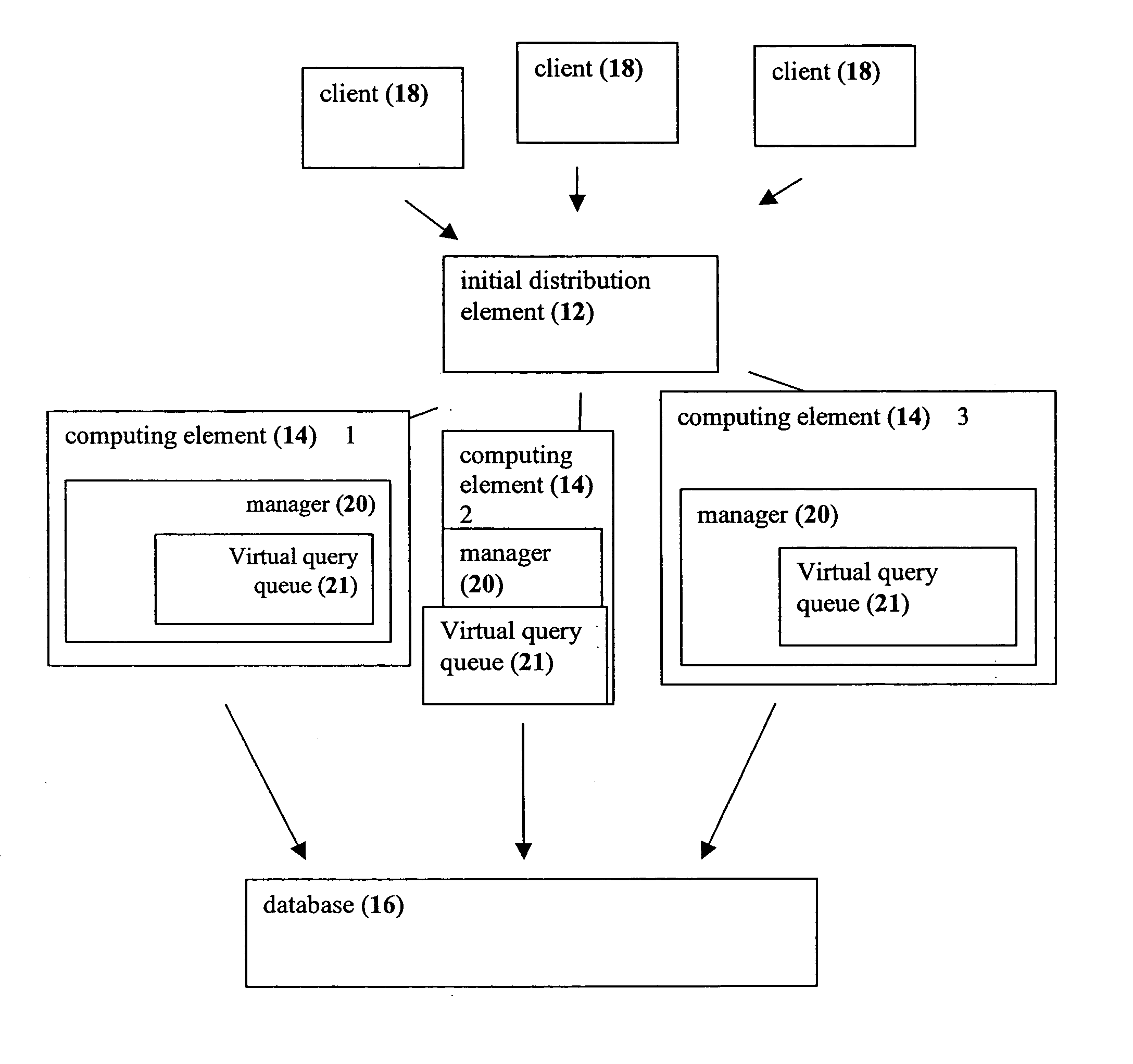

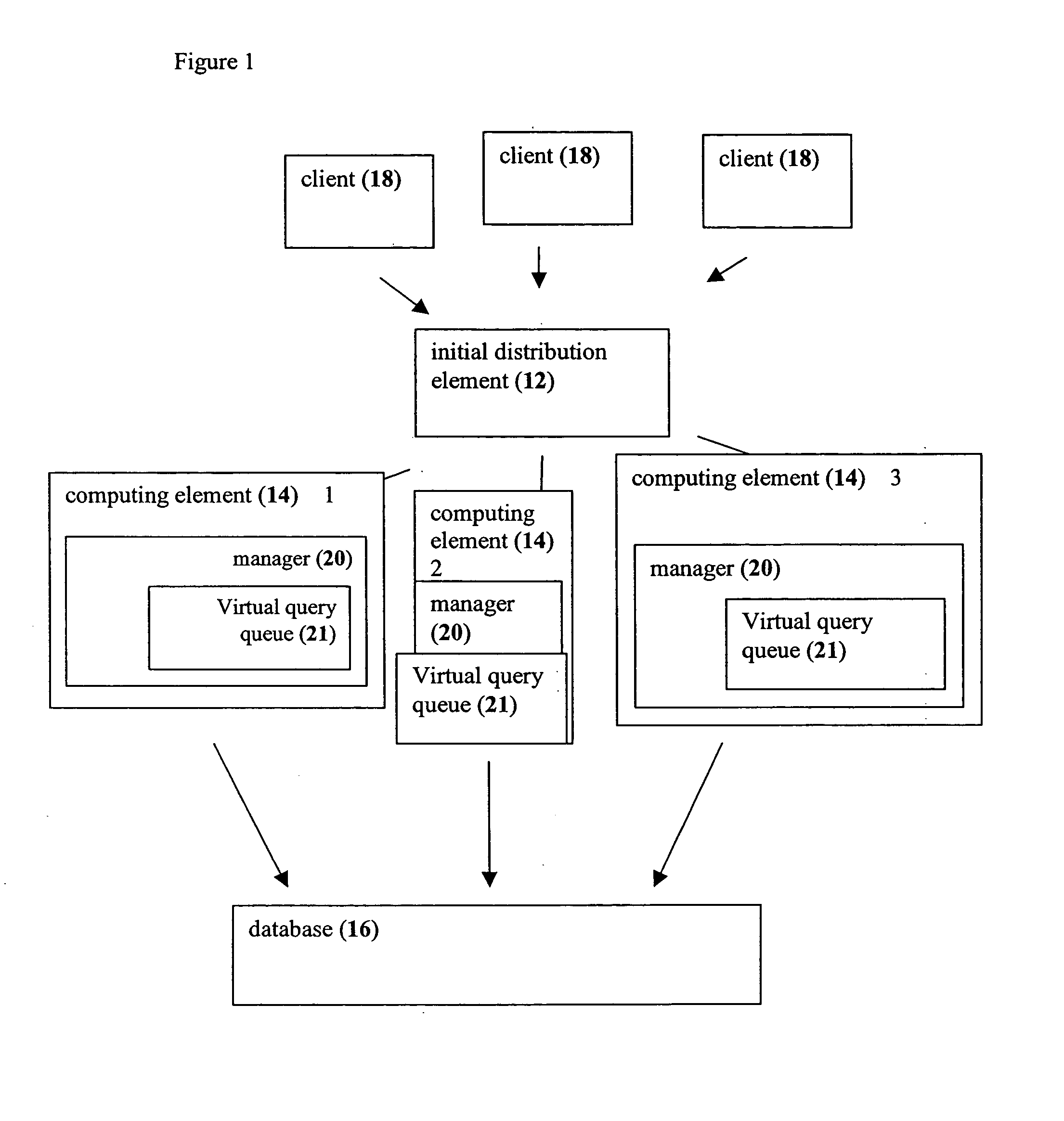

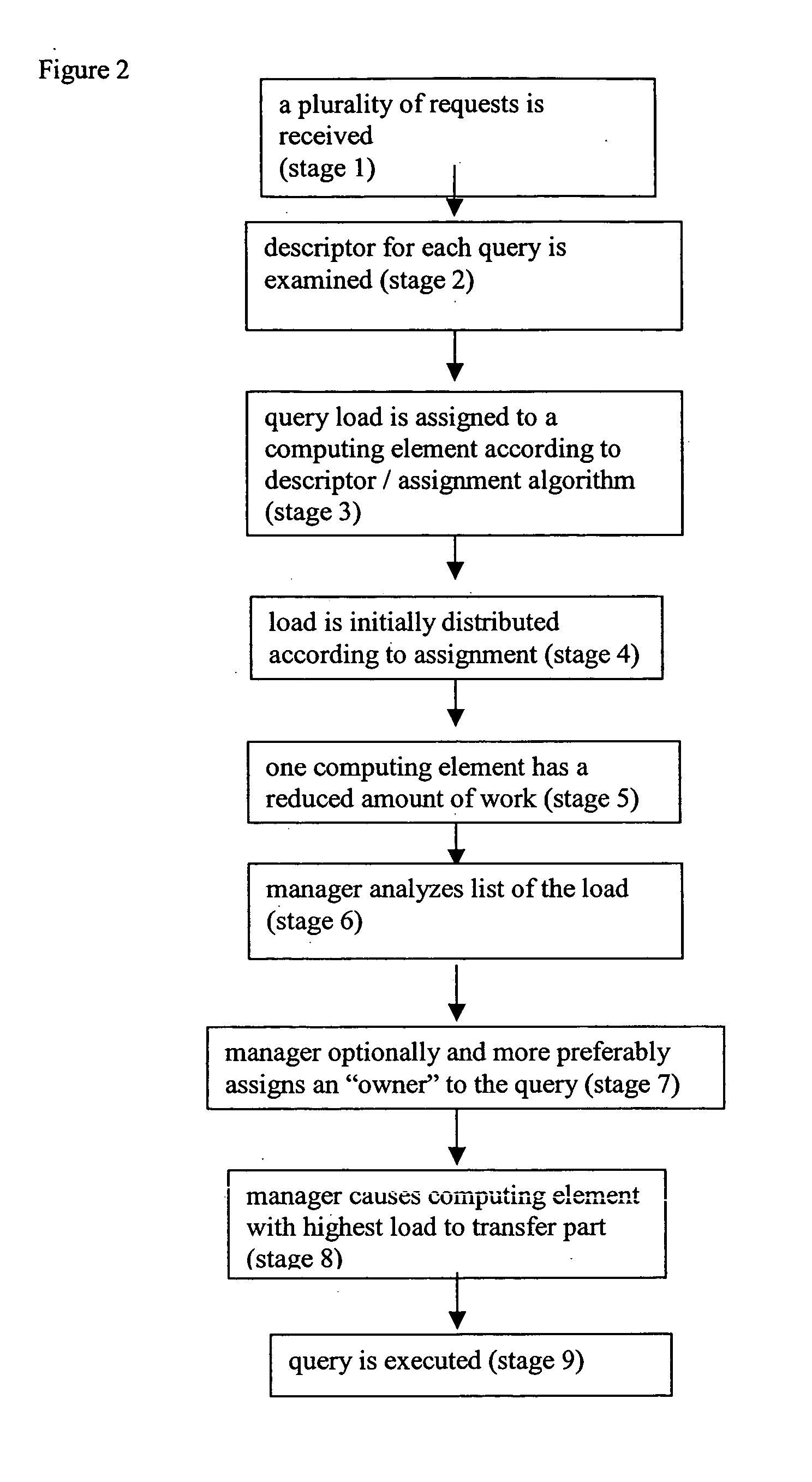

System and method for load balancing in database queries

Status: Inactive | Publication: US20050021511A1 | Tags: Reduce in quantity, Reduce the number, Digital data information retrieval, Digital data processing details, Database query, Load Shedding

A system and method perform query load balancing in a cluster of middle-tier computing elements. Balancing is based on the load on at least one, and preferably several, of the computing elements in the group, and works by passing pointers to queries between machines together with information or processes pertinent to each query. Optionally, the balancing may be carried out in two stages. The first stage is performed rapidly, preferably by a hardware device such as a switch. It distributes queries to the computing elements either according to at least one descriptor attached to each query or by a simple algorithm. The second stage is preferably performed, as above, by at least one computing element of the group, though it may be performed by several such elements or, alternatively, by a separate computer.

Owner:KCI LICENSING INC +1
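
This is a minimal sketch of the two-stage scheme.
- Stage 1: a switch-like CRC32 hash of a query descriptor stands in for the unspecified fast first stage.
- Stage 2: the assigned element forwards only the query's identifier (its "pointer") to the least-loaded peer, and only if its own queue is longer by more than a hypothetical `slack`.
- Load model: queue lengths stand in for element load.

```python
import zlib


def stage1(query_descriptor, n_elements):
    """Fast first stage: a switch-like hash of the query descriptor."""
    return zlib.crc32(query_descriptor.encode()) % n_elements


def stage2(assigned, loads, query_id, slack=2):
    """Second stage, run by the assigned element.

    If the assigned element is noticeably busier than the least-loaded peer,
    pass the query pointer (its id) on instead of executing it locally.
    `slack` is an assumed tolerance measured in queued queries.
    """
    target = min(range(len(loads)), key=loads.__getitem__)
    if loads[assigned] - loads[target] <= slack:
        target = assigned
    loads[target] += 1
    return target, query_id
```

The cheap first stage never inspects load, so it can run at line rate. The second stage corrects its imbalances by moving only small pointers instead of full query payloads.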

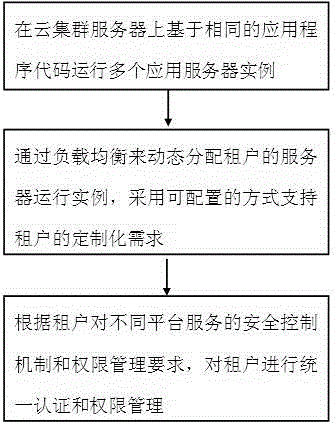

Financial data processing method based on cloud computing

Status: Inactive | Publication: CN103984600A | Tags: Easy to store, Improve security, Finance, Resource allocation, Platform as a service, Multiple applications

The invention provides a cloud-computing-based financial data processing method. The method comprises:
- running multiple application server instances on a cloud server cluster from the same application code;
- dynamically allocating server instances to tenants through load balancing, and supporting tenants' customized requirements through configuration;
- performing unified authentication and authorization management for tenants, according to their requirements for security control and authorization management across the services of different platforms.

The invention thus provides a cloud computing system and method based on PaaS (Platform as a Service), with improved database storage and a unified security authentication and authorization management model. The method is suitable for PaaS platforms in a variety of business fields.

Owner:Fujian Jinri Tejia Network Co., Ltd. (福建今日特价网络有限公司)

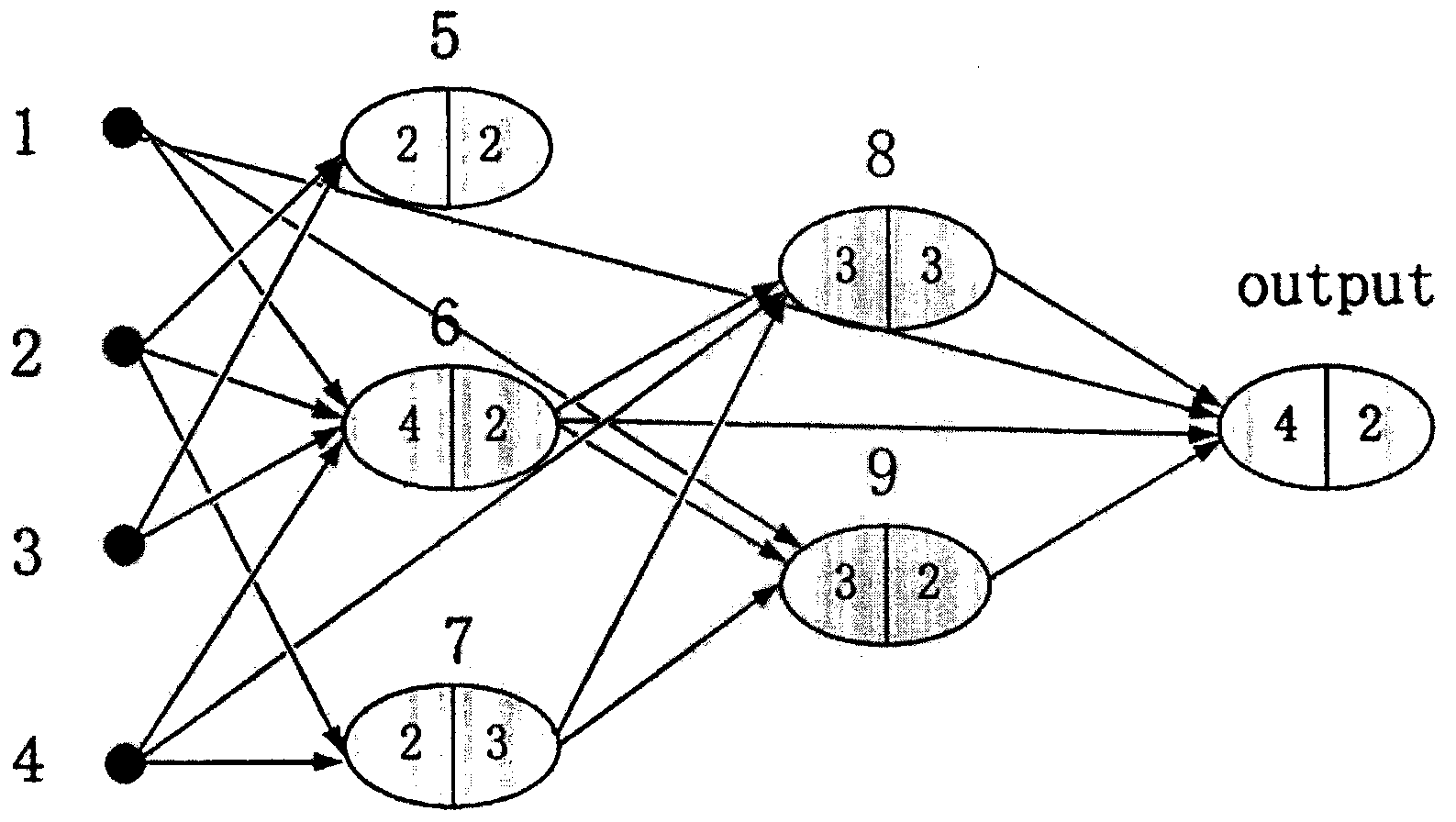

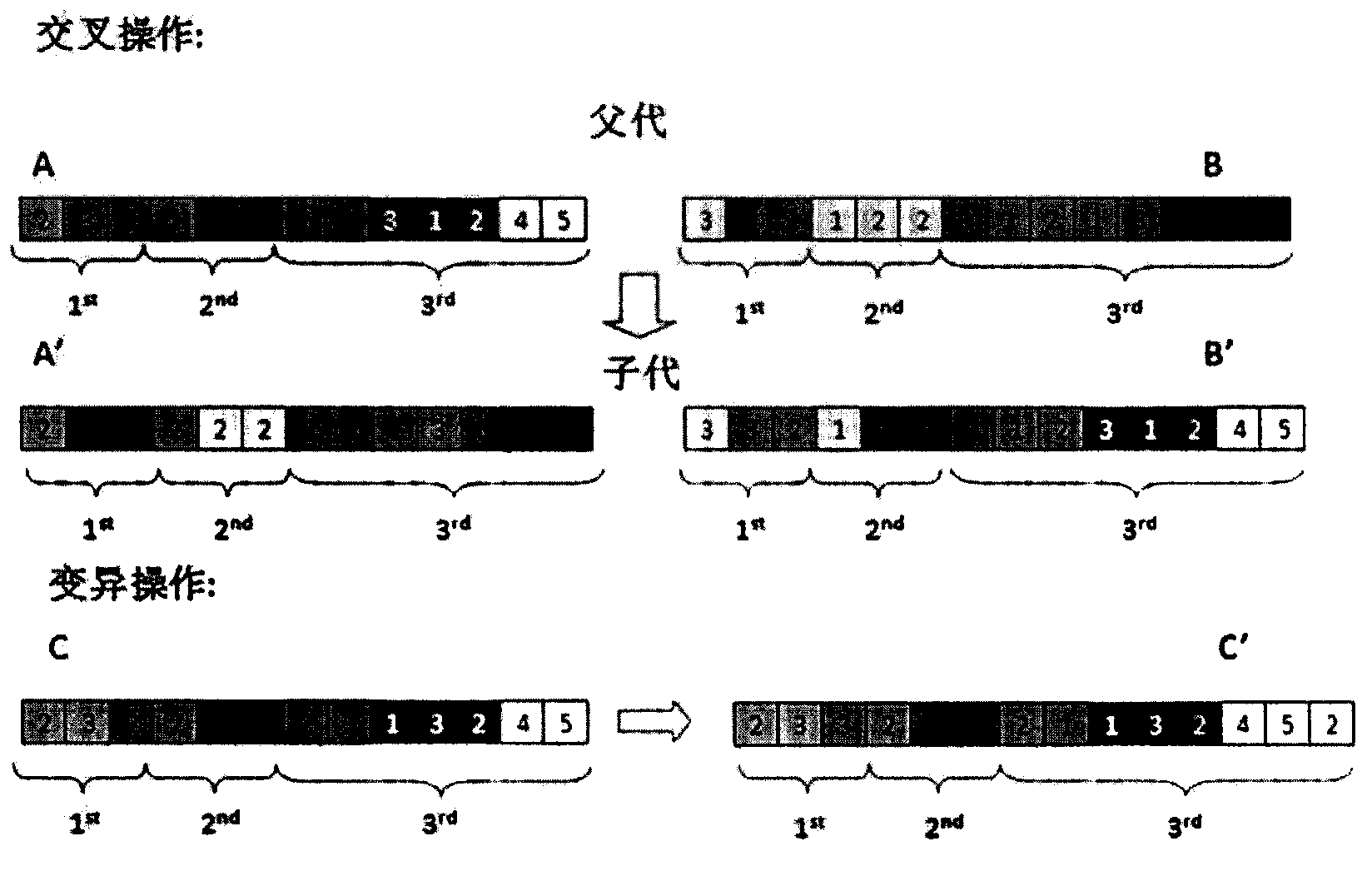

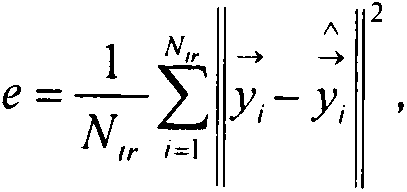

Host load forecasting method in cloud computing environment

The invention provides a host load forecasting method for cloud computing centers, in the field of cloud computing. Virtual machines of different users run on the same host in a cloud computing center, so the host load changes in complex ways. The load must therefore be forecast accurately before virtual machines can be scheduled to achieve load balancing and reduce energy consumption. The core of the algorithm combines the phase-space reconstruction method from chaos theory with a group method of data handling (GMDH) algorithm based on a genetic algorithm. Compared with existing methods, the proposed method achieves a smaller relative error. As the forecasting horizon lengthens, its accumulated forecasting error is also lower than that of traditional methods.

Owner:NANJING UNIV
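
The phase-space reconstruction step can be sketched as a standard time-delay embedding. With embedding dimension m and delay τ, each state is X_i = (x_i, x_{i+τ}, ..., x_{i+(m-1)τ}). The sketch below builds those states and pairs each with the load one step after its last coordinate. Such pairs could train a forecaster. The GA-tuned GMDH model the patent uses is not reproduced, and `embed` and `training_pairs` are hypothetical names.

```python
def embed(series, m, tau):
    """Time-delay embedding: X_i = (x_i, x_{i+tau}, ..., x_{i+(m-1)tau})."""
    span = (m - 1) * tau
    if len(series) <= span:
        raise ValueError("series too short for this embedding")
    return [tuple(series[i + k * tau] for k in range(m)) for i in range(len(series) - span)]


def training_pairs(series, m, tau, horizon=1):
    """Pair each reconstructed state with the value `horizon` steps after its
    last coordinate, dropping states whose target lies past the series end."""
    span = (m - 1) * tau
    states = embed(series, m, tau)
    return [(s, series[i + span + horizon])
            for i, s in enumerate(states) if i + span + horizon < len(series)]
```

For a load series `[0, 1, 2, 3, 4, 5]` with `m=3` and `tau=2`, the states are `(0, 2, 4)` and `(1, 3, 5)`. Only the first has a next value inside the series, so the training set is `[((0, 2, 4), 5)]`.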

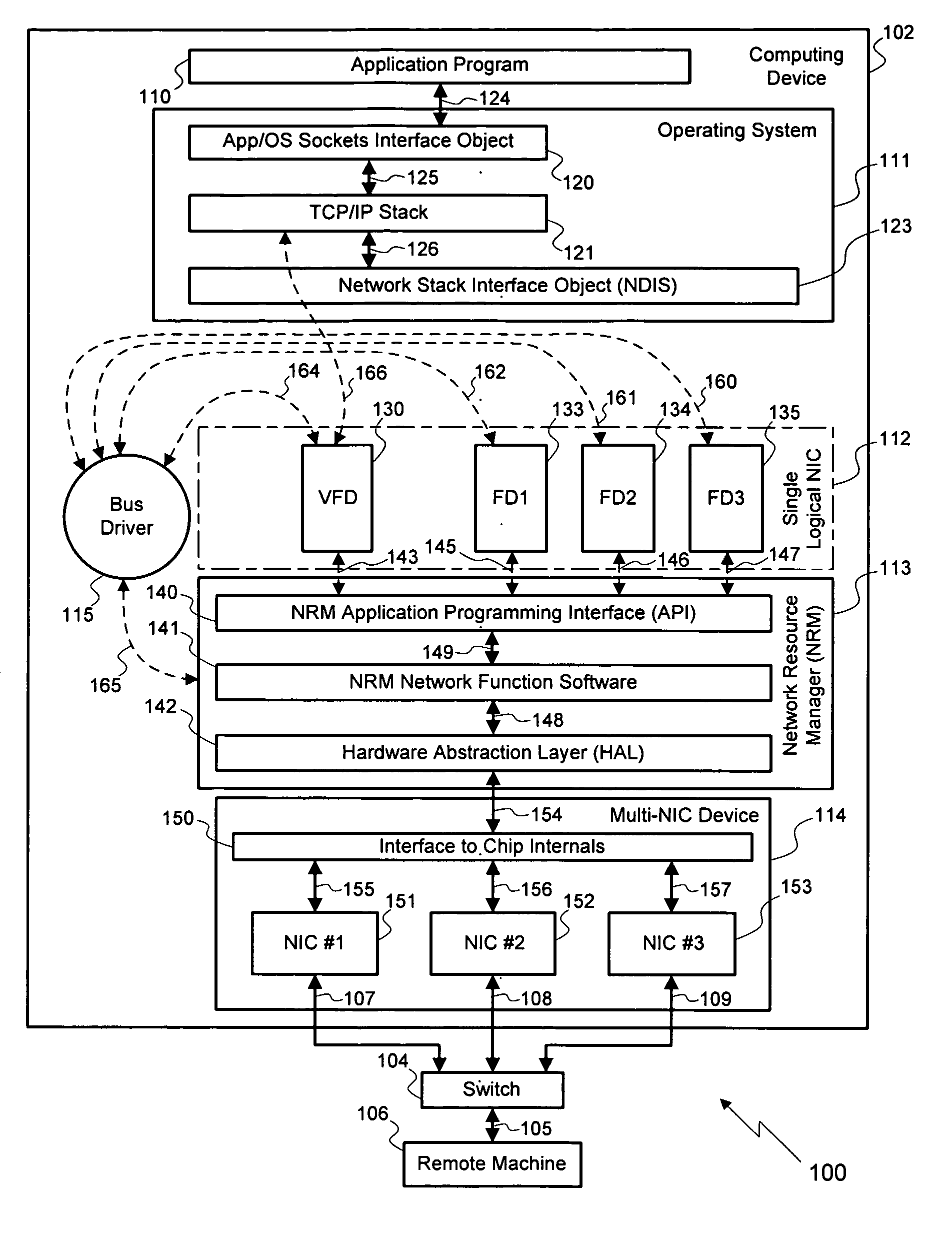

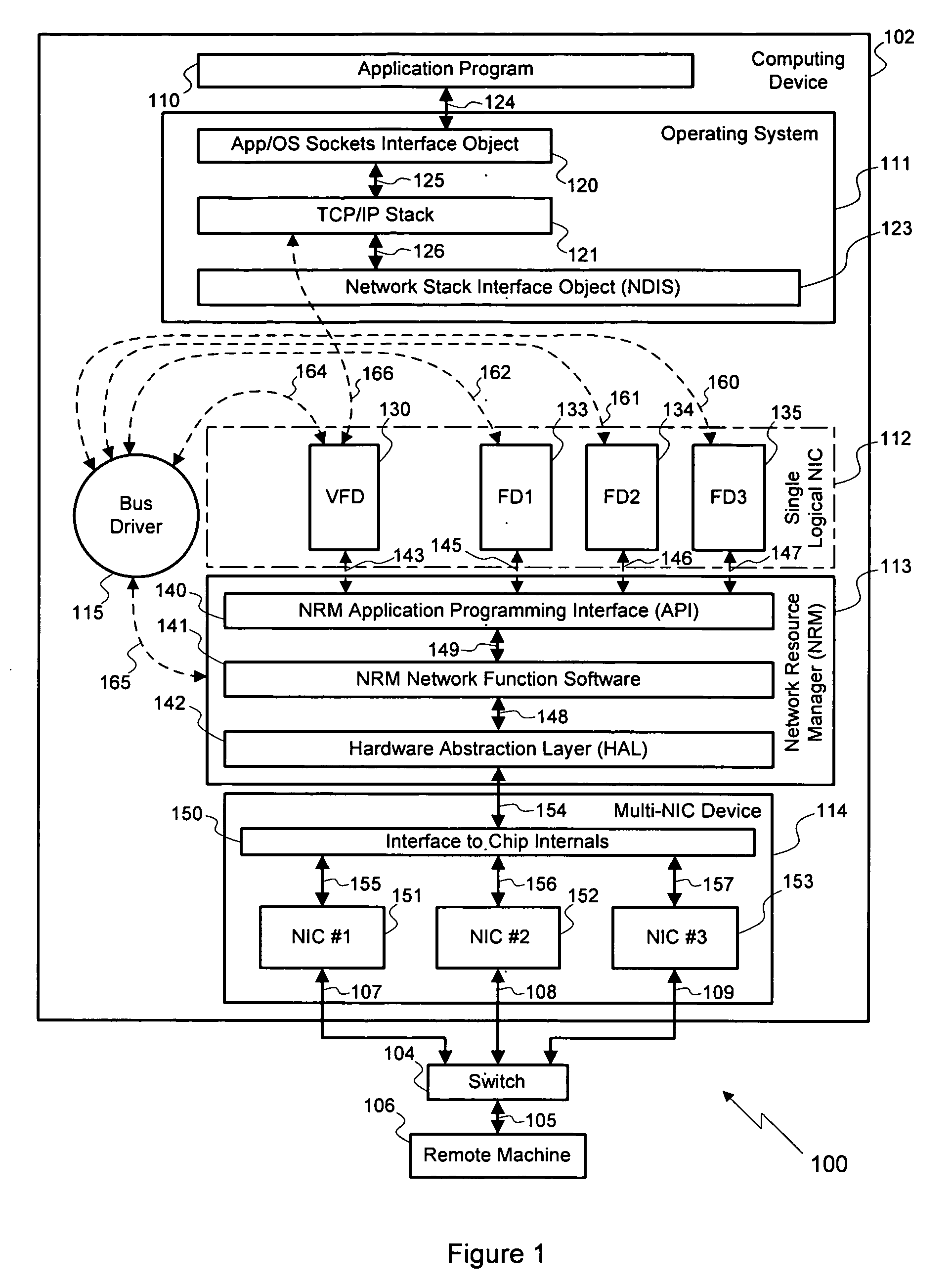

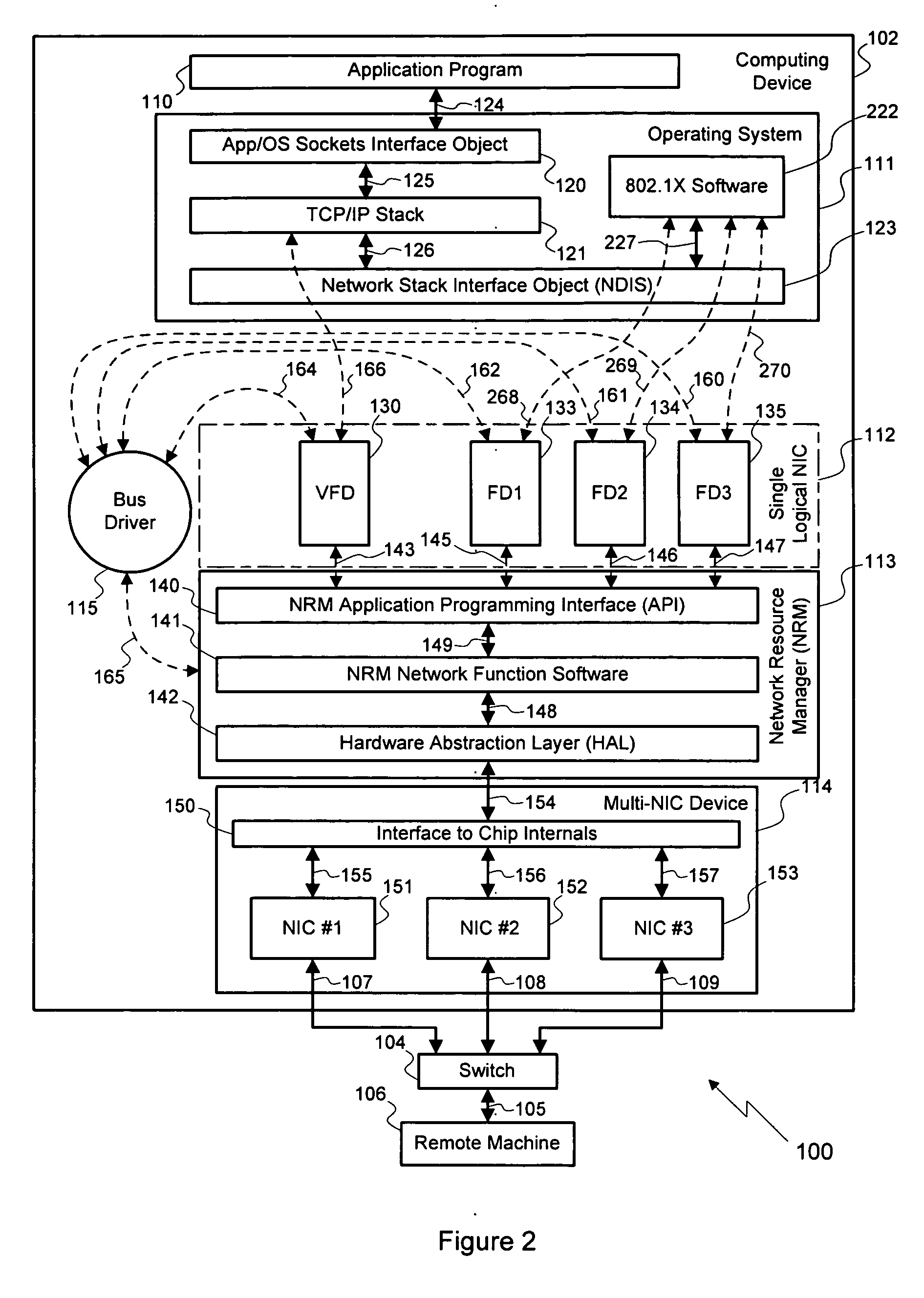

Single logical network interface for advanced load balancing and fail-over functionality

Status: Inactive | Publication: US20080056120A1 | Tags: Improve reliability, Improve network throughput, Error prevention, Transmission systems, Network resource management, Failover

The invention sets forth an approach for aggregating a plurality of NICs in a computing device into a single logical NIC as seen by that computing device's operating system. The combination of the single logical NIC and a network resource manager provides a reliable and persistent interface to the operating system and to the network hardware, thereby improving the reliability and ease-of-configuration of the computing device. The invention also may improve communications security by supporting the 802.1X and the 802.1Q networking standards.

Owner:NVIDIA CORP
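
This is a minimal sketch of one logical interface spreading flows over several physical NICs, with failover. It assumes hypothetical flow-hash selection: a CRC32 of the connection's addresses and ports picks among the NICs whose link is up. Packets of one connection therefore stay on one NIC, and a down link is skipped automatically. `LogicalNic` is an illustrative name, not NVIDIA's interface.

```python
import zlib


class LogicalNic:
    """One logical interface over several physical NICs (hypothetical model)."""

    def __init__(self, nic_names):
        self.link_up = {name: True for name in nic_names}

    def set_link(self, name, up):
        self.link_up[name] = up

    def select(self, src_ip, dst_ip, src_port, dst_port):
        # Hash the flow so packets of one connection stay on one NIC (no
        # reordering), while different flows spread across healthy NICs.
        healthy = [n for n, up in self.link_up.items() if up]
        if not healthy:
            raise ConnectionError("all physical NICs are down")
        key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
        return healthy[zlib.crc32(key) % len(healthy)]
```

Because the operating system only sees the logical interface, a failing link changes which physical NIC carries a flow without changing the address the OS uses.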

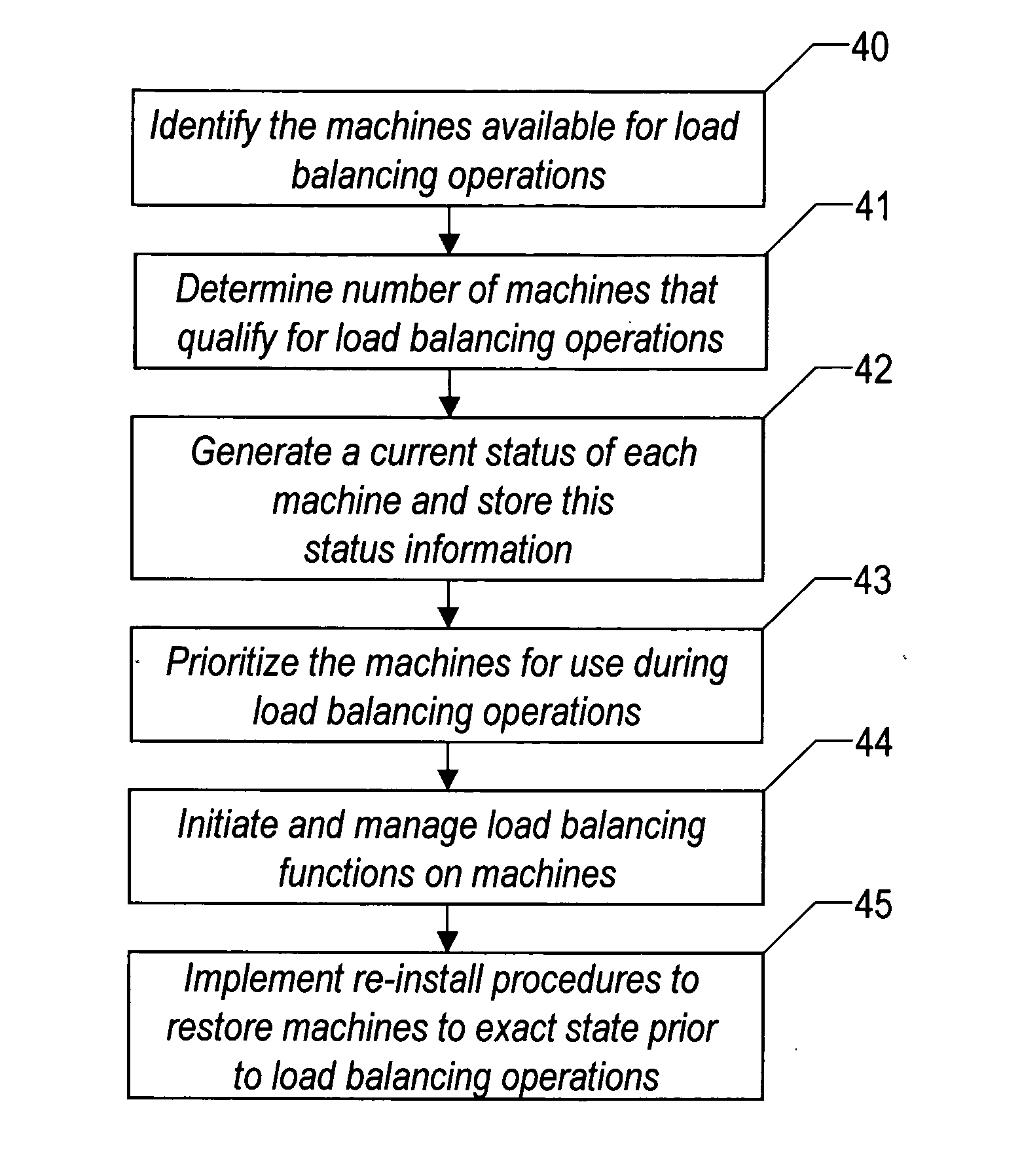

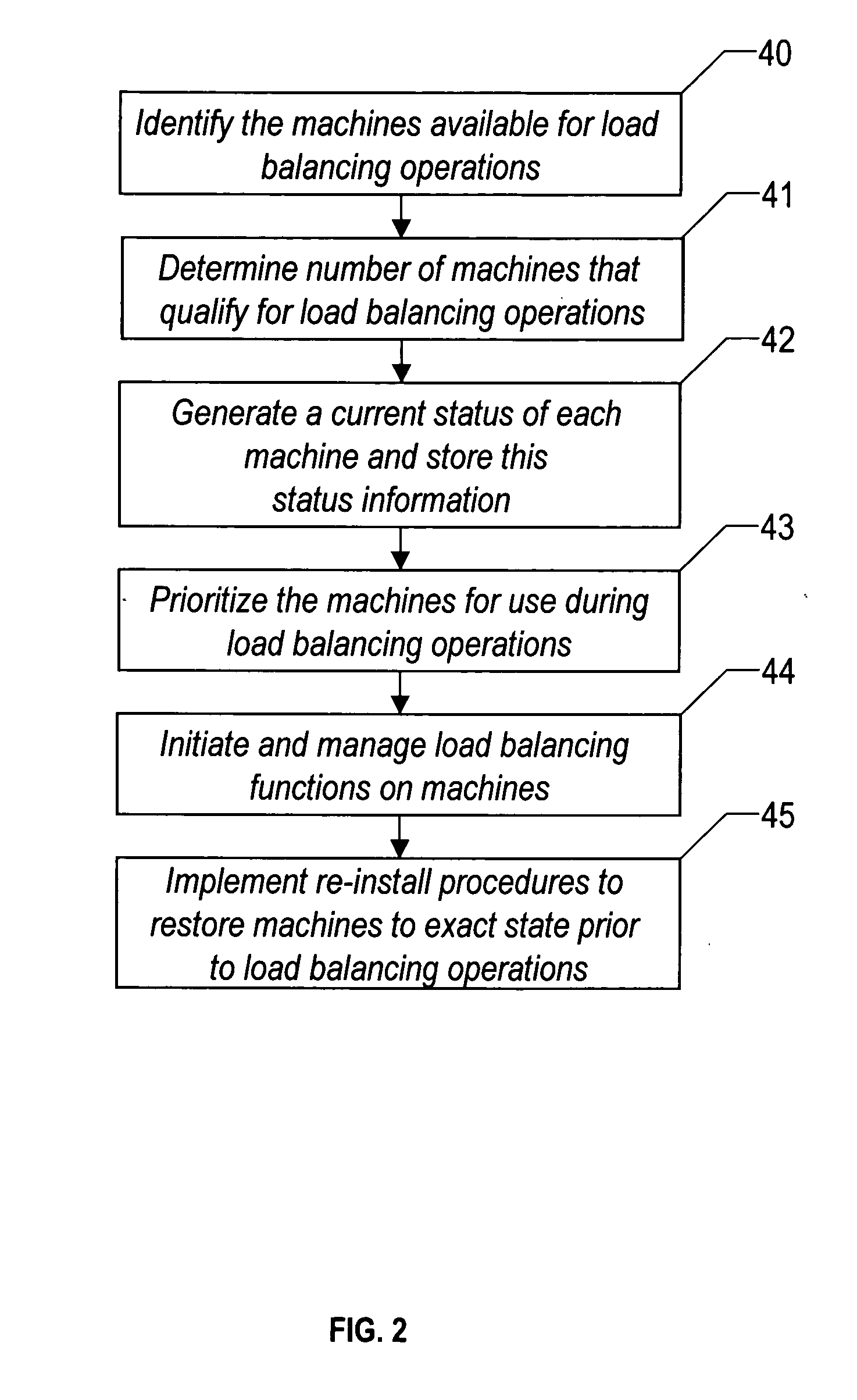

Method and system for load balancing of computing resources

Status: Inactive | Publication: US20070078858A1 | Tags: Reduce load, Balance processing load, Digital computer details, Multiprogramming arrangements, Load Shedding, Distributed computing

A load balancing method adds temporarily inactive machines to the resources able to execute tasks during periods of heavy processing demand, relieving some of the processing load on other computing resources. The method determines which computing resources are available and prioritizes them for access by the load balancing process. A snapshot of each resource's configuration is taken and secured, along with all data on that system. This prevents contamination between the data resident on the machine and any data placed on it as part of the load balancing activities. After a predetermined period of time or a predetermined event, the temporary resources stop being available for load balancing. At that point, the original configuration and data are restored to the computing resource, so that the user cannot detect any trace of its use in load balancing activities.

Owner:IBM CORP

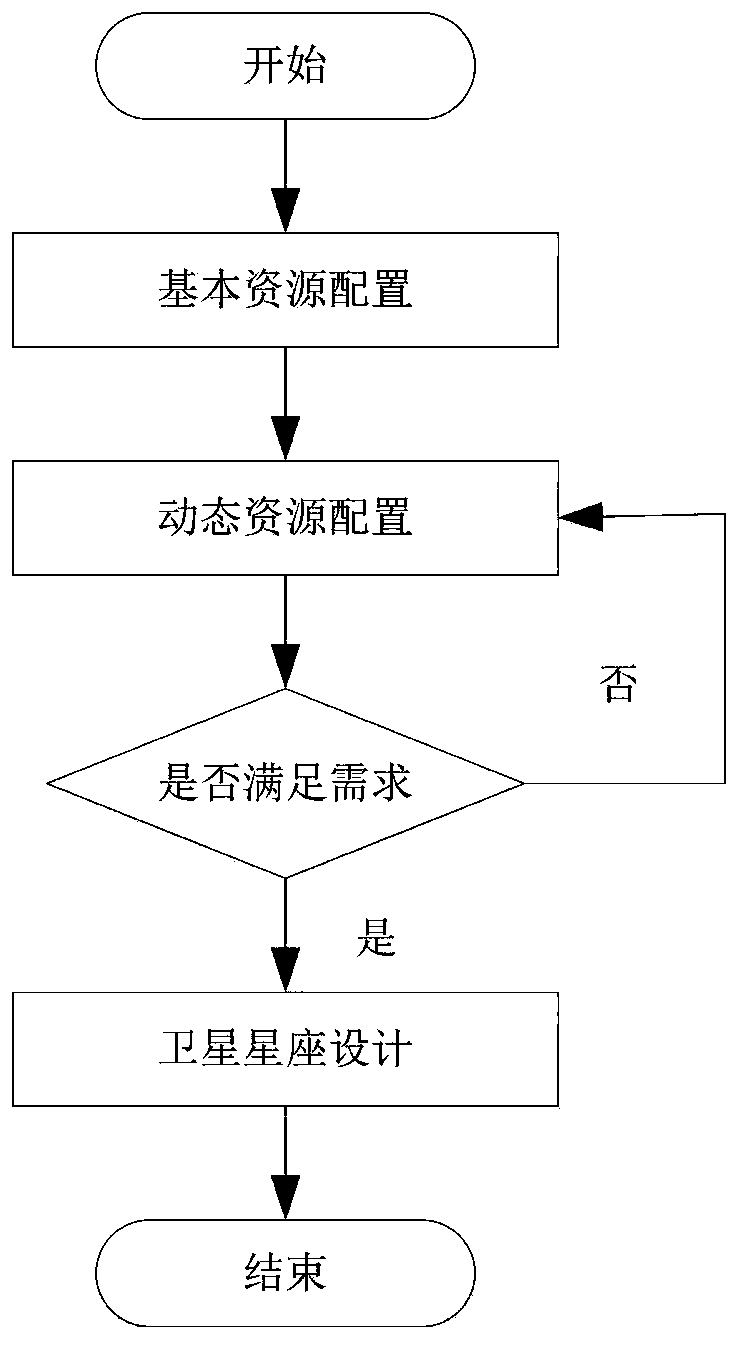

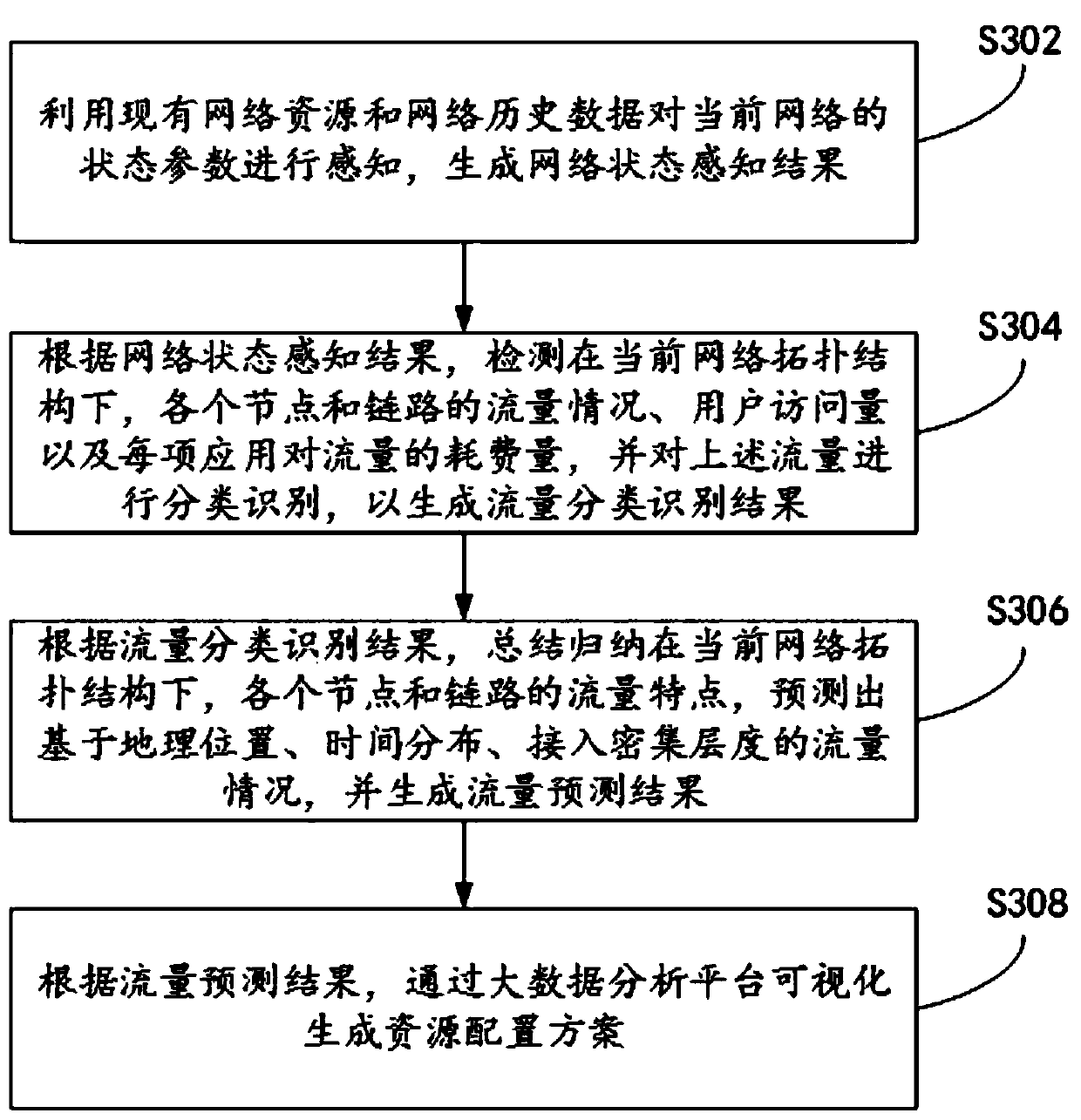

Dynamic resource allocation method and system under space-based cloud computing architecture, and storage medium

Status: Pending | Publication: CN110730138A | Tags: Reduce configuration costs, Meet needs, Data switching networks, Service flow, Traffic prediction

The invention provides a dynamic resource allocation method and system under a space-based cloud computing architecture, and a storage medium. The method comprises the following steps:

1. Sense the state parameters of the current network to generate a network state sensing result.
2. Using that result, detect the traffic on each node and link, the user page views, and the traffic consumed by each application under the current network topology. Then classify and identify the traffic to generate a traffic classification result.
3. From the classification result, predict traffic based on geographic location, time distribution, and access density to generate a traffic prediction result.
4. Visually generate a resource allocation scheme through a big data analysis platform according to the traffic prediction result.

Dynamic resource configuration allows heterogeneous resources to be used efficiently and meets the requirements of delay-sensitive space-based applications and big data applications. It also adapts to dynamic network connections, guarantees the reliability of service flows, and achieves load balancing across the system.

Owner:TECH & ENG CENT FOR SPACE UTILIZATION CHINESE ACAD OF SCI

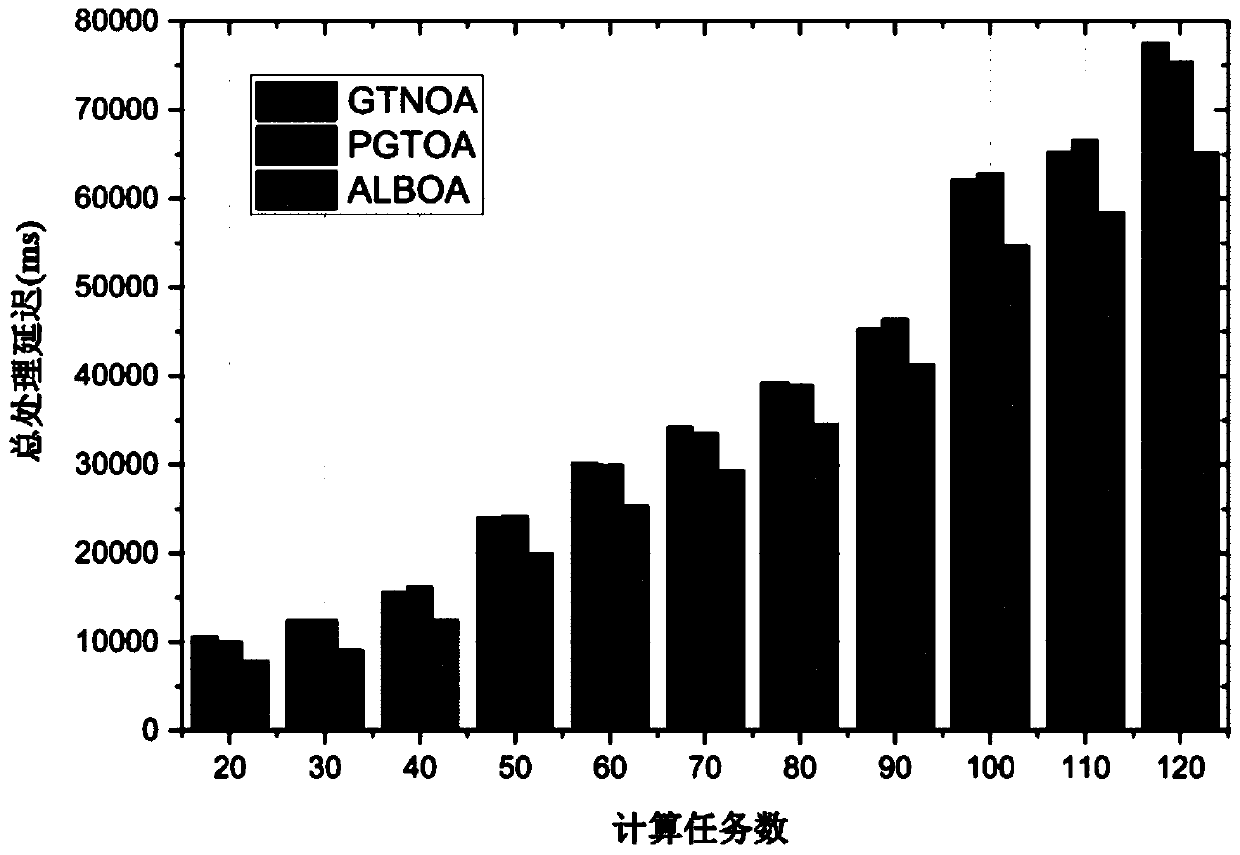

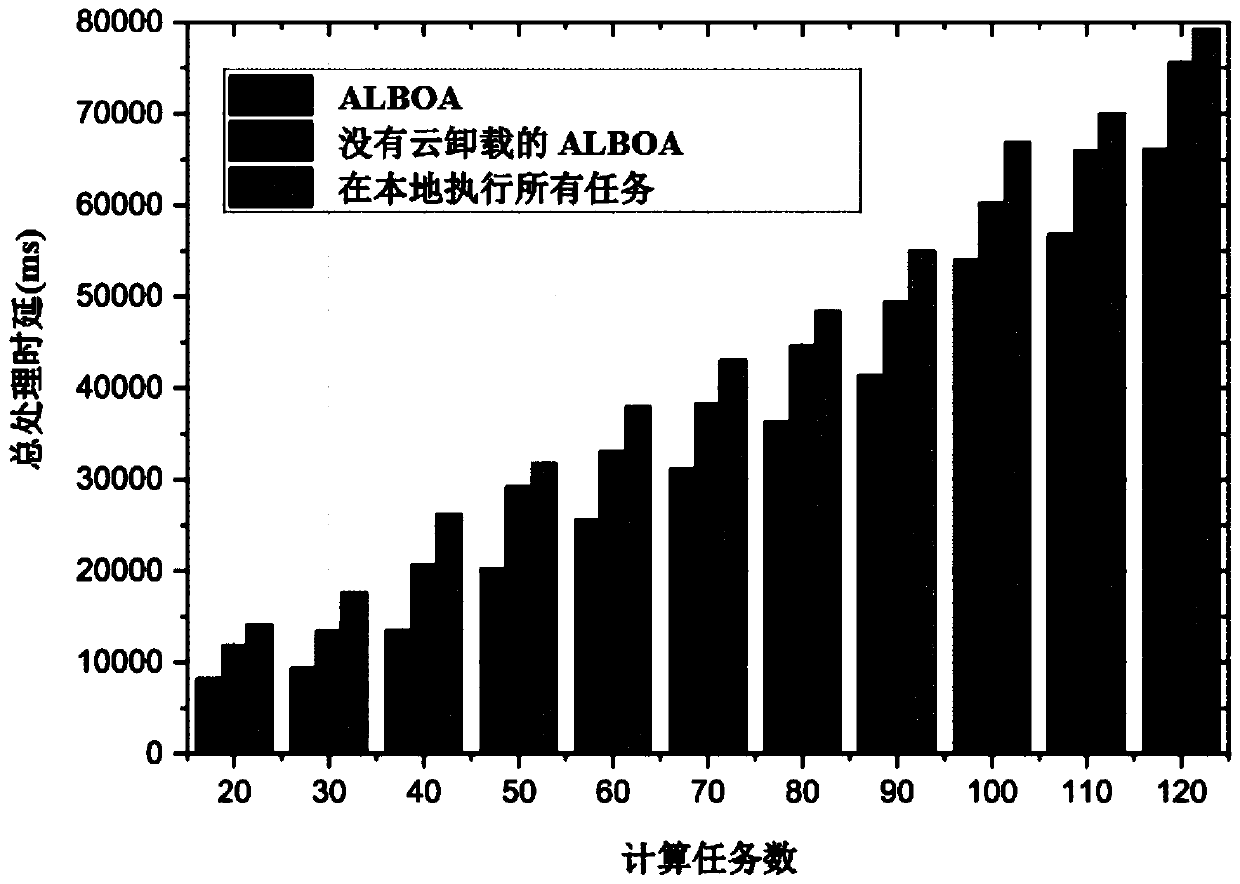

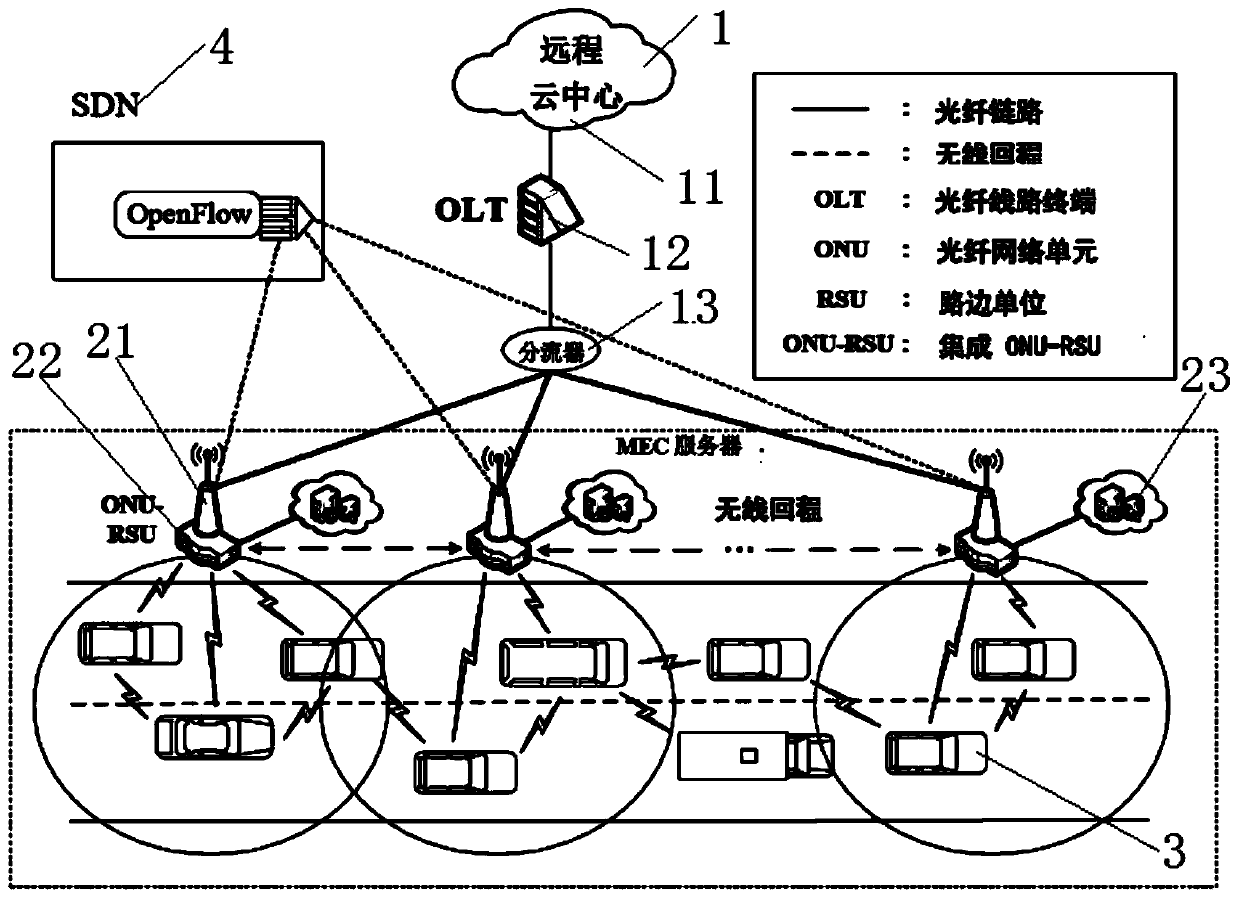

Load balancing system and method for task offloading in vehicular edge computing networks

Status: Active | Publication: CN110557732A | Tags: Lower latency, Increase capacity, Particular environment based services, Vehicle-to-vehicle communication, Edge computing, System structure

The invention discloses a load balancing system and method for task offloading in vehicular edge computing networks. The system is arranged as follows:
- A remote cloud center connects to each roadside unit.
- Multiple roadside units are evenly distributed along the road, with wireless network connections between roadside units.
- Each vehicle has a wireless communication module through which vehicles connect to one another, and wireless connections are also established between vehicles and roadside units.
- An SDN controller connects to each roadside unit.

In this SDN-controller-based, FiWi-enhanced vehicular task offloading architecture, the communication path is selected optimally. The computing power of the MEC servers decreases as more vehicles choose to offload computing tasks to them. The relevant parameters are therefore updated continuously, and the load-balanced task offloading method uses the computing resources of every MEC server evenly, with excellent performance.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
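
This is a minimal sketch of load-balanced offloading under a hypothetical delay model.
- Capacity sharing: a server's capacity is shared equally among the vehicles offloading to it, so each assignment lowers the per-vehicle share.
- Decision rule: each task goes to the server with the lowest estimated transmission-plus-computation delay.
- Parameter updates: the per-server counts are updated after every decision, standing in for the "continuously updated" parameters.

The estimate ignores how a new task slows tasks already assigned. `offload` and its input shapes are illustrative.

```python
def offload(tasks, servers):
    """Greedy load-balanced offloading (hypothetical delay model).

    tasks:   list of (task_id, cpu_cycles, {server: transmission_delay_s})
    servers: {server: total CPU capacity in cycles/s}
    Returns (task -> server plan, server -> number of offloaded tasks).
    """
    assigned = {s: 0 for s in servers}
    plan = {}
    for task_id, cycles, tx_delay in tasks:
        # With n vehicles already on server s, a new one gets capacity/(n + 1),
        # so its computation time is cycles * (n + 1) / capacity.
        best = min(servers,
                   key=lambda s: tx_delay[s] + cycles * (assigned[s] + 1) / servers[s])
        assigned[best] += 1  # update the parameters before the next decision
        plan[task_id] = best
    return plan, assigned
```

With two identical roadside MEC servers and identical tasks, the assignments alternate between servers. This shows the even use of computing resources that the abstract describes.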

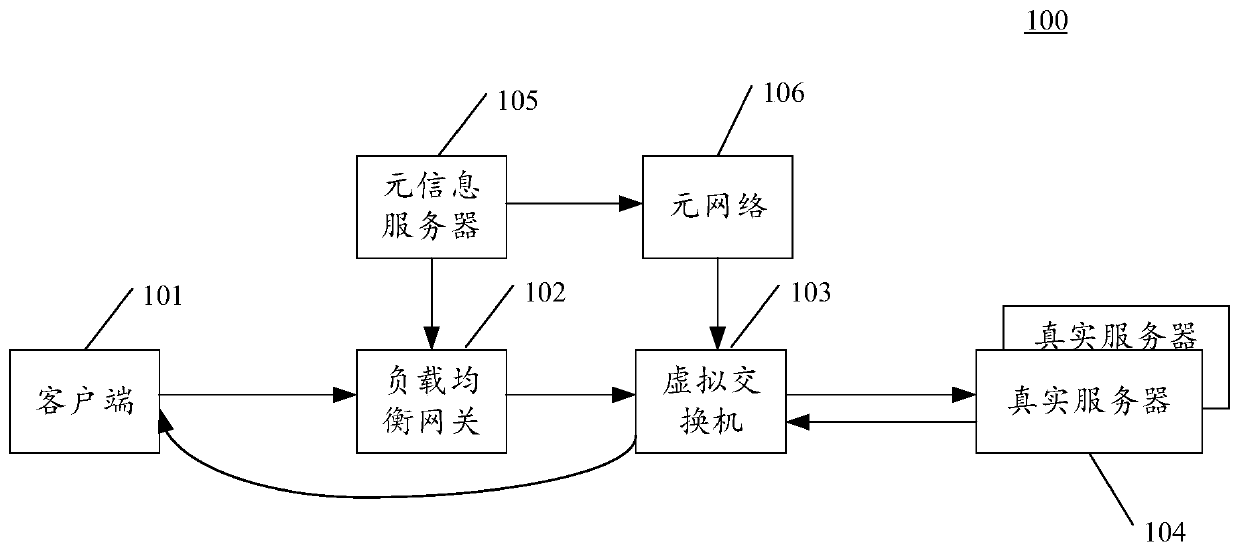

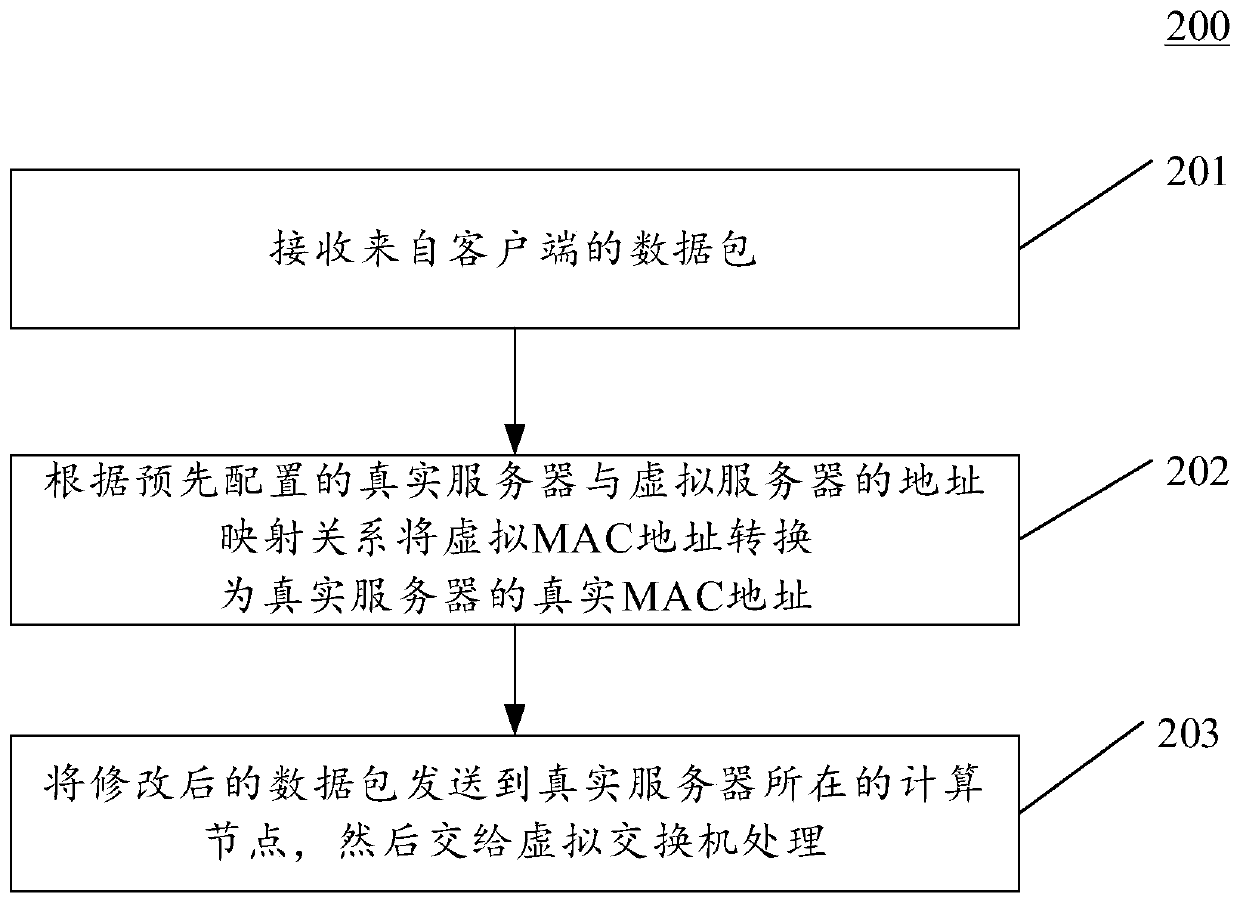

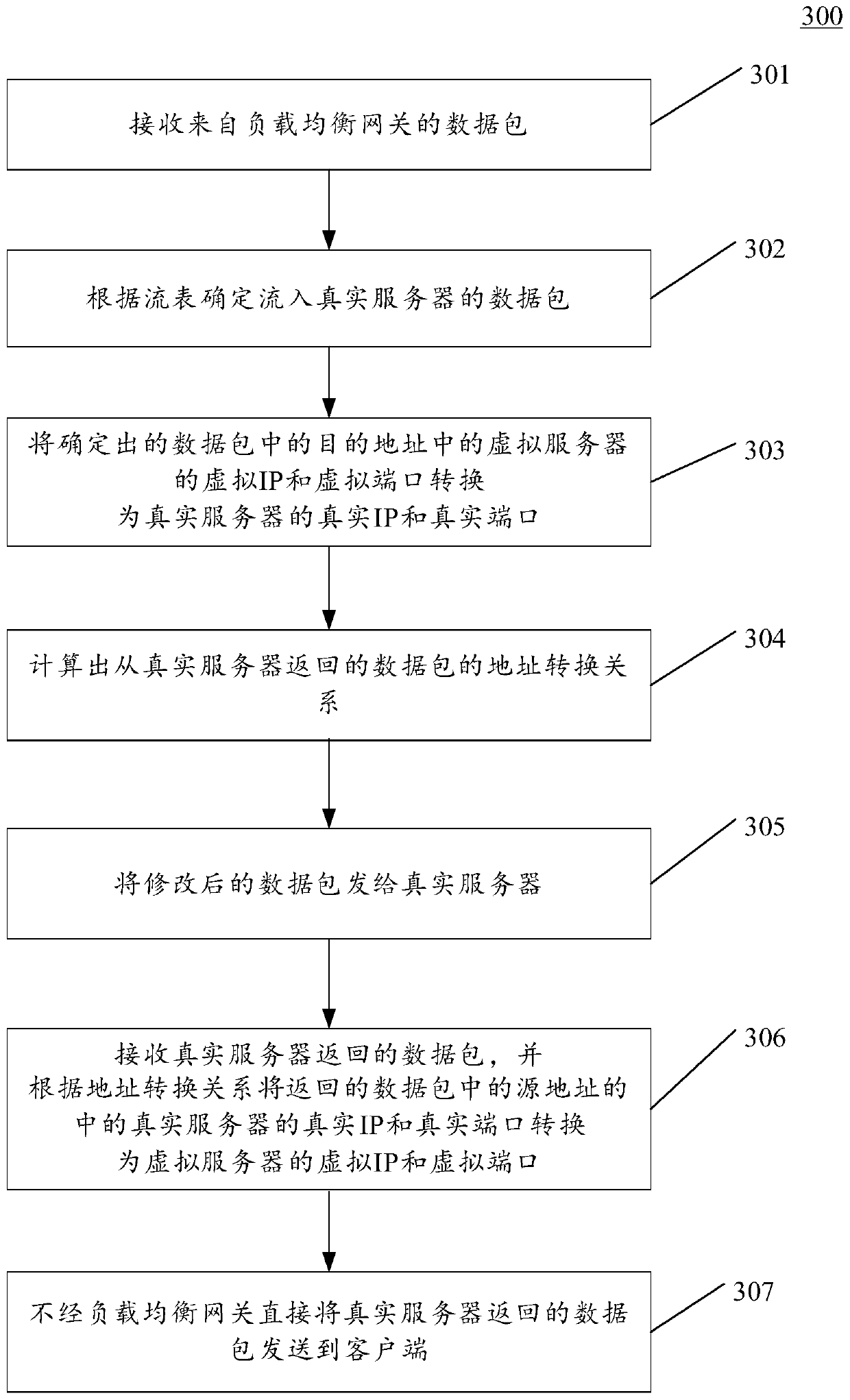

Method, device and system for transmitting data

Status: Pending | Publication: CN110708393A | Tags: Shorten the forwarding path, Solve online problems, Data switching networks, Data pack, PathPing

The embodiments of the invention disclose a method, device, and system for transmitting data. In a specific embodiment, the system performs the following steps:

1. A load balancing gateway takes a data packet from a client and converts the virtual MAC address of a virtual server in its destination address into the real MAC address of a real server. The conversion follows a pre-configured address mapping between the real server and the virtual server.
2. The load balancing gateway sends the modified packet to the virtual switch.
3. After receiving the packet from the gateway, the virtual switch identifies it as a packet destined for the real server. It converts the virtual IP and virtual port of the virtual server in the destination address into the real IP and real port of the real server.
4. The virtual switch sends the modified packet to the real server.

This embodiment shortens the forwarding path between computing nodes in cloud computing, improves system stability, and reduces data forwarding delay.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
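
This is a minimal sketch of the two rewrite steps, using a hypothetical pre-configured mapping from a virtual server (MAC, IP, port) to a real server. The gateway rewrites only the destination MAC. The virtual switch then rewrites the virtual IP and port for packets now addressed to the real server's MAC. `Packet`, `VIRTUAL_TO_REAL` and the addresses are illustrative.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    dst_mac: str
    dst_ip: str
    dst_port: int
    payload: bytes = b""


# Hypothetical pre-configured mapping: virtual server -> real server.
VIRTUAL_TO_REAL = {
    ("02:00:00:00:00:01", "10.0.0.100", 80): ("fa:16:3e:aa:bb:cc", "192.168.1.10", 8080),
}


def gateway_rewrite(pkt):
    """Load balancing gateway: swap only the destination MAC (virtual -> real)."""
    for (vmac, vip, vport), (rmac, _, _) in VIRTUAL_TO_REAL.items():
        if (pkt.dst_mac, pkt.dst_ip, pkt.dst_port) == (vmac, vip, vport):
            return replace(pkt, dst_mac=rmac)
    return pkt


def vswitch_rewrite(pkt):
    """Virtual switch: for packets now addressed to a real server's MAC,
    swap the virtual IP/port for the real IP/port before delivery."""
    for (_, vip, vport), (rmac, rip, rport) in VIRTUAL_TO_REAL.items():
        if pkt.dst_mac == rmac and (pkt.dst_ip, pkt.dst_port) == (vip, vport):
            return replace(pkt, dst_ip=rip, dst_port=rport)
    return pkt
```

Splitting the work this way lets the gateway do a cheap layer-2 rewrite. The layer-3/4 translation happens in the virtual switch on the real server's compute node, which is how the forwarding path stays short.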

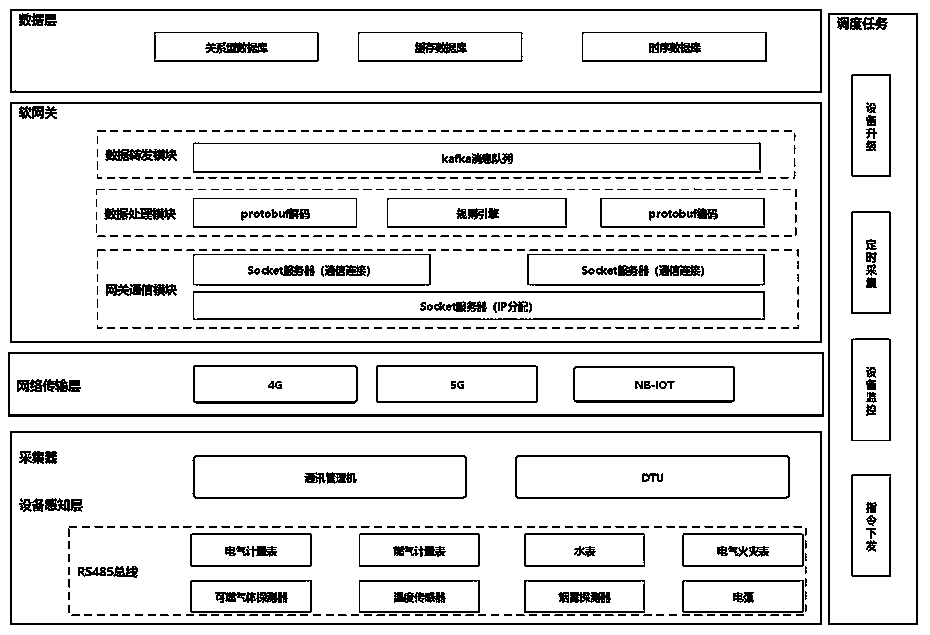

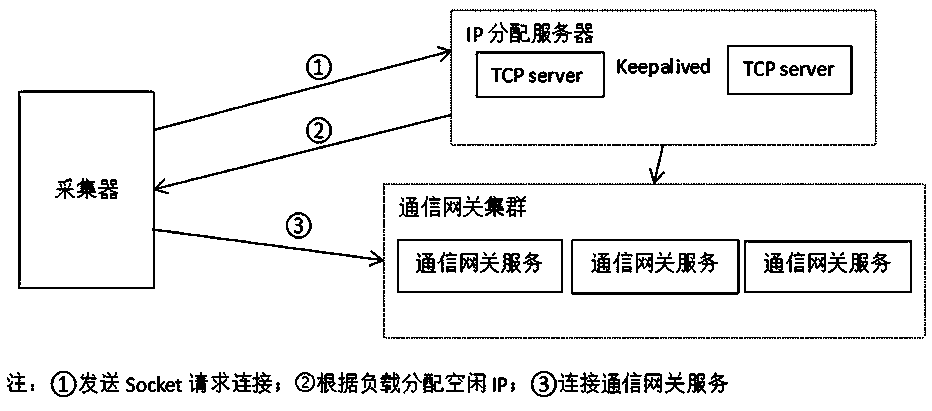

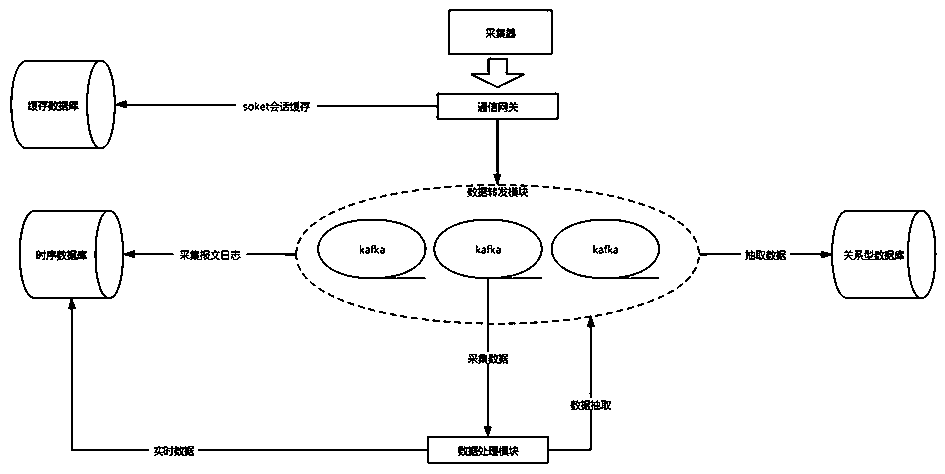

Energy Internet of Things data acquisition method based on non-blocking input and output model and software gateway

The invention provides an energy Internet of Things data acquisition method and a software gateway that use a non-blocking input/output (NIO) model. The method targets energy Internet of Things deployments where diverse sensing and communication equipment must be connected. There, a traditional pure-hardware gateway struggles to support multiple communication protocols, has difficulty meeting the system's high-availability requirements under high concurrency, and scales poorly. The invention addresses these problems with purely software techniques such as load balancing, together with cloud computing technology. In the method, a collector connects to a communication gateway, and scheduled tasks perform data collection, data processing, and data forwarding.

Owner:Shanghai Jicheng Energy Technology Co., Ltd. (上海积成能源科技有限公司)

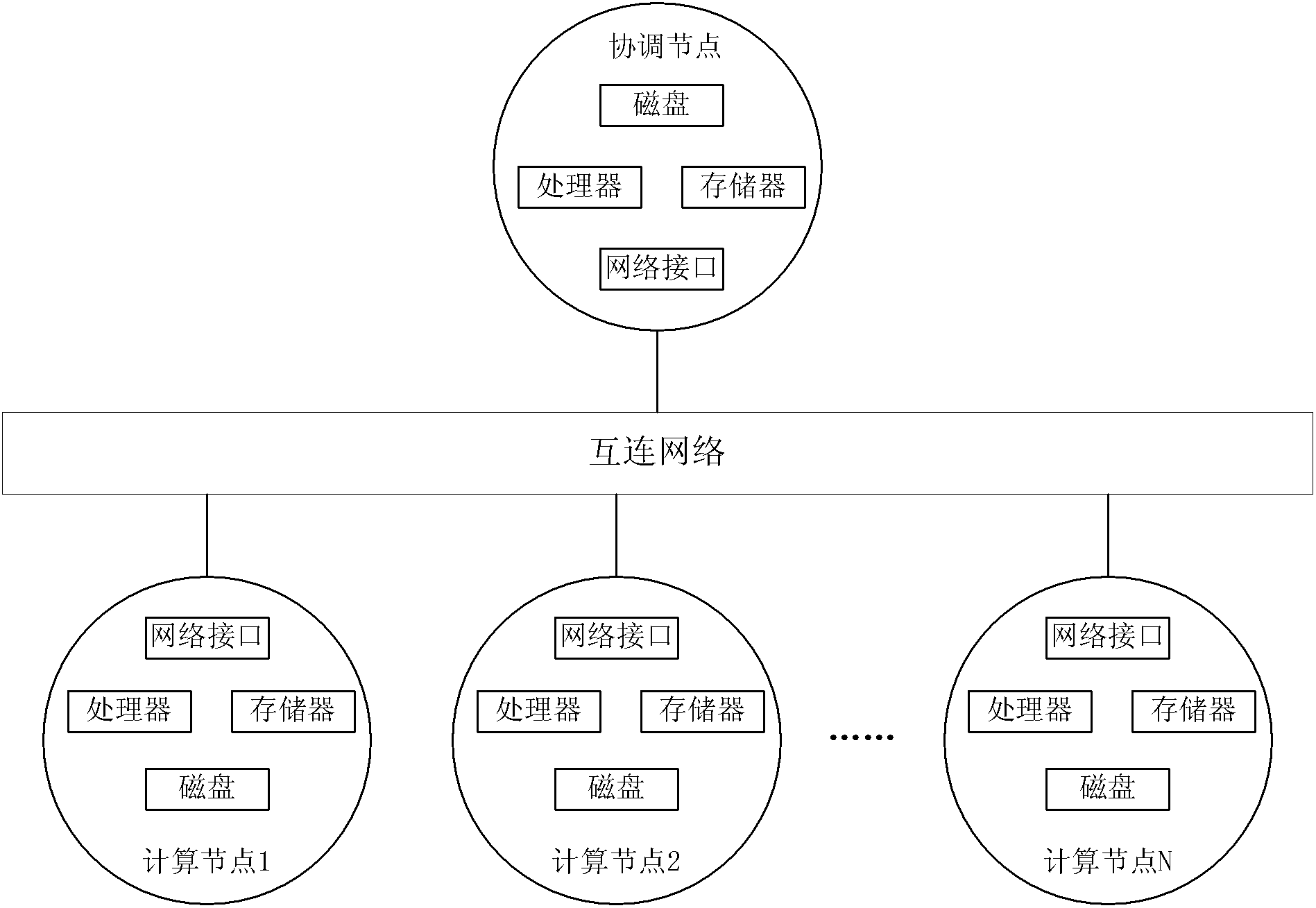

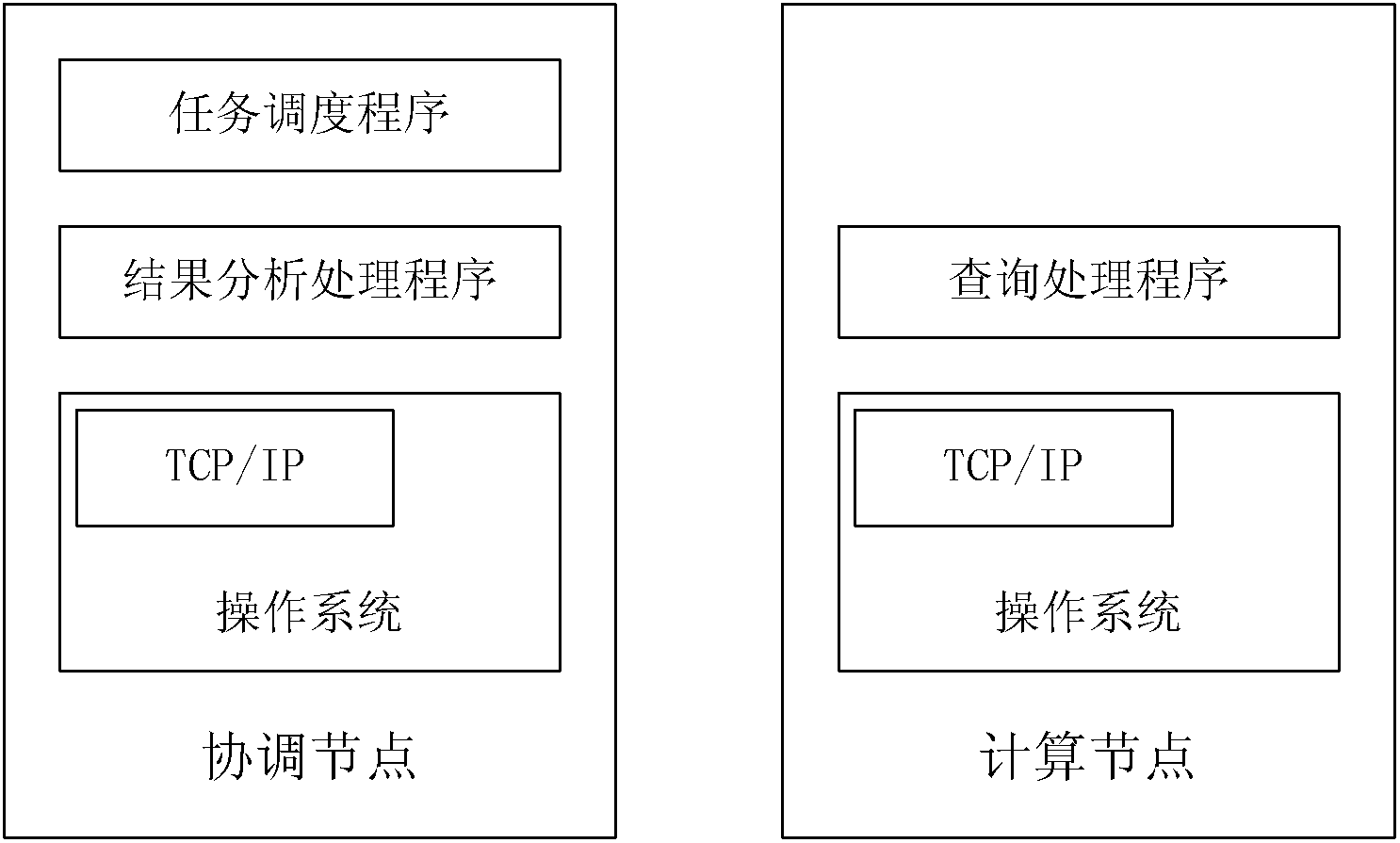

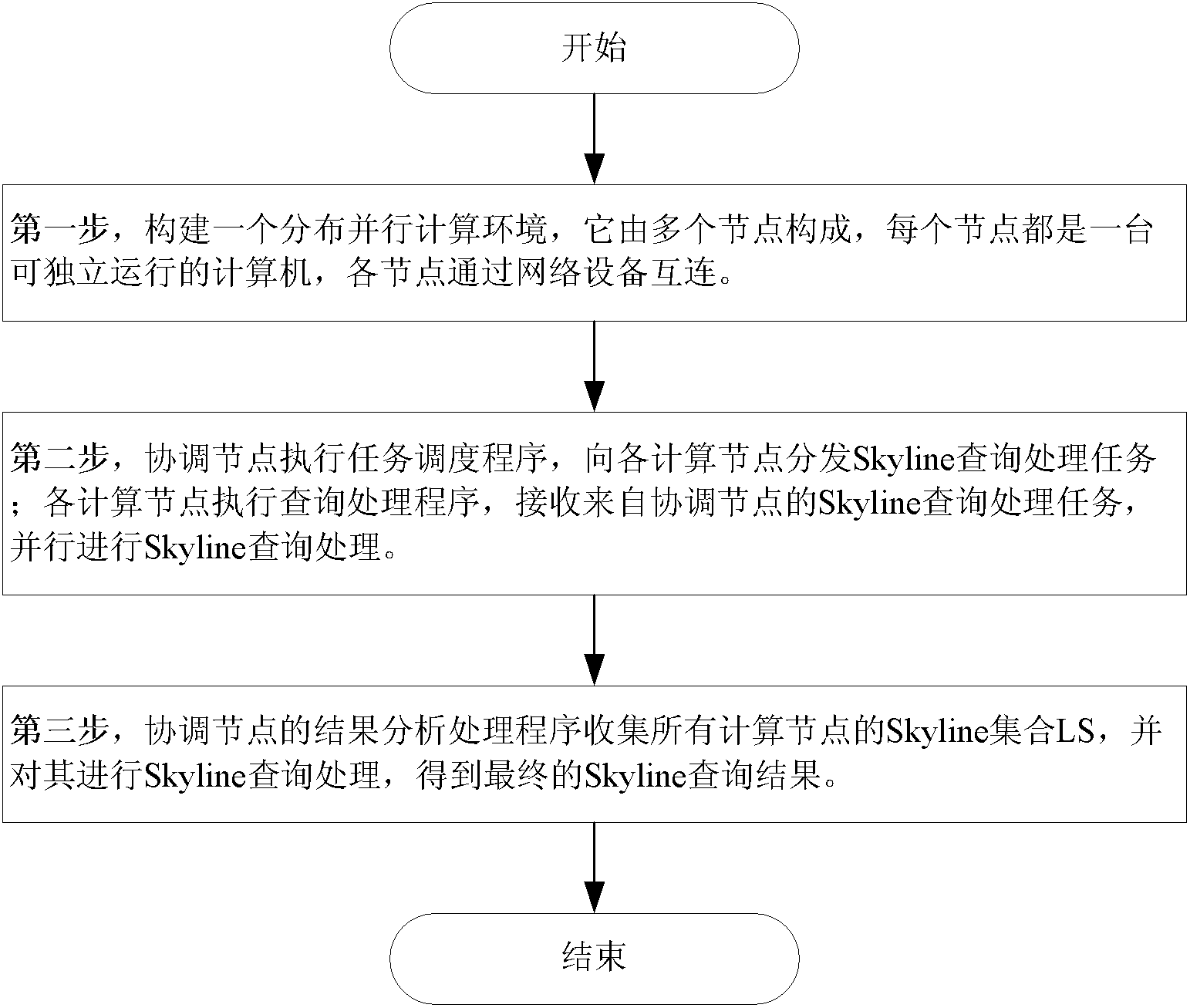

Distributed parallel Skyline query method based on vertical dividing mode

Status: Active | Publication: CN102323957A | Tags: Load balancing, Improve query processing efficiency, Special data processing applications, Fully developed, Parallel computing

The invention discloses a distributed parallel Skyline query method based on a vertical dividing mode. It aims to provide a new Skyline query method that fully exploits the parallelism of Skyline query processing and improves efficiency. The technical scheme comprises the following steps:

1. Construct a distributed parallel computing environment consisting of a coordination node and N computing nodes. The coordination node runs a task scheduling program and a result analysis program, and each computing node runs a query processing program.
2. The coordination node executes the task scheduling program and distributes a Skyline query processing task to each computing node.
3. Each computing node executes its query processing program, receives its Skyline query processing task from the coordination node, and performs Skyline query processing.
4. The coordination node executes the result analysis program to collect the Skyline set LS from all computing nodes. It then performs Skyline query processing on LS to obtain the final Skyline query result.

The method effectively guarantees load balancing among the computing nodes and the accuracy of the Skyline query result, and it improves query efficiency.

Owner:NAT UNIV OF DEFENSE TECH

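The coordinator/worker flow in the abstract can be sketched in Python as below. The patent does not define its "vertical dividing mode" here, so `partition_vertical` assumes it means equal-sized strips along the first attribute; threads stand in for the N computing nodes, smaller values are assumed better in every dimension, and the local skylines use a plain block-nested-loop algorithm.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(p: Point, q: Point) -> bool:
    # Smaller is better: p is no worse in every dimension and strictly better in at least one
    return all(a <= b for a, b in zip(p, q)) and any(a < b for a, b in zip(p, q))


def skyline(points: Sequence[Point]) -> List[Point]:
    # Block-nested-loop skyline: the window always holds the non-dominated points seen so far
    window: List[Point] = []
    for p in points:
        if any(dominates(w, p) for w in window):
            continue
        window = [w for w in window if not dominates(p, w)]
        window.append(p)
    return window


def partition_vertical(points: Sequence[Point], n: int) -> List[List[Point]]:
    # Assumed "vertical" split: n equal-sized strips along the first attribute,
    # so every computing node receives roughly the same number of points
    ordered = sorted(points, key=lambda p: p[0])
    size = -(-len(ordered) // n)  # ceiling division
    return [ordered[i:i + size] for i in range(0, len(ordered), size)]


def distributed_skyline(points: Sequence[Point], n_nodes: int = 4) -> List[Point]:
    if not points:
        return []
    parts = partition_vertical(points, n_nodes)   # coordinator: task scheduling
    with ThreadPoolExecutor(max_workers=n_nodes) as pool:
        local = list(pool.map(skyline, parts))    # computing nodes: local skylines
    ls = [p for sky in local for p in sky]        # coordinator: collect the set LS
    return skyline(ls)                            # coordinator: final skyline over LS
```

The final pass over LS is what guarantees accuracy: any point in the global skyline is undominated within its own strip, so it always survives into LS, while points that are only locally undominated are removed in the coordinator's pass.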
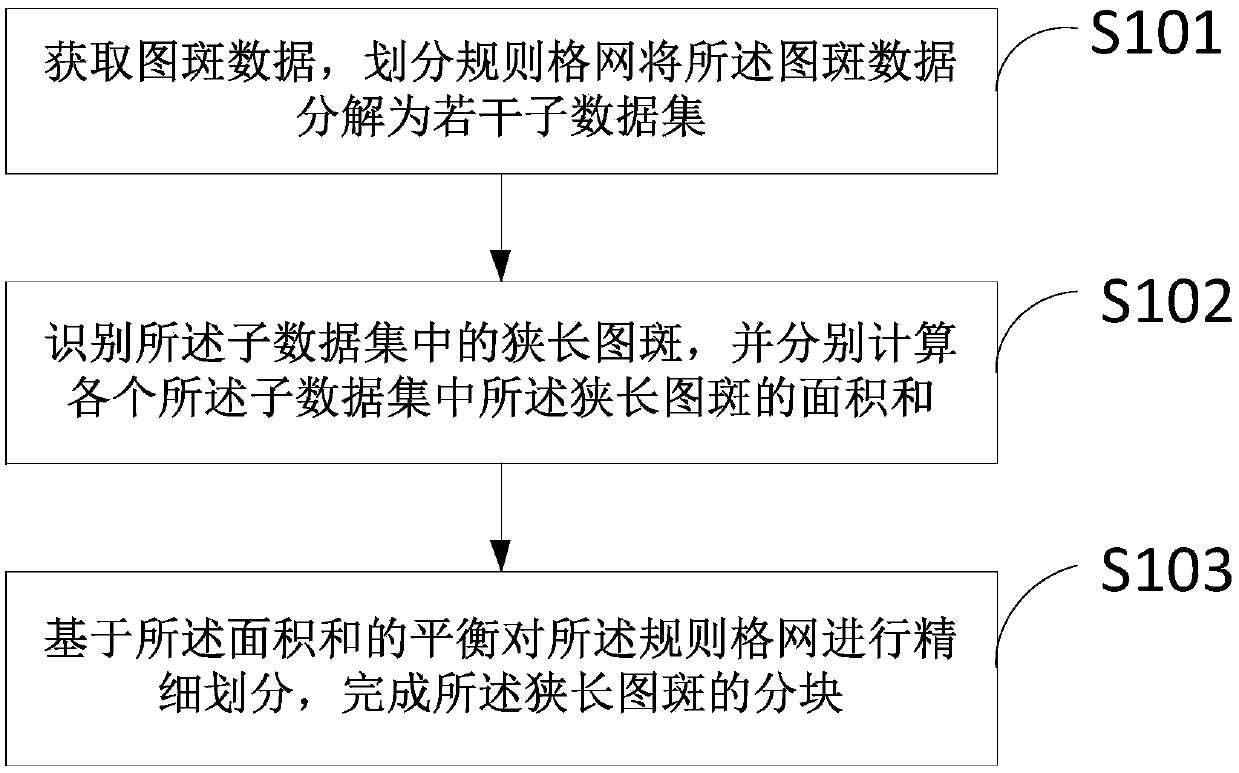

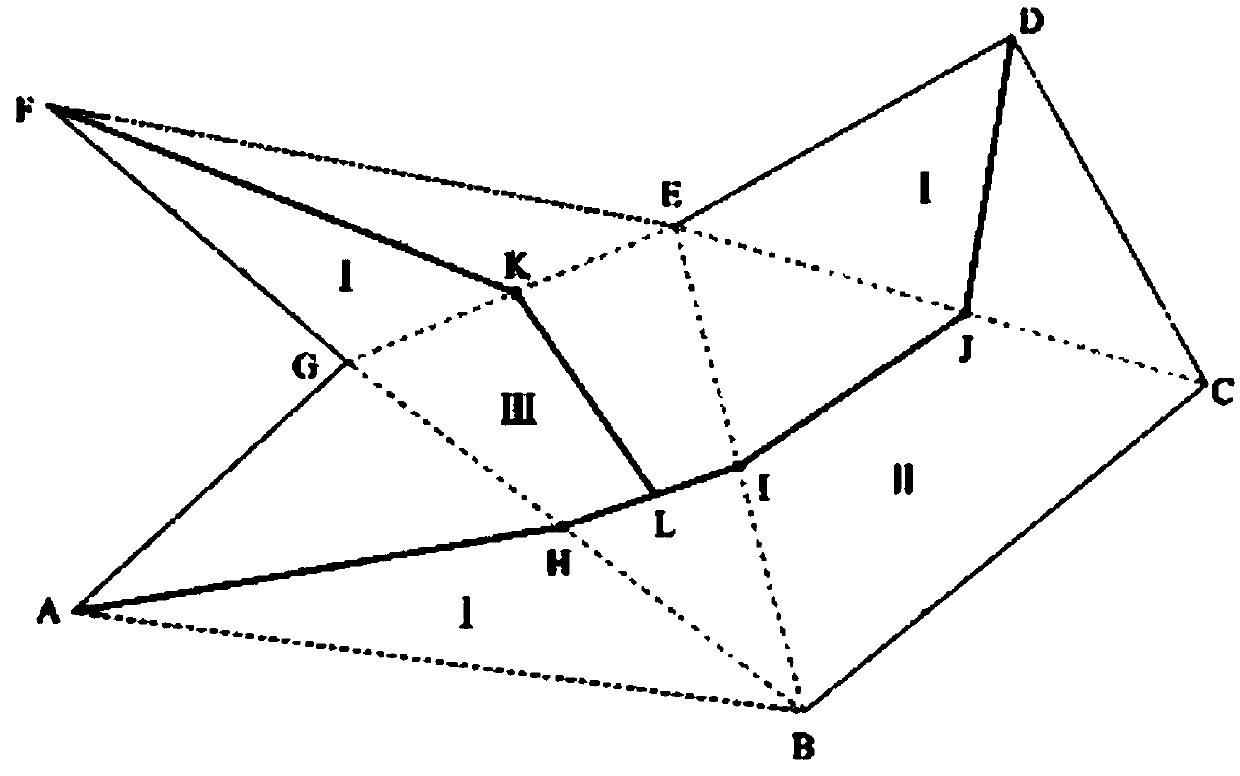

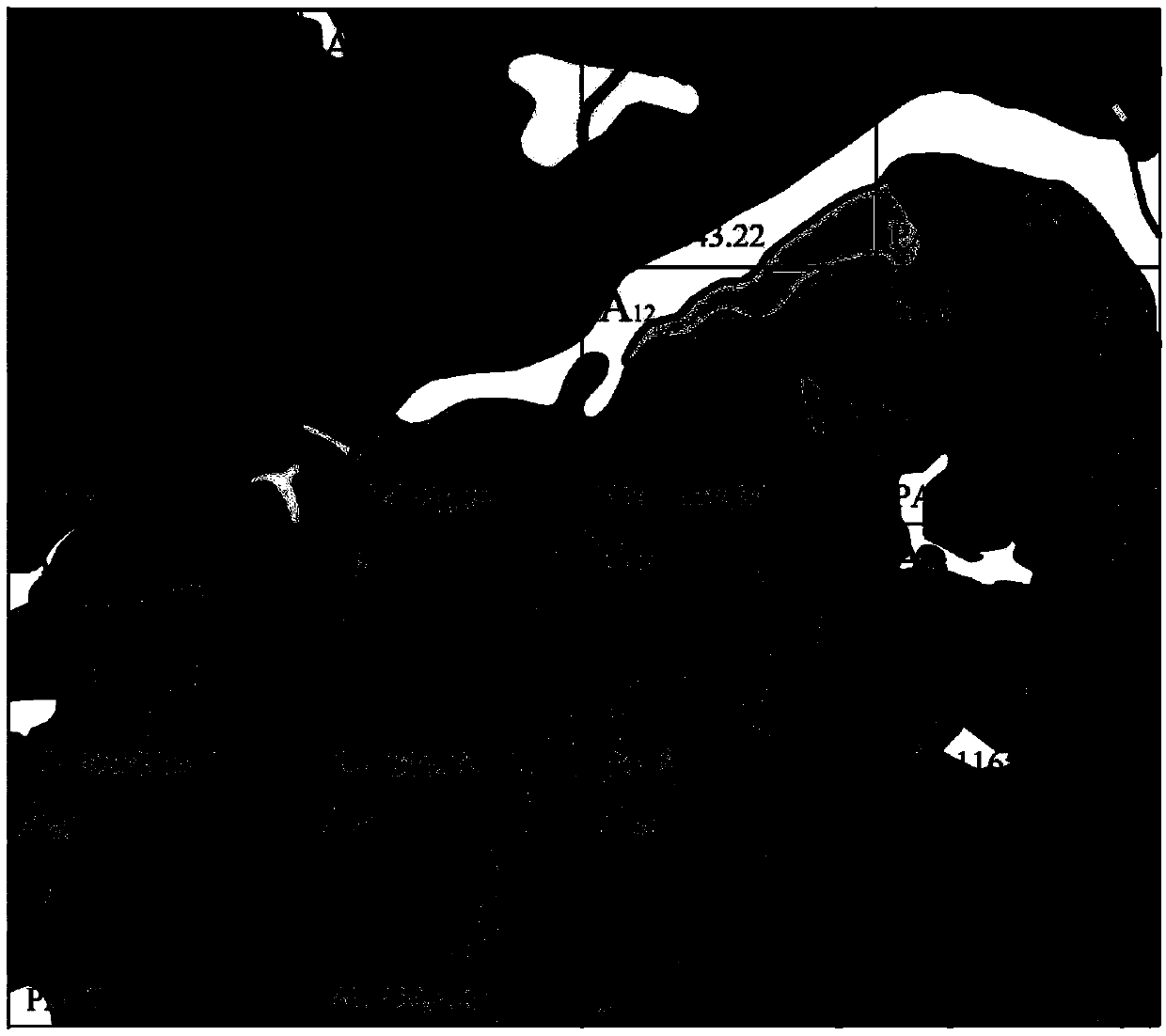

Long and narrow map spot partitioning method and device, computer equipment and storage medium

The invention relates to the technical field of map drawing and map generalization, in particular to a method and device for partitioning long and narrow map spots, computer equipment, and a storage medium. The method comprises the following steps: acquiring map spot data and dividing it with a regular grid to decompose it into several sub-datasets; identifying the long and narrow spots in each sub-dataset and calculating the sum of their areas for each sub-dataset; and, based on balancing these area sums, finely subdividing the regular grid to complete the partitioning of the long and narrow spots. Because the grid is refined according to the balance of area sums, the method is suitable for parallel computing and helps balance the computational load. It can improve efficiency on large data volumes, save computing resources, prevent program crashes caused by insufficient memory, and ensure the stability of the computation.

Owner:CHINESE ACAD OF SURVEYING & MAPPING

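A minimal Python sketch of the grid-then-refine idea follows, under several assumptions not stated in the abstract: each map spot is reduced to a centroid, area, and perimeter (the hypothetical `Spot` class); a spot counts as long and narrow when its isoperimetric compactness 4πA/P² falls below an assumed cut-off of 0.2; and "finely dividing" is modelled as recursive quadtree splitting of any grid cell whose narrow-spot area sum exceeds a `budget`.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

BBox = Tuple[float, float, float, float]  # (x0, y0, x1, y1)


@dataclass
class Spot:
    """Hypothetical simplified map spot: centroid, area and perimeter only."""
    cx: float
    cy: float
    area: float
    perimeter: float


def is_long_narrow(s: Spot, max_compactness: float = 0.2) -> bool:
    # Compactness 4*pi*A/P^2 is 1 for a circle and approaches 0 for slivers (assumed cut-off)
    return 4 * math.pi * s.area / s.perimeter ** 2 < max_compactness


def _refine(bbox: BBox, spots: List[Spot], budget: float, depth: int,
            max_depth: int, out: List[Tuple[BBox, List[Spot]]]) -> None:
    # Stop when the cell's narrow-spot area fits the budget or cannot usefully be split further
    if sum(s.area for s in spots) <= budget or len(spots) <= 1 or depth >= max_depth:
        out.append((bbox, spots))
        return
    x0, y0, x1, y1 = bbox
    mx, my = (x0 + x1) / 2, (y0 + y1) / 2
    quads = {(0, 0): [], (0, 1): [], (1, 0): [], (1, 1): []}
    for s in spots:  # each spot goes to exactly one quadrant, by its centroid
        quads[(int(s.cx >= mx), int(s.cy >= my))].append(s)
    for (qx, qy), sub in quads.items():
        if sub:
            child = (mx if qx else x0, my if qy else y0, x1 if qx else mx, y1 if qy else my)
            _refine(child, sub, budget, depth + 1, max_depth, out)


def partition_spots(spots: List[Spot], n: int, budget: float,
                    max_depth: int = 6) -> List[Tuple[BBox, List[Spot]]]:
    narrow = [s for s in spots if is_long_narrow(s)]
    if not narrow:
        return []
    minx, maxx = min(s.cx for s in narrow), max(s.cx for s in narrow)
    miny, maxy = min(s.cy for s in narrow), max(s.cy for s in narrow)
    w = (maxx - minx) / n or 1.0  # fall back to unit cells if all centroids coincide
    h = (maxy - miny) / n or 1.0
    cells = {}
    for s in narrow:  # coarse regular n x n grid
        i = min(int((s.cx - minx) / w), n - 1)
        j = min(int((s.cy - miny) / h), n - 1)
        cells.setdefault((i, j), []).append(s)
    out = []
    for (i, j), sub in cells.items():
        bbox = (minx + i * w, miny + j * h, minx + (i + 1) * w, miny + (j + 1) * h)
        _refine(bbox, sub, budget, 0, max_depth, out)
    return out
```

Each returned cell carries a bounded area sum, so cells can be handed to parallel workers with similar workloads and bounded memory; `max_depth` stops runaway splitting when many centroids coincide.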
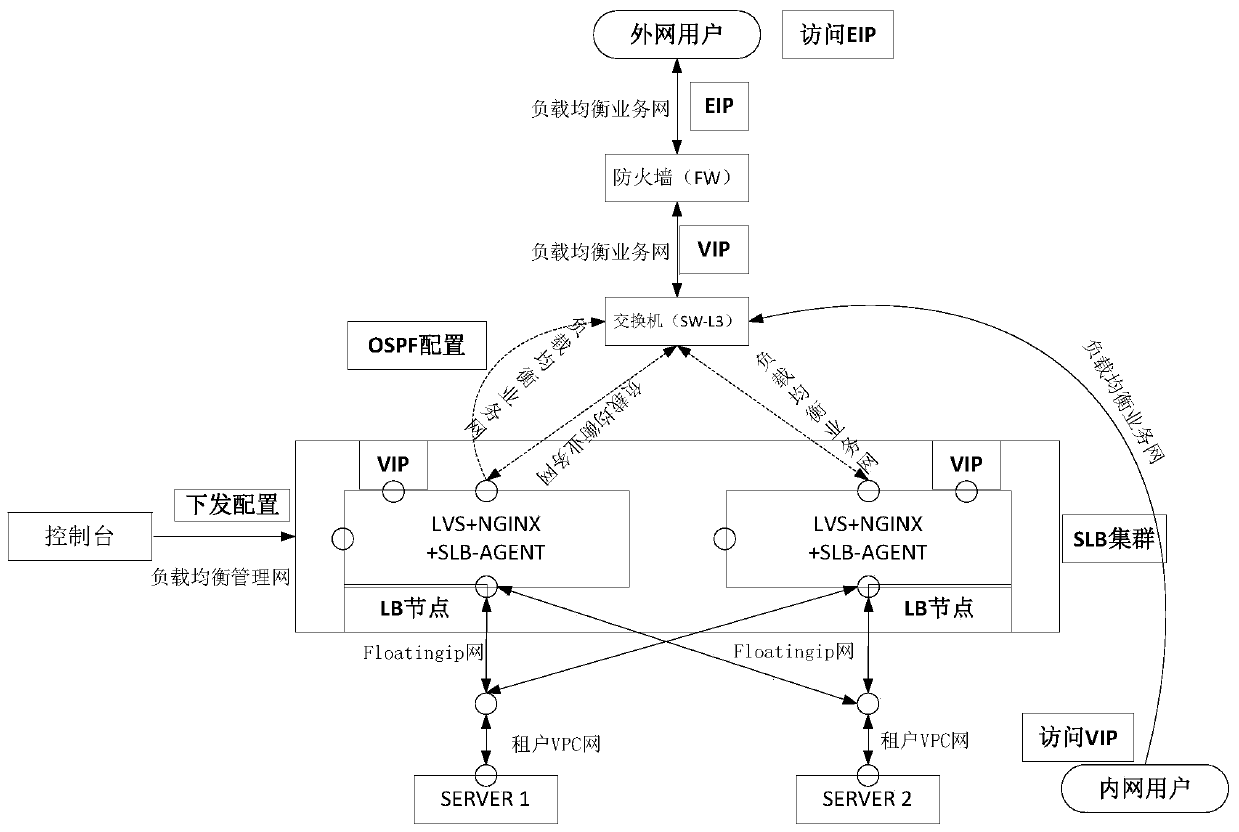

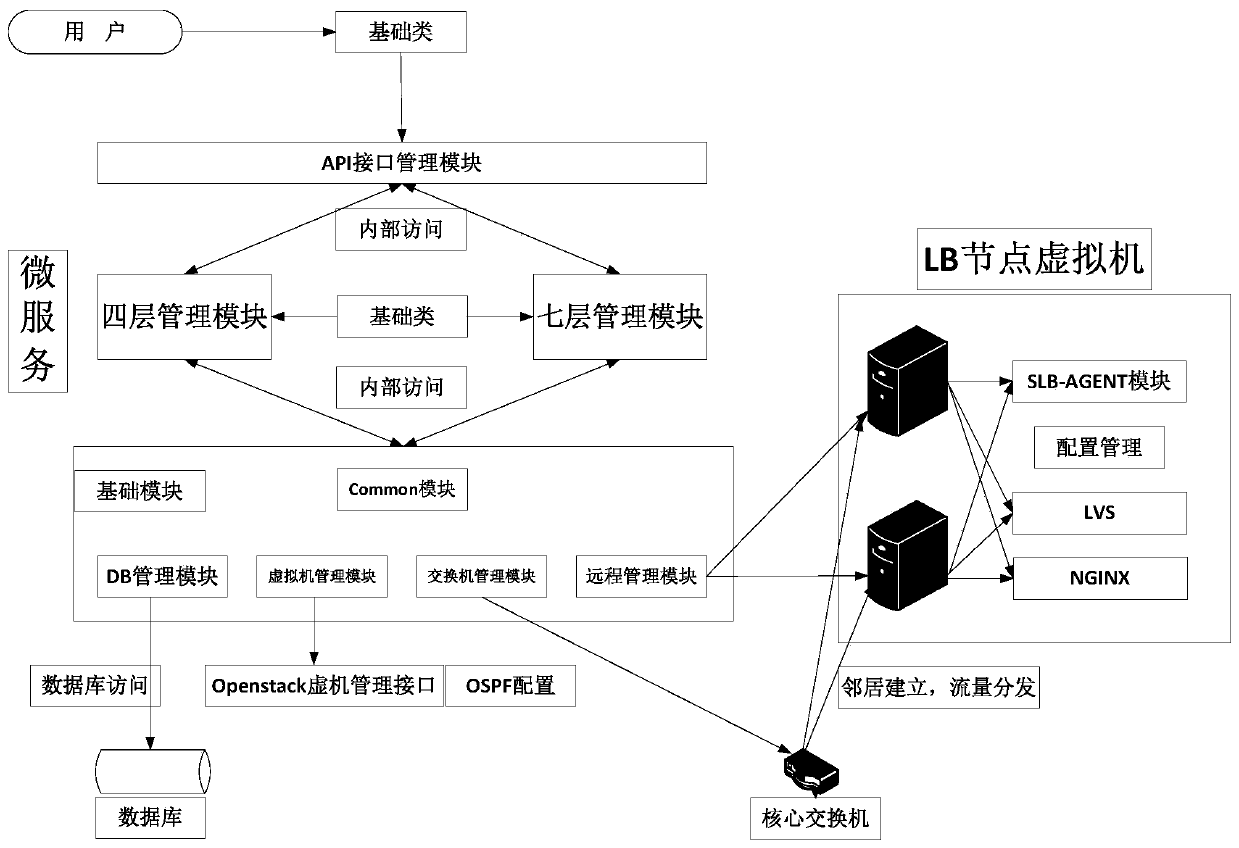

Multi-live load balancing method and system applied to openstack cloud platform

Pending | CN111274027A | Implement multi-active mode; Reduce demand; Resource allocation; Software simulation/interpretation/emulation; Resource utilization; Single point of failure

The invention discloses a multi-live (multi-active) load balancing method and system applied to an OpenStack cloud platform, belonging to the field of cloud computing PaaS. The technical problem it addresses is how to meet the requirements of both Layer 4 and Layer 7 traffic load balancing, avoid a single point of failure at the load balancing node, make maximum use of the load balancing nodes' performance, and raise the resource utilization of the physical servers on the cloud platform. The technical scheme is as follows: the open-source LVS and NGINX perform load balancing for Layer 4 and Layer 7 traffic respectively; multi-path selection is achieved through cooperation between the open-source Quagga virtual router running the OSPF protocol and a physical switch that supports OSPF; this realizes a multi-active mode, and communication with virtual machines in a tenant VPC takes place through an external network shared by tenants on the OpenStack platform, enabling cross-VPC load balancing. The system uses a microservice software architecture comprising a UI layer, a back-end microservice layer, an atomic microservice layer, and a remote SLB-AGENT layer.

Owner:山东汇贸电子口岸有限公司 (Shandong Huimao Electronic Port Co., Ltd.)

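The multi-active idea relies on routers spreading flows over several equal-cost paths, one per load balancer node, so no single node is a point of failure. The Python sketch below models that behaviour with a simple modulo-N hash over the flow 5-tuple; this is an illustrative assumption, since real switches and Quagga/Linux routing use their own hash functions, and the node names and `healthy_routes` helper are hypothetical. Withdrawing a failed node's OSPF route is modelled as filtering it out of the next-hop list.

```python
import hashlib
from typing import Callable, List, Sequence, Tuple

FiveTuple = Tuple[str, int, str, int, str]  # (src_ip, src_port, dst_ip, dst_port, proto)


def ecmp_next_hop(flow: FiveTuple, next_hops: Sequence[str]) -> str:
    """Pick one of several equal-cost load balancer nodes for a flow (modulo-N hashing)."""
    if not next_hops:
        raise ValueError("no healthy load balancer nodes")
    # Stable hash of the 5-tuple so every packet of a flow reaches the same LVS/NGINX node
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return next_hops[int.from_bytes(digest[:8], "big") % len(next_hops)]


def healthy_routes(nodes: Sequence[str], is_alive: Callable[[str], bool]) -> List[str]:
    # When OSPF stops hearing from a node its route is withdrawn; modelled here as a filter
    return [n for n in nodes if is_alive(n)]
```

Modulo-N hashing keeps each flow pinned while the node set is stable, but a change in the number of nodes remaps many flows; consistent or rendezvous hashing would reduce that churn at the cost of extra complexity.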
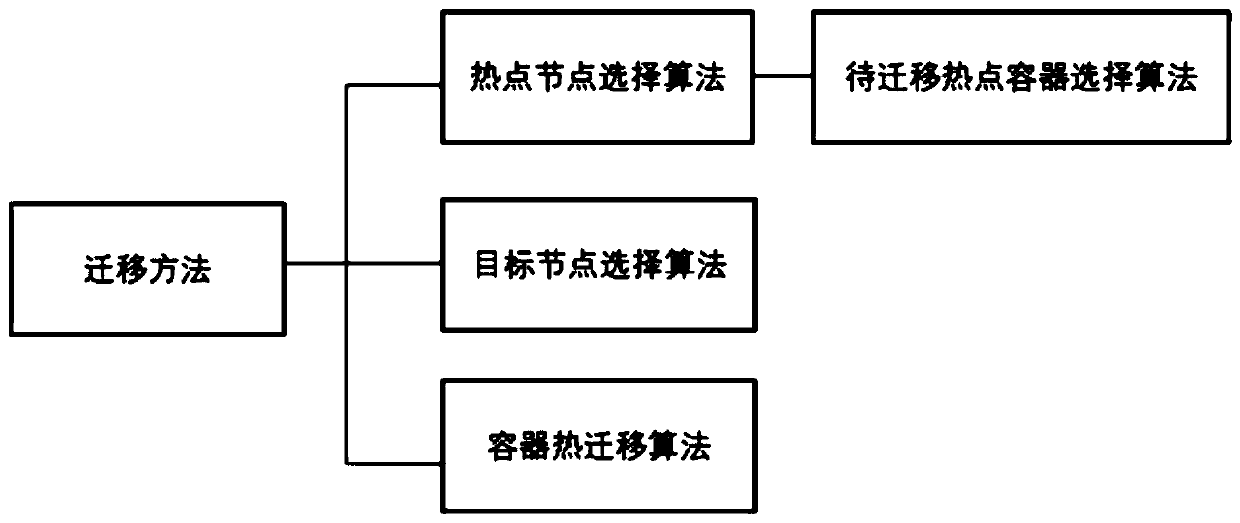

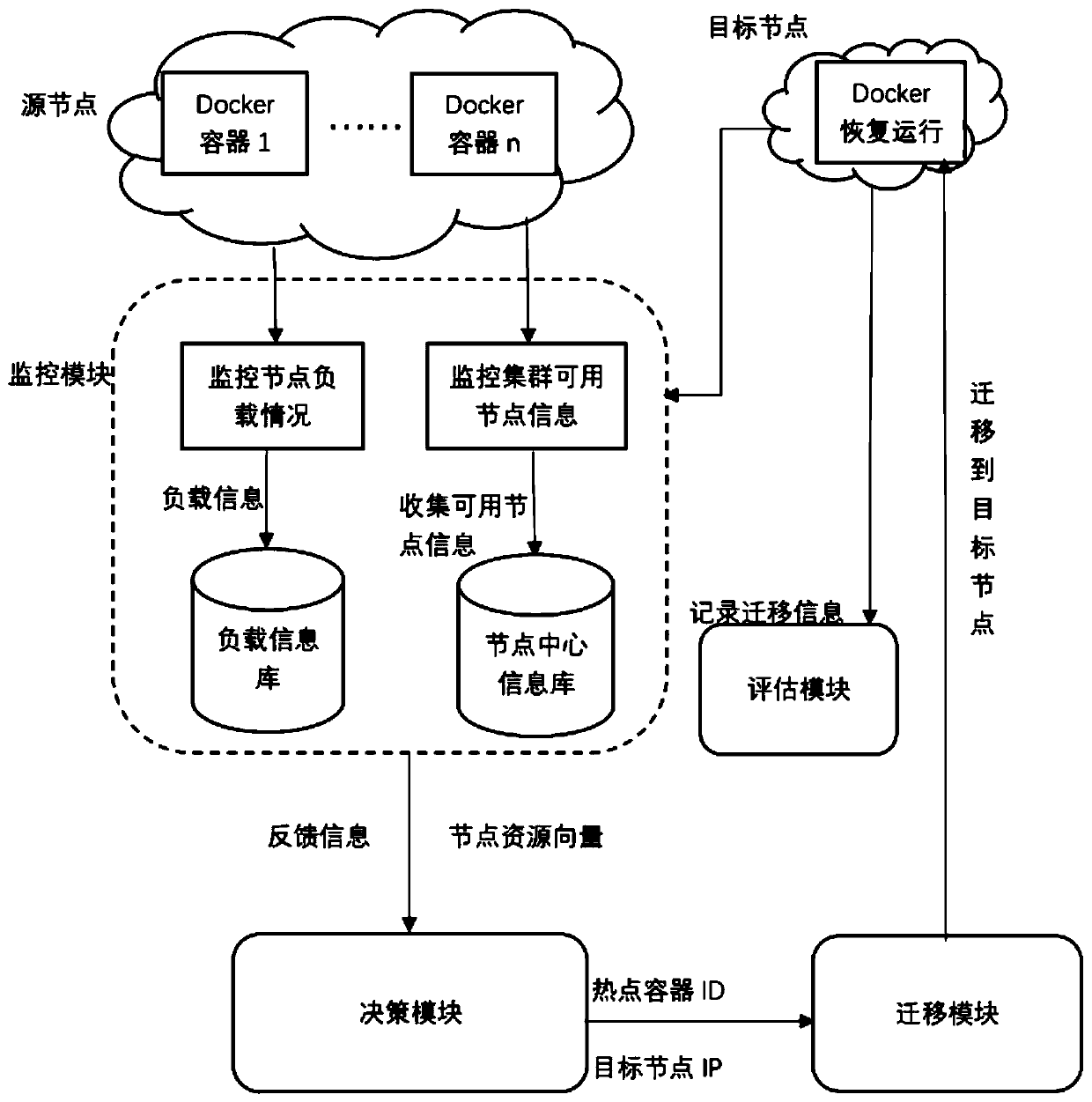

Docker migration method and system for cloud data center

Active | CN111190688A | Reduce losses; Improve reliability; Program initiation/switching; Energy efficient computing; Engineering; Algorithm Selection

The invention discloses a Docker migration method and system for a cloud data center. Load information for every physical node in the cluster is collected and quantified. When monitoring shows that a node's load has reached a set threshold, or that a container has been suspended because of a fault, feedback information is generated and a hotspot container selection algorithm picks a container to migrate from the overloaded or idle node. Affinity matching is then performed between the resource demand vector of that container and the remaining resources of the other nodes to select a set of target nodes. Within this set, an optimal target node is chosen by a probabilistic selection algorithm, and the container is migrated to it and resumes execution. The migration method is designed around the principles of minimum migration volume, shortest migration time, and lowest energy consumption, so it can effectively reduce cluster losses caused by the failure of a computing node, improve the reliability and utilization of the existing cluster, achieve load balancing, and provide higher availability.

Owner:XI AN JIAOTONG UNIV

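The selection pipeline in the abstract (threshold trigger, hotspot container choice, affinity matching on resource vectors, probabilistic target choice) can be sketched in Python as below. The concrete rules are assumptions, not the patent's algorithms: node load is the utilisation of the busiest resource, the hotspot is the container with the largest normalised demand, and the target is drawn by roulette-wheel selection weighted by spare capacity. Only the overload trigger is modelled.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Sequence, Tuple


@dataclass
class Container:
    name: str
    demand: Sequence[float]  # hypothetical resource vector, e.g. (cpu_cores, mem_gb)


@dataclass
class Node:
    name: str
    capacity: Sequence[float]
    containers: List[Container] = field(default_factory=list)

    def used(self) -> List[float]:
        return [sum(c.demand[k] for c in self.containers) for k in range(len(self.capacity))]

    def residual(self) -> List[float]:
        return [cap - u for cap, u in zip(self.capacity, self.used())]

    def load(self) -> float:
        # Quantised load value: utilisation of the busiest resource dimension (assumed metric)
        return max(u / cap for u, cap in zip(self.used(), self.capacity))


def select_hotspot(node: Node) -> Container:
    # Assumed hotspot rule: the container with the largest normalised resource demand
    return max(node.containers,
               key=lambda c: sum(d / cap for d, cap in zip(c.demand, node.capacity)))


def choose_target(c: Container, candidates: List[Node], rng: random.Random) -> Optional[Node]:
    # Affinity matching: keep only nodes whose residual resources cover the demand vector
    feasible = [n for n in candidates
                if all(r >= d for r, d in zip(n.residual(), c.demand))]
    if not feasible:
        return None
    # Probabilistic (roulette-wheel) choice weighted by spare capacity left after placement
    weights = [sum(r - d for r, d in zip(n.residual(), c.demand)) + 1e-9 for n in feasible]
    return rng.choices(feasible, weights=weights, k=1)[0]


def rebalance(nodes: List[Node], threshold: float = 0.8,
              rng: Optional[random.Random] = None) -> List[Tuple[str, str, str]]:
    rng = rng or random.Random(0)
    moves = []
    for node in nodes:
        if node.containers and node.load() > threshold:
            c = select_hotspot(node)
            target = choose_target(c, [n for n in nodes if n is not node], rng)
            if target is not None:  # migrate: detach from the source, attach to the target
                node.containers.remove(c)
                target.containers.append(c)
                moves.append((c.name, node.name, target.name))
    return moves
```

Randomising among feasible targets, rather than always taking the emptiest node, is one way to avoid every overloaded node dumping its containers onto the same host in a single round.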
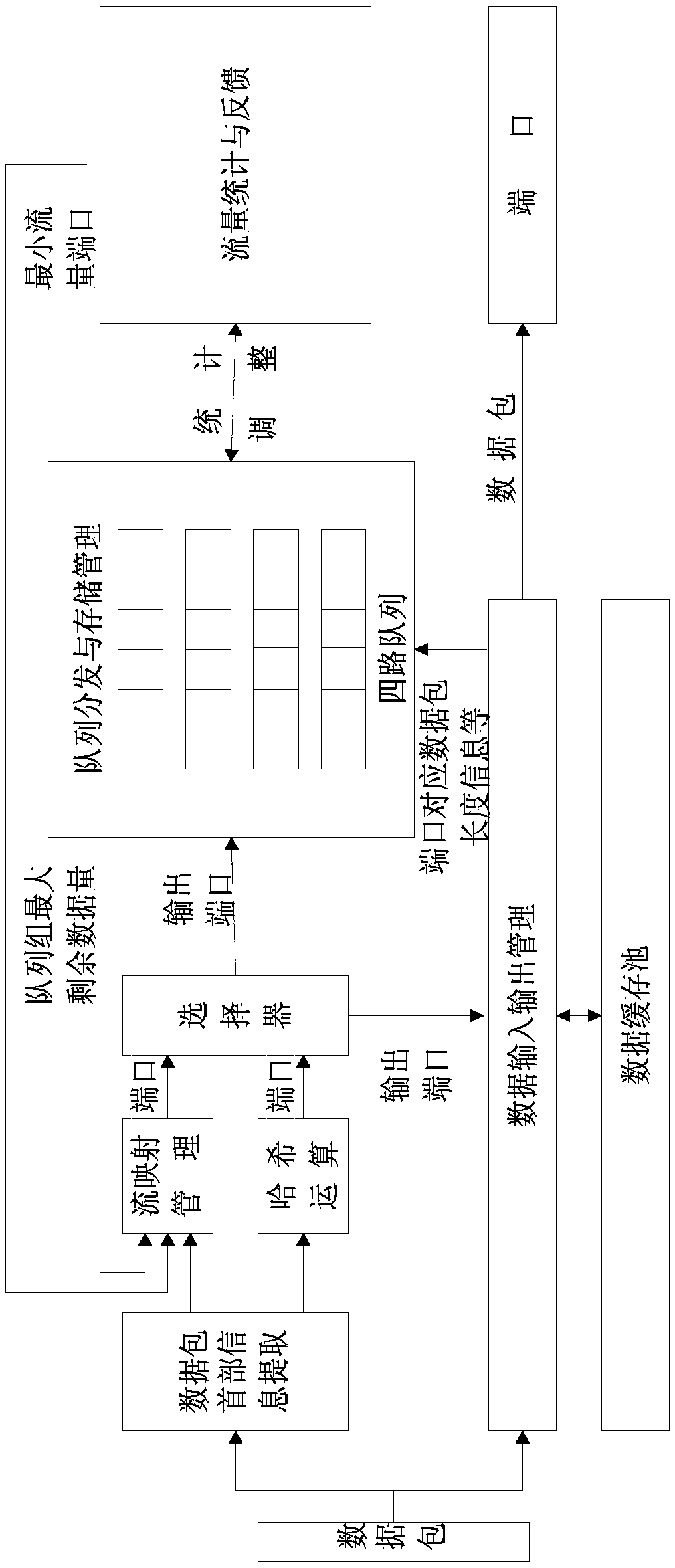

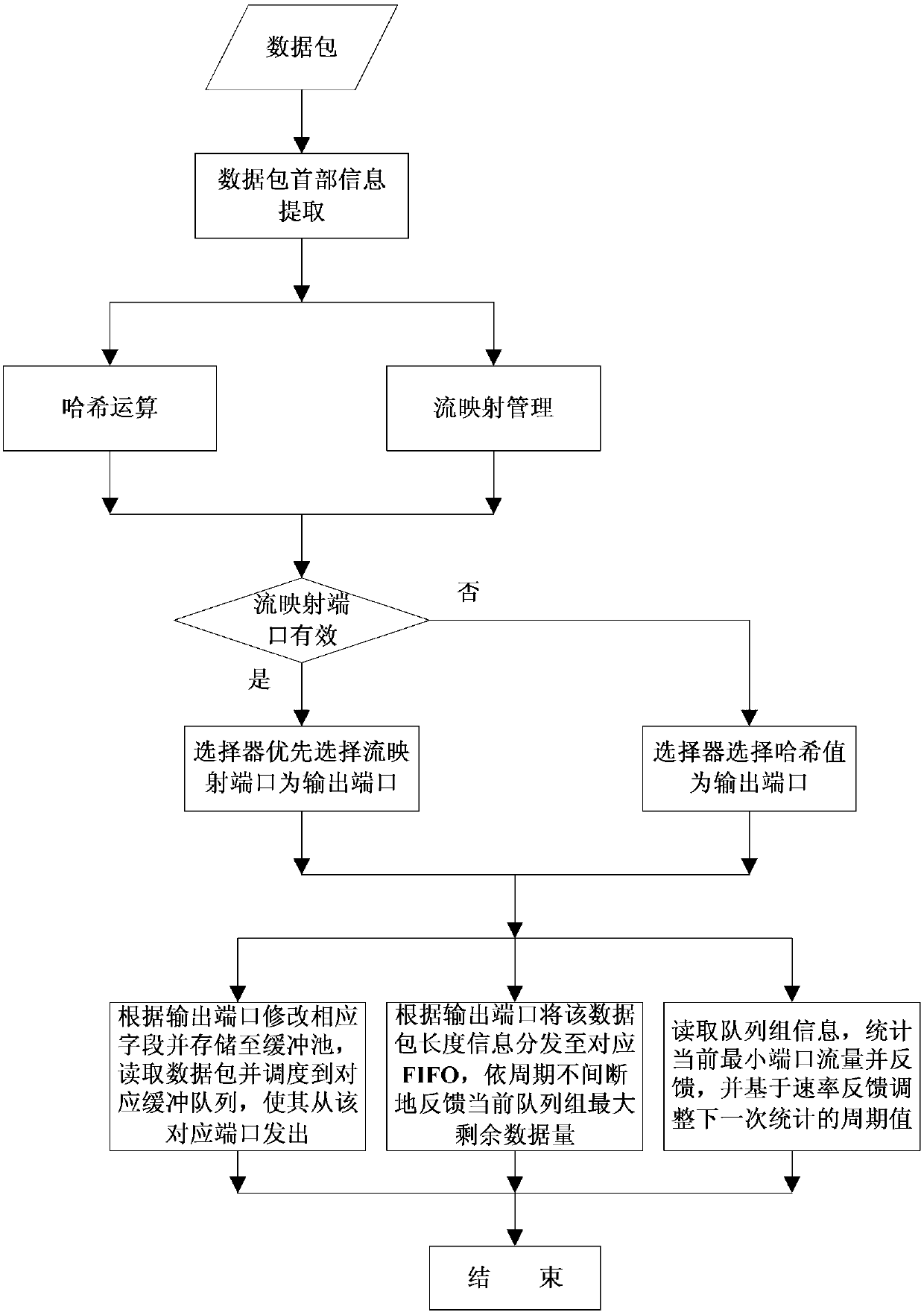

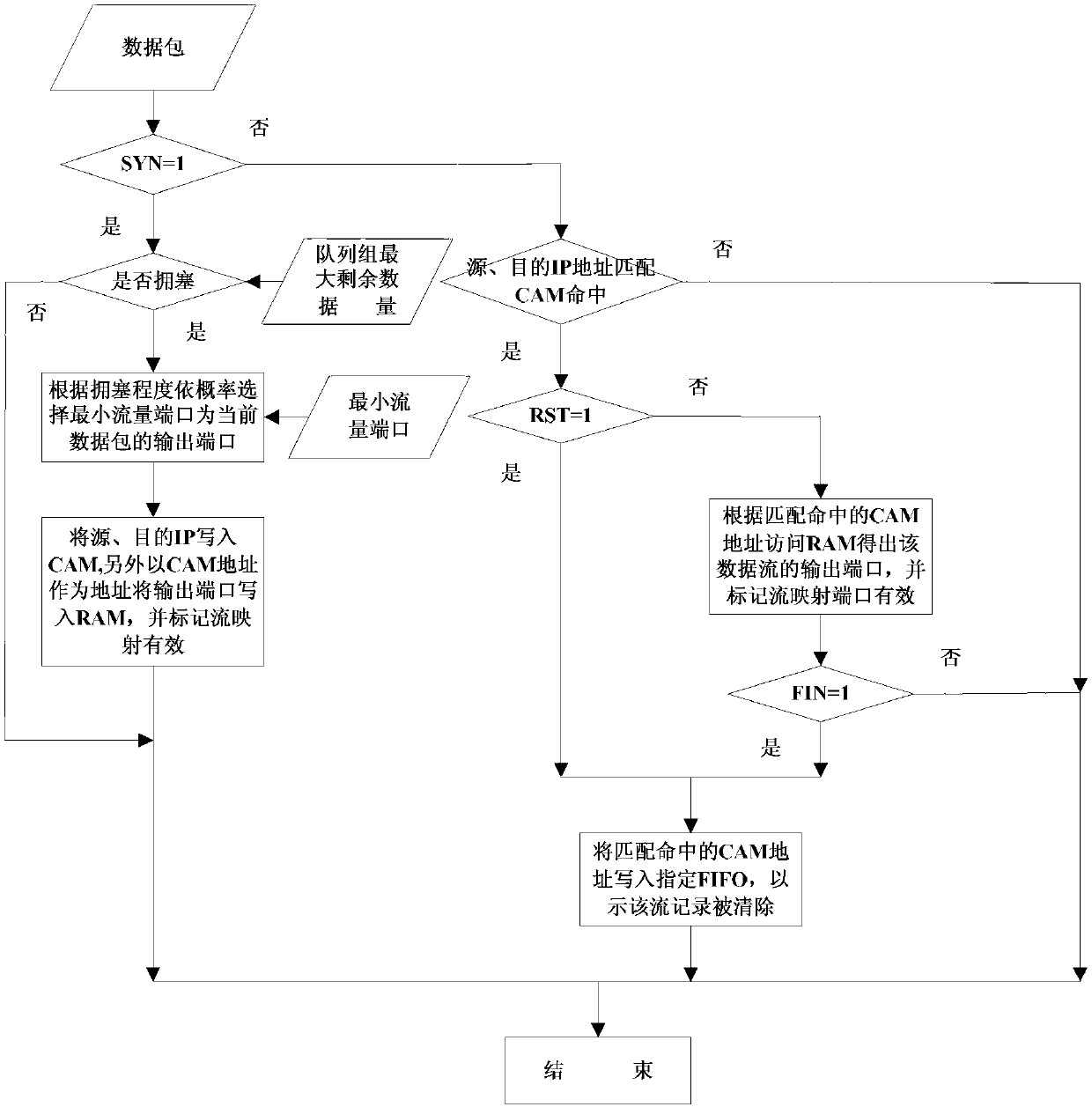

High speed network data flow load balancing scheduling method based on field programmable gate array (FPGA)

Inactive | CN103139093A | Realize instantaneous equalization; Load balancing; Data switching networks; Traffic capacity; Data stream

The invention discloses a high-speed network data flow load balancing scheduling method based on a field programmable gate array (FPGA), which makes full use of the FPGA's parallel computing capability. The front end concurrently computes a hash and probabilistically selects an output port according to the congestion level of the array groups, giving priority to the port a flow is already mapped to. The back end concurrently sends each data packet out of the corresponding physical layer (PHY) port, writing the packet's information into that port in first-in-first-out (FIFO) order. It periodically feeds back the current maximum remaining data size, counts and reports the port with the least traffic in each period, and adjusts the length of the next counting period according to the rate-feedback trend so that the period suits bursty traffic. In this way, high-speed network data flows are balanced instantaneously while flow granularity is preserved.

Owner:GUILIN UNIV OF ELECTRONIC TECH

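The front-end port selection described above can be modelled in software as follows. This Python sketch is an illustration under stated assumptions, not the patented FPGA design: CRC32 stands in for the hardware hash, congestion is measured as per-port FIFO occupancy against an assumed byte `capacity`, and a new (or displaced) flow picks a port with probability proportional to that port's free space. The adaptive counting period is not modelled.

```python
import random
import zlib
from typing import Dict, List, Optional, Tuple


class FlowBalancer:
    """Software model of hash-plus-congestion port selection (hypothetical sketch)."""

    def __init__(self, n_ports: int, capacity: int, rng: Optional[random.Random] = None):
        self.capacity = capacity                 # per-port FIFO capacity in bytes (assumed)
        self.occupancy: List[int] = [0] * n_ports
        self.flow_table: Dict[int, int] = {}     # flow hash -> mapped output port
        self.rng = rng or random.Random(0)

    @staticmethod
    def flow_hash(five_tuple: Tuple) -> int:
        # CRC32 stands in for the hardware hash unit; colliding flows share a table entry
        return zlib.crc32(repr(five_tuple).encode())

    def select_port(self, five_tuple: Tuple, size: int) -> int:
        key = self.flow_hash(five_tuple)
        port = self.flow_table.get(key)
        # The flow-mapped port has priority unless its FIFO cannot absorb this packet
        if port is None or self.occupancy[port] + size > self.capacity:
            free = [max(self.capacity - occ, 0) for occ in self.occupancy]
            if sum(free) == 0:
                # Every FIFO is full: fall back to the least-occupied port
                port = min(range(len(self.occupancy)), key=self.occupancy.__getitem__)
            else:
                # Less congested ports are proportionally more likely to be chosen
                port = self.rng.choices(range(len(free)), weights=free, k=1)[0]
            self.flow_table[key] = port
        self.occupancy[port] += size
        return port

    def drain(self, port: int, size: int) -> None:
        # The PHY side transmits `size` bytes from the port's FIFO
        self.occupancy[port] = max(self.occupancy[port] - size, 0)
```

Keeping a flow on its mapped port preserves packet order within the flow, while the congestion-weighted draw only applies when a flow is new or its port is saturated; in hardware the hash, table lookup, and weighted choice would run in parallel pipelines rather than sequentially.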

© 2025 PatSnap. All rights reserved.