Illumination-matching virtual fitting method, device, and storage medium

A virtual fitting and light-intensity technology, applied in neural learning methods, 3D image processing, image data processing, and related fields. It addresses problems such as poor time control in clothing model reconstruction, complex calculation methods and principles, and unsuitability for virtual dressing applications, and it achieves a high degree of realism.

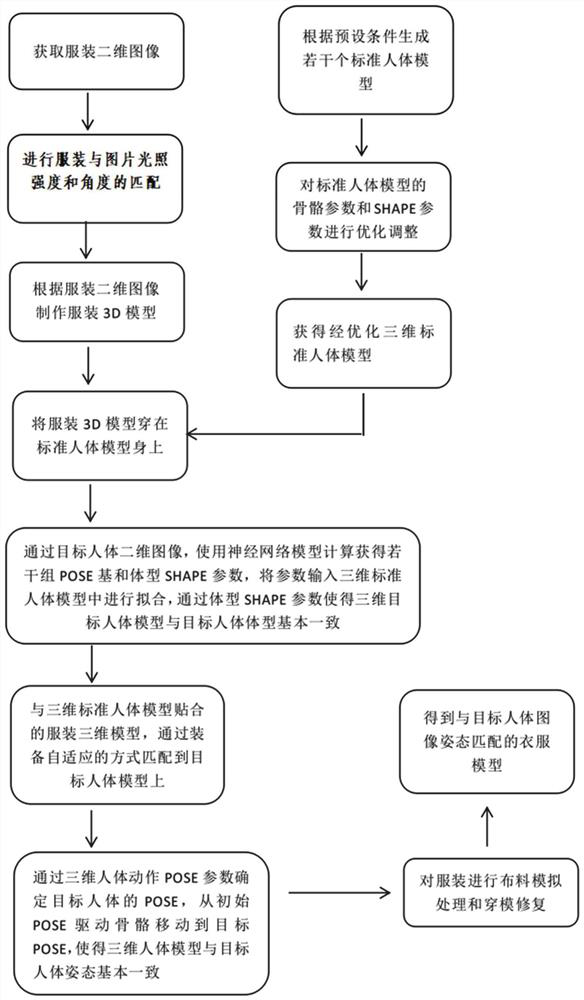

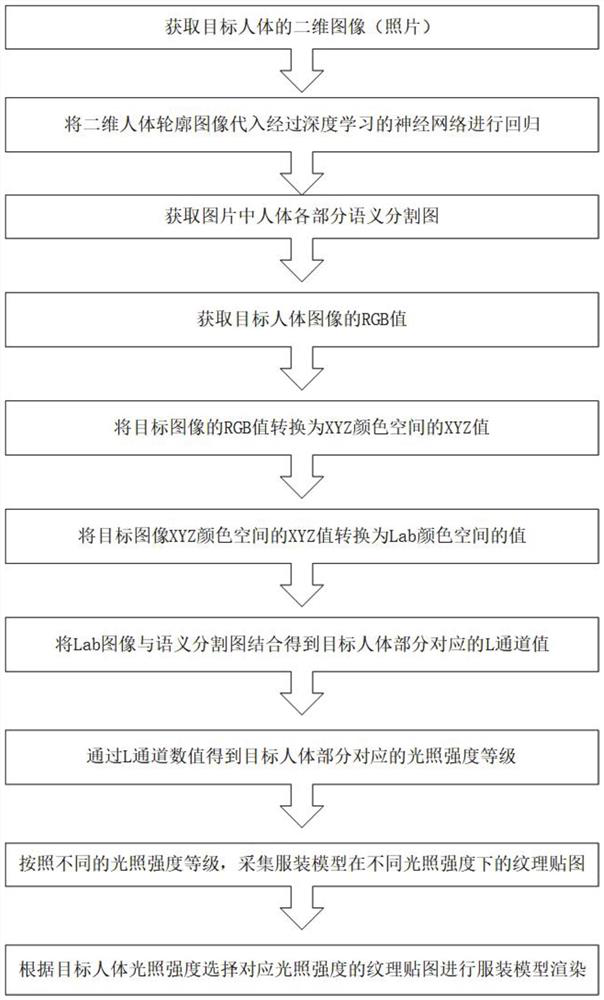

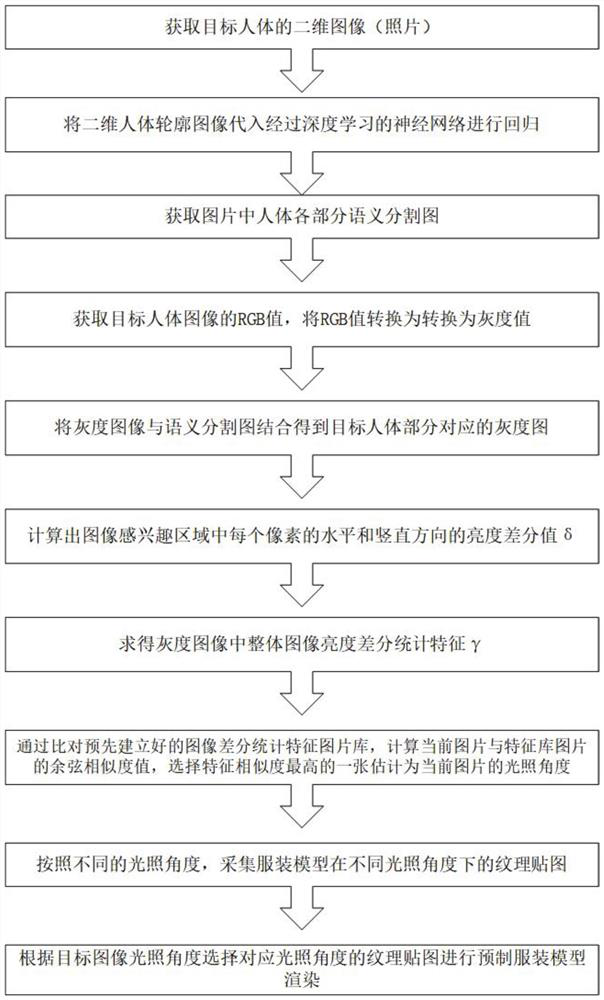

Embodiment Construction

[0040] The features and exemplary embodiments of various aspects of the present invention are described in detail below. To make the purpose, technical solutions, and advantages of the present invention clearer, the invention is further described in detail below with reference to the accompanying drawings and embodiments. It should be understood that the specific embodiments described here are intended only to explain the present invention, not to limit it. It will be apparent to one skilled in the art that the present invention may be practiced without some of these specific details. The following description of the embodiments is provided only to give a better understanding of the present invention by showing examples of it.

[0041] It should be noted that, herein, relational terms such as "first" and "second" are used only to distinguish one entity or operation from another entity or operation, and d...
