Method and device for extraction of user portrait labels for cold chain stowage based on multimodality

A multimodal cold-chain technology, applied in fields such as character and pattern recognition, biological neural network models, and special data processing applications. It targets three problems: the semantic differences between features of different modalities being ignored, features that are difficult to work with, and limited prior research.

Embodiment Construction

[0067] The present invention is further described below with reference to the accompanying drawings. The following examples serve only to illustrate the technical solutions of the present invention more clearly and are not intended to limit its scope of protection.

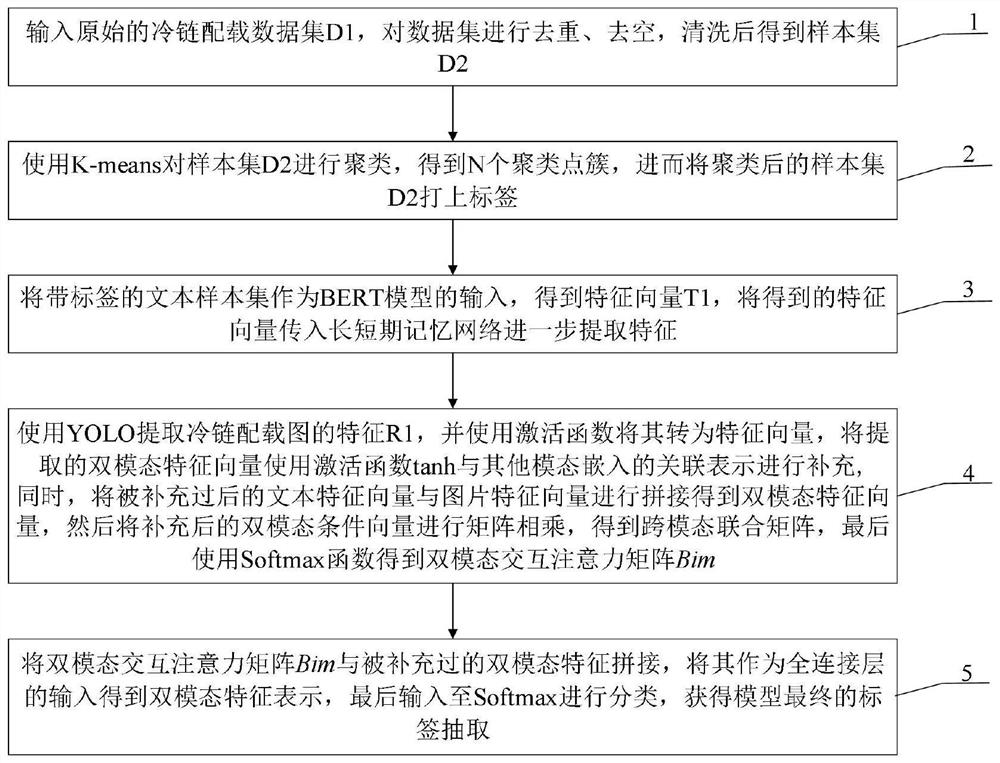

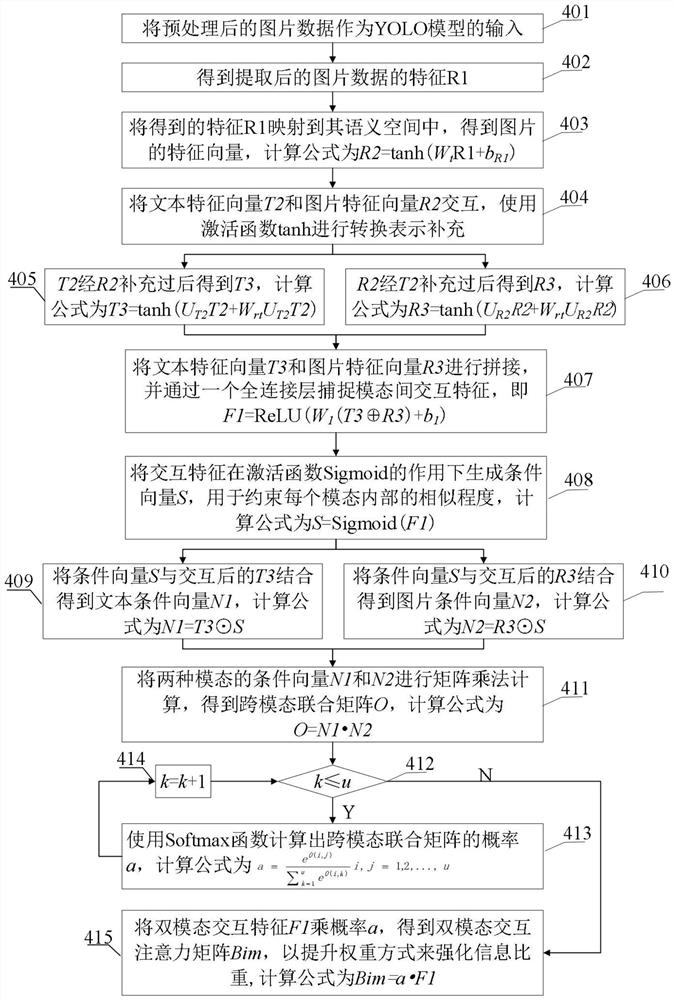

[0068] The invention discloses a method and a device for extracting user portrait labels for cold chain stowage based on multimodality.

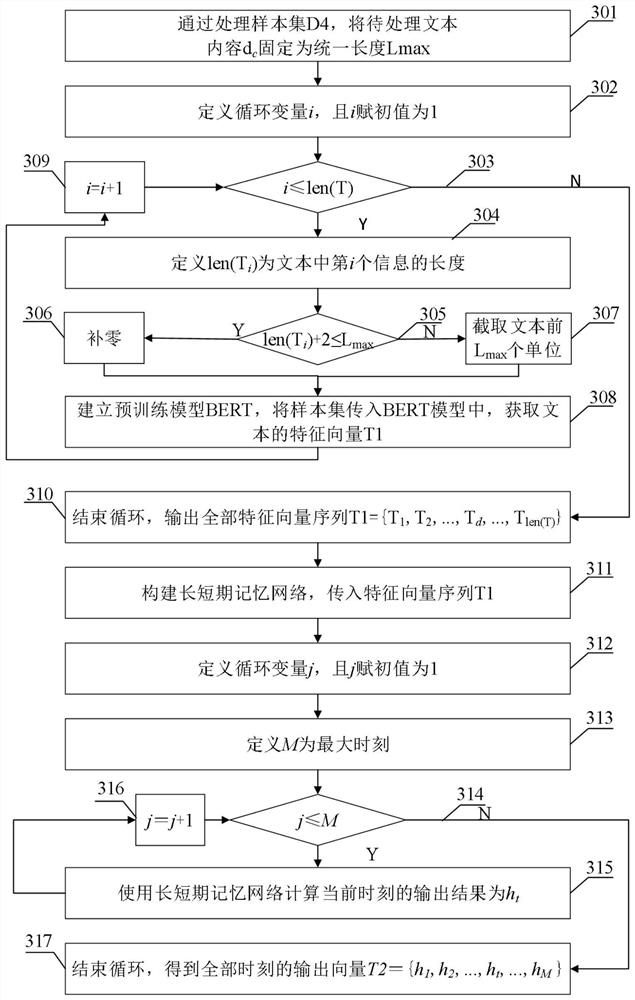

[0069] Step 1: Input the original cold chain stowage data set D1, remove duplicate and empty records from it, and obtain the cleaned sample set D2:

[0070] Step 1.1: Define Data as a single record to be cleaned, and define id and content as the serial number and content of that record, such that Data={id, content};

[0071] Step 1.2: Define D1 as the data set to be cleaned, D1={Data_1, Data_2, …, Data_a, …, Data_len(D1)}, where Data_a is the a-th record to be cleaned in D1, len(D1) is the number of records in D1, and the variable ...
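The cleaning in Step 1 can be illustrated with a minimal Python sketch. The source text is truncated before it states the exact cleaning rules, so the details here are assumptions: the `Data` class and the `clean` function are hypothetical names, a record counts as empty when its content is missing or only whitespace, and duplicates are detected by comparing stripped content, keeping the first occurrence.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Data:
    """One record to be cleaned: Data = {id, content} (Step 1.1)."""
    id: int
    content: Optional[str]


def clean(d1: List[Data]) -> List[Data]:
    """Return sample set D2: D1 without empty or duplicate records.

    Assumption: duplicates are judged by content only, and the
    first occurrence of each content value is the one retained.
    """
    seen = set()
    d2 = []
    for record in d1:
        text = (record.content or "").strip()
        if not text:
            continue  # drop empty records
        if text in seen:
            continue  # drop records whose content was already seen
        seen.add(text)
        d2.append(record)
    return d2


if __name__ == "__main__":
    # Illustrative data only; not taken from the patent.
    d1 = [
        Data(1, "frozen fish, 2 t"),
        Data(2, ""),
        Data(3, "frozen fish, 2 t"),
        Data(4, "vaccines"),
    ]
    for record in clean(d1):
        print(record.id, record.content)
```

Keeping the original `id` of each surviving record, rather than renumbering, makes it possible to trace entries in D2 back to D1; whether the patent renumbers the cleaned set is not visible in the truncated text.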
