Large-scale multi-operation floating point matrix calculation acceleration implementation method and device

A floating-point matrix technology and implementation method, applied in computing, complex mathematical operations, instruments, etc. It addresses problems such as insufficient structural reusability, heavy consumption of logic resources, and increased area, and achieves flexible use, high computing efficiency, and low resource consumption.



Detailed Description of the Embodiments

[0037] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

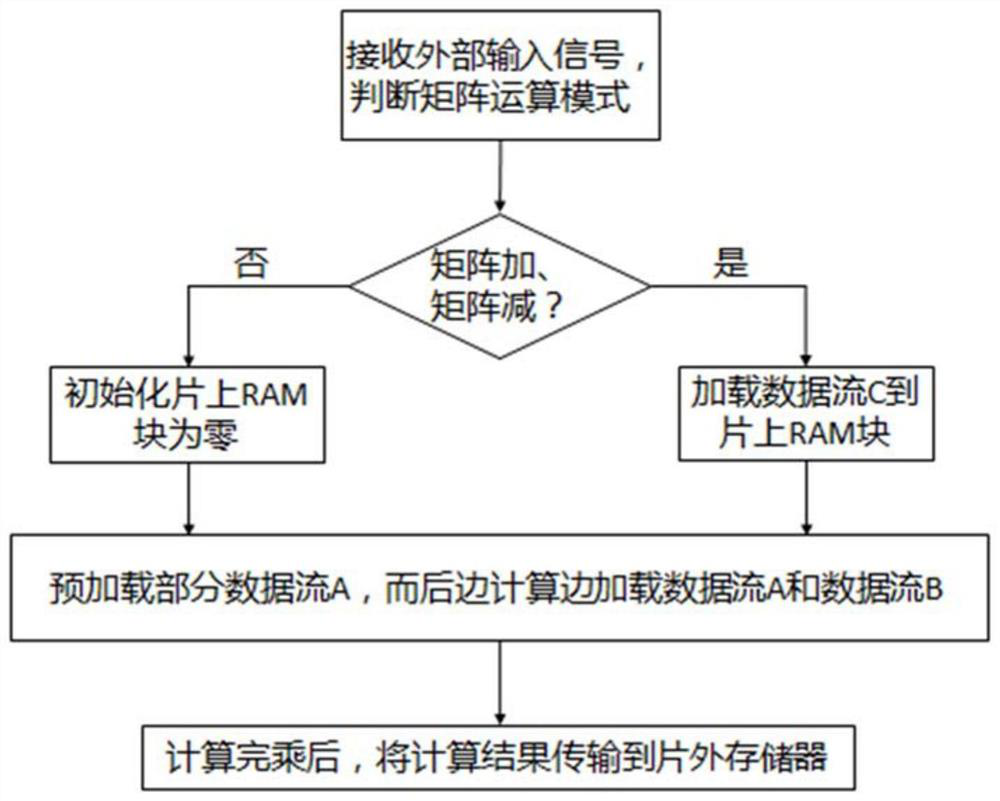

[0038] As shown in Figure 1, the large-scale multi-operation floating-point matrix calculation acceleration method of the present invention comprises the following steps:

[0039] Step S1: Receive an external input signal and, according to the operation type of the matrix to be processed, determine the matrix operation mode: when the operation mode is matrix addition or matrix subtraction, go to step S3; when the operation mode is matrix multiplication, matrix-vector multiplication, or matrix-scalar multiplication, go to step S2;

[0040] Step S2: Initialize the on-chip RAM to zero, and proceed to step S4;

[0041] Step S3: Load the data source C into the on-chip RAM through the RAM channel, and proceed to step S4;

[0042] Step S4: Preload part of data stream A through the RAM channel, and then load data stream A and...
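The mode dispatch in steps S1 to S3 can be illustrated with a small software sketch. This is not the patented hardware design: the names `Mode` and `init_on_chip_ram` are hypothetical, the on-chip RAM is modeled as a plain list of lists, and step S4 is left out because its description is truncated in this text.

```python
from enum import Enum, auto


class Mode(Enum):
    """Operation modes named in step S1 (identifiers are hypothetical)."""
    ADD = auto()
    SUB = auto()
    MATMUL = auto()
    MATVEC = auto()
    MATSCALAR = auto()


def init_on_chip_ram(mode, rows, cols, c=None):
    """Return the initial RAM contents chosen by steps S1 to S3.

    The RAM is modeled as a rows x cols list of lists; the real device
    uses on-chip RAM filled through a RAM channel.
    """
    if mode in (Mode.ADD, Mode.SUB):
        # Step S3: addition and subtraction start from data source C.
        if c is None:
            raise ValueError("data source C is required for add/sub modes")
        return [list(row) for row in c]
    # Step S2: the multiplication modes start from an all-zero RAM.
    return [[0.0] * cols for _ in range(rows)]


if __name__ == "__main__":
    print(init_on_chip_ram(Mode.MATMUL, 2, 2))
    print(init_on_chip_ram(Mode.ADD, 1, 2, c=[[1.0, 2.0]]))
```

In this sketch both branches return a RAM image of the same shape, which mirrors how steps S2 and S3 both hand off to step S4.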
