Multi-dimensional measurement method for obtaining target form

A multi-dimensional measurement method for obtaining a target's form, applied in the field of measuring devices, instruments, and optical devices. It addresses the large number of measurement points and heavy computation, the long calculation time, and the resulting difficulty of real-time measurement and application, with the effects of widening the scope of application, lowering physical hardware complexity, and improving measurement accuracy.

Examples

Embodiment 1

[0029] A multi-dimensional measurement method for obtaining target morphology, comprising:

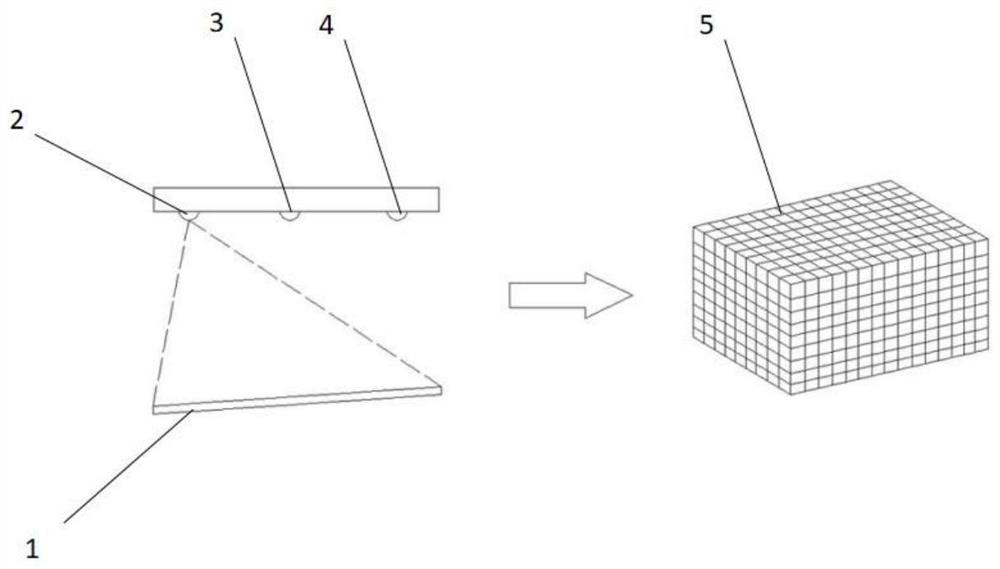

[0030] S1. Use the measuring device to perform a dot-matrix measurement of the bearing surface on which the target to be measured is located, and convert the measured bearing surface according to the conversion model to form a virtual background measurement space.
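The source does not specify the conversion model used in S1. Embodiment 2 refers to the "distance and angle" of each original measurement point, so the sketch below assumes, purely for illustration, that the measuring device reports a distance plus an azimuth and an elevation angle for each dot-matrix point, and that the conversion model is the standard spherical-to-Cartesian transform with the device at the origin. The function name `to_cartesian` is hypothetical.

```python
import numpy as np

def to_cartesian(distance, azimuth, elevation):
    """Assumed conversion model (not from the source): spherical reading -> (x, y, z).

    distance, azimuth, elevation: arrays of equal shape (one entry per
    dot-matrix point), angles in radians, measuring device at the origin.
    Returns an array with a trailing axis of length 3.
    """
    distance = np.asarray(distance, dtype=float)
    azimuth = np.asarray(azimuth, dtype=float)
    elevation = np.asarray(elevation, dtype=float)
    horizontal = distance * np.cos(elevation)  # range projected onto the x-y plane
    x = horizontal * np.cos(azimuth)
    y = horizontal * np.sin(azimuth)
    z = distance * np.sin(elevation)
    return np.stack([x, y, z], axis=-1)
```

Calling it on the full grid of readings taken from the empty bearing surface yields a `(rows, cols, 3)` array that can stand in for the virtual background measurement space.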

[0031] S2. Select a virtual measurement surface in the virtual background measurement space, and derive correction parameters for each dot-matrix point on the bearing surface relative to the virtual measurement surface.
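For S2, the sketch below assumes that the virtual measurement surface is a least-squares plane fitted to the background points and that a point's correction parameter is its vertical offset from that plane. Both choices, and the names `fit_plane` and `correction_parameters`, are illustrative assumptions rather than the patent's definitions.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane z = a*x + b*y + c through the given points.

    Assumption: the virtual measurement surface is modelled as this plane.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    design = np.column_stack([pts[:, 0], pts[:, 1], np.ones(len(pts))])
    coeffs, *_ = np.linalg.lstsq(design, pts[:, 2], rcond=None)
    return coeffs  # (a, b, c)

def correction_parameters(background, plane):
    """Per-point vertical offset of the background grid from the plane.

    background: (rows, cols, 3) array produced by the conversion model.
    Returns a (rows, cols) array; subtracting it maps each point onto the plane.
    """
    a, b, c = plane
    bg = np.asarray(background, dtype=float)
    plane_z = a * bg[..., 0] + b * bg[..., 1] + c
    return bg[..., 2] - plane_z
```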

[0032] S3. When the target to be measured is recognized as appearing on the bearing surface, use the measuring device to perform a dot-matrix measurement of both the target and the bearing surface, and according to the conversion model described in S1 and the correction pa...
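S3 is truncated in the source, so only its stated inputs (the S1 conversion model and the S2 correction parameters) are certain. The sketch below assumes the target scan has already been converted with the same model and the S2 plane is available; it removes the plane and each point's background correction at the same dot-matrix index, and, as a further assumption, flags points above a height threshold as belonging to the target. Reusing the background correction at the same index ignores the small lateral shift of a ray that hits the target instead of the bearing surface. `target_heights` and `threshold` are hypothetical.

```python
import numpy as np

def target_heights(target_points, plane, corrections, threshold=0.0):
    """Hypothetical S3 step: height of each dot-matrix point above the
    virtual measurement surface after removing the background's own offsets.

    target_points: (rows, cols, 3) grid converted with the S1 model.
    plane: (a, b, c) of the assumed planar virtual measurement surface.
    corrections: (rows, cols) offsets derived from the empty bearing surface.
    Returns (heights, mask); mask marks points higher than `threshold`
    (an assumed segmentation rule, not stated in the source).
    """
    a, b, c = plane
    pts = np.asarray(target_points, dtype=float)
    plane_z = a * pts[..., 0] + b * pts[..., 1] + c
    heights = pts[..., 2] - plane_z - np.asarray(corrections, dtype=float)
    return heights, heights > threshold
```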

Embodiment 2

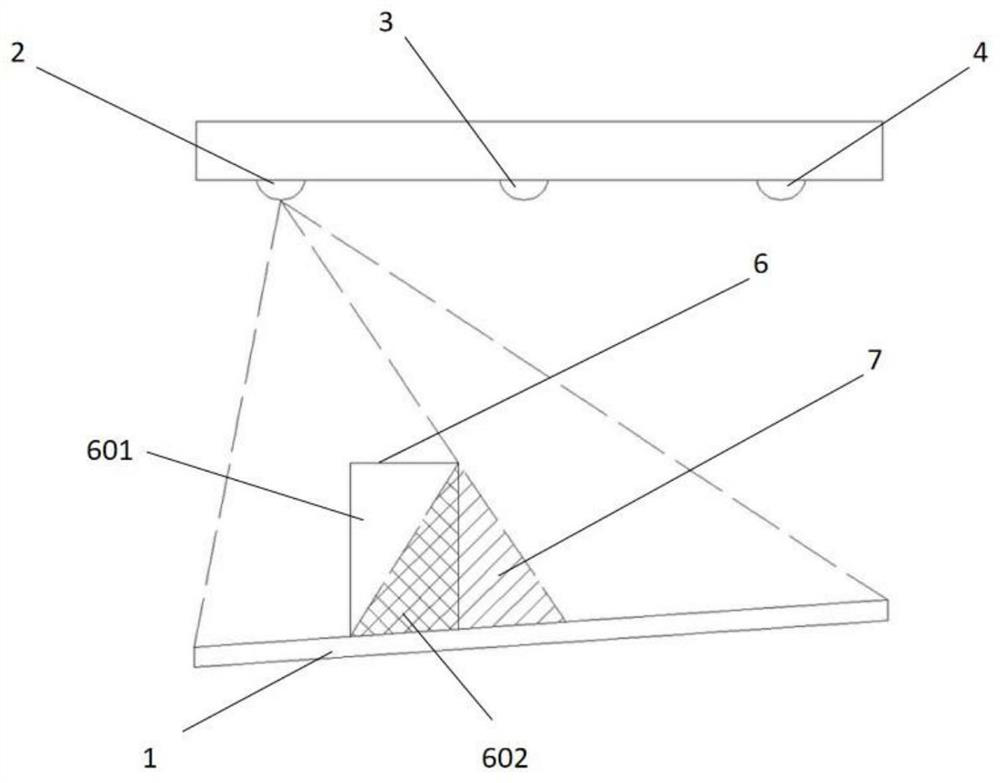

[0045] Based on the multi-dimensional measurement method for obtaining the target form described in Embodiment 1, the virtual target measurement space is anchored as a virtual space that coincides with each virtual dot-matrix point of the virtual background measurement space as follows. First, the part of the virtual target measurement space and the virtual background measurement space in which the distance and angle of the original measurement points overlap is taken as the anchor point. Each virtual measurement matrix point in the virtual target measurement space is then compared with the virtual matrix point at the corresponding position in the virtual background measurement space. Wherever a position error occurs, the position data of the virtual measurement matrix point is corrected based on the position data of the corresponding virtual matrix point. After the position data of all virtual measurement matrix points has been corrected, it is determined that the action is...
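One possible reading of the anchoring step in Embodiment 2 is sketched below. Anchor points are dot-matrix points whose original (distance, angle) readings agree in both scans, that is, places where the bearing surface is still visible. As an assumption not stated in the source, the sketch first aligns the two virtual spaces with a single translation estimated from the anchor pairs, then replaces any remaining anchor position error with the background position. `anchor_target_space` and `tol` are hypothetical names.

```python
import numpy as np

def anchor_target_space(target_raw, background_raw, target_xyz, background_xyz,
                        tol=1e-3):
    """Hypothetical anchoring of the virtual target space to the background.

    *_raw: (rows, cols, 2) original (distance, angle) readings.
    *_xyz: (rows, cols, 3) virtual points from the conversion model.
    Returns (corrected target points, boolean anchor mask).
    """
    tr = np.asarray(target_raw, dtype=float)
    br = np.asarray(background_raw, dtype=float)
    txyz = np.asarray(target_xyz, dtype=float)
    bxyz = np.asarray(background_xyz, dtype=float)

    # Anchor points: the original readings agree in both scans within `tol`.
    anchors = np.all(np.abs(tr - br) <= tol, axis=-1)
    if not anchors.any():
        raise ValueError("no anchor points: the scans share no unchanged readings")

    # Assumption: a translation-only alignment estimated from the anchor pairs.
    offset = (bxyz[anchors] - txyz[anchors]).mean(axis=0)
    corrected = txyz + offset

    # Remaining position error at the anchors: adopt the background position.
    corrected[anchors] = bxyz[anchors]
    return corrected, anchors
```

A translation-only fit is the simplest alignment that makes the anchors coincide; if the device could also rotate between scans, a rigid fit with rotation (for example the Kabsch algorithm) would be the natural extension.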

Embodiment 3

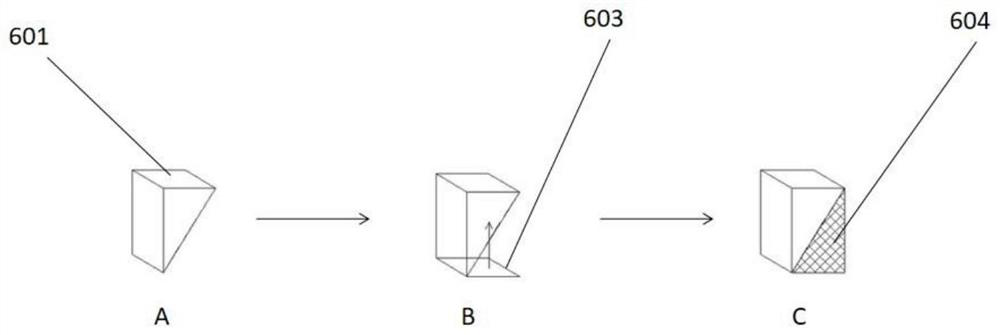

[0047] Based on the multi-dimensional measurement method for obtaining the target form described in Embodiment 1, the unmeasured surface of the measurement target is filled with data according to the projection structure as follows. First, in the virtual background measurement space, a virtual form model-measuring surface of the measurement target, facing the fast distance measuring device, is formed from the acquired virtual measurement matrix point data. Then, according to the relative positional relationship between the projection device and the fast distance measuring device, the projection structure is moved to the position where it fits the virtual form model-measuring surface. Next, the size of the projection structure is adjusted so that it overlaps the virtual form model-measuring surface to the greatest extent. Afterwards, data points are supplemented with the structural boundary of the projected structure in the f...
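The fitting part of Embodiment 3 (place the projection structure on the measured surface, then resize it for maximum overlap) can be sketched on 2D occupancy grids. The following are assumptions: the measured surface and the projection structure are boolean masks, placement centres the structure on the measured footprint's centroid instead of using the actual pose between the projection device and the fast distance measuring device, and "greatest overlap" is scored as intersection-over-union over a list of candidate scales. The data-filling step is cut off in the source, so the sketch stops at the fitted structure. All names are hypothetical.

```python
import numpy as np

def _centroid(mask):
    """Mean (row, col) of the True cells of a boolean mask."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("empty mask has no centroid")
    return np.array([rows.mean(), cols.mean()])

def place_structure(structure, shape, center, scale):
    """Resample `structure` into a grid of `shape`, scaled by `scale` about its
    own centroid and centred on `center` (nearest-neighbour inverse mapping)."""
    src_c = _centroid(structure)
    rr, cc = np.indices(shape)
    # For every output cell, find the structure cell it maps back to.
    sr = np.rint(src_c[0] + (rr - center[0]) / scale).astype(int)
    sc = np.rint(src_c[1] + (cc - center[1]) / scale).astype(int)
    inside = ((sr >= 0) & (sr < structure.shape[0])
              & (sc >= 0) & (sc < structure.shape[1]))
    out = np.zeros(shape, dtype=bool)
    out[inside] = structure[sr[inside], sc[inside]]
    return out

def fit_projection(measured, structure, scales):
    """Assumed fit: centre the structure on the measured footprint, then keep
    the candidate scale with the largest intersection-over-union (IoU).

    Returns (iou, scale, placed_mask); ties keep the earlier scale.
    """
    measured = np.asarray(measured, dtype=bool)
    structure = np.asarray(structure, dtype=bool)
    center = _centroid(measured)  # stand-in for the real device-to-device pose
    best = (-1.0, None, None)
    for s in scales:
        placed = place_structure(structure, measured.shape, center, s)
        union = np.logical_or(measured, placed).sum()
        iou = np.logical_and(measured, placed).sum() / union if union else 0.0
        if iou > best[0]:
            best = (float(iou), s, placed)
    return best
```

An exhaustive search over a short list of scales keeps the example transparent; a finer or continuous optimiser could replace the loop without changing the overlap criterion.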
