Vehicle-road cooperative sensing and data fusion method, medium, and automatic driving system

A vehicle-road cooperation and data fusion technology, applicable to road network navigators, navigation, instruments, and related fields. It addresses three problems: the combined effect of cooperative perception and data fusion at the vehicle side and the road side is difficult to bring to an industrial level; communication between the road side and the vehicle side introduces delay; and existing two-end data fusion algorithms are not mature enough to meet the needs of industrial applications. The stated effects are resistance to bad weather and adverse road conditions, stable application performance, and fast sensing.


Examples

Embodiment 1

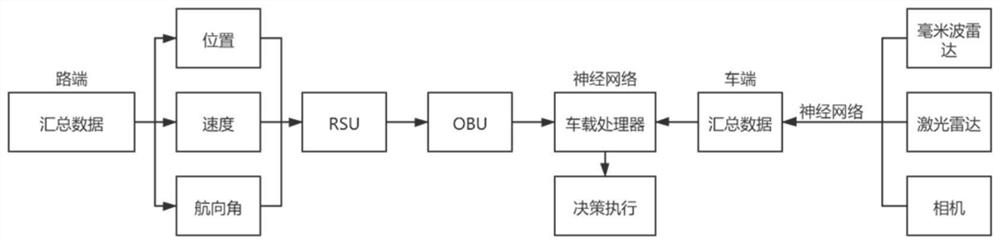

[0032] This embodiment provides a vehicle-road cooperative sensing and data fusion method, which is applied to a vehicle-mounted processor and includes the following steps:

[0033] 1) Obtain data from multiple sensors and fuse it with a neural network algorithm to obtain the first summary data, which serves as the vehicle-side summary data. The sensors include a vehicle-side millimeter-wave radar, a vehicle-side lidar, and a vehicle-side camera, and the neural network algorithm is a fusion algorithm built on a deep learning model;

[0034] 2) Obtain the real-time information collected by the roadside unit (RSU), including position, speed, and heading angle;

[0035] 3) Fuse the first summary data with the real-time information through the Kalman-filter-based neural network algorithm to obtain the second summary data, which serves as the basic data for decision-making and execution in the automatic driving system.
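The source does not say how the Kalman filter and the neural network are combined in step 3). The following is a minimal sketch of the Kalman part only, under several assumptions: the first summary data gives each tracked object a state [x, y, vx, vy] with a diagonal covariance; the RSU and the vehicle share one local coordinate frame; the heading angle is in radians, counterclockwise from the x-axis. Under these assumptions the RSU observation can be treated as a direct measurement (H = I) and fused with a standard Kalman measurement update. The class and function names (`RsuObservation`, `TrackEstimate`, `rsu_to_measurement`, `kalman_update`) and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RsuObservation:
    """Hypothetical RSU message: position (m), speed (m/s), heading (rad)."""
    x: float
    y: float
    speed: float
    heading: float  # assumed: radians, counterclockwise from the x-axis


@dataclass
class TrackEstimate:
    """Vehicle-side (first summary) estimate of one object's state."""
    state: list[float]     # [x, y, vx, vy]
    variance: list[float]  # per-component variance (diagonal covariance)


def rsu_to_measurement(obs: RsuObservation) -> list[float]:
    """Convert speed and heading into velocity components in the shared frame."""
    return [
        obs.x,
        obs.y,
        obs.speed * math.cos(obs.heading),
        obs.speed * math.sin(obs.heading),
    ]


def kalman_update(prior: TrackEstimate, z: list[float], r: list[float]) -> TrackEstimate:
    """Kalman measurement update with H = I and diagonal covariances.

    With both covariances diagonal, the update decouples per component:
    K = P / (P + R), x' = x + K (z - x), P' = (1 - K) P.
    """
    state, variance = [], []
    for x, p, zi, ri in zip(prior.state, prior.variance, z, r):
        k = p / (p + ri)
        state.append(x + k * (zi - x))
        variance.append((1.0 - k) * p)
    return TrackEstimate(state, variance)


# Example: equal position variances on both sides.
first = TrackEstimate(state=[10.0, 5.0, 2.0, 0.0], variance=[1.0, 1.0, 0.5, 0.5])
obs = RsuObservation(x=12.0, y=5.0, speed=2.0, heading=0.0)
second = kalman_update(first, rsu_to_measurement(obs), r=[1.0, 1.0, 0.5, 0.5])
print(second.state)  # [11.0, 5.0, 2.0, 0.0]
```

The prediction step and any learned components are omitted, so this only shows how one RSU measurement pulls the vehicle-side estimate toward it in proportion to the relative variances. With equal variances, the fused position lands halfway between the two sources.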

[0036] In a specific implementation manner, the deep learning...

Embodiment 2

[0040] This embodiment provides an automatic driving system comprising a roadside unit, a vehicle-mounted unit, and a vehicle-mounted processor connected in sequence. The vehicle-mounted unit and the roadside unit communicate over a 5G wireless link. The vehicle-mounted processor is connected to the various sensors and stores a computer program that, when invoked, performs the following operations:

[0041] Obtain data from multiple sensors and fuse it with a neural network algorithm to obtain the first summary data, where the sensors include a vehicle-side millimeter-wave radar, a vehicle-side lidar, and a vehicle-side camera;

[0042] Obtain real-time information collected by roadside units, including position, speed and heading angle;

[0043] Fuse the first summary data with the real-time information to obtain the second summary data;

[0044] Generate an automatic driving strategy based on the second summary data.
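The four operations in [0041] to [0044] run in a fixed order on the vehicle-mounted processor. The sketch below shows only that ordering. Every stage is injected as a callable, so no particular fusion or planning algorithm is implied. The function name `run_cycle` and the toy stubs are hypothetical: averaging stands in for the neural-network fusion, and a threshold rule stands in for strategy generation.

```python
from typing import Callable, TypeVar

S = TypeVar("S")  # raw multi-sensor readings
F = TypeVar("F")  # summary data (first and second)
R = TypeVar("R")  # RSU real-time information
P = TypeVar("P")  # automatic driving strategy


def run_cycle(
    read_sensors: Callable[[], S],
    fuse_vehicle_side: Callable[[S], F],
    read_rsu: Callable[[], R],
    fuse_with_rsu: Callable[[F, R], F],
    plan: Callable[[F], P],
) -> P:
    """One processing cycle: [0041] -> [0042] -> [0043] -> [0044]."""
    sensor_data = read_sensors()                    # radar, lidar, camera
    first_summary = fuse_vehicle_side(sensor_data)  # vehicle-side fusion
    rsu_info = read_rsu()                           # received via the vehicle-mounted unit
    second_summary = fuse_with_rsu(first_summary, rsu_info)
    return plan(second_summary)


# Toy stubs (hypothetical) that record the call order.
calls: list[str] = []


def read_sensors() -> dict[str, float]:
    calls.append("sensors")
    return {"radar": 1.0, "lidar": 2.0, "camera": 3.0}


def fuse_vehicle_side(data: dict[str, float]) -> float:
    calls.append("fuse_vehicle")
    return sum(data.values()) / len(data)  # stand-in for the neural network


def read_rsu() -> float:
    calls.append("rsu")
    return 4.0


def fuse_with_rsu(first: float, rsu: float) -> float:
    calls.append("fuse_rsu")
    return (first + rsu) / 2  # stand-in for the Kalman-based fusion


def plan(second: float) -> str:
    calls.append("plan")
    return "slow_down" if second > 2.5 else "keep_speed"


strategy = run_cycle(read_sensors, fuse_vehicle_side, read_rsu, fuse_with_rsu, plan)
print(strategy)  # slow_down
```

Injecting the stages keeps the cycle independent of any specific model. A real system could swap in a trained fusion network or a Kalman-based tracker without changing the order of operations.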


© 2025 PatSnap. All rights reserved.