Depth completion method for sparse depth map, computer device and storage medium

A depth map completion technology, applied in the field of image processing, which addresses the problem that sparse depth maps cannot meet the demand for dense depth maps.


Embodiment Construction

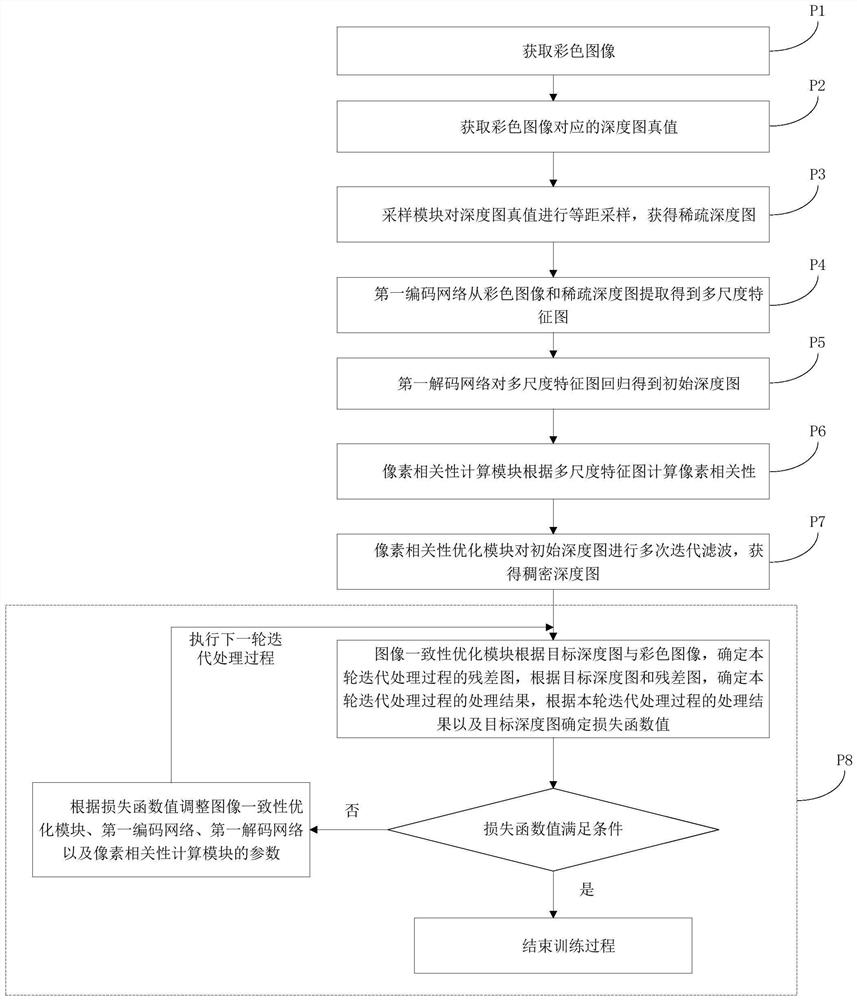

[0051] In this embodiment, the depth completion method for a sparse depth map includes the following steps:

[0052] S1. Train the neural network;

[0053] S2. Obtain the image to be processed;

[0054] S3. Use the trained neural network to perform depth completion on the image to be processed.
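Step S3 turns a sparse depth map, in which most pixels carry no measurement, into a dense one. The text available here does not specify the input format, so the sketch below assumes that missing pixels are stored as 0. To make the input/output contract of step S3 concrete, it fills each missing pixel with the depth of the nearest valid pixel. This non-learned baseline is a swapped-in illustration, not the trained neural network of step S1, and the function name `fill_nearest` is hypothetical.

```python
from collections import deque


def fill_nearest(sparse):
    """Fill missing pixels (value 0) with the depth of the nearest valid pixel.

    Hypothetical baseline, NOT the patent's neural network. Uses a
    multi-source breadth-first search over 4-connected neighbors, so each
    missing pixel takes the value of a valid pixel at minimal grid distance
    (ties are broken by search order). The input is left unchanged.
    """
    h, w = len(sparse), len(sparse[0])
    dense = [row[:] for row in sparse]
    # Pixels with a measured depth act as the BFS sources.
    seen = [[v > 0 for v in row] for row in sparse]
    queue = deque((r, c) for r in range(h) for c in range(w) if seen[r][c])
    if not queue:
        raise ValueError("sparse map has no valid depth samples")
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not seen[nr][nc]:
                seen[nr][nc] = True
                dense[nr][nc] = dense[r][c]  # inherit the nearest source depth
                queue.append((nr, nc))
    return dense
```

For example, `fill_nearest([[0.0, 0.0, 2.0], [0.0, 0.0, 0.0], [5.0, 0.0, 0.0]])` returns a 3x3 map with no zeros in which the two measured pixels keep their values. A learned network replaces this fixed rule with one that can exploit the color image and training data.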

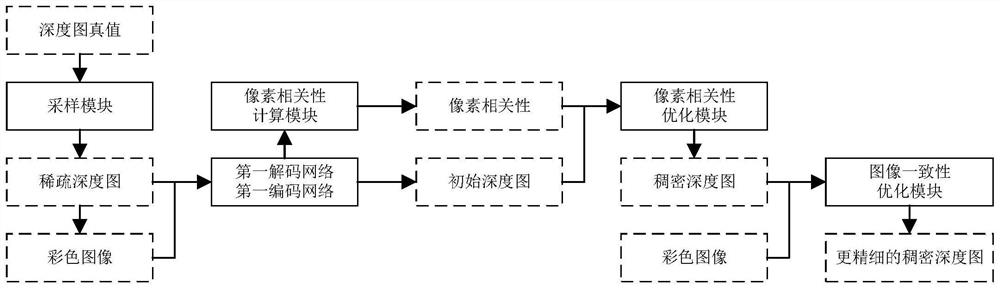

[0055] In step S1, the neural network to be trained includes a sampling module, a first encoding network, a first decoding network, a pixel correlation calculation module, a pixel correlation optimization module and an image consistency optimization module. Training gives the neural network its depth completion capability. Therefore, the neural network training method (step S1) is described first, before the depth completion method itself.
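Paragraph [0055] lists a sampling module but, in the text available here, does not say what it samples. One plausible reading, stated purely as an assumption, is that it thins a dense depth map into a sparse one so that (sparse input, dense target) training pairs can be built. A minimal sketch under that assumption, using uniform random sampling and a hypothetical `keep_ratio` parameter:

```python
import random


def sample_sparse(dense, keep_ratio=0.05, seed=None):
    """Keep a random fraction of valid depth pixels and zero out the rest.

    Hypothetical sketch of a sampling step; the uniform strategy and the
    keep_ratio parameter are assumptions, not taken from the source.
    Returns (sparse, mask), where mask[r][c] is 1 for kept pixels.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    rng = random.Random(seed)  # seeded generator for reproducible patterns
    valid = [(r, c) for r, row in enumerate(dense)
             for c, v in enumerate(row) if v > 0]
    # Keep at least one sample when any valid pixel exists.
    k = max(1, round(keep_ratio * len(valid))) if valid else 0
    kept = set(rng.sample(valid, k))
    sparse = [[v if (r, c) in kept else 0.0 for c, v in enumerate(row)]
              for r, row in enumerate(dense)]
    mask = [[1 if (r, c) in kept else 0 for c in range(len(row))]
            for r, row in enumerate(dense)]
    return sparse, mask
```

Fixing `seed` makes the sampled pattern reproducible across runs. A sampling module tailored to a particular depth sensor might follow that sensor's scan pattern instead; the source does not say which is used.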

[0056] Referring to Figure 1, the neural network training method, that is, the process of training the neural network in step S1, includes the following steps:

[0057] P1. Obtain a color image;

[00...
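Step P1 supplies a color image, and [0055] names a pixel correlation calculation module and a pixel correlation optimization module without defining them in the text shown. The sketch below substitutes a common edge-aware scheme, stated as an assumption rather than as the patent's method. The correlation between two neighboring pixels is taken as a Gaussian of their RGB difference. One optimization step then replaces each unobserved pixel of an initial dense depth estimate (for instance, a decoder output) with the correlation-weighted mean of itself and its 4-neighbors, while observed sparse samples stay fixed. The names `color_affinity`, `propagate_once` and `sigma` are hypothetical.

```python
import math


def color_affinity(img, r, c, nr, nc, sigma=10.0):
    """Gaussian similarity of two RGB pixels (hypothetical correlation measure)."""
    d2 = sum((a - b) ** 2 for a, b in zip(img[r][c], img[nr][nc]))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def propagate_once(depth, mask, img, sigma=10.0):
    """One affinity-weighted refinement step over an initial dense depth map.

    Each pixel with mask == 0 becomes the weighted mean of itself (weight 1)
    and its in-bounds 4-neighbors (weight = color affinity). Pixels with
    mask == 1 are observed samples and are copied unchanged.
    """
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for r in range(h):
        for c in range(w):
            if mask[r][c]:
                continue  # keep observed sparse depth fixed
            num, den = depth[r][c], 1.0  # self term with weight 1
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < h and 0 <= nc < w:
                    a = color_affinity(img, r, c, nr, nc, sigma)
                    num += a * depth[nr][nc]
                    den += a
            out[r][c] = num / den
    return out
```

Because the weight across a strong color edge is close to zero, depth does not bleed between regions that differ in appearance; the step could be iterated to spread information further.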

