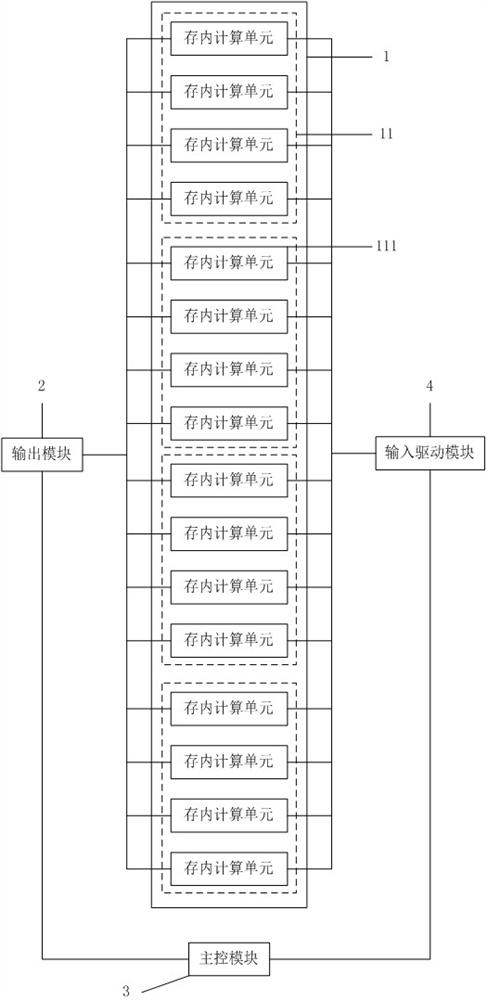

Distributed bit line compensation digital-analog hybrid in-memory computing array

The invention relates to in-memory computing arrays, specifically a distributed bit line compensation digital-analog hybrid in-memory computing array. It addresses three problems: computation results cannot be accurately quantized, word lines and bit lines are heavily loaded, and the accuracy of computation results suffers as a consequence. It achieves the effects of mitigating charging nonlinearity, reducing the word line drive load, and saving area.


Embodiment Construction

[0042] The following will clearly and completely describe the technical solutions in the embodiments of the present invention with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only some, not all, embodiments of the present invention. Based on the embodiments of the present invention, all other embodiments obtained by persons of ordinary skill in the art without making creative efforts belong to the protection scope of the present invention.

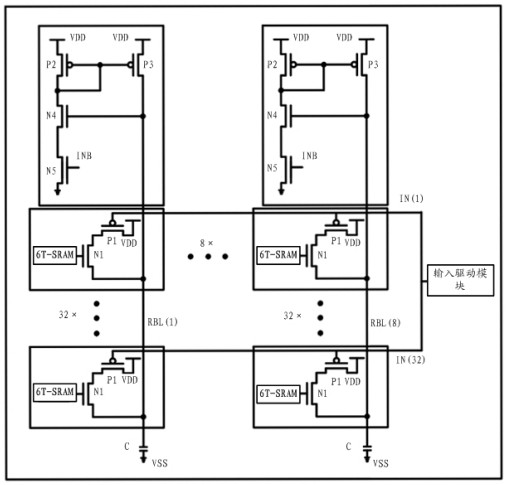

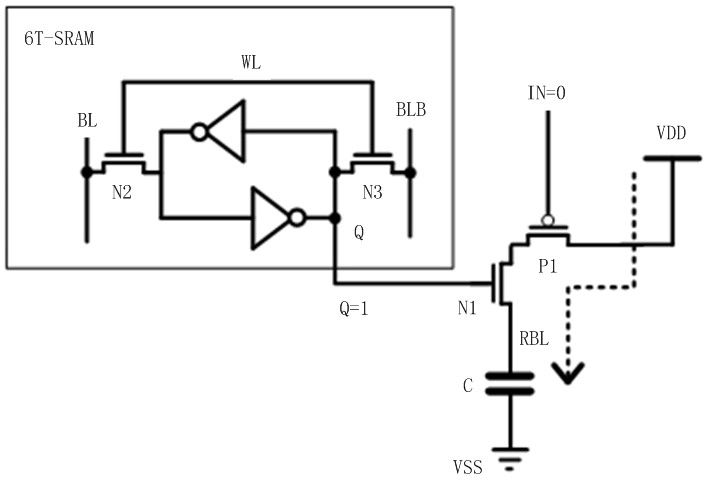

[0043] The purpose of the present invention is to provide a distributed bit line compensation digital-analog hybrid in-memory computing array. It uses 8T computing units, which keeps the transistor count relatively low, and because the computation logic is decoupled from the weight storage cells, read/write disturbance is eliminated during the multiplication stage of the computation. At the same time, the current mirror compensator proposed in this design cor...
