Multi-sensor fusion vehicle-road collaborative sensing method for automatic driving

A multi-sensor fusion technology for automatic driving, applied in the field of artificial intelligence, that addresses the problems of real-time communication delay in vehicle-road interconnection, low positioning accuracy, and low perception accuracy.

Embodiment Construction

[0035] In order to make the above objects, features and advantages of the present invention more clearly understood, the present invention will be described in further detail below with reference to the accompanying drawings.

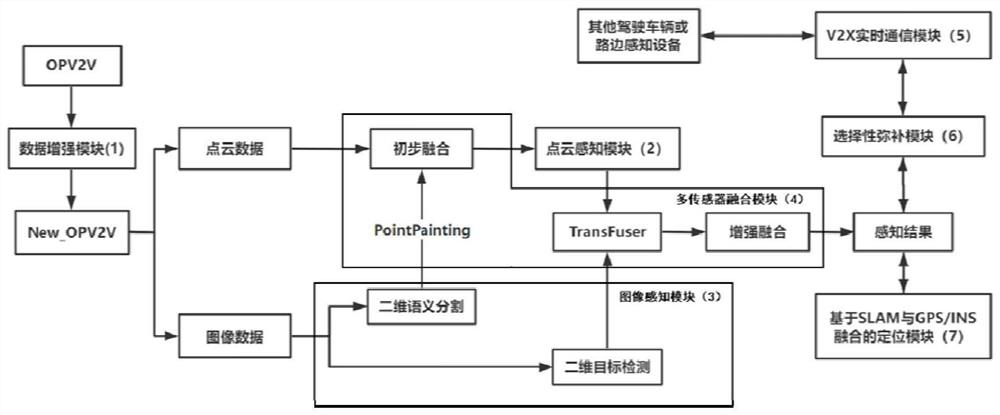

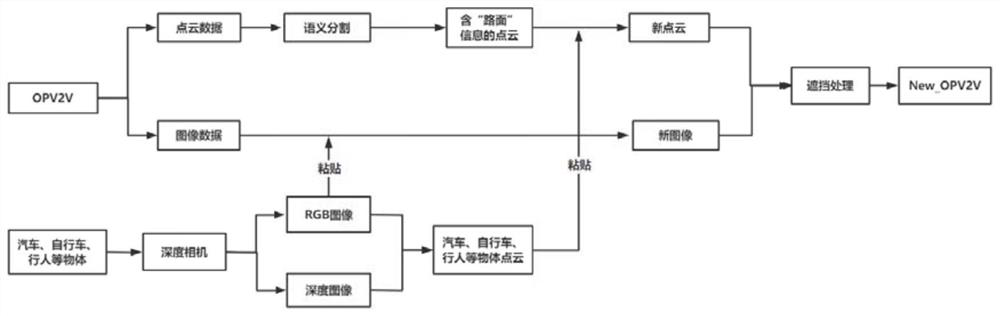

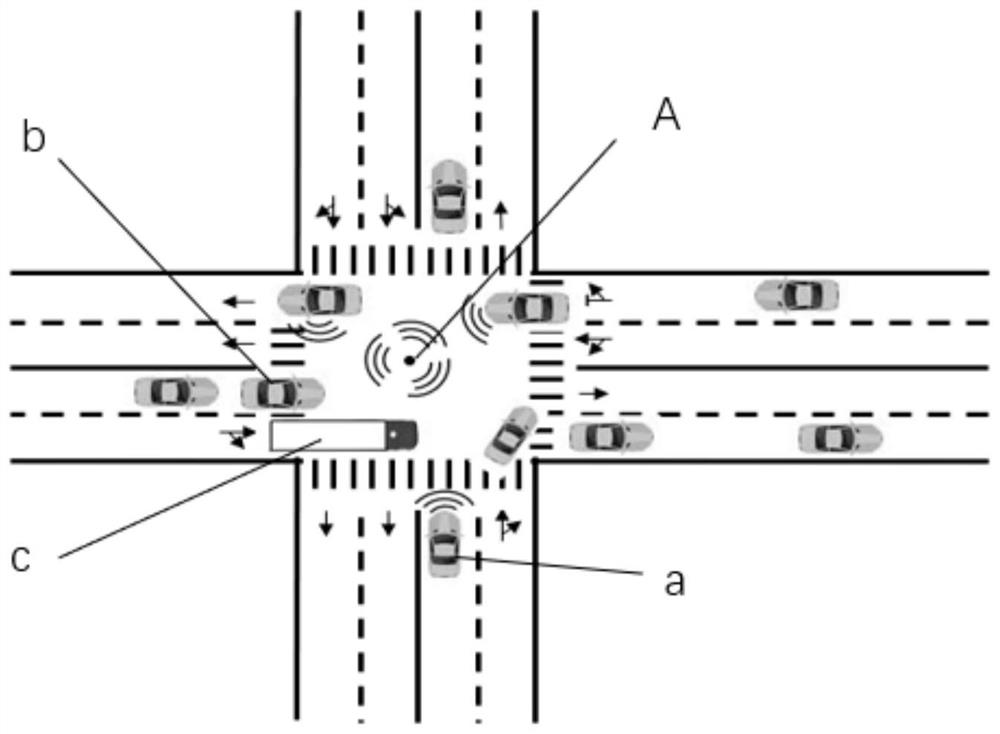

[0036] The invention provides a multi-sensor fusion vehicle-road collaborative sensing method for automatic driving, which addresses the prior-art problems of low environmental perception accuracy, real-time communication delay in vehicle-road interconnection, and low positioning accuracy on urban roads. As shown in Figure 1, it includes a data enhancement module (1), a point cloud perception module (2), an image perception module (3), a multi-sensor fusion module (4), a V2X real-time communication module (5), a selective compensation module (6), and a positioning module based on the fusion of SLAM and GPS/INS (7).
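For orientation, the seven modules and their reference numerals from paragraph [0036] can be written down as a small lookup structure. This is only an illustrative sketch: the identifier names (`Module`, `label`) are hypothetical and do not come from the patent, and the enumeration order reflects the reference numerals only, not any data flow between modules, which this excerpt does not specify.

```python
from enum import Enum


class Module(Enum):
    """Reference numerals of the seven modules listed in paragraph [0036].

    The member names are hypothetical identifiers chosen for this sketch.
    """
    DATA_ENHANCEMENT = 1
    POINT_CLOUD_PERCEPTION = 2
    IMAGE_PERCEPTION = 3
    MULTI_SENSOR_FUSION = 4
    V2X_REAL_TIME_COMMUNICATION = 5
    SELECTIVE_COMPENSATION = 6
    SLAM_GPS_INS_POSITIONING = 7


def label(module: Module) -> str:
    """Format a module as 'name (numeral)', mirroring the patent's notation."""
    return f"{module.name.lower().replace('_', ' ')} ({module.value})"


if __name__ == "__main__":
    # Print every module with its reference numeral, in numeral order.
    for m in Module:
        print(label(m))
```

A structure like this may be handy when cross-referencing the numerals in Figure 1 against the text; it makes no claim about how the modules are implemented or connected.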

[0037] Figure 1 is an overall conceptual diagram of the multi-sensor fusion vehicle-road collaborative sensing method for automa...
