Key frame extraction method for 3D human motion data

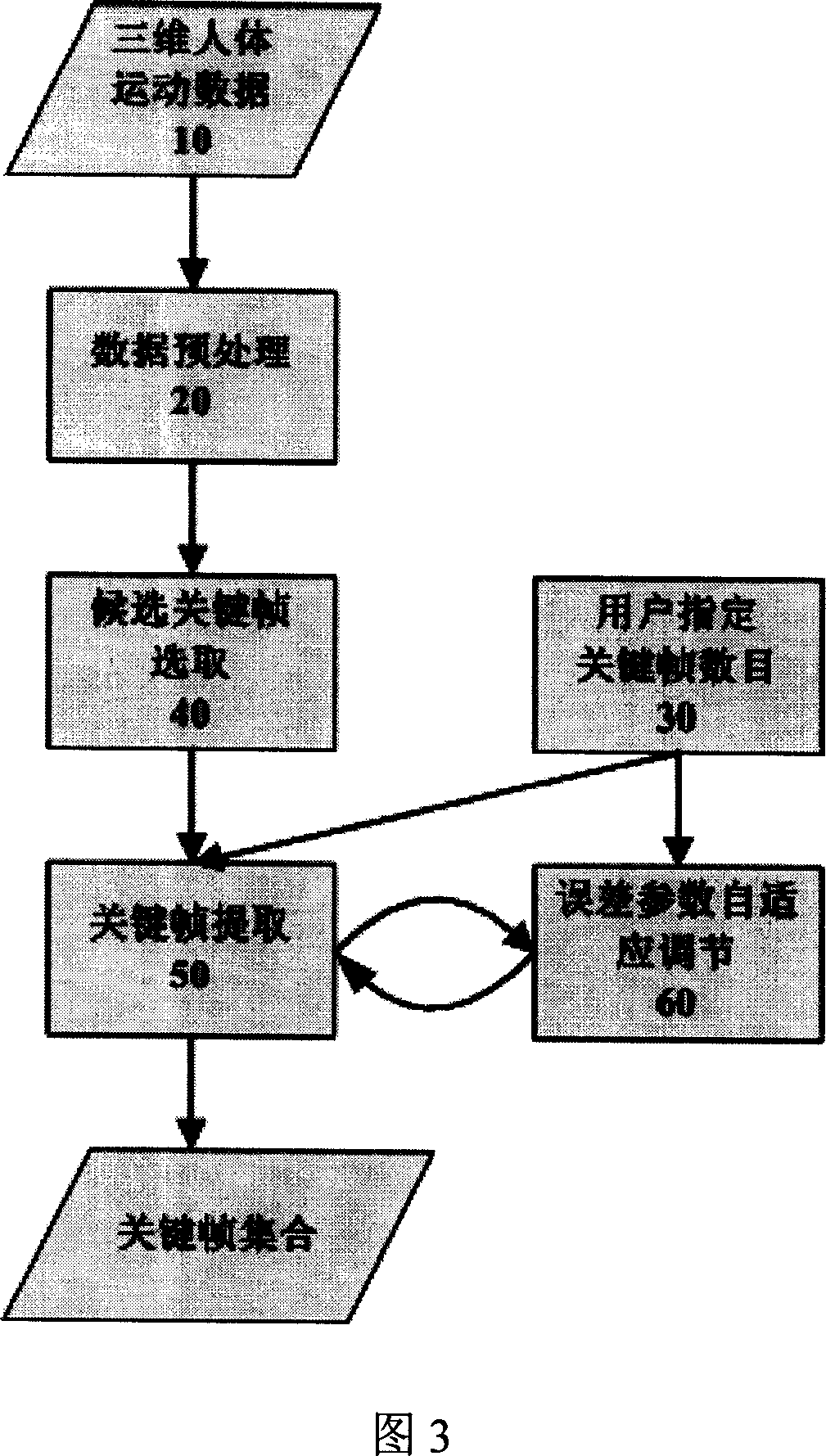

A key frame extraction method for human motion data. It is applicable to image data processing, electrical digital data processing, and special data processing applications, and addresses the problems of low computing efficiency and low accuracy.

Examples

Embodiment 1

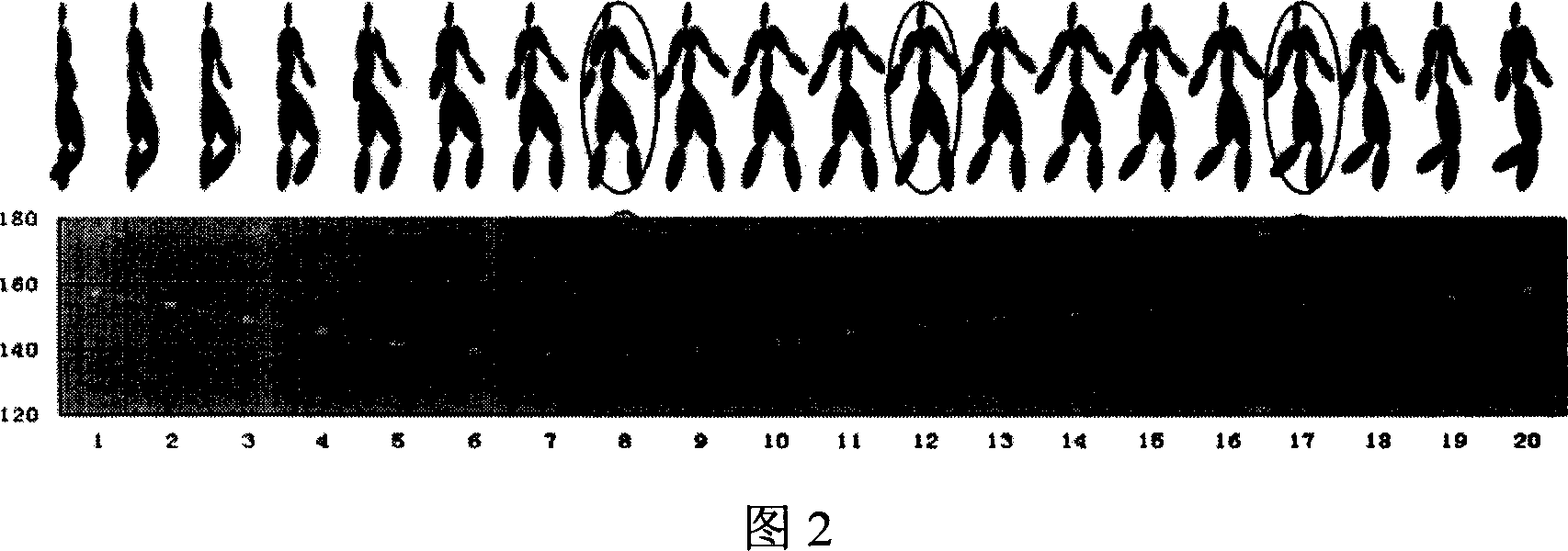

[0087] Figure 4 shows an example of key frame extraction for a punching motion. The concrete steps of this example, following the method of the present invention, are as follows:

[0088] (1) Use an optical motion capture system to capture a punching motion sequence 100 frames long;

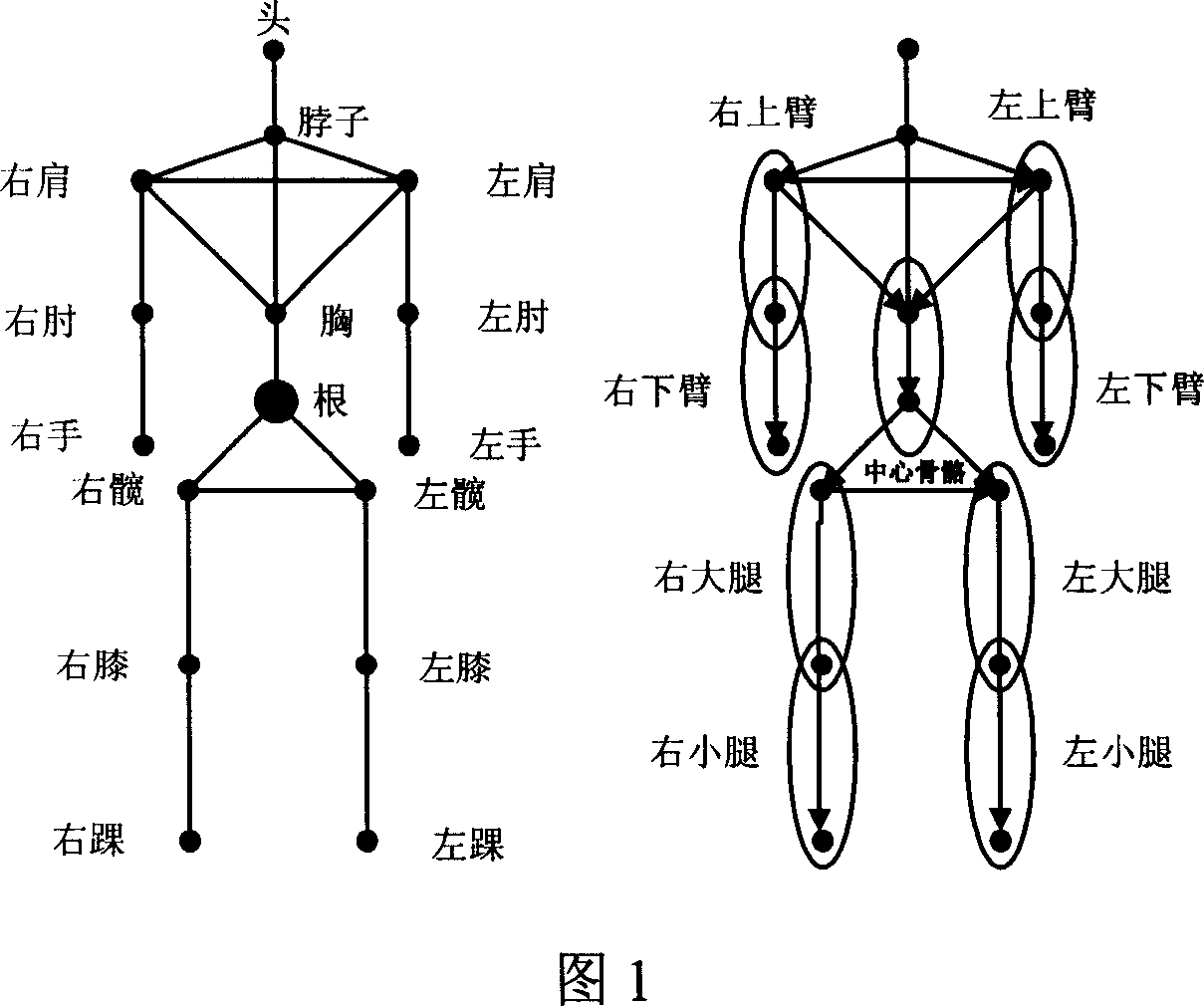

[0089] (2) Taking the raw TRC-format motion data captured in step (1) as input, use an existing motion data conversion method to convert the TRC data into the rotation data representation defined by the present invention, with 16 joints;

[0090] (3) Based on the standard human body model and the rotational motion data obtained in step (2), calculate the angles between the limb bones and the central bone using formula (1) in the claims, forming the eight-element sequence Fi that represents the human posture;

[0091] (4) The user specifies the number n of key...
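Formula (1) is not reproduced in this excerpt. As a rough illustration of the kind of computation step (3) describes, the following sketch assumes that each angle is obtained from the normalized dot product of a limb bone direction vector and the central bone direction vector. The choice of eight limb bones, the bone names, and the function names `angle_between` and `posture_feature` are hypothetical and are not taken from the patent.

```python
import math

# Hypothetical names for eight limb bones; the source does not list them.
LIMB_BONES = (
    "l_upper_arm", "l_forearm", "r_upper_arm", "r_forearm",
    "l_thigh", "l_shin", "r_thigh", "r_shin",
)


def angle_between(u, v):
    """Return the angle in radians between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("bone vectors must be non-zero")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cos_theta)


def posture_feature(limb_vectors, central_vector):
    """Build an 8-tuple of limb-to-central-bone angles for one frame.

    limb_vectors maps each name in LIMB_BONES to a 3D direction vector.
    The dot-product formulation is an assumption, not the patent's formula (1).
    """
    return tuple(
        angle_between(limb_vectors[name], central_vector)
        for name in LIMB_BONES
    )
```

Applying `posture_feature` to every frame of a clip would give one eight-element tuple per frame, which is the shape of data the later steps appear to operate on.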

Embodiment 2

[0096] Figure 5 shows an example of extracting different numbers of key frames from a stair climbing motion sequence. The specific steps of this example, following the method of the present invention, are as follows:

[0097] (1) The input is a stair climbing motion sequence 85 frames long, captured by an optical motion capture system; the data file contains the raw motion data in TRC format;

[0098] (2) Use an existing data format conversion method to convert the TRC-format motion data into rotational motion data that meets the requirements of the present invention, with 16 standard joints, that is, the BVH data format with 16 designated joints;

[0099] (3) Based on the BVH-format data obtained in step (2), use formula (1) in the claims to calculate the angles between the limb bones and the central bone, forming the eight-element sequence Fi that represents human pos...
