Traffic management architecture

A traffic management architecture, applied in the field of traffic management, that can address problems such as complex routing schemes and the sheer volume of traffic management schemes.

Embodiment Construction

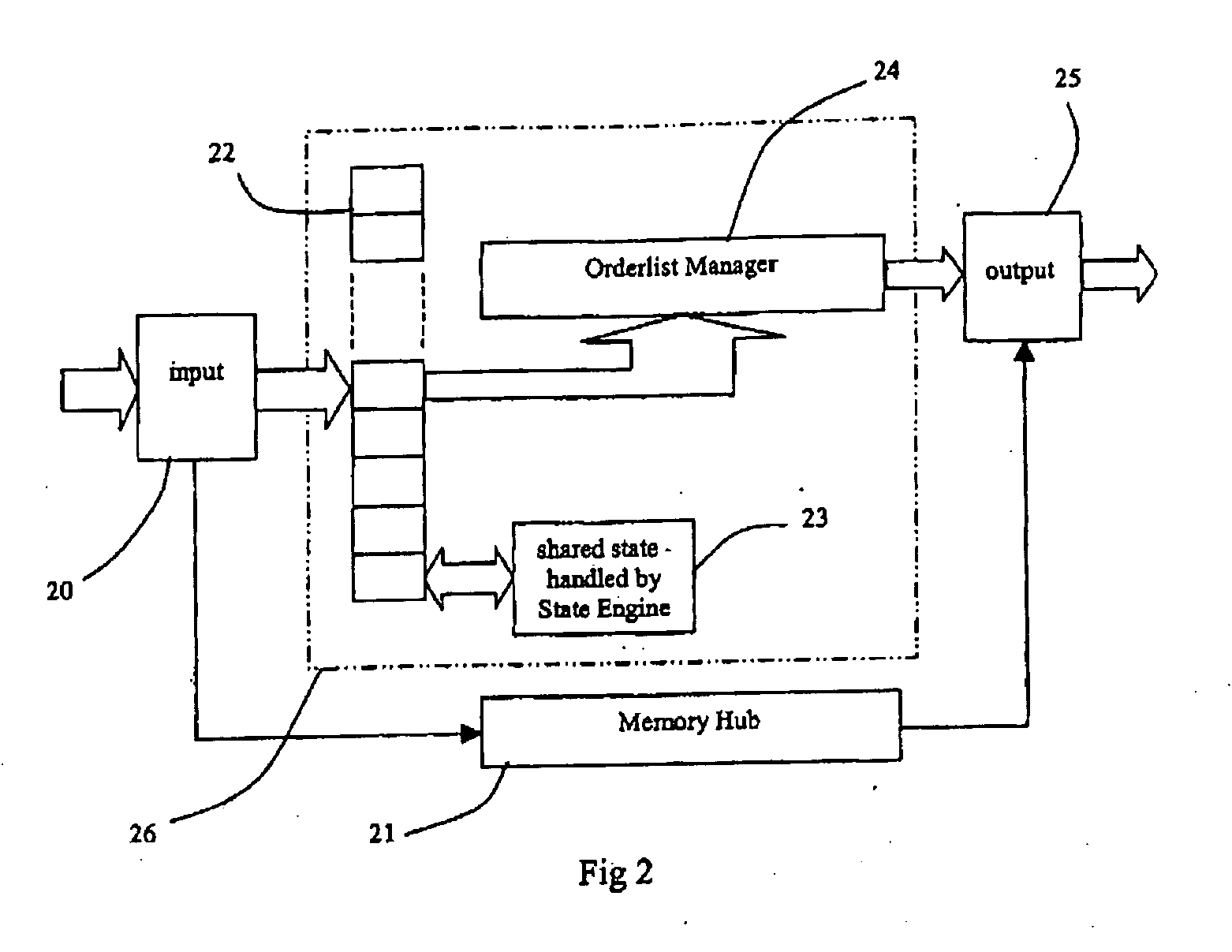

[0034] The present invention turns current thinking on its head. FIG. 2 shows schematically the basic structure underlying the new strategy for effective traffic management. It could be described as a “think first, queue later™” strategy.

[0035] Packet data (traffic) received at the input 20 has the header portions stripped off and record portions of fixed length generated therefrom, containing information about the data, so that the record portions and the data portions can be handled separately. Thus, the data portions take the lower path and are stored in Memory Hub 21. At this stage, no attempt is made to organise the data portions in any particular order. However, the record portions are passed to a processor 22, such as a SIMD parallel processor, comprising one or more arrays of processor elements (PEs). Typically, each PE contains its own processor unit, local memory and register(s).
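The separation described in paragraph [0035] can be illustrated with a minimal Python sketch. It is not the patented implementation: the header layout (`HEADER_FORMAT`), the record layout (`RECORD_FORMAT`), and the names `MemoryHub` and `split_packet` are all hypothetical, chosen only to show a header being stripped, the data portion being stored in arrival order, and a fixed-length record being produced for separate processing.

```python
import struct
from dataclasses import dataclass, field

# Hypothetical incoming header: flow id (4 bytes) and priority (1 byte).
HEADER_FORMAT = "!IB"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)

# Hypothetical fixed-length record: flow id, priority, payload length,
# and the address of the data portion in the memory hub.
RECORD_FORMAT = "!IBHI"
RECORD_SIZE = struct.calcsize(RECORD_FORMAT)


@dataclass
class MemoryHub:
    """Stand-in for Memory Hub 21: stores data portions in arrival order."""
    slots: list = field(default_factory=list)

    def store(self, payload: bytes) -> int:
        # No attempt is made to order the data; it is simply appended.
        self.slots.append(payload)
        return len(self.slots) - 1

    def fetch(self, address: int) -> bytes:
        return self.slots[address]


def split_packet(packet: bytes, hub: MemoryHub) -> bytes:
    """Strip the header, store the data portion, return a fixed-length record."""
    flow_id, priority = struct.unpack_from(HEADER_FORMAT, packet)
    payload = packet[HEADER_SIZE:]
    address = hub.store(payload)
    # The record carries information about the data, not the data itself.
    return struct.pack(RECORD_FORMAT, flow_id, priority, len(payload), address)
```

Because every record has the same size regardless of payload length, records of this kind could be distributed evenly across the processor elements of processor 22, while the variable-length data portions stay untouched in the memory hub until a decision about them has been made.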

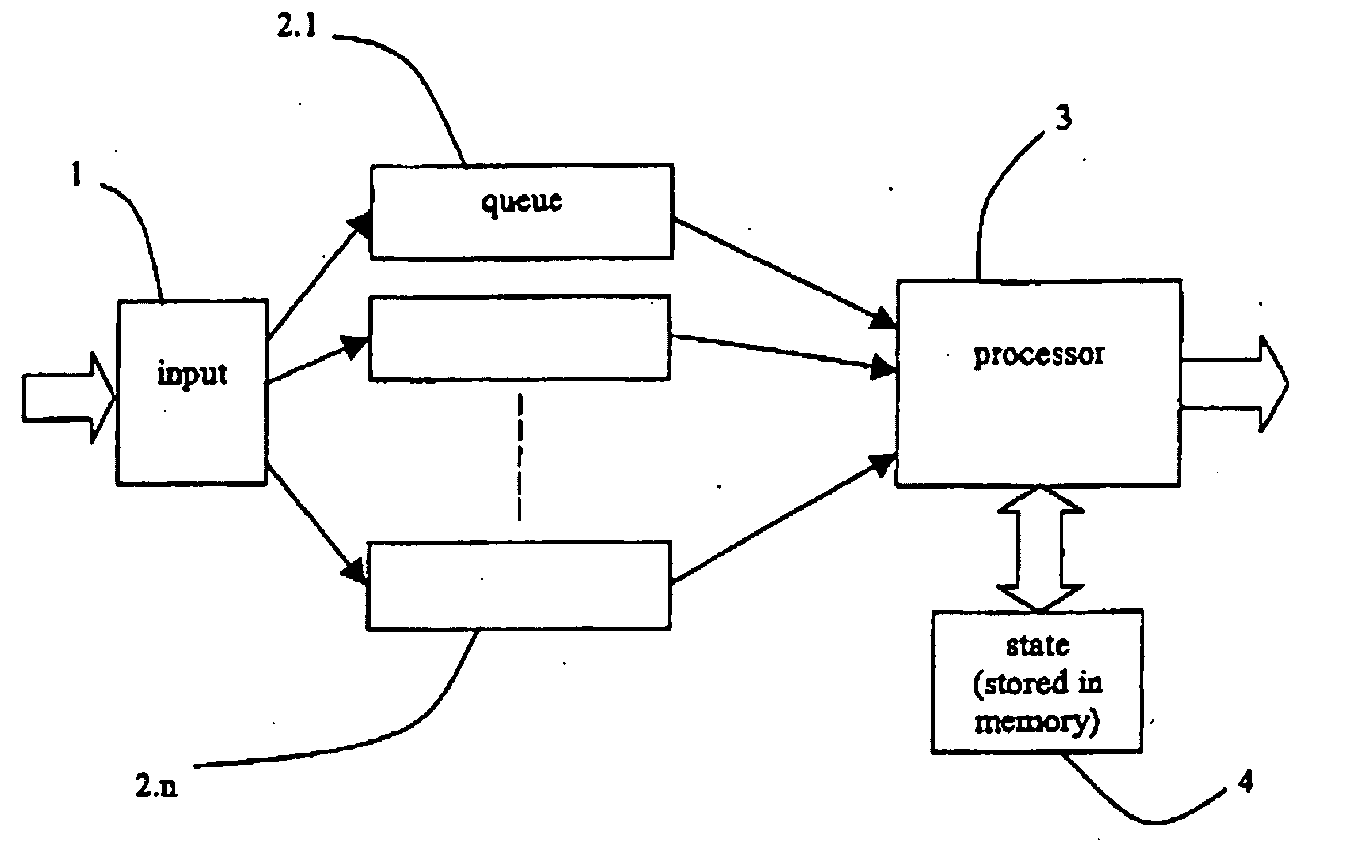

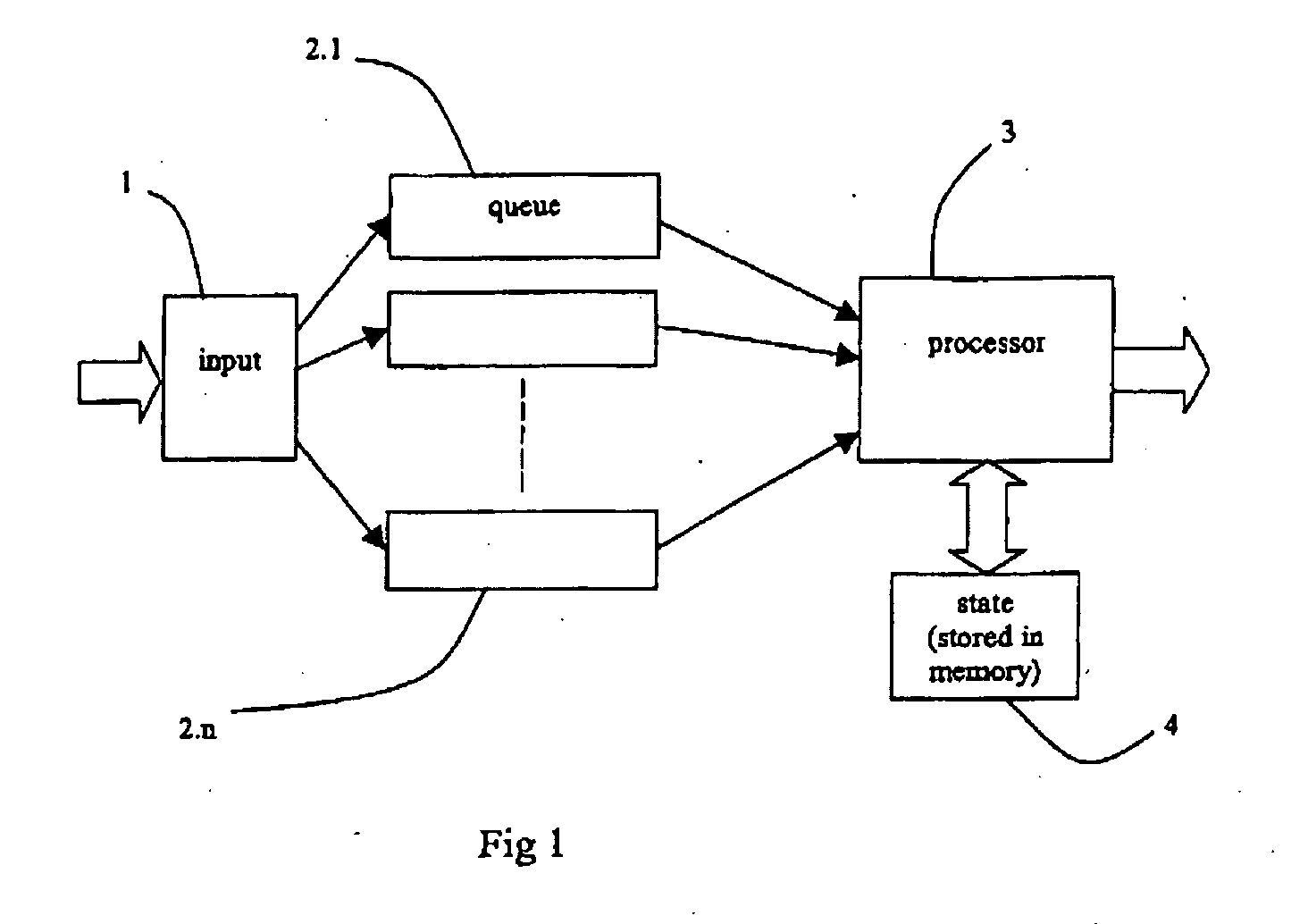

[0036] In contrast to the prior architecture outlined in FIG. 1, the present architecture sha...
