Flow State Aware QoS Management Without User Signalling

Flow-state-aware QoS management without user signalling, applied to communication networks and to methods of operating a communication network. It addresses the problems of unsuitability for video flows, adverse effects on all users, and reduced sending rates of senders, so as to increase the quality of service offered.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Benefits of technology

Problems solved by technology

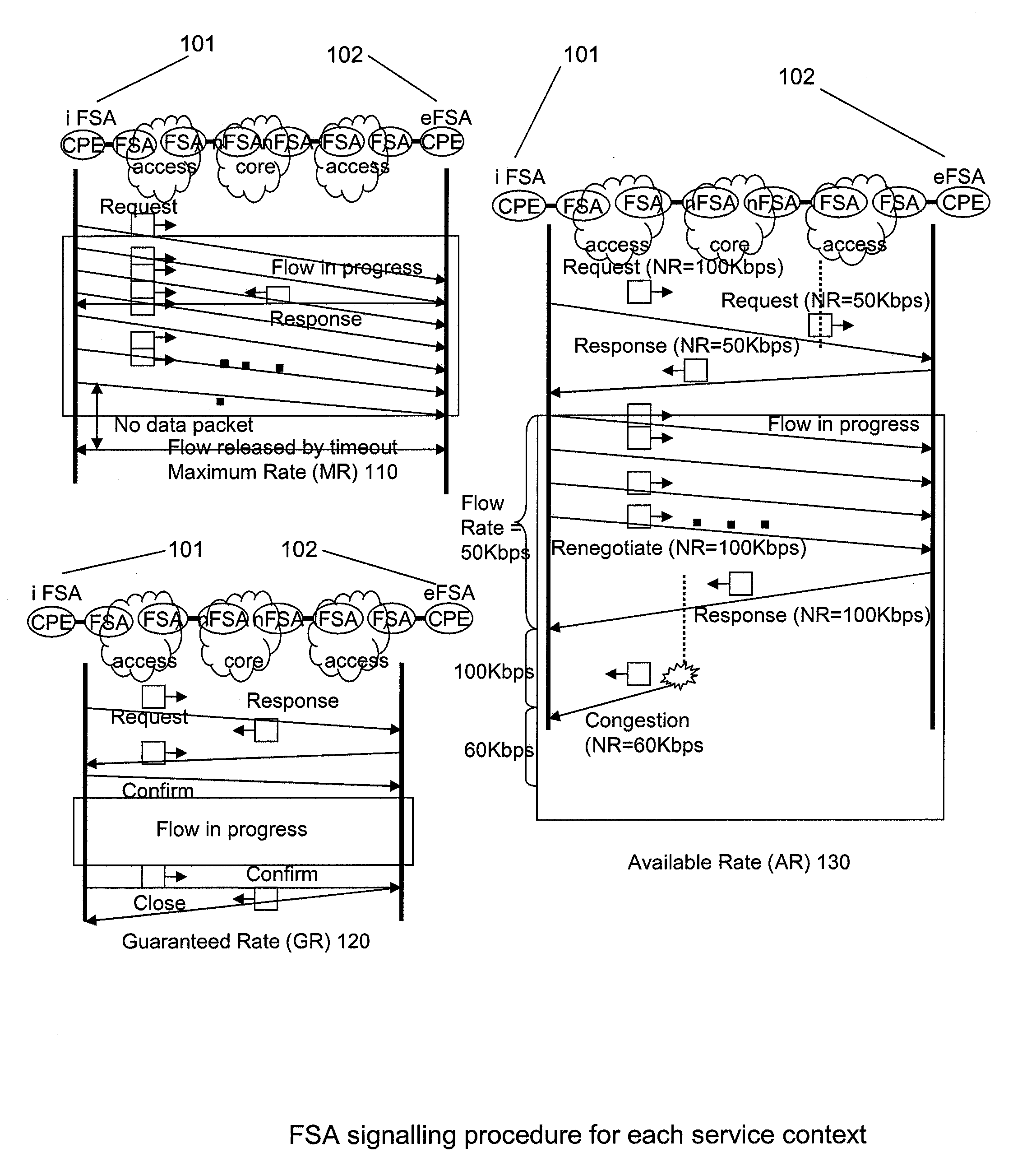

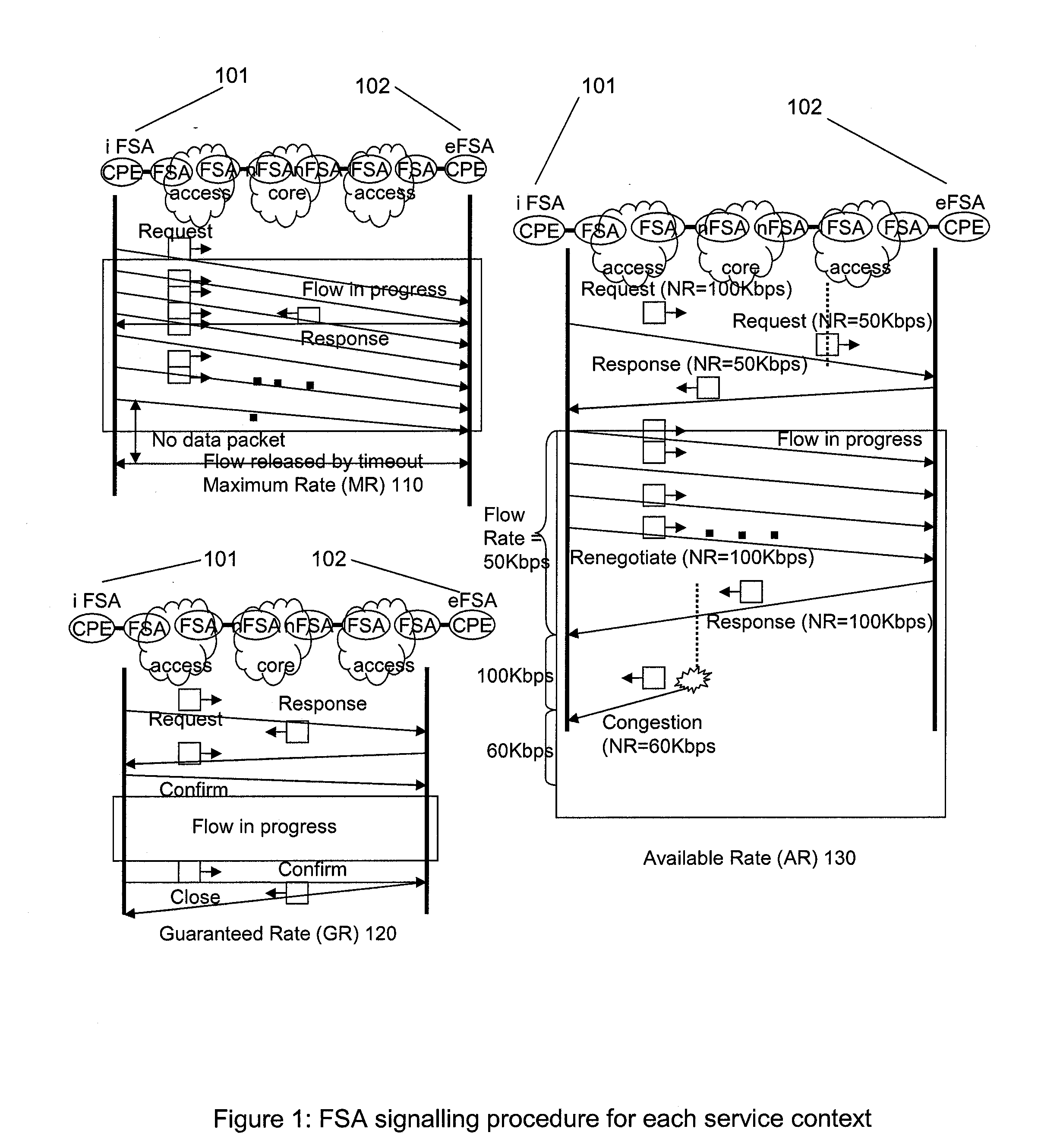

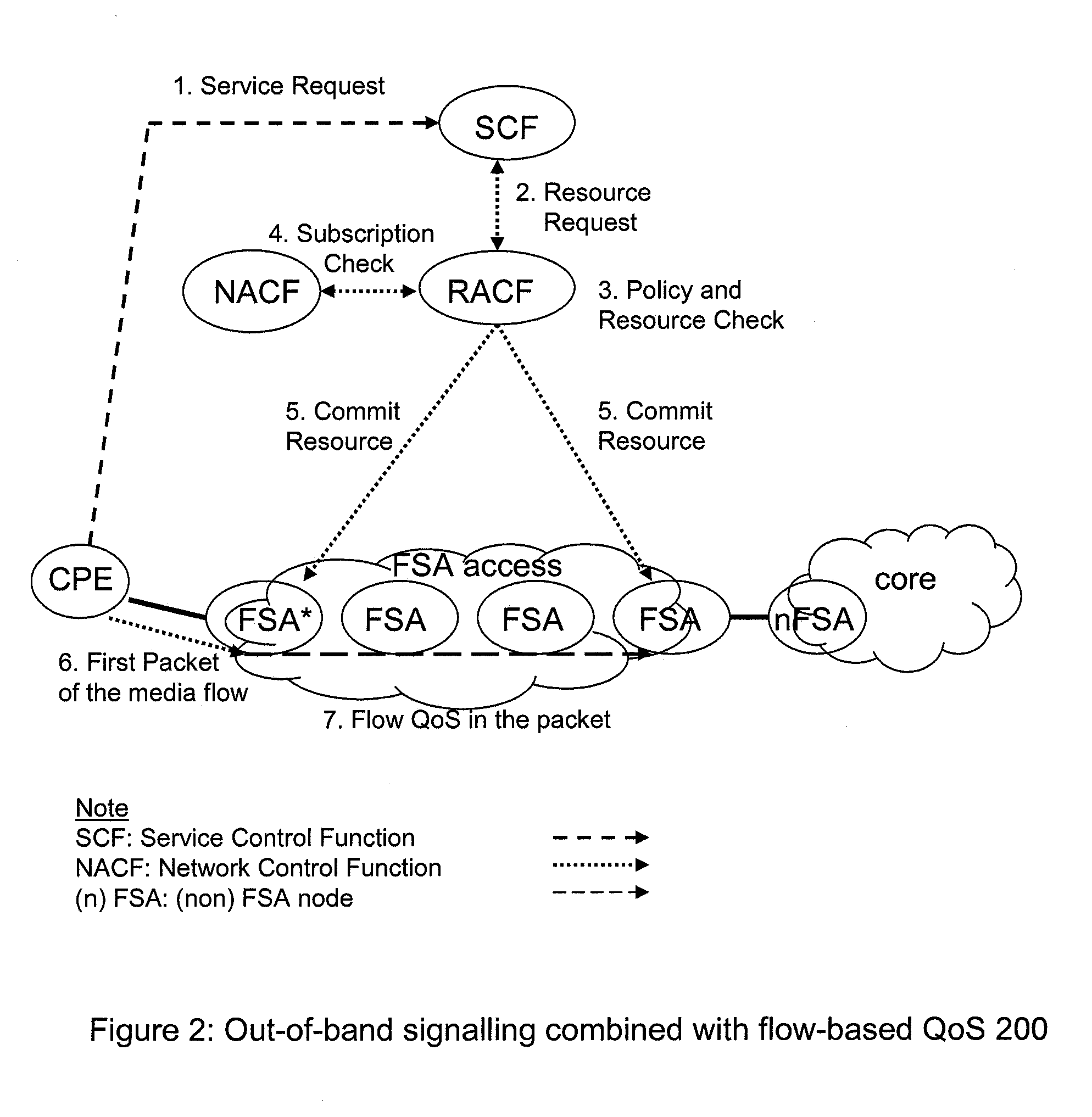

Method used

Image

Examples

first embodiment

[0142] FIG. 4 relates to the first preferred embodiment and shows an expansion of function 6, containing sub-functions 6.1 to 6.4. The size of buffer 6.1 is based on the following considerations. Firstly, because all flows are policed at input 6.2 against their individual capacity allocations, operation can be arranged so that the sum of all capacity allocations is never larger than the output link capacity. In that case, buffering at the output is needed only when packets are forwarded simultaneously and independently from two or more input links to the same output link. Two other conditions are, however, considered within the scope of this first embodiment of the invention:

[0143] Sudden surges of traffic on to a specific output link, for example due to traffic being re-routed following a link failure. This may happen, for example, in some applications where alternative paths are established between the content source and a group of receiving e...
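The capacity constraint in [0142] can be illustrated with a minimal sketch. It assumes a simple per-link table of allocations; the names `OutputLink`, `try_allocate`, and `capacity_bps` are hypothetical and do not come from the patent text. It shows only the invariant that the sum of per-flow allocations never exceeds the output link capacity, not the policing function 6.2 itself.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class OutputLink:
    """Hypothetical model of one output link and its per-flow allocations."""
    capacity_bps: float
    allocations: Dict[str, float] = field(default_factory=dict)  # flow id -> rate

    def allocated(self) -> float:
        # Sum of all capacity allocations currently granted on this link.
        return sum(self.allocations.values())

    def try_allocate(self, flow_id: str, rate_bps: float) -> bool:
        """Grant or update an allocation only if the total stays within capacity."""
        current = self.allocations.get(flow_id, 0.0)
        if self.allocated() - current + rate_bps > self.capacity_bps:
            return False  # would break the invariant, so the request is refused
        self.allocations[flow_id] = rate_bps
        return True


link = OutputLink(capacity_bps=100.0)
link.try_allocate("a", 60.0)   # accepted
link.try_allocate("b", 50.0)   # refused: 60 + 50 exceeds 100
link.try_allocate("b", 40.0)   # accepted: total is exactly 100
```

With this invariant in place, queueing at the output arises only from simultaneous arrivals on different input links, which is the case the buffer sizing discussion starts from.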

third embodiment

[0184] In another embodiment, this delay interval may be a pre-determined fixed short interval. In a third embodiment, every packet is automatically delayed for a pre-determined fixed short interval, but function 6.5.4 is informed only of those that are to be examined for possible deletion as described next.

[0185] If the Control Function 6.5.4 detects there is already a delayed packet for the same aggregate identity (e.g. there is already a delayed packet waiting to go to the same given end-user), then function 6.5.4 proceeds as follows:

- [0186] If any delayed packet of this same aggregate identity belongs to a flow with flow status “vulnerable to discard”, function 6.5.4 instructs function 6.5.2 to delete that packet.
- [0187] If there are no such packets that can be deleted because of the “vulnerable to discard” status, the lowest preference priority packet is deleted from the Delay / Deletion function 6.5.2.
- [0188] The state of the flow id of this deleted packet is changed to “vulnerable to di...
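The selection rule in [0185] to [0187] can be sketched as follows. This is an illustration under stated assumptions, not the patented implementation: the names `Packet`, `handle_arrival`, and `vulnerable_flows` are hypothetical, the newly arrived packet is assumed to count among the deletion candidates, and a smaller `preference` value is assumed to mean a lower preference priority. The flow state update in [0188] is left out because the source text is truncated at that point.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Packet:
    flow_id: str
    aggregate_id: str   # e.g. the end-user the packet is destined for
    preference: int     # assumption: smaller value = lower preference priority


def handle_arrival(delayed: List[Packet], vulnerable_flows: Set[str],
                   new: Packet) -> Optional[Packet]:
    """Hold `new` in the delay store; return the deleted packet, if any."""
    # [0185] Is a packet for the same aggregate identity already being delayed?
    contended = any(p.aggregate_id == new.aggregate_id for p in delayed)
    delayed.append(new)
    if not contended:
        return None
    same = [p for p in delayed if p.aggregate_id == new.aggregate_id]
    # [0186] Prefer a packet whose flow is marked "vulnerable to discard".
    victim = next((p for p in same if p.flow_id in vulnerable_flows), None)
    if victim is None:
        # [0187] Otherwise delete the lowest preference priority packet.
        victim = min(same, key=lambda p: p.preference)
    delayed.remove(victim)
    return victim
```

A caller would keep `delayed` as the contents of the Delay / Deletion function 6.5.2 and release the surviving packets when their delay interval expires; that timing logic is outside this sketch.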
