Artificial intelligence enhanced system for adaptive control driven AR/VR visual aids

An adaptive control and visual aid technology, applied in the fields of instruments, user interface execution, and computing models, that addresses problems faced by low-vision users such as loss of mobility, loss of the ability to read, and loss of income.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Benefits of technology

Problems solved by technology

Method used

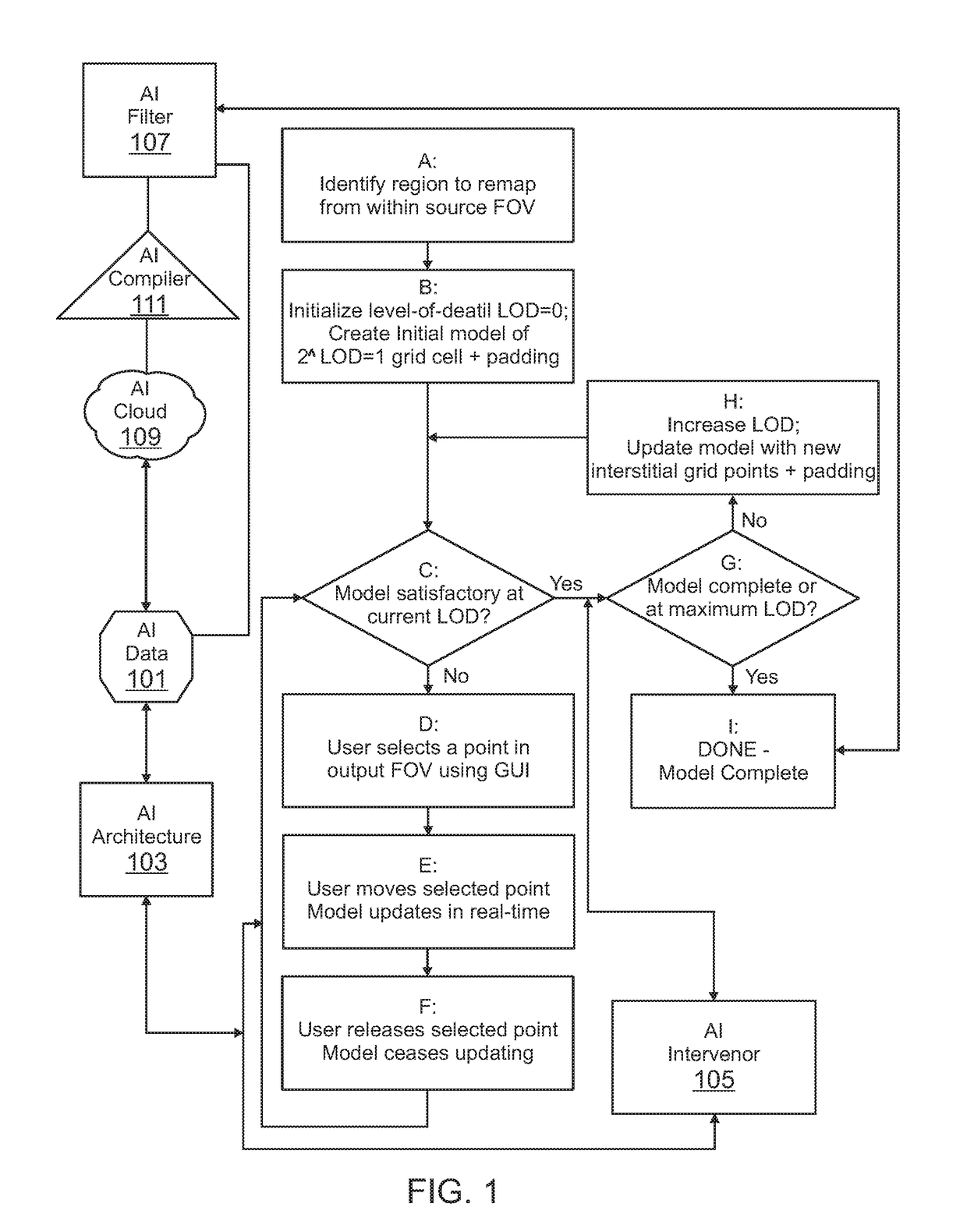

Image

Examples

Embodiment Construction

[0018] The present inventors have discovered that low-vision users can tailor a user-tuned software set to improve the specific aspects of vision they need, enabling functional vision to be restored.

[0019] Expressly incorporated by reference as if fully set forth herein are the following: U.S. Provisional Patent Application No. 62/530,286, filed Jul. 9, 2017; U.S. Provisional Patent Application No. 62/530,792, filed Jul. 9, 2017; U.S. Provisional Patent Application No. 62/579,657, filed Oct. 13, 2017; U.S. Provisional Patent Application No. 62/579,798, filed Oct. 13, 2017; Patent Cooperation Treaty Patent Application No. PCT/US17/62421, filed Nov. 17, 2017; U.S. Nonprovisional Patent Application Ser. No. 15/817,117, filed Nov. 17, 2017; U.S. Provisional Patent Application No. 62/639,347, filed Mar. 6, 2018; U.S. Nonprovisional Patent Application Ser. No. 15/918,884, filed Mar. 12, 2018; and U.S. Provisional Patent Application No. 62/677,463, filed May 29, 2018.

[0020] It is contemplated that the proces...
