Feature point matching method based on correlation measurement

A feature point matching technology based on correlation measurement, applied in image data processing, instrumentation, computing, and related fields. It addresses problems such as poor matching performance, high time and space complexity, and poor robustness to image noise.


AI Technical Summary

Problems solved by technology

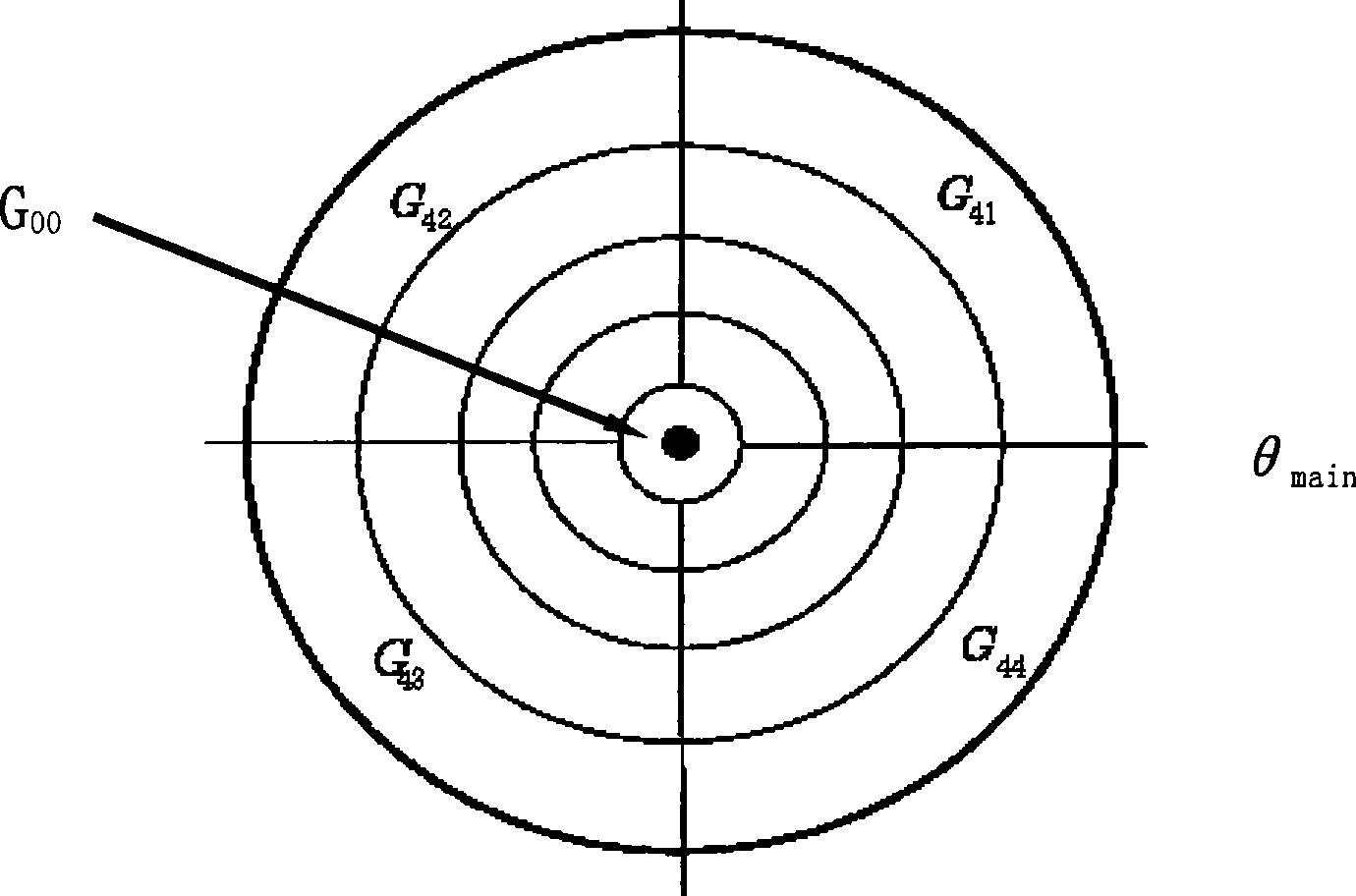

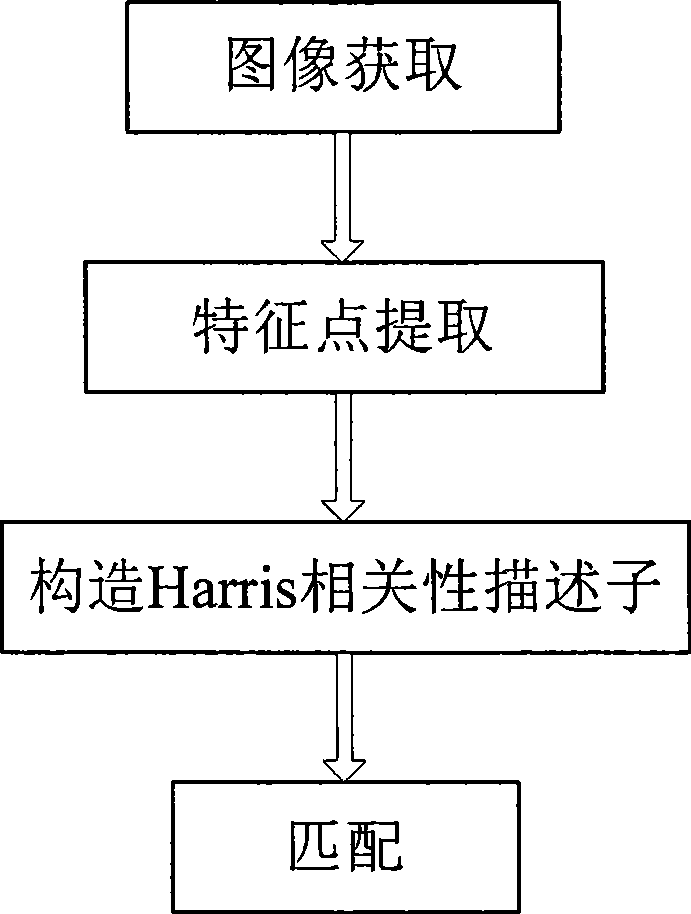

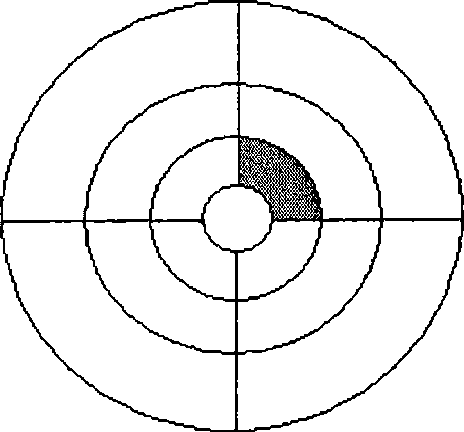


Examples

Example 1

[0069] Example 1 is the matching result for a pair of Buddha statue images, shown in Figure 5. The two images exhibit a relatively large relative rotation, i.e., they were captured by rotating the camera. Matching uses the NNDR (nearest neighbor distance ratio) criterion with the empirically chosen threshold of 0.75. After discarding candidate matching pairs whose NNDR value exceeds 0.75, 214 matching pairs remain, none of which are incorrect, for a matching accuracy of 100%.
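The NNDR rule used in both examples keeps a candidate pair only when the distance to the nearest neighbor divided by the distance to the second-nearest neighbor is at most the threshold (0.75 here). The following is a minimal sketch, assuming descriptors are plain lists of floats compared by Euclidean distance; the patent's own correlation-based descriptor and similarity measure are not reproduced, and the names `nndr_match` and `euclidean` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two descriptor vectors (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nndr_match(
    desc1: List[Sequence[float]],
    desc2: List[Sequence[float]],
    threshold: float = 0.75,
) -> List[Tuple[int, int, float]]:
    """Return (i, j, ratio) for every descriptor i in desc1 whose nearest
    neighbor j in desc2 passes the NNDR test ratio <= threshold."""
    if len(desc2) < 2:
        raise ValueError("NNDR needs at least two candidates in desc2")
    matches = []
    for i, d in enumerate(desc1):
        # Sort all candidates in the second image by distance to descriptor i.
        dists = sorted((euclidean(d, c), j) for j, c in enumerate(desc2))
        (d1, j1), (d2, _) = dists[0], dists[1]
        if d2 == 0.0:
            continue  # two identical nearest candidates: ambiguous, skip
        ratio = d1 / d2
        # Discard candidate pairs whose NNDR value exceeds the threshold.
        if ratio <= threshold:
            matches.append((i, j1, ratio))
    return matches


if __name__ == "__main__":
    img1 = [[0.0, 0.0], [5.0, 5.0]]
    img2 = [[0.1, 0.0], [5.0, 5.2], [5.2, 5.0]]
    print([(i, j) for i, j, _ in nndr_match(img1, img2)])  # prints [(0, 0)]
```

In this toy data the first point has one clearly closest candidate and is kept, while the second point is equidistant from two candidates (ratio 1.0) and is discarded as ambiguous, which is the kind of unreliable pair the 0.75 threshold is meant to filter out.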

Example 2

[0070] Example 2 is the matching result for a pair of rock scene images, shown in Figure 6. The two images exhibit a relatively large change in viewpoint. Matching again uses the NNDR criterion with a threshold of 0.75, yielding 449 matching pairs, of which 4 are incorrect, and a matching accuracy of 98.89%.
