Monocular visual positioning method based on inverse perspective projection transformation

An inverse perspective projection transformation and positioning method, applied in the field of photo interpretation and related areas. It addresses the problems of difficult feature point extraction, large algorithmic measurement errors, and no consideration of cameras, so as to avoid large calculation errors, improve positioning accuracy, and eliminate the perspective effect.



Embodiment Construction

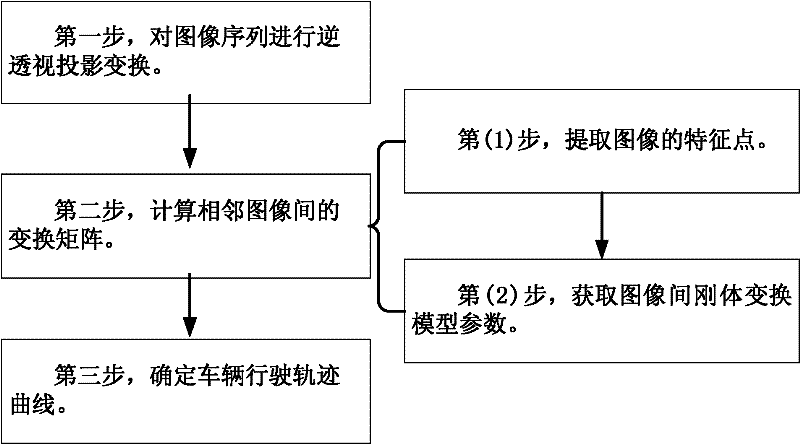

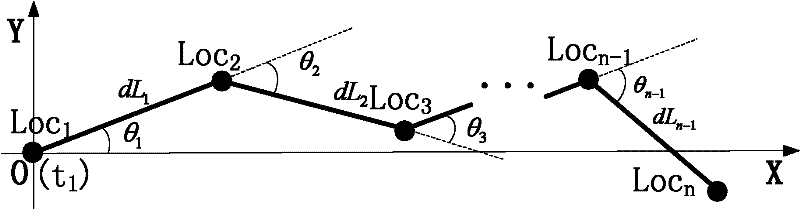

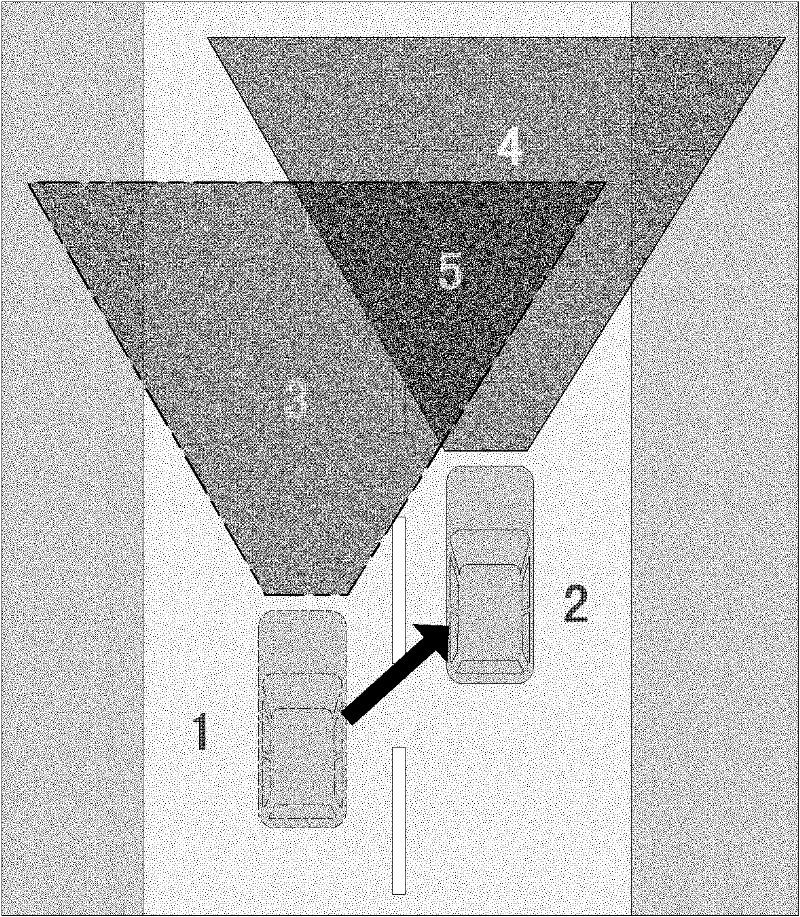

[0034] Figure 1 shows a flow chart of a specific implementation of the present invention. In the first step, an inverse perspective projection transformation is applied to the image sequence. For the method of solving the camera intrinsic parameter matrix and the camera extrinsic parameter matrix used in this step, refer to pages 22 to 33 of the book "Principles and Applications of Photogrammetry" (Science Press, by Yu Qifeng and Shang Yang). In the second step, the transformation matrix between adjacent images is calculated. Within this step, sub-step (1) extracts feature points, using the SURF operator as the feature detection operator. For the properties and usage of the SURF feature operator, refer to "Distinctive Image Features from Scale-Invariant Keypoints" (David G. Lowe, International Journal of Computer Vision, 2004, 60(2), pp. 91-110; this paper introduces the SIFT operator, on which SURF builds) and "SURF: Speeded up robust features" (Conference: Proc...
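As a rough illustration of the first step, the sketch below builds the homography that maps a flat ground plane (Z = 0) into the image from an intrinsic matrix K and extrinsics (R, t), then inverts it to map a pixel back to ground coordinates. The flat-ground assumption, the camera values (focal length, principal point, 1.5 m height, 30 degree downward pitch), and all function names are illustrative assumptions; this excerpt of the patent does not spell out its own formulation.

```python
import math

def matmul3(A, B):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def inv3(M):
    """Invert a 3x3 matrix via the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = M
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]

def ground_to_image_homography(K, R, t):
    """H = K [r1 r2 t]: maps ground points (X, Y, 1) with Z = 0 to pixels."""
    M = [[R[i][0], R[i][1], t[i]] for i in range(3)]
    return matmul3(K, M)

def apply_homography(H, x, y):
    """Apply a 3x3 homography to a 2D point and dehomogenize."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w

# Hypothetical camera: world axes X right, Y forward, Z up;
# camera 1.5 m above the ground, pitched 30 degrees downward.
height = 1.5
pitch = math.radians(30.0)
s, c = math.sin(pitch), math.cos(pitch)

K = [[800.0, 0.0, 320.0],
     [0.0, 800.0, 240.0],
     [0.0, 0.0, 1.0]]
# Rows of R are the camera x, y, z axes expressed in world coordinates.
R = [[1.0, 0.0, 0.0],
     [0.0, -s, -c],
     [0.0, c, -s]]
# t = -R * C, with camera center C = (0, 0, height).
t = [0.0, c * height, s * height]

H = ground_to_image_homography(K, R, t)   # ground plane -> image
H_inv = inv3(H)                           # image -> ground plane (inverse perspective)

u, v = apply_homography(H, 0.5, 4.0)      # project a ground point into the image
x, y = apply_homography(H_inv, u, v)      # recover its ground coordinates
print(f"pixel=({u:.1f}, {v:.1f}) -> ground=({x:.3f}, {y:.3f})")
```

Applying `H_inv` to every pixel of a frame (with interpolation) would yield a top-down view in which the perspective effect is removed, so that distances on the ground plane scale uniformly. In practice the intrinsic and extrinsic matrices would come from calibration rather than from hand-picked values.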
