A 3D gesture motion reconstruction method and system

A technology for three-dimensional gesture motion reconstruction, applied in image data processing, instruments, and computing. It addresses problems such as local extrema and long algorithm running time, and achieves comprehensive information, reduced complexity, and convenient use.


Examples

Embodiment 1

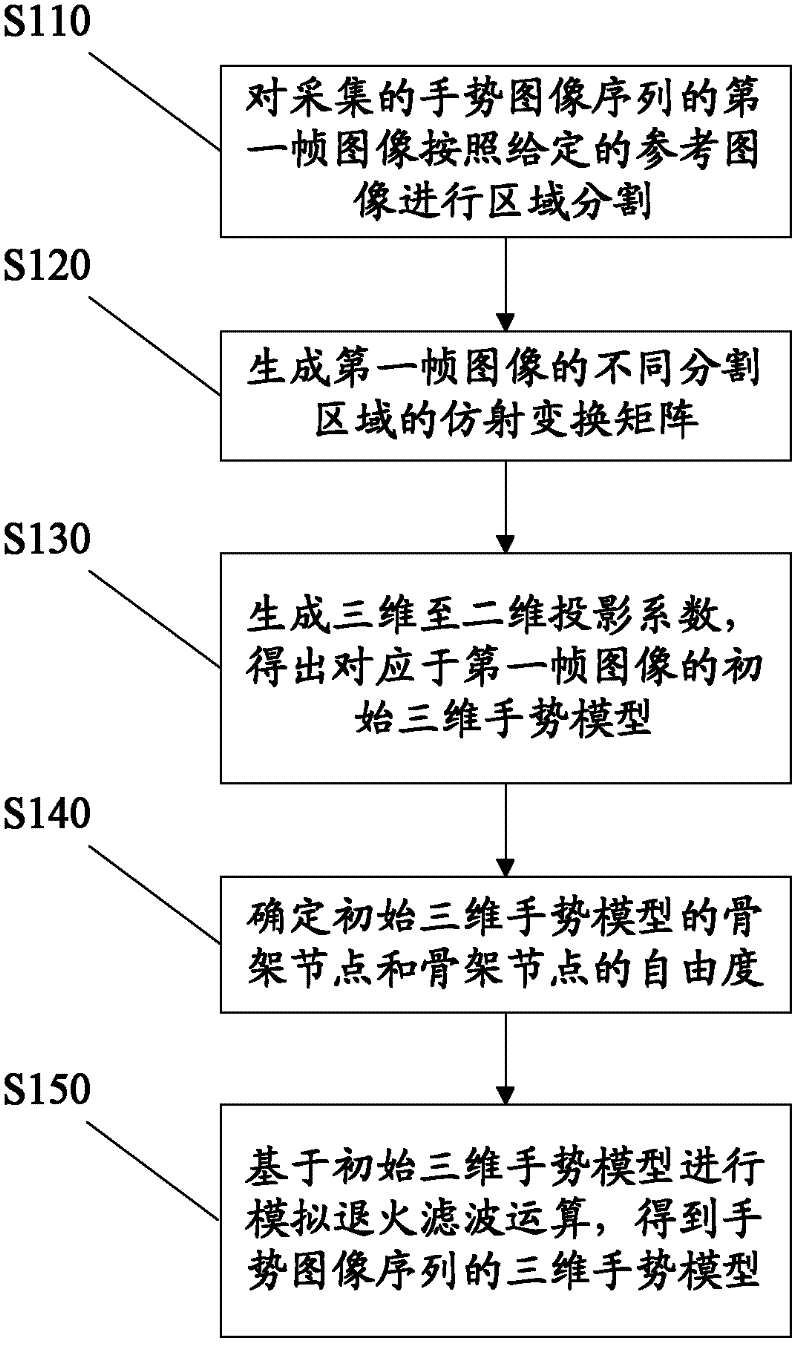

[0034] Figure 1 is a flow chart of the three-dimensional gesture motion reconstruction method according to Embodiment 1 of the present invention. Each step is described in detail below with reference to Figure 1.

[0035] Step S110: perform region segmentation on the first frame image of the collected gesture image sequence according to a given reference image.

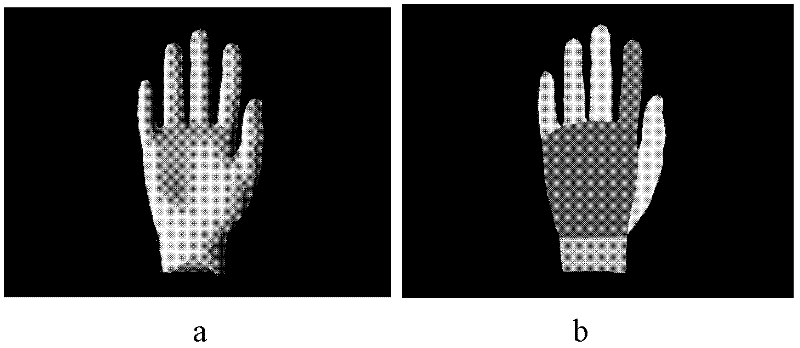

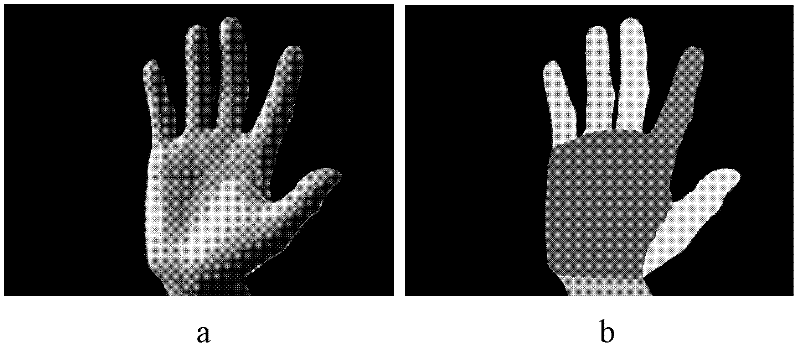

[0036] In this embodiment, the system pre-stores a reference image of a gesture, as shown in Figure 2 (a) and (b). The reference image segments the gesture into regions and distinguishes each region of the hand by color. This embodiment places certain requirements on the first frame of the gesture image sequence collected by the user: the first frame must show an open hand, so that every part of the hand is visible. Preferably, the hand in this pattern is free of self-occlusion and external occlusion, the fingers point upward, and the palm or the back of the hand is facing the c...
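The text states only that the reference image divides the hand into regions by color. As a minimal sketch, assuming a hypothetical palette in which each hand region is painted with one known RGB color, the pixels of a color-coded image can be labeled by their nearest palette color within a tolerance, and each region's pixel coordinates collected for the later steps. The palette, the tolerance value, and the functions `label_pixel`, `segment_by_color`, and `region_pixels` are illustrative assumptions; how the first frame itself is matched against the reference image is not described in this excerpt.

```python
from typing import Dict, List, Optional, Tuple

RGB = Tuple[int, int, int]

# Hypothetical palette: the source says hand regions are distinguished by
# color but does not give the colors, so these values are placeholders.
REGION_COLORS: Dict[str, RGB] = {
    "palm": (255, 0, 0),
    "thumb": (0, 255, 0),
    "index": (0, 0, 255),
}


def label_pixel(pixel: RGB, palette: Dict[str, RGB], tolerance: int) -> Optional[str]:
    """Return the region whose palette color is nearest to `pixel`, or None
    (background) if no palette color lies within `tolerance` (Euclidean RGB)."""
    best_name: Optional[str] = None
    best_dist = tolerance * tolerance + 1  # squared distances; accept d <= tolerance**2
    for name, color in palette.items():
        dist = sum((p - c) ** 2 for p, c in zip(pixel, color))
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name


def segment_by_color(image: List[List[RGB]], palette: Dict[str, RGB],
                     tolerance: int = 60) -> List[List[Optional[str]]]:
    """Label every pixel of a row-major RGB image with a region name or None."""
    return [[label_pixel(px, palette, tolerance) for px in row] for row in image]


def region_pixels(labels: List[List[Optional[str]]], name: str) -> List[Tuple[int, int]]:
    """Collect the (x, y) coordinates of all pixels labeled `name`."""
    return [(x, y) for y, row in enumerate(labels)
            for x, lab in enumerate(row) if lab == name]
```

For example, a 2×2 image whose pixels are near red, black, near green, and near blue is labeled `[['palm', None], ['thumb', 'index']]` with `REGION_COLORS` and the default tolerance. Nearest-color matching with a tolerance is one simple choice; a real system would more likely work in a perceptual color space.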

Embodiment 2

[0074] Figure 8 is a schematic structural diagram of a three-dimensional gesture motion reconstruction system according to Embodiment 2 of the present invention. The components of the system are described in detail below with reference to Figure 8.

[0075] The system is used to execute the method of Embodiment 1 of the present invention, and includes the following units:

[0076] a region segmentation unit, which performs region segmentation on the first frame image of the collected gesture image sequence according to a given reference image;

[0077] an affine transformation matrix generating unit, which generates an affine transformation matrix of each segmented region of the first frame image;

[0078] an initial model generation unit, which generates three-dimensional to two-dimensional projection coefficients, and obtains a three-dimensional gesture model corresponding to the first frame image according to the projection coefficients and the affine transformation ma...
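The units above do not specify how the affine matrices or the projection coefficients are computed, and the text is truncated. As a minimal sketch, assuming each segmented region comes with point correspondences between the reference image and the first frame, a region's 2×3 affine transformation matrix can be fitted by ordinary least squares; the same routine, given 3-D model points and their 2-D image positions, yields a 2×4 affine-camera projection matrix, which is one possible reading of "three-dimensional to two-dimensional projection coefficients". The correspondences, the least-squares formulation, the affine camera model, and the names `solve`, `fit_linear`, and `transform` are assumptions for illustration, not the patented method.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate correspondences: singular normal equations")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_linear(src: Sequence[Sequence[float]], dst: Sequence[Sequence[float]]) -> Matrix:
    """Least-squares fit of M such that dst[i] ≈ M @ [*src[i], 1].

    2-D src and 2-D dst give a 2x3 affine matrix [[a, b, tx], [c, d, ty]];
    3-D src and 2-D dst give a 2x4 affine-camera projection matrix.
    Each output row solves the normal equations (A^T A) m = A^T y.
    """
    rows = [[float(v) for v in p] + [1.0] for p in src]  # homogeneous design matrix A
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    result: Matrix = []
    for d in range(len(dst[0])):
        aty = [sum(r[i] * q[d] for r, q in zip(rows, dst)) for i in range(k)]
        result.append(solve(ata, aty))
    return result


def transform(m: Matrix, p: Sequence[float]) -> List[float]:
    """Map a point through a matrix returned by fit_linear."""
    h = [float(v) for v in p] + [1.0]
    return [sum(c * v for c, v in zip(row, h)) for row in m]
```

Called once per segmented region with that region's correspondences, `fit_linear` returns one affine matrix per region; called with 3-D model points and their image positions, it returns the projection matrix. At least three non-collinear 2-D points (or four non-coplanar 3-D points) are required, otherwise `solve` raises `ValueError`. Normal equations keep the sketch dependency-free; a production version would use a numerically sturdier solver such as QR or SVD.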
