Method for multi-directional representation of real objects in a shared augmented reality scene

The invention concerns real objects and augmented reality technology and is applied in image data processing, 3D modeling, instruments, and related fields. It addresses problems such as expensive instruments, little consideration of error effects, and a lack of information sharing in augmented reality scenes, and has the effect of reducing computational overhead.

Embodiment Construction

[0034] The present invention will be further described below in conjunction with the accompanying drawings, so that those of ordinary skill in the art can implement it after referring to this specification.

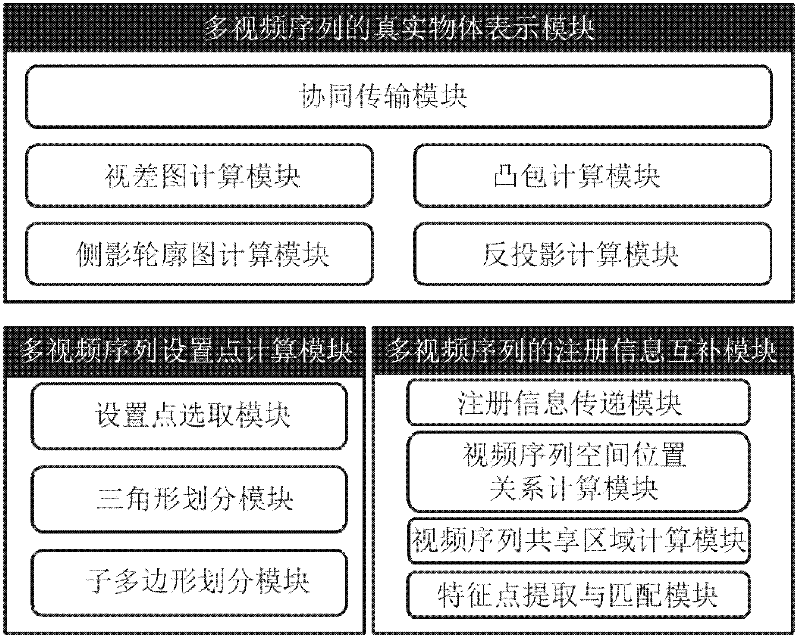

[0035] As shown in Figure 1, the method of the present invention for multi-directional representation of real objects in a shared augmented reality scene comprises the following steps:

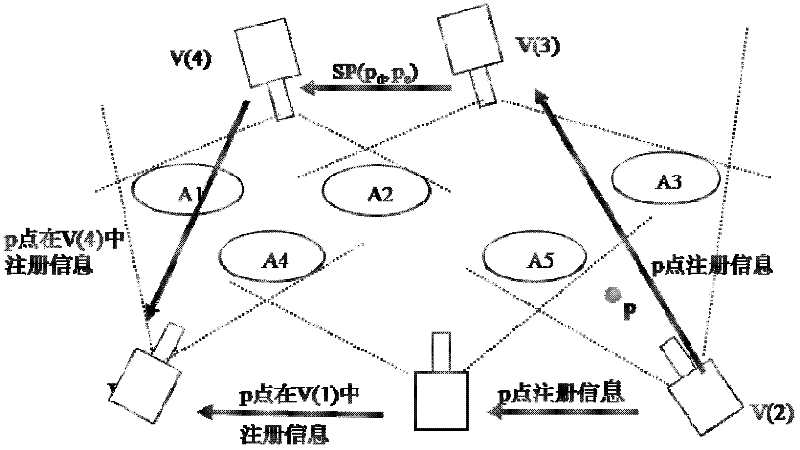

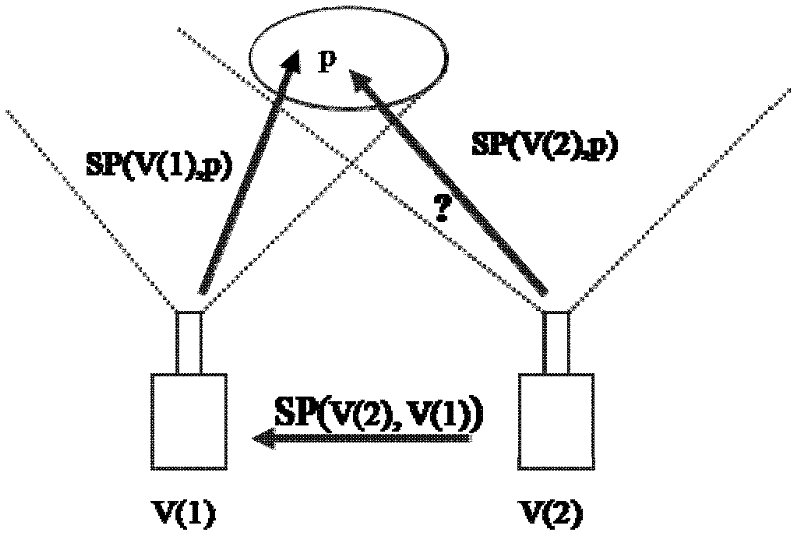

[0036] Step 1. The multi-video-sequence set-point calculation module abstracts the shared augmented reality scene area into a planar polygon P and uses a scan-line algorithm to divide the polygon into multiple triangles that do not overlap one another. First, the polygon is divided into multiple monotone polygons, and then each monotone polygon is divided into multiple triangles. In the process of decomposing the polygon into triangles, lines are generated that connect different vertices of the polygon. These lines lie completely inside the polygon. Such lines are call...
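The triangulation in Step 1 can be illustrated with a minimal Python sketch. Note that it does not implement the scan-line monotone decomposition described above; it substitutes ear clipping, a simpler technique that yields the same kind of result: triangles that do not overlap and whose added edges lie inside the polygon. The function names (`cross`, `signed_area`, `point_in_triangle`, `triangulate`) and the L-shaped test polygon are hypothetical and do not come from the patent. The sketch assumes a simple polygon with no repeated vertices and no collinear consecutive vertices.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """Twice the signed area of triangle (o, a, b); positive if counter-clockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def signed_area(poly: List[Point]) -> float:
    """Shoelace formula; positive if the vertices are in counter-clockwise order."""
    n = len(poly)
    return 0.5 * sum(
        poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1]
        for i in range(n)
    )


def point_in_triangle(p: Point, a: Point, b: Point, c: Point) -> bool:
    """True if p is inside or on the boundary of counter-clockwise triangle (a, b, c)."""
    return cross(a, b, p) >= 0 and cross(b, c, p) >= 0 and cross(c, a, p) >= 0


def triangulate(poly: List[Point]) -> List[Tuple[int, int, int]]:
    """Ear-clipping triangulation; returns triples of indices into poly."""
    idx = list(range(len(poly)))
    if signed_area(poly) < 0:
        idx.reverse()  # work in counter-clockwise order
    triangles = []
    while len(idx) > 3:
        for k in range(len(idx)):
            i, j, l = idx[k - 1], idx[k], idx[(k + 1) % len(idx)]
            a, b, c = poly[i], poly[j], poly[l]
            if cross(a, b, c) <= 0:
                continue  # reflex or degenerate corner: not an ear
            if any(point_in_triangle(poly[m], a, b, c)
                   for m in idx if m not in (i, j, l)):
                continue  # another vertex blocks this candidate ear
            triangles.append((i, j, l))  # edge (i, l) lies inside the polygon
            del idx[k]
            break
        else:
            raise ValueError("polygon is not simple or is degenerate")
    triangles.append((idx[0], idx[1], idx[2]))
    return triangles


if __name__ == "__main__":
    # Hypothetical L-shaped scene area, used only for illustration.
    scene = [(0, 0), (2, 0), (2, 1), (1, 1), (1, 2), (0, 2)]
    for tri in triangulate(scene):
        print(tri)
```

A polygon with n vertices always yields n - 2 triangles, so the six-vertex example produces four. Ear clipping as written takes cubic time in the worst case, which is acceptable for a scene outline with few vertices; a scan-line monotone decomposition would be the choice when the polygon is large.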
