Method for processing multi-viewpoint depth video

A depth video processing method, applied in the fields of digital video signal modification, television, electrical components, etc.

Embodiment Construction

[0044] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

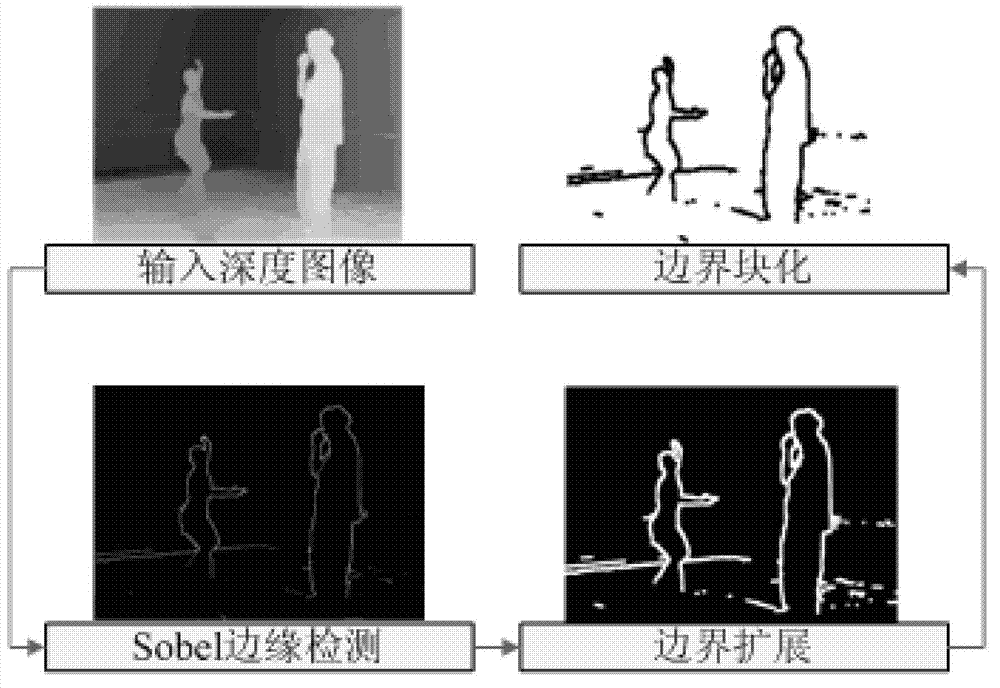

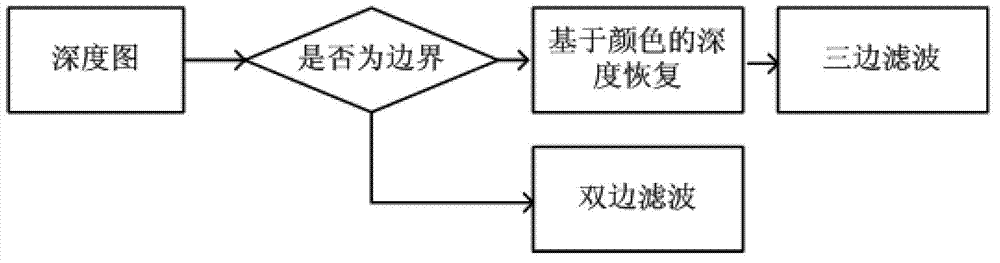

[0045] The method for processing multi-viewpoint depth video proposed by the present invention proceeds as follows: first, the original multi-viewpoint depth video to be processed is preprocessed to reduce the encoding bit rate; next, the preprocessed multi-viewpoint depth video is encoded and compressed, then decoded and reconstructed; depth restoration and spatial smoothing are then applied to the decoded and reconstructed multi-viewpoint depth video; finally, the processed multi-viewpoint depth video is used to render virtual-viewpoint video images.
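The order of the stages in [0045] can be sketched as a simple composition of functions. This is only an illustrative skeleton: the function name `process_multiview_depth` and its stage parameters are hypothetical, and each stage is passed in as a callable because the text above does not specify how any of them is implemented.

```python
from typing import Any, Callable

# Each stage is an opaque callable; its internals are not given in [0045].
Stage = Callable[[Any], Any]


def process_multiview_depth(
    depth_video: Any,
    preprocess: Stage,          # hypothetical: reduces the encoding bit rate
    encode: Stage,              # hypothetical: compresses the preprocessed depth video
    decode: Stage,              # hypothetical: decodes and reconstructs the depth video
    restore_and_smooth: Stage,  # hypothetical: depth restoration + spatial smoothing
    render: Stage,              # hypothetical: renders virtual-viewpoint images
) -> Any:
    """Run the stages in the order described in [0045]."""
    preprocessed = preprocess(depth_video)
    bitstream = encode(preprocessed)
    reconstructed = decode(bitstream)
    refined = restore_and_smooth(reconstructed)
    return render(refined)
```

Passing the stages as parameters keeps the sketch neutral about the codec and the rendering technique, neither of which is named in this paragraph.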

[0046] In this embodiment, the original multi-viewpoint depth video to be processed is preprocessed as follows:

[0047] A1. Use an existing edge detection operator to perform edge detection on each color image frame in the original multi-viewpoint color video corresponding to t...
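As an illustration of the kind of edge detection that step A1 refers to, the following minimal sketch applies the Sobel operator to one frame. The choice of Sobel, the BT.601 grayscale conversion, the threshold value, and the names `to_gray` and `sobel_edges` are all assumptions made for this example; the text only says that an existing edge detection operator is used.

```python
from typing import List, Tuple

Gray = List[List[float]]                  # grayscale frame: rows of intensities
RGB = List[List[Tuple[int, int, int]]]    # color frame: rows of (R, G, B) pixels

# Standard 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def to_gray(frame: RGB) -> Gray:
    """Convert a color frame to luma using BT.601 weights (an assumption)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]


def sobel_edges(gray: Gray, threshold: float = 128.0) -> List[List[bool]]:
    """Return a boolean edge map; border pixels are left as non-edges."""
    h, w = len(gray), len(gray[0])
    edges = [[False] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for j in range(3):
                for i in range(3):
                    p = gray[y + j - 1][x + i - 1]
                    gx += SOBEL_X[j][i] * p
                    gy += SOBEL_Y[j][i] * p
            # Mark the pixel as an edge when the gradient magnitude is large.
            edges[y][x] = (gx * gx + gy * gy) ** 0.5 >= threshold
    return edges
```

To cover a whole multi-viewpoint color video, `sobel_edges(to_gray(frame))` would be called once per frame of each viewpoint. Any other established operator (for example Canny or Prewitt) could be substituted without changing the surrounding steps.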

