Compiler data prefetching method and device

A compiler data prefetching technology in the field of data processing. It addresses problems such as the lack of collaborative support, the impact of software algorithms on overall performance, and the inability of an SPM to perform the basic functions of a cache, while ensuring flexibility and ease of implementation.


Embodiment Construction

[0021] The following will clearly and completely describe the technical solutions in the embodiments of the present invention with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only some, not all, embodiments of the present invention. All other embodiments obtained by those skilled in the art based on the embodiments of the present invention belong to the protection scope of the present invention.

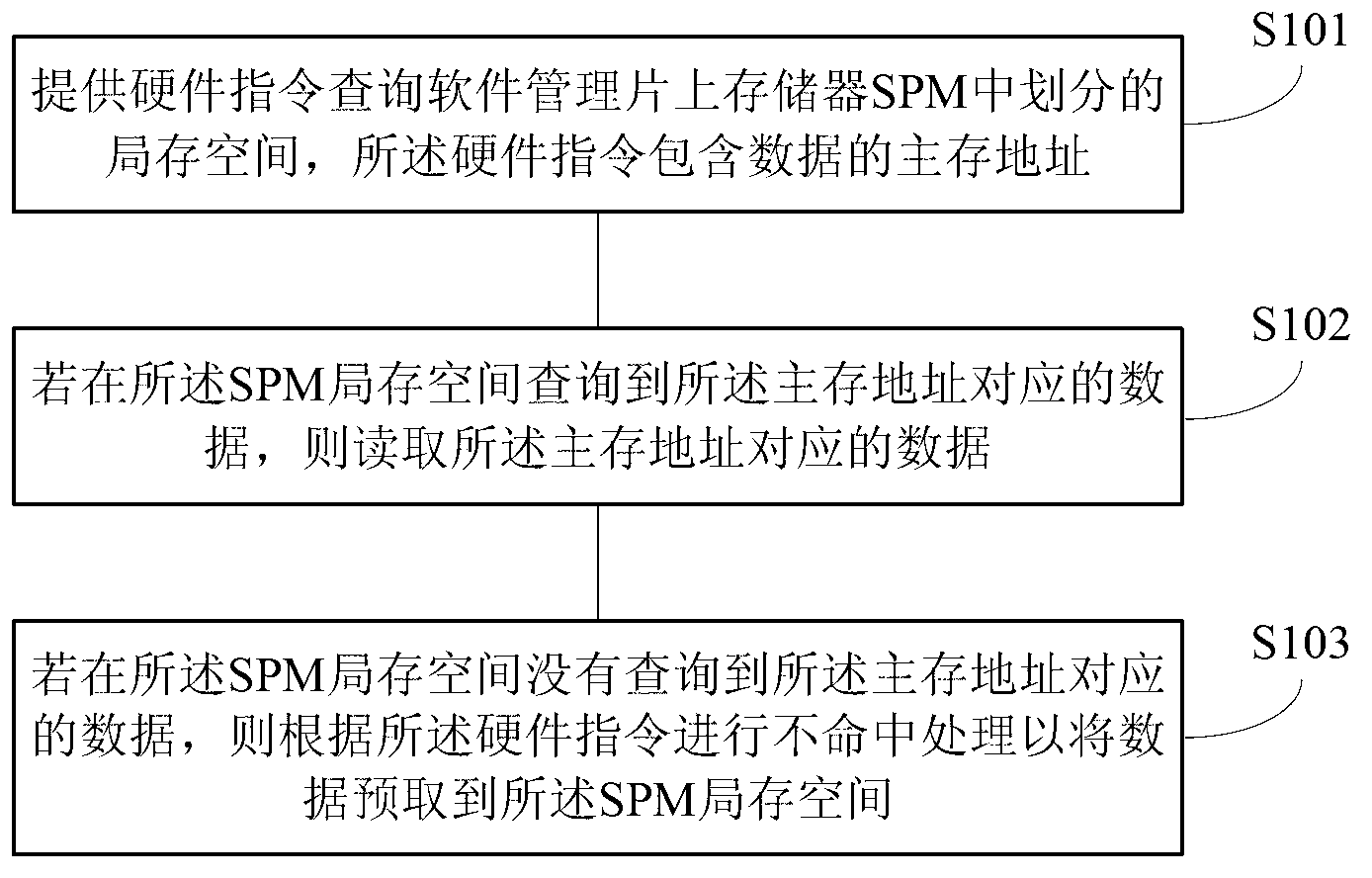

[0022] Referring to Figure 1, which is a schematic flow chart of the compiler data prefetching method provided by an embodiment of the present invention, the method mainly includes steps S101, S102 and S103, specifically:

[0023] S101. Provide a hardware instruction for querying the local storage space partitioned in the software-managed on-chip memory (SPM), where the hardware instruction carries the main-memory address of the data.
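The text does not specify the instruction's encoding or how the SPM local space is organized. As a minimal sketch, assuming the local space is managed as a direct-mapped table of fixed-size lines tagged with main-memory line addresses (a software-cache-style layout that is our assumption, not the patent's), the behavior of the S101 query can be modeled in Python: given a main-memory address, it reports whether the data is already resident in the SPM and, if so, at which local offset. The class `SPMLocalSpace`, its `query` and `fill` methods, the `prefetch` helper, and the 64-byte line and 8-line sizes are all hypothetical names and parameters chosen for illustration.

```python
from typing import List, Optional


class SPMLocalSpace:
    """Toy model of the local storage space partitioned in the SPM.

    Assumption (not from the patent): the space is a direct-mapped table of
    fixed-size lines, each tagged with the main-memory line address it holds.
    """

    def __init__(self, line_size: int = 64, num_lines: int = 8) -> None:
        self.line_size = line_size   # hypothetical bytes per SPM line
        self.num_lines = num_lines   # hypothetical number of lines
        # tags[i] is the main-memory line address held in line i, or None if empty
        self.tags: List[Optional[int]] = [None] * num_lines

    def _locate(self, main_addr: int):
        """Split a main-memory address into (line index, tag, byte offset)."""
        line_addr = main_addr // self.line_size
        return line_addr % self.num_lines, line_addr, main_addr % self.line_size

    def query(self, main_addr: int) -> Optional[int]:
        """Model of the query instruction: return the local SPM offset holding
        the data at main_addr, or None if that data is not resident."""
        index, tag, offset = self._locate(main_addr)
        if self.tags[index] != tag:
            return None
        return index * self.line_size + offset

    def fill(self, main_addr: int) -> int:
        """Stand-in for the transfer (e.g. DMA) that brings a line into the SPM,
        evicting whatever line previously occupied the same slot."""
        index, tag, offset = self._locate(main_addr)
        self.tags[index] = tag
        return index * self.line_size + offset


def prefetch(spm: SPMLocalSpace, main_addr: int) -> bool:
    """Hypothetical compiler-inserted prefetch: fill only on a query miss.
    Returns True if a fill was issued."""
    if spm.query(main_addr) is None:
        spm.fill(main_addr)
        return True
    return False
```

For example, after `prefetch(spm, 0x1000)` issues a fill, `prefetch(spm, 0x1008)` finds that address already resident in the same line and issues nothing, while `spm.query(0x1008)` returns local offset 8. In hardware the query would be a single instruction; the Python model only illustrates how a hit/miss answer keyed on the main-memory address lets prefetch code skip redundant transfers.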

[0024] On the software-managed on-chip memory (Scratch Pad Memory, ...
