Method for processing spatial hand gesture commands based on a depth camera

This technology combines a depth camera with spatial gesture recognition and is applied in image data processing, instruments, computing and related fields. It addresses three problems: large volumes of computation data, complex device structures, and the inability to acquire data accurately and quickly.


Embodiment Construction

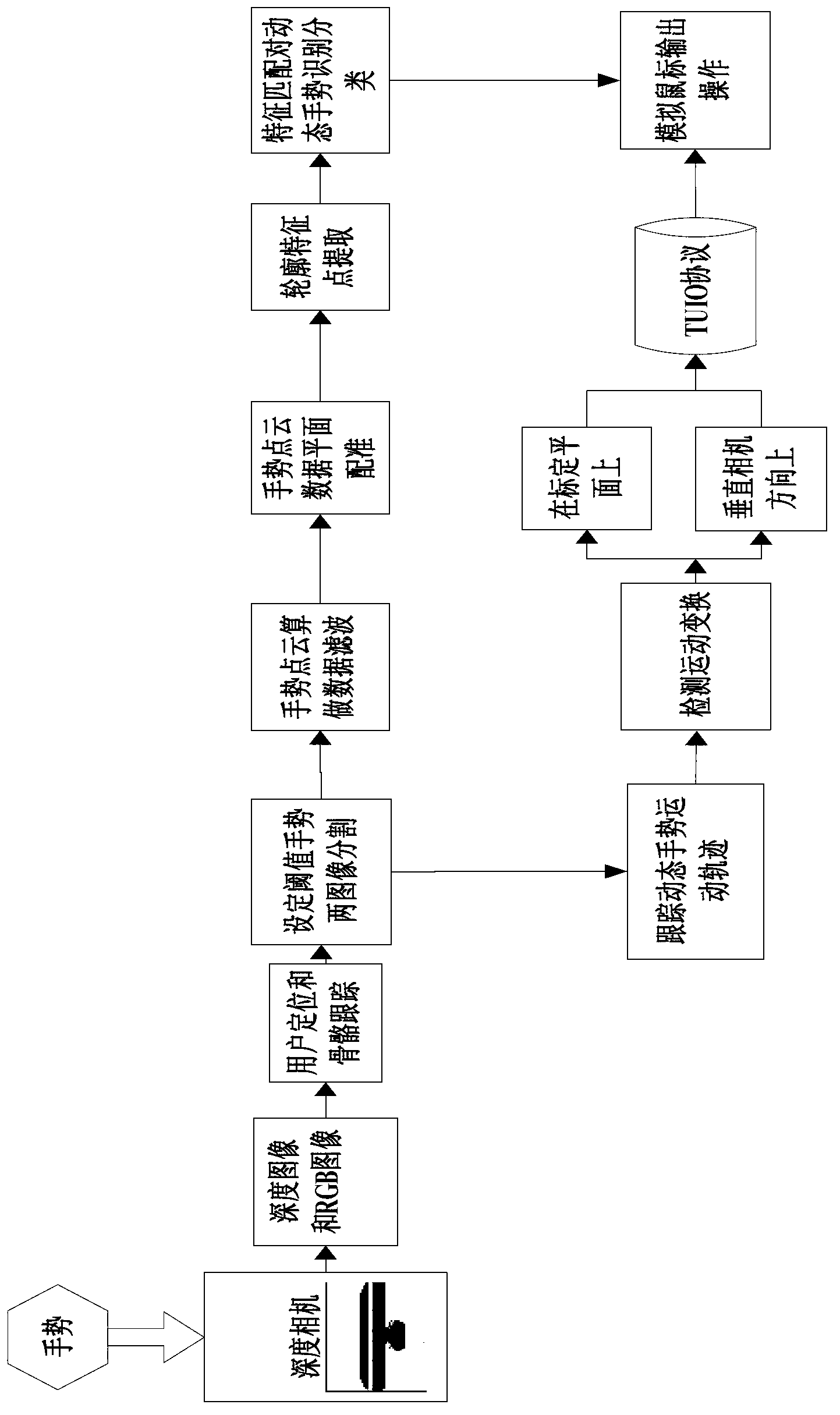

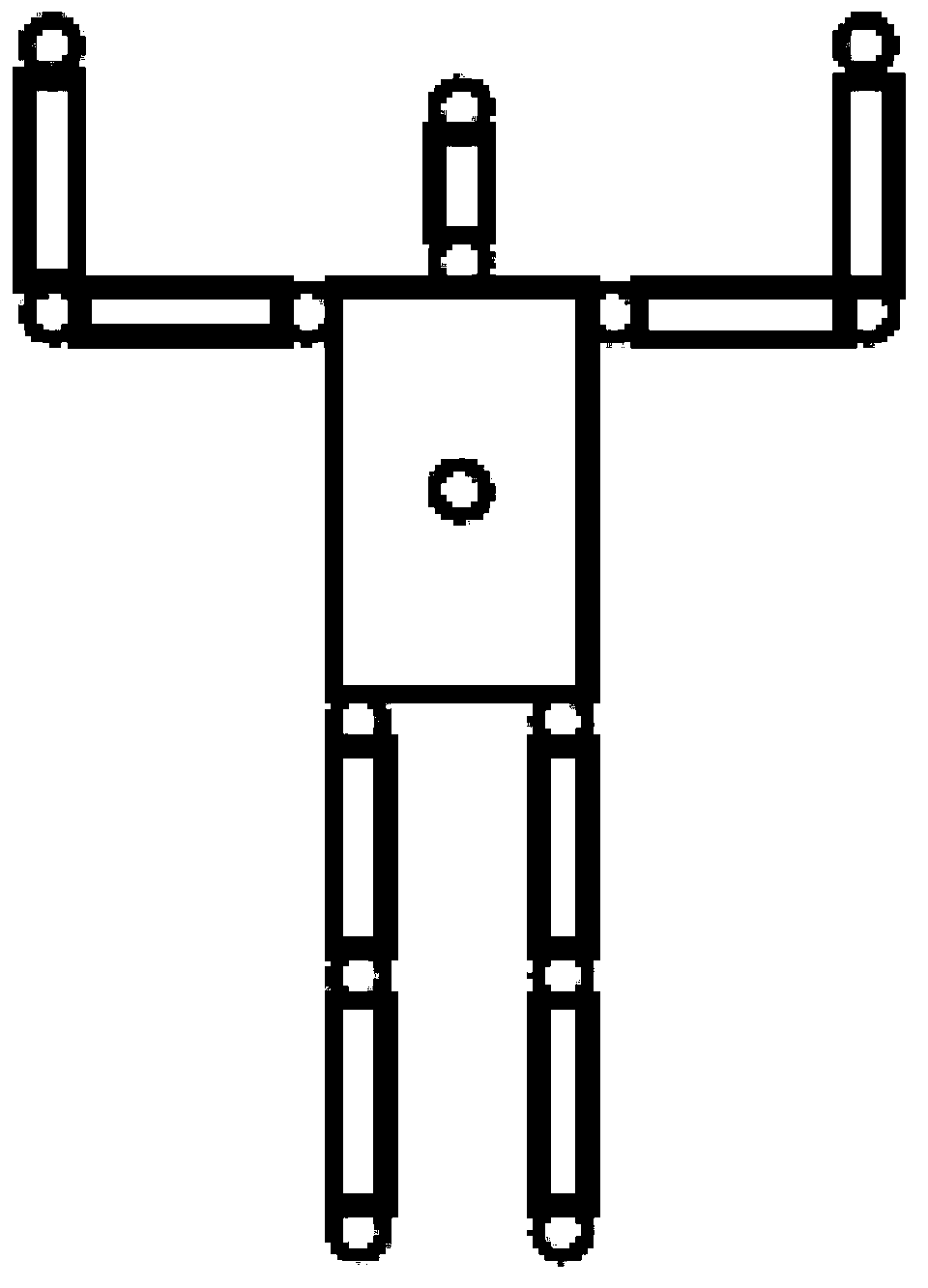

[0031] As shown in Figures 1-2, the present invention comprises the following steps:

[0032] Step 1: acquire real-time images with the depth camera. These images include depth images and RGB color images.
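One way to represent a single capture from Step 1 is a container that holds the depth image and the RGB image together. The sketch below is illustrative only. The `FramePair` class, its fields, and the assumption that both images are registered to the same resolution (so that pixel (x, y) refers to the same scene point in both) are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical container for one capture from the depth camera.
# Assumption: the depth and RGB images are registered to the same
# resolution, so pixel (x, y) refers to the same scene point in both.
Depth = List[List[int]]                 # depth[y][x], raw depth value
RGB = List[List[Tuple[int, int, int]]]  # rgb[y][x] = (r, g, b)


@dataclass
class FramePair:
    depth: Depth
    rgb: RGB
    timestamp_s: float

    def __post_init__(self) -> None:
        # Reject pairs whose images do not share the same width and height.
        if len(self.depth) != len(self.rgb):
            raise ValueError("depth and RGB heights differ")
        for d_row, c_row in zip(self.depth, self.rgb):
            if len(d_row) != len(c_row):
                raise ValueError("depth and RGB widths differ")

    @property
    def size(self) -> Tuple[int, int]:
        """Return (width, height) of the shared image grid."""
        height = len(self.depth)
        width = len(self.depth[0]) if height else 0
        return width, height
```

Validating the shapes at construction time means later steps can index `depth[y][x]` and `rgb[y][x]` with the same coordinates without re-checking.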

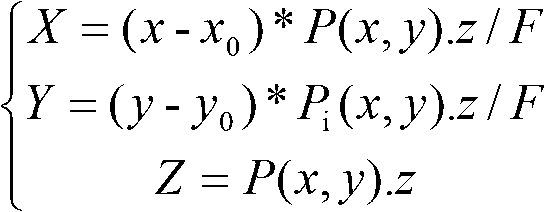

[0033] The depth camera works on the structured-light encoding principle and captures both RGB images and depth images. A depth image contains the two-dimensional XY coordinates of the scene and, for each pixel, a depth value that reflects the distance of that scene point from the camera. The depth value is the distance measured by the IR camera from the reflected infrared light, and it is stored as a gray value in the depth image. The greater the depth value, the farther the corresponding scene point is from the camera plane. Equivalently, the closer a point is to the camera, the smaller its depth value.
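The convention in [0033] can be illustrated with a minimal sketch: each pixel carries XY coordinates plus a depth value, and a larger depth value (a farther point) is stored as a larger gray level. Several details below are assumptions made only for illustration and are not values from the patent: the working range `NEAR_MM`/`FAR_MM`, the linear mapping, and treating a reading of 0 as "no measurement".

```python
from typing import List, Tuple

# Assumed working range of the sensor in millimetres (hypothetical values;
# the real range depends on the camera model).
NEAR_MM = 500
FAR_MM = 4500


def depth_to_gray(depth_mm: int, near: int = NEAR_MM, far: int = FAR_MM) -> int:
    """Map a distance in mm to an 8-bit gray value.

    Farther points get larger gray values, matching the convention that a
    greater depth value means a point is farther from the camera plane.
    Assumption: 0 means "no measurement" and maps to gray 0; valid
    distances map linearly onto 1..255 (clamped to the working range).
    """
    if depth_mm <= 0:
        return 0
    clamped = min(max(depth_mm, near), far)
    return 1 + round(254 * (clamped - near) / (far - near))


def depth_image_to_gray(depth: List[List[int]]) -> List[List[int]]:
    """Apply depth_to_gray to every pixel; indexing stays depth[y][x]."""
    return [[depth_to_gray(d) for d in row] for row in depth]


def to_points(depth: List[List[int]]) -> List[Tuple[int, int, int]]:
    """List (x, y, depth) for every pixel with a valid (non-zero) reading."""
    return [(x, y, d) for y, row in enumerate(depth) for x, d in enumerate(row) if d > 0]
```

For example, with the assumed range a point at 1000 mm receives a smaller gray value than one at 2000 mm, so nearer objects appear darker in the resulting gray image.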

[0034] When the depth camera captures images, the frame rate is set to 30 FPS, and the size of the images ca...
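The 30 FPS setting in [0034] fixes the timing budget of the pipeline: the nominal period between frames is 1000 / 30 ≈ 33.3 ms. The sketch below works through that arithmetic. The `estimate_dropped_frames` helper and its 1.5-period gap threshold are hypothetical and only show how the nominal period could be used to check capture timestamps.

```python
from typing import Sequence

FPS = 30  # frame rate given in [0034]


def frame_period_ms(fps: int = FPS) -> float:
    """Nominal time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frames_in_window(window_ms: int, fps: int = FPS) -> int:
    """Whole frames expected in a window of window_ms milliseconds.

    Integer arithmetic avoids float rounding (e.g. 700 ms -> 21 frames).
    """
    return window_ms * fps // 1000


def estimate_dropped_frames(timestamps_ms: Sequence[float], fps: int = FPS) -> int:
    """Hypothetical check: count frames missing between consecutive timestamps.

    A gap longer than 1.5 nominal periods is assumed to hide
    round(gap / period) - 1 dropped frames.
    """
    period = frame_period_ms(fps)
    dropped = 0
    for prev, curr in zip(timestamps_ms, timestamps_ms[1:]):
        gap = curr - prev
        if gap > 1.5 * period:
            dropped += round(gap / period) - 1
    return dropped
```

At 30 FPS, any per-frame processing must finish in roughly 33 ms to keep up with the camera.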
