Method for extracting human kinematic parameters

A method for extracting human kinematic parameters, applied in the field of human motion analysis. It addresses the high price, complicated operation, and complex calculation of existing approaches, and achieves easy operation, a simple procedure, and low cost.

Embodiment Construction

[0022] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

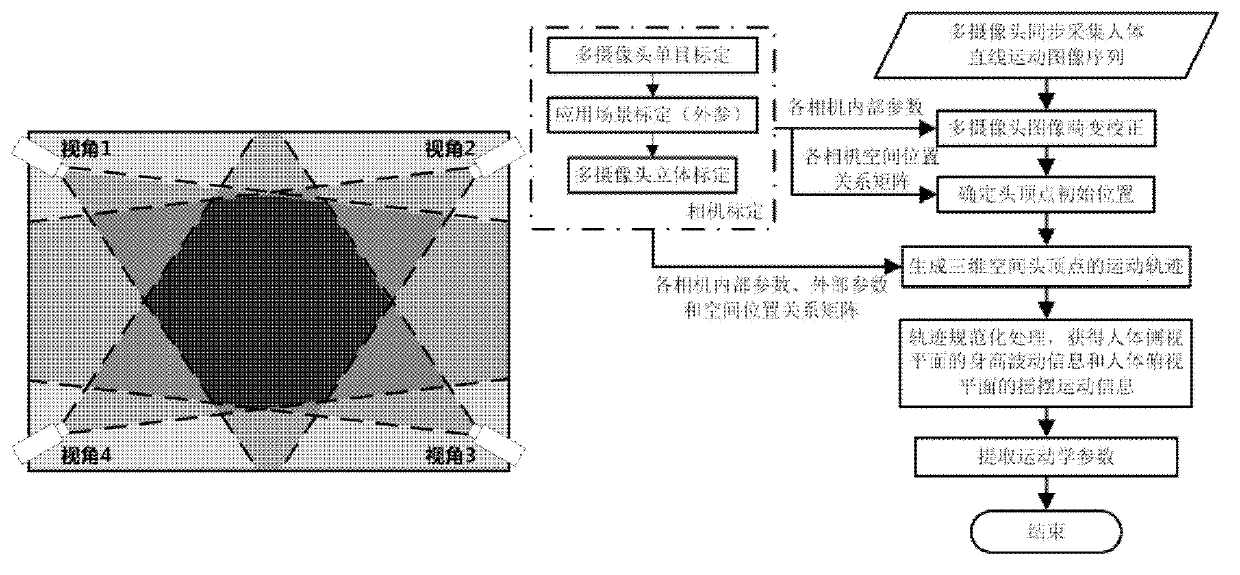

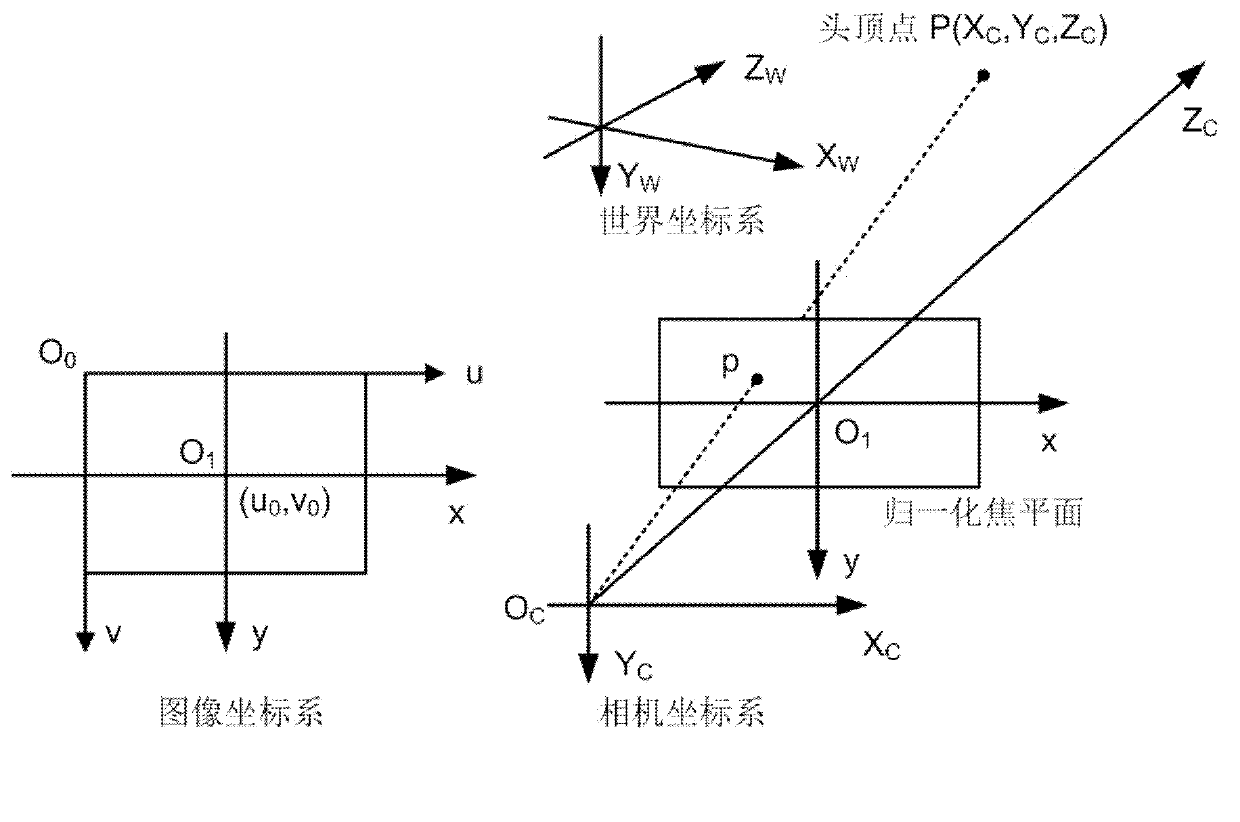

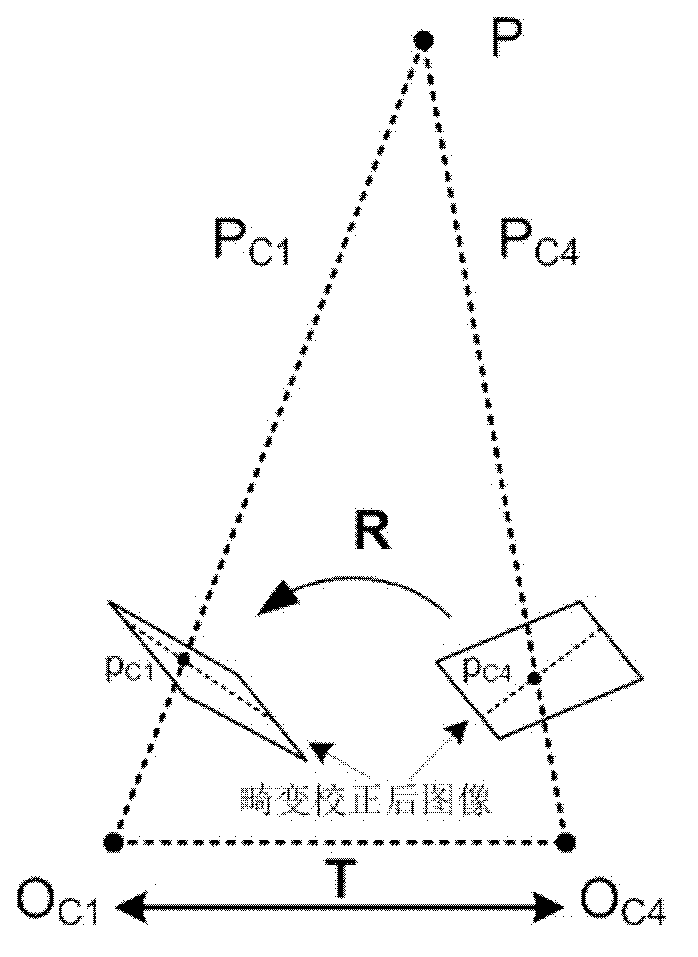

[0023] Figure 1 gives a general block diagram of the method for extracting human kinematic parameters in the present invention. As the figure shows, the method comprises five steps: camera calibration, initial positioning of the head apex, generation of the three-dimensional motion trajectory of the head apex, normalization of the trajectory, and extraction of kinematic parameters. The input data is a sequence of images of human linear motion collected synchronously by multiple cameras; the images from each camera undergo distortion correction before further processing, to eliminate image distortion caused by the lens. Each step of the present invention...
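The excerpt does not state how the three-dimensional trajectory of the head apex is computed from the synchronized views. As a minimal sketch only, the following assumes that camera calibration has produced a 3x4 projection matrix per camera and that the head apex has already been located in each distortion-corrected image; under those assumptions, standard linear (DLT) triangulation can recover one 3D point per frame. The function names `triangulate_point`, `head_trajectory`, and `project`, the intrinsic matrix, and the synthetic two-camera setup are all hypothetical and are not taken from the patent.

```python
import numpy as np


def triangulate_point(projections, pixels):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: list of 3x4 camera projection matrices (assumed known
                 from calibration).
    pixels:      list of (u, v) undistorted image coordinates, one per camera.
    """
    rows = []
    for P, (u, v) in zip(projections, pixels):
        P = np.asarray(P, dtype=float)
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The least-squares solution is the right singular vector belonging
    # to the smallest singular value of A.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def head_trajectory(projections, tracks):
    """Triangulate a per-frame track; tracks[c][t] is (u, v) in camera c, frame t."""
    n_frames = len(tracks[0])
    return np.array([
        triangulate_point(projections, [track[t] for track in tracks])
        for t in range(n_frames)
    ])


def project(P, X):
    """Project a 3D point X with projection matrix P (used to build test data)."""
    x = np.asarray(P, dtype=float) @ np.append(X, 1.0)
    return x[:2] / x[2]


# Synthetic check with two hypothetical cameras one unit apart along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
projections = [P1, P2]

# A made-up head-apex path: straight-line motion along x at constant depth.
truth = np.array([[-0.5 + 0.2 * t, -0.2, 5.0] for t in range(6)])
tracks = [[project(P, X) for X in truth] for P in projections]

recovered = head_trajectory(projections, tracks)
print(np.allclose(recovered, truth, atol=1e-6))
```

With noise-free synthetic observations the recovered points should match the input path to numerical precision. With real detections the same least-squares formulation applies, though the result then depends on calibration accuracy and on how precisely the head apex is located in each image.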
