Visual positioning method in a dynamic environment

A visual positioning technology for dynamic scenes, applied in image data processing, instruments, computing, and related fields. It addresses reduced positioning accuracy, positioning errors, and mismatched feature points, with the effects of reducing errors, increasing matching stability, and improving real-time performance and effectiveness.


Detailed Description of the Embodiments

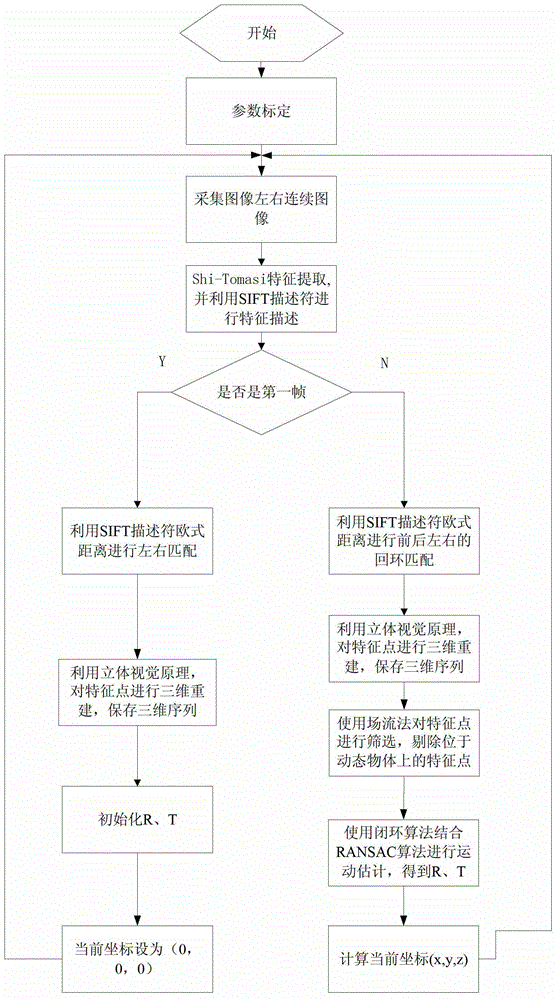

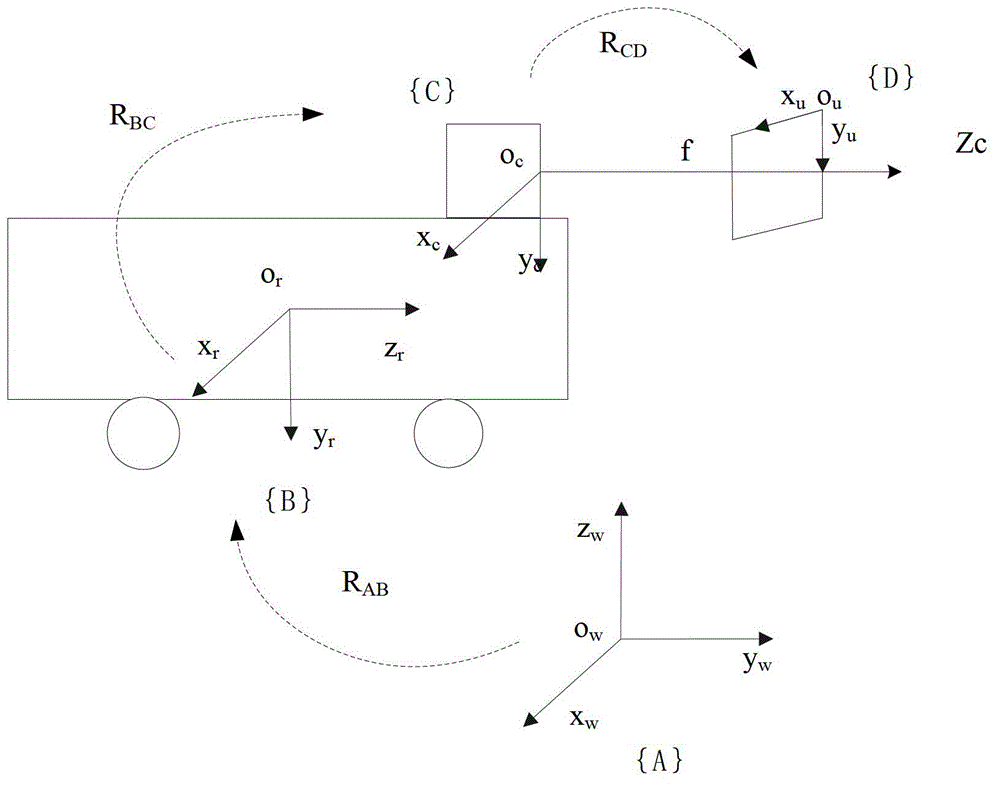

[0030] The method of the present invention is described in detail below with reference to the accompanying drawings:

[0031] Step 1: Calibrate the binocular camera to obtain its intrinsic and extrinsic parameters, including the focal length $f$, the baseline length $b$, the pixel position of the image center $(u_0, v_0)$, and the rectification matrix for the whole image.
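
The parameters listed in Step 1 are the ones needed to recover 3D points from a rectified stereo pair. The patent does not spell out its triangulation formulas, so the following is only a sketch assuming the standard rectified pinhole model: a single focal length $f$ in pixels shared by both cameras, a common principal point $(u_0, v_0)$, and a match with horizontal disparity $d = u_L - u_R$ having depth $Z = fb/d$. The names `StereoParams` and `triangulate` are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class StereoParams:
    f: float   # focal length in pixels (assumed equal for both cameras)
    b: float   # baseline length, e.g. in metres
    u0: float  # principal point column (image center)
    v0: float  # principal point row (image center)


def triangulate(p: StereoParams, u_left: float, v_left: float,
                u_right: float) -> Tuple[float, float, float]:
    """Return (X, Y, Z) in the left-camera frame for a rectified stereo match.

    Assumes the images are already rectified, so the match lies on the same
    row in both images and only the column differs.
    """
    d = u_left - u_right  # horizontal disparity in pixels
    if d <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    Z = p.f * p.b / d            # depth from similar triangles
    X = (u_left - p.u0) * Z / p.f  # back-project the column offset
    Y = (v_left - p.v0) * Z / p.f  # back-project the row offset
    return X, Y, Z
```

With illustrative values $f = 700$ px, $b = 0.12$ m and $(u_0, v_0) = (320, 240)$, a match at $u_L = 390$, $u_R = 348$ on row 240 has $d = 42$ px, so $Z = 700 \times 0.12 / 42 = 2.0$ m and $X = 70 \times 2.0 / 700 = 0.2$ m.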

[0032] Step 2: Start the binocular camera, continuously capture left and right images, and rectify each image pair using the camera parameters obtained in Step 1.
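
The text does not say how the correction matrix is applied. A common approach, used for instance by OpenCV's `stereoRectify`, `initUndistortRectifyMap` and `remap`, is inverse mapping: for each pixel of the rectified output, compute where it comes from in the raw image and sample there. The sketch below assumes the correction for one camera is a 3×3 homography, ignores lens distortion, and uses nearest-neighbour sampling; `apply_homography` and `warp_image` are hypothetical names.

```python
from typing import List, Tuple

Matrix3 = List[List[float]]
Image = List[List[float]]


def apply_homography(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map pixel (u, v) through a 3x3 homography using homogeneous coordinates."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity")
    return x / w, y / w


def warp_image(img: Image, H_inv: Matrix3, fill: float = 0.0) -> Image:
    """Build the rectified image by inverse-mapping every output pixel.

    H_inv maps rectified (output) coordinates back to raw (input) coordinates.
    Pixels that fall outside the raw image are set to `fill`.
    """
    rows, cols = len(img), len(img[0])
    out = [[fill] * cols for _ in range(rows)]
    for v in range(rows):
        for u in range(cols):
            us, vs = apply_homography(H_inv, u, v)
            ui, vi = round(us), round(vs)  # nearest-neighbour sampling
            if 0 <= vi < rows and 0 <= ui < cols:
                out[v][u] = img[vi][ui]
    return out
```

Inverse mapping assigns every output pixel exactly once, which forward mapping does not guarantee; bilinear interpolation in place of `round` would give smoother results at some extra cost.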

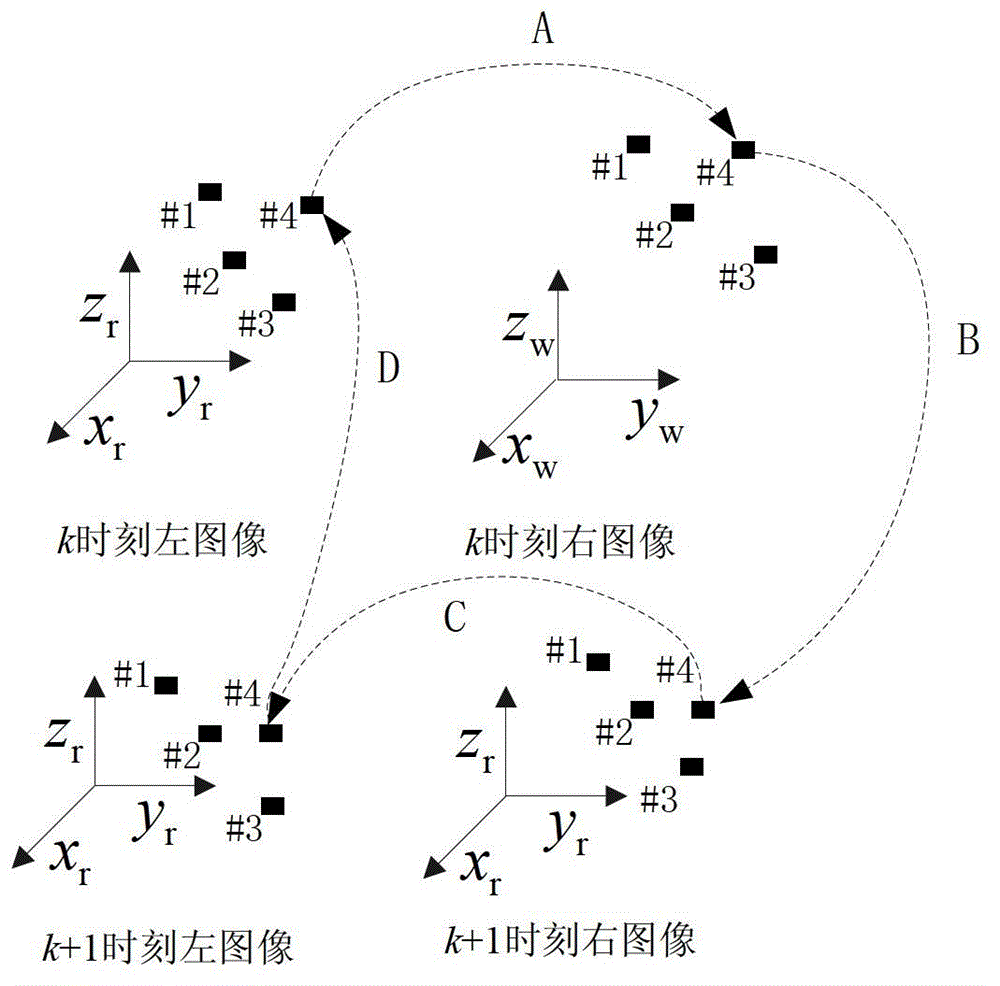

[0033] Step 3: If the image is the first frame, extract features from the captured left and right images with the Sobel operator, describe them with SIFT descriptors, and perform feature matching between the left and right images to obtain the correspondences between feature points. If the image is not the first frame, feature matching is performed across all four images from the two consecutive moments (the left and right images at the previous and current times), and the accuracy of the matching ...
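
The source is cut off before it explains how the four-image matching is checked. One widely used scheme in stereo visual odometry, the "circular matching" of the libviso2 library, chains matches left(t-1) → right(t-1) → right(t) → left(t) and keeps a feature only if the chain returns to where it started. The sketch below assumes that scheme, treats the descriptors (e.g. SIFT vectors) as already computed, and uses brute-force nearest-neighbour matching with Lowe's ratio test; `match_ratio`, `circular_matches` and the 0.8 ratio are illustrative assumptions, not parameters from the patent.

```python
import math
from typing import Dict, List, Sequence, Tuple

Descriptor = Sequence[float]


def match_ratio(src: List[Descriptor], dst: List[Descriptor],
                ratio: float = 0.8) -> Dict[int, int]:
    """Brute-force nearest-neighbour matching with Lowe's ratio test.

    Returns {index in src: index in dst}, keeping a match only when the best
    distance is clearly smaller than the second-best distance.
    """
    matches: Dict[int, int] = {}
    if len(dst) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(src):
        dists = sorted((math.dist(d, e), j) for j, e in enumerate(dst))
        (best, j_best), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches[i] = j_best
    return matches


def circular_matches(left_prev: List[Descriptor], right_prev: List[Descriptor],
                     right_curr: List[Descriptor], left_curr: List[Descriptor],
                     ratio: float = 0.8) -> List[Tuple[int, int, int, int]]:
    """Keep features whose match chain closes:
    left_prev -> right_prev -> right_curr -> left_curr -> left_prev.

    Returns (i_lp, i_rp, i_rc, i_lc) index quadruples, one per surviving feature.
    """
    m_lr = match_ratio(left_prev, right_prev, ratio)   # stereo match at t-1
    m_rr = match_ratio(right_prev, right_curr, ratio)  # temporal match, right camera
    m_rl = match_ratio(right_curr, left_curr, ratio)   # stereo match at t
    m_ll = match_ratio(left_curr, left_prev, ratio)    # temporal match back, left camera
    quads = []
    for i_lp, i_rp in m_lr.items():
        i_rc = m_rr.get(i_rp)
        if i_rc is None:
            continue
        i_lc = m_rl.get(i_rc)
        if i_lc is None:
            continue
        if m_ll.get(i_lc) == i_lp:  # chain returned to the starting feature
            quads.append((i_lp, i_rp, i_rc, i_lc))
    return quads
```

Features whose chain does not close are discarded as likely mismatches, so only correspondences that agree across all four images are passed on to pose estimation.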
