Asymmetrical voice conversion method based on deep neural network feature mapping

A technique combining deep neural networks and feature mapping, applied to asymmetric speech conversion, which can address problems such as large data volume, unsatisfactory voice quality, and system performance deterioration.

Embodiment Construction

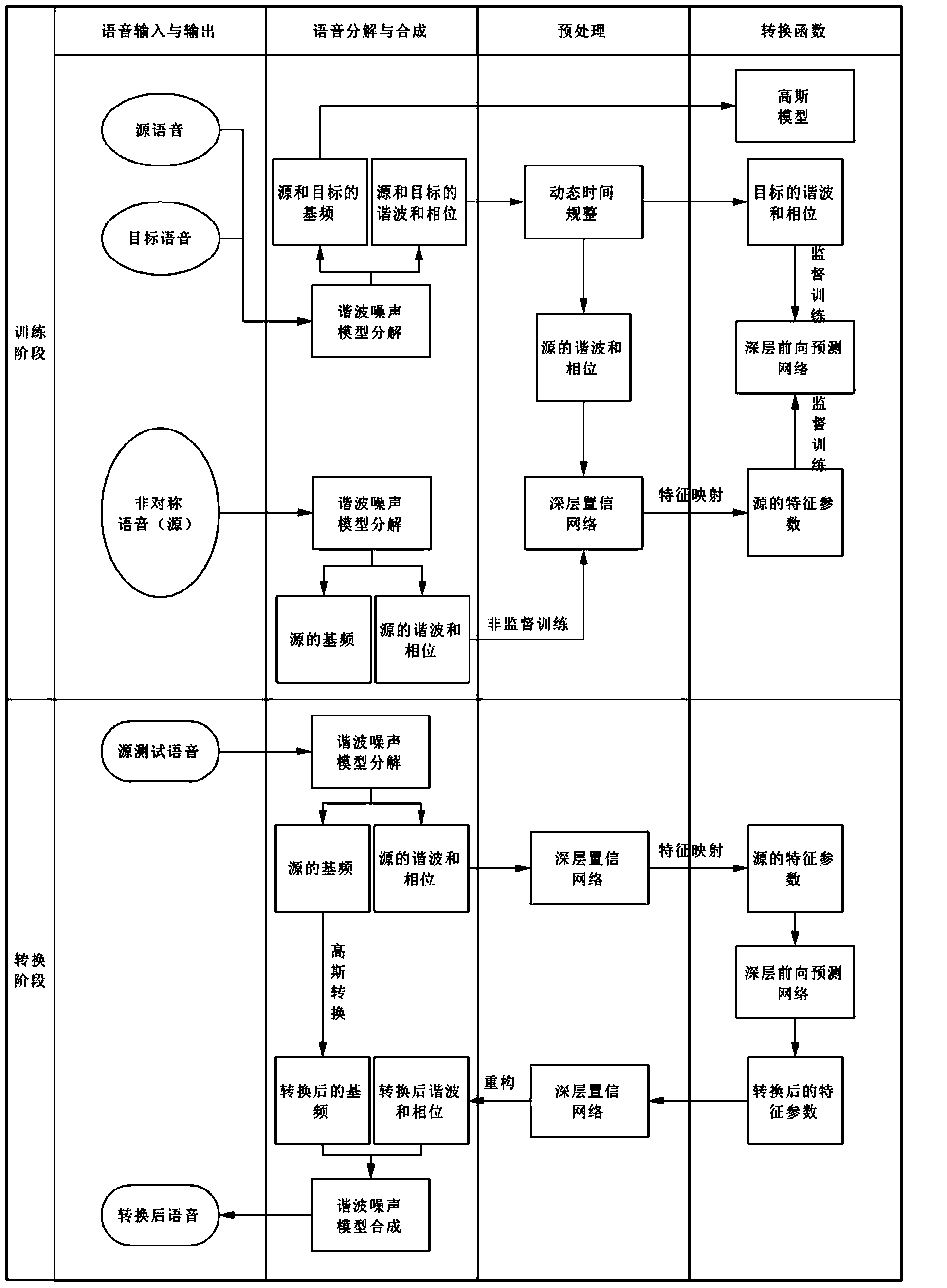

[0043] The present invention will be described in further detail below with reference to the accompanying drawings and examples.

[0044] To deal effectively with the problems of "asymmetric data" and "lack of data" in real environments, the present invention designs the following data acquisition and integration scheme for subsequent operations. In most applications, the target speaker's voice data is obtained passively, so it is harder to collect and its volume is often insufficient. By contrast, the source speaker's voice data is collected actively, so it is relatively easy to collect and relatively plentiful. For this reason, on the basis of the existing source speech data, the source speaker records a small amount of sound data with the same semantic content as the collected speech of the target speaker, to serve as a reference (the source speaker incrementally records a small a...
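The data layout described in [0044] can be illustrated with a minimal sketch. It is not the patent's implementation: the `AsymmetricCorpus` class, the `integrate` function, the file names, and the use of a transcript string as the key for "same semantic content" are all assumptions made for illustration. The sketch only shows how a small parallel set and a larger source-only set might be separated.

```python
from dataclasses import dataclass, field


@dataclass
class AsymmetricCorpus:
    """Hypothetical container for the data layout described in [0044]."""
    # (source_clip, target_clip) pairs that share the same semantic content
    parallel_pairs: list = field(default_factory=list)
    # plentiful source clips that have no matching target recording
    source_only: list = field(default_factory=list)


def integrate(source_clips, target_clips):
    """Split source clips into parallel and source-only sets.

    Both arguments map a transcript (assumed stand-in for semantic
    content) to a clip identifier.
    """
    corpus = AsymmetricCorpus()
    for text, src in source_clips.items():
        if text in target_clips:
            corpus.parallel_pairs.append((src, target_clips[text]))
        else:
            corpus.source_only.append(src)
    return corpus


# Illustrative data: many source clips, very few target clips.
source = {
    "hello": "s1.wav",
    "good morning": "s2.wav",
    "thank you": "s3.wav",
    "see you": "s4.wav",
}
target = {"thank you": "t1.wav"}

corpus = integrate(source, target)
print(len(corpus.parallel_pairs), len(corpus.source_only))
```

In this toy example the source speaker has four clips and the target speaker has one, so the parallel set is much smaller than the source-only set, which mirrors the asymmetry the paragraph describes.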
