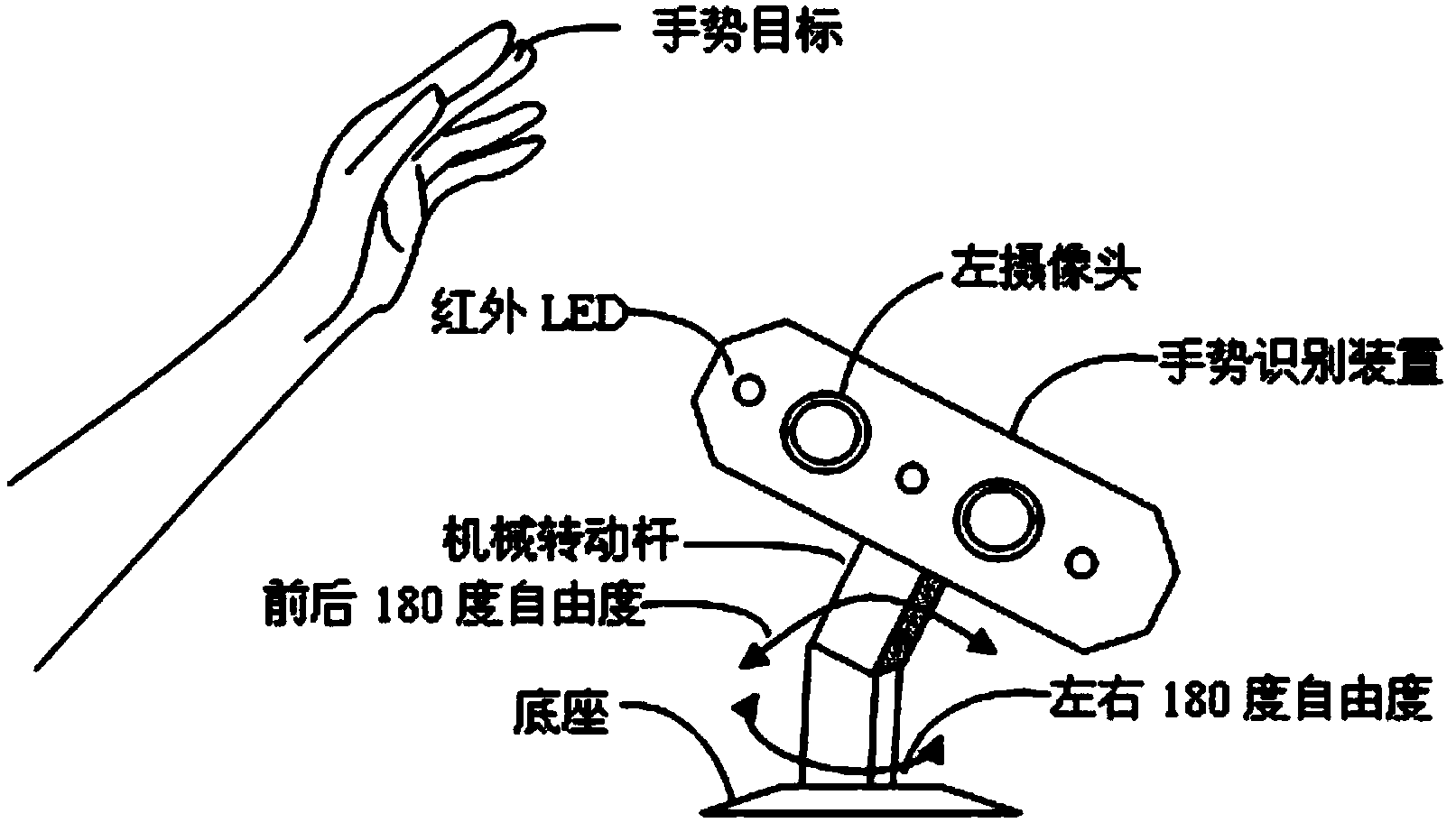

Real-time gesture recognition method and device

A real-time gesture recognition technology, applied in fields such as character and pattern recognition, instruments, and user/computer interaction input/output, that addresses the problems of increased recognition-algorithm complexity, template updates, poor versatility, and increased process difficulty.

Examples

Embodiment 1

[0082] Embodiment 1. The specific steps are as shown in Figure 3:

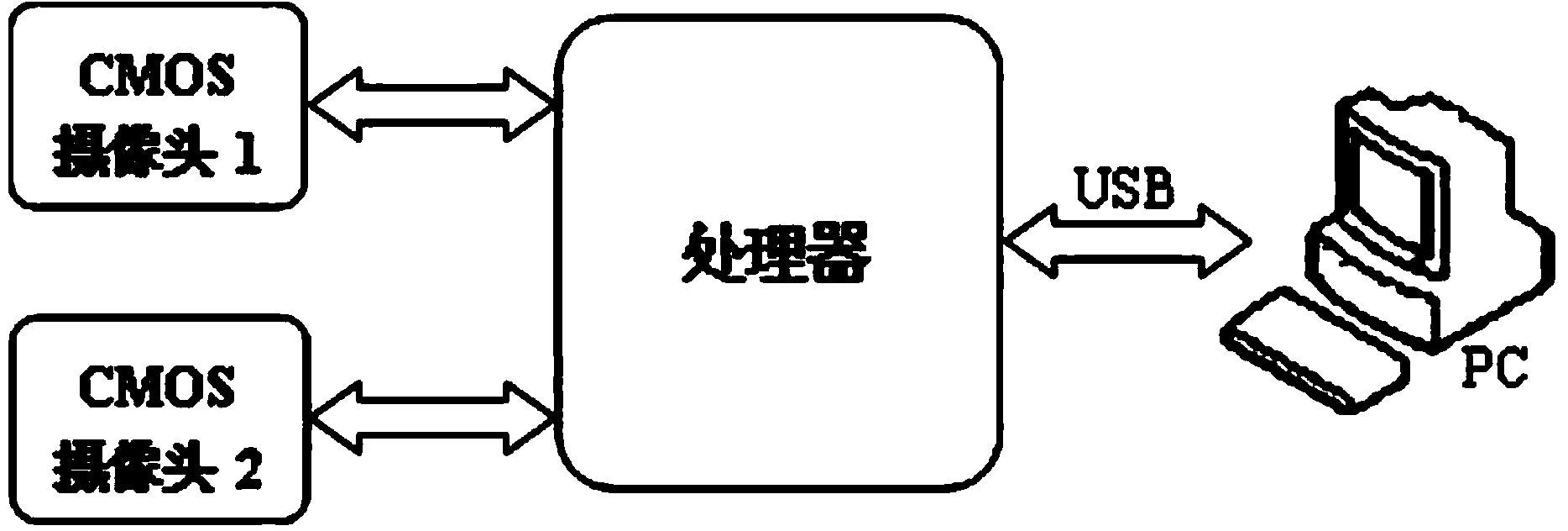

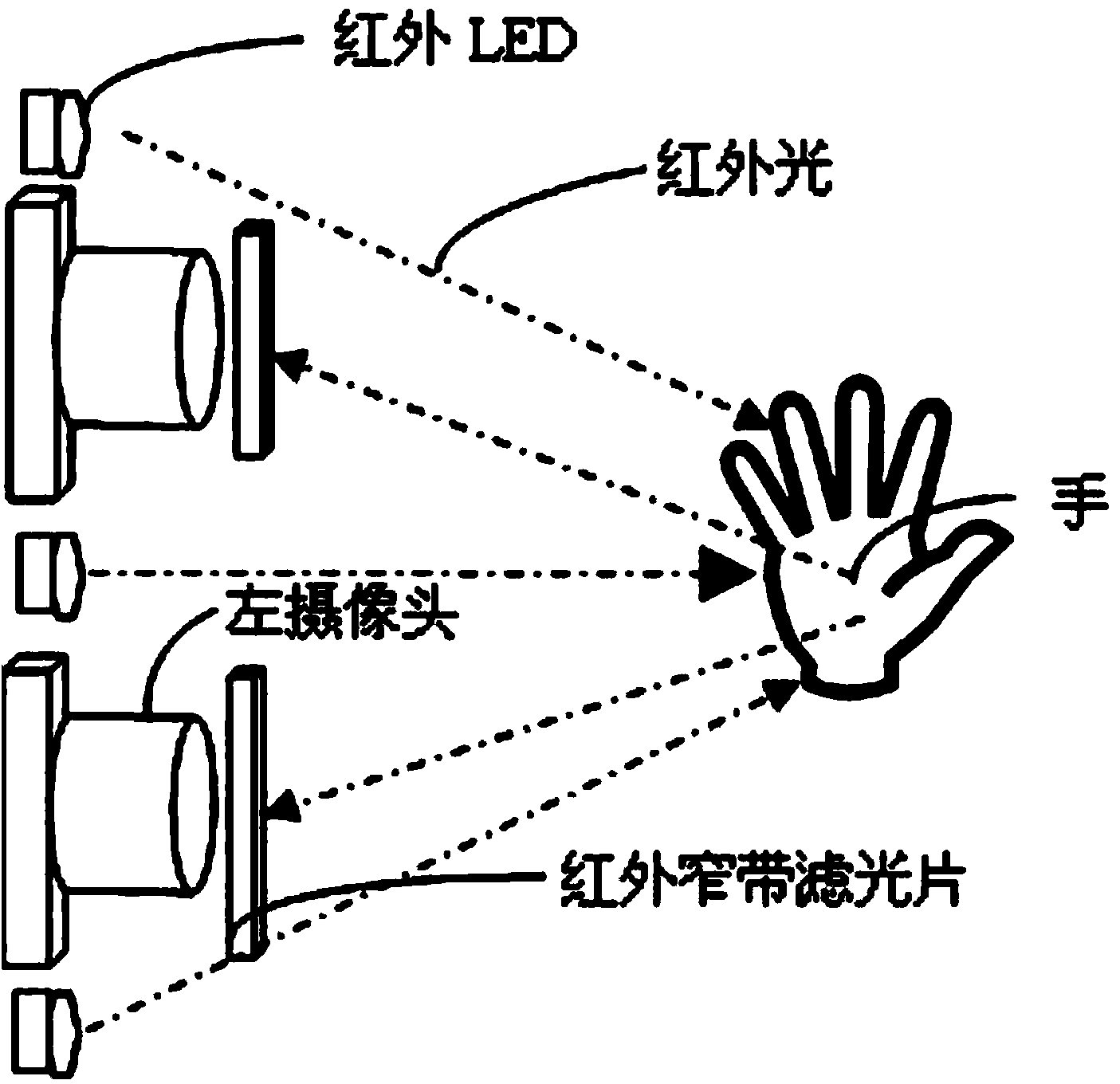

[0083] Step 1: Obtain infrared images through the left camera and the right camera of the real-time gesture recognition device;

[0084] Step 2: The processor converts the infrared image into a grayscale image and separates the gesture area from the background area through adaptive threshold processing, obtaining a binarized image of the gesture target through the adaptive image binarization method (see Figure 4). The white area is the target area, that is, the gesture area; the black area is the separated area, that is, the background area, which is updated periodically over time;

[0085] Step 3: Using the prediction and classification algorithm based on the target boundary, predict the coordinate positions of relevant features (such as edges and skeletons) for each finger in the gesture area, and separate and classify the feature data of the different fingers (such as Figure 5 and Figur...
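The thresholding in Step 2 can be illustrated with a minimal sketch. It assumes each camera frame is already available as a 2D list of 8-bit gray levels, that gesture pixels are brighter than the background in the infrared image, and that a threshold `T` has already been chosen (Embodiment 2 describes how the threshold is selected adaptively). The function name `binarize` and the small sample frames are hypothetical and are not taken from the patent.

```python
def binarize(gray, threshold):
    """Map a grayscale image to a binary image.

    Pixels brighter than `threshold` become 255 (white, gesture area);
    all other pixels become 0 (black, background area).
    """
    return [[255 if value > threshold else 0 for value in row] for row in gray]


# Hypothetical 3x4 frames standing in for the left and right camera images.
left = [
    [10, 12, 200, 210],
    [11, 190, 220, 15],
    [9, 10, 12, 14],
]
right = [
    [12, 180, 205, 13],
    [10, 195, 215, 11],
    [8, 9, 11, 12],
]

T = 100  # assumed fixed value, for illustration only

# The same binarization is applied to both camera images.
left_bin = binarize(left, T)
right_bin = binarize(right, T)
```

Both frames go through the same function, which mirrors the statement that the left and right camera data are binarized in the same way.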

Embodiment 2

[0090] Embodiment 2: In step 2, the gesture area is separated from the background area, and the binarized image of the gesture target is obtained through the adaptive image binarization method. The binarization process is the same for the left camera data and the right camera data, so the following process applies to both. The specific process, as shown in Figure 4, is:

[0091] Step 21: Let the image be $f(e_x, e_y)$, the binarized image be $p(e_x, e_y)$, and the threshold be $T$. Calculate the normalized gray-level histogram of the input image, denoted $h(e_i)$.

[0092] Step 22: Calculate the gray mean where i=0,1,...,255;

[0093] Step 23: Calculate the zero-order cumulative moment w(k) and first-order cumulative moment μ(k) of the histogram

[0094]

[0095]

[0096] Step 24: Calculate class separation metrics k=0,1,...,255;

[0097] Step 25: Find The maximum value of , and ...
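Steps 21 to 25 read like the standard Otsu thresholding procedure, although the formulas themselves are not reproduced above. The sketch below is written under that assumption and uses the usual Otsu definitions: zero-order moment w(k) as the running sum of h(i), first-order moment μ(k) as the running sum of i·h(i), and class separation (μ_T·w(k) − μ(k))² / (w(k)·(1 − w(k))), where μ_T is the overall gray mean. The function names and the sample image are hypothetical, and the patent's exact formulas may differ.

```python
def otsu_threshold(gray):
    """Pick a threshold T by maximizing the between-class separation (Otsu)."""
    # Step 21: normalized gray-level histogram h(i), i = 0..255.
    counts = [0] * 256
    total = 0
    for row in gray:
        for value in row:
            counts[value] += 1
            total += 1
    h = [c / total for c in counts]

    # Step 22: overall gray mean.
    mu_total = sum(i * h[i] for i in range(256))

    best_k, best_sep = 0, -1.0
    w = 0.0   # Step 23: zero-order cumulative moment w(k)
    mu = 0.0  # Step 23: first-order cumulative moment mu(k)
    for k in range(256):
        w += h[k]
        mu += k * h[k]
        denom = w * (1.0 - w)
        if denom <= 0.0:
            continue  # one class is empty, separation undefined
        # Step 24: class separation metric for threshold k.
        sep = (mu_total * w - mu) ** 2 / denom
        # Step 25: keep the k with the largest separation.
        if sep > best_sep:
            best_sep, best_k = sep, k
    return best_k


def binarize_otsu(gray):
    """Binarize with the Otsu threshold: white (255) above T, black (0) otherwise."""
    t = otsu_threshold(gray)
    return [[255 if value > t else 0 for value in row] for row in gray]


# Hypothetical two-level image: dark background (10) and bright gesture (200).
sample = [
    [10, 10, 200, 200],
    [10, 200, 10, 200],
]
T = otsu_threshold(sample)
binary = binarize_otsu(sample)
```

Because the threshold is recomputed from each frame's histogram, it adapts to changes in overall brightness without a hand-tuned constant.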

Embodiment 3

[0100] Embodiment 3: The specific steps of the prediction and classification algorithm based on the target boundary, used in step 3, are:

[0101] Step 31: When a suspected gesture target is detected, that is, a white pixel of the binary image, record the starting point $a_{e_i}$ of the gesture target in that row, with coordinates $(xa_{e_i}, ya_{e_i})$;

[0102] Step 32: When the width of the suspected target in k lines, that is, the number of consecutive white pixels, is greater than the threshold p, the detection is treated as a gesture target rather than a noise point, and the end point $b_{e_i}$ of the gesture target in that row is recorded, with coordinates $(xb_{e_i}, yb_{e_i})$, where p = 10.

[0103] Step 33: Obtain the starting edge $(xa_{e_{i-1}}, ya_{e_{i-1}})$ and the terminal edge $(xb_{e_{i-1}}, yb_{e_{i-1}})$, which are points a and b in Figure 5 (points a and b are also the edges of the target in row i), with $ya_{e_{i-1}} = yb_{e_{i-1}}$. From the two points $(xa_{e_{i-1}}, ya_{e_{i-1}})$ and $(xb_{e_{i-1}}, yb_{e_{i-1}})$, find the midpoint coor...
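The row scan in Steps 31 to 33 can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes a binary row is a list in which nonzero means white, it treats every run of consecutive white pixels wider than p as one target segment with start point a and end point b, and it takes the midpoint of a and b on the same row. The function names `find_row_segments` and `segment_midpoint` are hypothetical.

```python
def find_row_segments(row, p=10):
    """Return (xa, xb) column pairs for each white run wider than p pixels.

    Runs of p or fewer white pixels are treated as noise and skipped.
    """
    segments = []
    start = None
    for x, value in enumerate(row):
        if value:  # white pixel: possible gesture target
            if start is None:
                start = x  # Step 31: record starting point a
        elif start is not None:
            if x - start > p:  # Step 32: wide enough, not a noise point
                segments.append((start, x - 1))  # end point b
            start = None
    # Handle a run that reaches the right edge of the image.
    if start is not None and len(row) - start > p:
        segments.append((start, len(row) - 1))
    return segments


def segment_midpoint(y, segment):
    """Step 33: midpoint of points a and b, which share the same row y."""
    xa, xb = segment
    return ((xa + xb) / 2, y)


# Hypothetical row: a 2-pixel noise run, then a 12-pixel target run.
row = [0] * 3 + [255] * 2 + [0] * 4 + [255] * 12 + [0] * 3
segments = find_row_segments(row, p=10)
midpoints = [segment_midpoint(5, s) for s in segments]
```

Returning every qualifying run per row, rather than only the first, is a design choice made here so that several fingers crossing the same row each yield their own segment.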
