Object tracking method based on state fusion of multiple cell blocks

An object tracking technology based on cell-block states, applicable to image data processing, instrumentation, computation, and related fields. It addresses the limited applicability and low computational efficiency of existing methods, and achieves a simple confidence measure, simple computation, and real-time, stable object tracking.

Detailed Description of the Embodiments

[0023] The present invention is further described below with reference to the accompanying drawings. The method can be used in a variety of object tracking scenarios, such as intelligent video analysis, automatic human-computer interaction, traffic video monitoring, driverless vehicles, biological group analysis, and fluid surface velocity measurement.

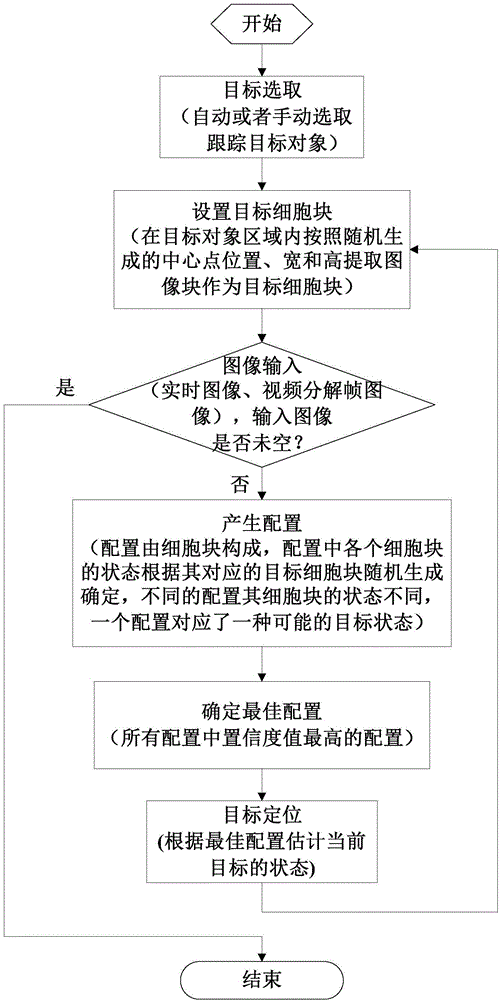

[0024] The technical scheme of the present invention comprises the following steps:

[0025] (1) Target selection

[0026] Select the target object to be tracked from the initial image. The target can be extracted automatically by a moving-target detection method or specified manually through human-computer interaction.
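The text does not say which moving-target detection method is used. As an illustration only, the sketch below uses simple frame differencing (an assumed, swapped-in technique, not the patent's method): pixels whose grayscale intensity changes by more than a hypothetical `threshold` between two frames are treated as moving, and their bounding box becomes the target area. The function name and the `(x, y, width, height)` box convention are also assumptions.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]          # grayscale frame: rows of pixel intensities
Box = Tuple[int, int, int, int]  # (x, y, width, height); assumed convention


def select_target_by_frame_difference(prev: Frame, curr: Frame,
                                      threshold: int = 30) -> Optional[Box]:
    """Hypothetical helper: bounding box of pixels that changed by > threshold.

    Frame differencing is an assumed stand-in for the unspecified
    moving-target detection method. Returns None when nothing moved,
    in which case the target would be specified manually instead.
    """
    xs: List[int] = []
    ys: List[int] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            if abs(c - p) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None  # no motion detected; fall back to manual selection
    x0, y0 = min(xs), min(ys)
    return (x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1)
```

For example, if a bright 3×2 patch appears at columns 1 to 3 and rows 2 to 3 of an otherwise unchanged frame, the function returns `(1, 2, 3, 2)`. Real systems would usually add noise filtering and connected-component analysis before taking a bounding box.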

[0027] (2) Set the target cell block

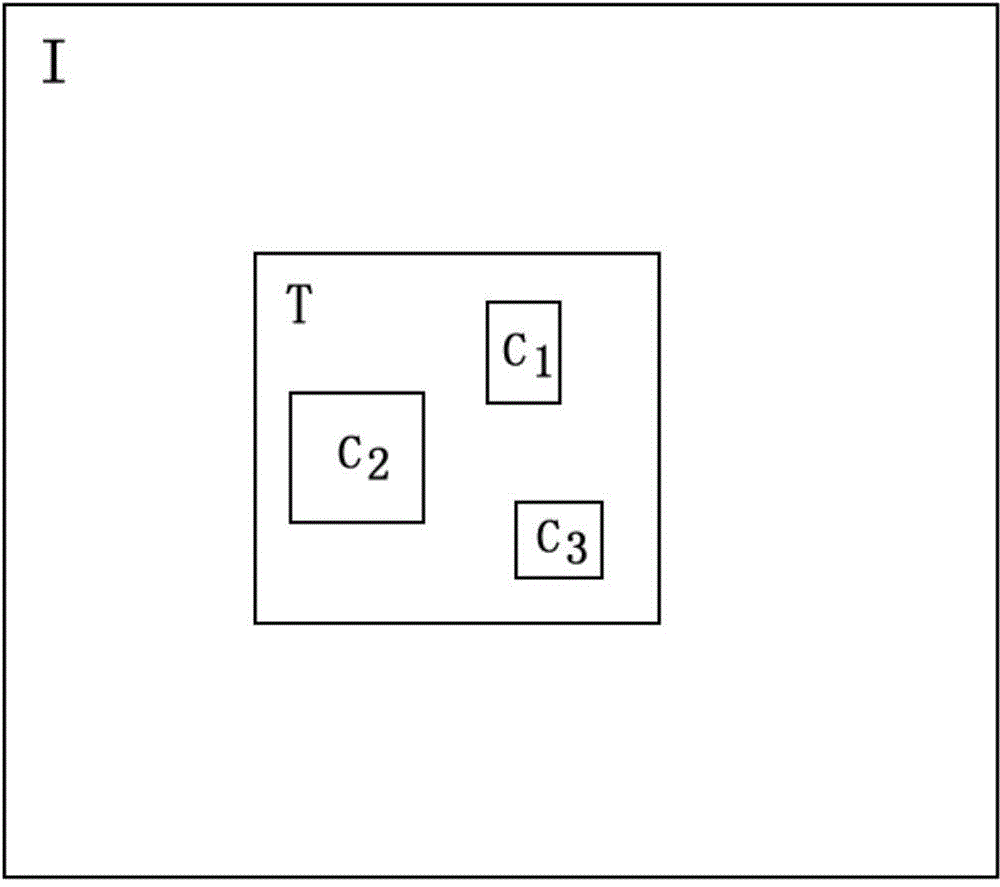

[0028] Within the target object area, extract image blocks as target cell blocks according to randomly generated center positions, widths, and heights. In figure 1, I represents the image, T repres...
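The random generation of cell blocks can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the number of blocks `n_blocks`, the size range `min_frac`/`max_frac` (as fractions of the target width and height), the requirement that each block lie fully inside the target area, and the names `CellBlock`, `generate_cell_blocks`, and `extract_block` are all hypothetical, because the source text is truncated before these details.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # target area T as (x, y, width, height); assumed


@dataclass
class CellBlock:
    cx: int  # center x in image coordinates
    cy: int  # center y in image coordinates
    w: int   # block width
    h: int   # block height

    def bounds(self) -> Tuple[int, int, int, int]:
        """(left, top, right, bottom) with right/bottom exclusive."""
        left = self.cx - self.w // 2
        top = self.cy - self.h // 2
        return left, top, left + self.w, top + self.h


def generate_cell_blocks(target: Box, n_blocks: int, min_frac: float = 0.2,
                         max_frac: float = 0.5,
                         seed: Optional[int] = None) -> List[CellBlock]:
    """Hypothetical: draw random width, height, and center inside the target."""
    x, y, tw, th = target
    rng = random.Random(seed)
    blocks = []
    for _ in range(n_blocks):
        # Random size as a fraction of the target size (assumed range, max_frac <= 1).
        w = max(1, int(tw * rng.uniform(min_frac, max_frac)))
        h = max(1, int(th * rng.uniform(min_frac, max_frac)))
        # Random placement such that the whole block stays inside the target.
        left = rng.randint(x, x + tw - w)
        top = rng.randint(y, y + th - h)
        blocks.append(CellBlock(left + w // 2, top + h // 2, w, h))
    return blocks


def extract_block(image: List[List[int]], block: CellBlock) -> List[List[int]]:
    """Crop the pixels of one cell block from a row-major grayscale image I."""
    left, top, right, bottom = block.bounds()
    return [row[left:right] for row in image[top:bottom]]
```

Storing each block as center plus size mirrors the text's description (center point position, width, and height); the crop helper converts that back to pixel bounds so the block's appearance can be extracted from the image.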
