Dynamic gesture recognition method

A dynamic gesture recognition method, applied in the fields of computer vision and machine learning, that improves recognition accuracy.


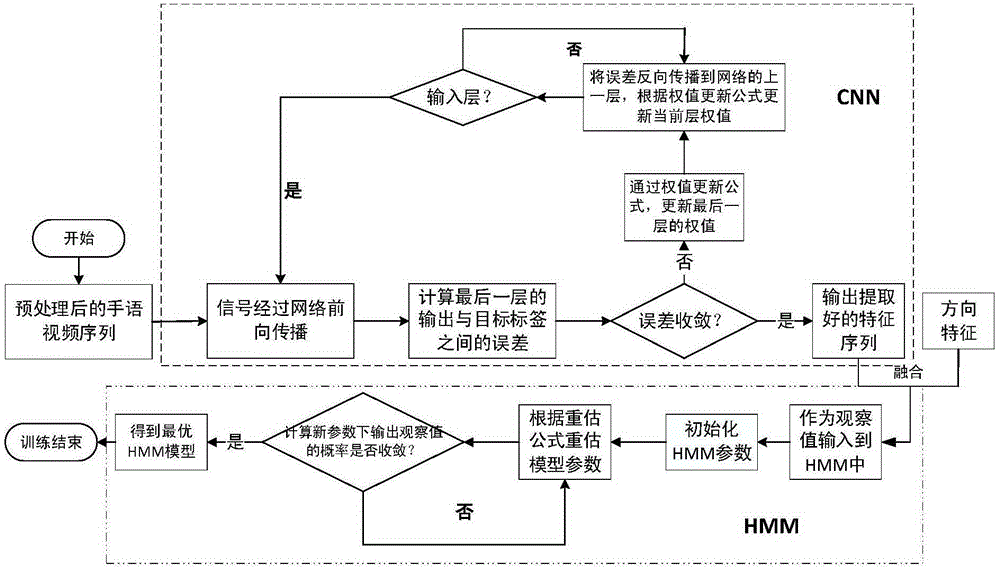

[0010] The dynamic gesture recognition method comprises the following steps:

[0011] (1) Preprocess the dynamic gesture data: expand the data by interval sampling, then use the Canny edge detection operator to compute the edges of each of the three RGB channels of the expanded data and generate a color edge image;

[0012] (2) Extract a gesture feature sequence using a convolutional neural network (CNN) model;

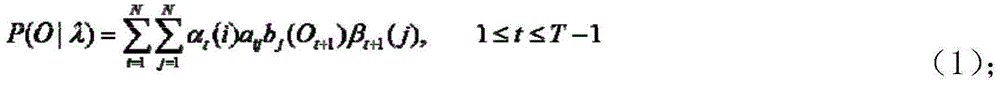

[0013] (3) Using the gesture feature sequence and the hand direction features extracted in step (2), train hidden Markov models (HMMs) to obtain the HMM closest to the gesture sample.
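The preprocessing in step (1) can be sketched in Python as follows. This is a minimal illustration under assumptions the source does not state. Interval sampling is taken to mean splitting one clip into `step` sub-clips that start at successive frame offsets. Frames are nested lists of `(R, G, B)` tuples. The Canny stages (3×3 Gaussian blur, Sobel gradients, non-maximum suppression, double threshold with hysteresis) use illustrative thresholds `low=50` and `high=150`. The three per-channel edge maps are stacked back into one RGB "color edge image". The names `interval_sample`, `canny` and `color_edge_image` are hypothetical.

```python
import math
from collections import deque


def interval_sample(frames, step):
    """Expand one gesture clip into `step` sub-clips by interval sampling.

    Assumed scheme (hypothetical): sub-clip k keeps frames k, k+step, k+2*step, ...
    """
    return [frames[offset::step] for offset in range(step)]


def _blur(ch):
    # 3x3 Gaussian kernel [[1,2,1],[2,4,2],[1,2,1]] / 16, image borders replicated.
    h, w = len(ch), len(ch[0])
    k = (1, 2, 1)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            s = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    s += k[dy + 1] * k[dx + 1] * ch[yy][xx]
            out[y][x] = s / 16.0
    return out


def canny(ch, low=50.0, high=150.0):
    """Minimal Canny detector on one channel; returns a 0/255 edge map."""
    h, w = len(ch), len(ch[0])
    p = _blur(ch)
    mag = [[0.0] * w for _ in range(h)]
    ang = [[0.0] * w for _ in range(h)]
    # Sobel gradients on interior pixels (border gradient stays 0).
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (p[y-1][x+1] + 2*p[y][x+1] + p[y+1][x+1]
                  - p[y-1][x-1] - 2*p[y][x-1] - p[y+1][x-1])
            gy = (p[y+1][x-1] + 2*p[y+1][x] + p[y+1][x+1]
                  - p[y-1][x-1] - 2*p[y-1][x] - p[y-1][x+1])
            mag[y][x] = math.hypot(gx, gy)
            ang[y][x] = math.degrees(math.atan2(gy, gx)) % 180.0
    # Non-maximum suppression along the gradient direction quantized to 0/45/90/135.
    nms = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = ang[y][x]
            if a < 22.5 or a >= 157.5:
                n1, n2 = mag[y][x-1], mag[y][x+1]
            elif a < 67.5:
                n1, n2 = mag[y-1][x-1], mag[y+1][x+1]
            elif a < 112.5:
                n1, n2 = mag[y-1][x], mag[y+1][x]
            else:
                n1, n2 = mag[y-1][x+1], mag[y+1][x-1]
            if mag[y][x] >= n1 and mag[y][x] >= n2:  # ties are kept
                nms[y][x] = mag[y][x]
    # Double threshold + hysteresis: grow from strong pixels into weak ones.
    edges = [[0] * w for _ in range(h)]
    queue = deque()
    for y in range(h):
        for x in range(w):
            if nms[y][x] >= high:
                edges[y][x] = 255
                queue.append((y, x))
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                yy, xx = y + dy, x + dx
                if (0 <= yy < h and 0 <= xx < w and edges[yy][xx] == 0
                        and nms[yy][xx] >= low):
                    edges[yy][xx] = 255
                    queue.append((yy, xx))
    return edges


def color_edge_image(frame, low=50.0, high=150.0):
    """Canny on R, G and B separately; stack the three maps as one RGB image."""
    h, w = len(frame), len(frame[0])
    maps = [canny([[frame[y][x][c] for x in range(w)] for y in range(h)], low, high)
            for c in range(3)]
    return [[(maps[0][y][x], maps[1][y][x], maps[2][y][x]) for x in range(w)]
            for y in range(h)]
```

For example, `interval_sample(list(range(10)), 3)` yields three shorter clips, and each frame of each clip would then be passed through `color_edge_image`. In practice a library routine such as OpenCV's `cv2.Canny`, applied to each channel, would replace the hand-written detector; the pure-Python version only makes each stage visible.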

[0014] The present invention extracts the gesture feature sequence with a convolutional neural network model and then trains hidden Markov models (HMMs) to obtain the HMM closest to the gesture sample, thereby improving the accuracy of dynamic gesture recognition.
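One common way to read "the HMM closest to the gesture sample" is maximum likelihood. Each gesture class has its own HMM, and the sequence is assigned to the class whose model gives it the highest probability. The sketch below computes that probability with the scaled forward algorithm. It assumes the CNN and hand-direction features have already been quantized into integer symbols (here four hypothetical direction codes: 0 = right, 1 = up, 2 = left, 3 = down). It also assumes that model parameters are already trained, for example by Baum-Welch, which is not shown. `log_likelihood`, `classify` and the example models are hypothetical, not the patent's implementation.

```python
import math


def log_likelihood(obs, start, trans, emit):
    """Scaled forward algorithm: log P(obs | HMM) for a discrete-output HMM.

    start[i]    -- initial probability of state i
    trans[i][j] -- probability of moving from state i to state j
    emit[i][k]  -- probability that state i emits symbol k
    """
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    total = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence is impossible under this model
        alpha = [a / scale for a in alpha]  # rescale to avoid underflow
        total += math.log(scale)
    return total


def classify(obs, models):
    """Return the gesture label whose HMM assigns obs the highest log-likelihood."""
    return max(models, key=lambda label: log_likelihood(obs, *models[label]))


# Hypothetical two-state, left-to-right models over 4 direction symbols.
models = {
    "swipe_right": ([1.0, 0.0], [[0.8, 0.2], [0.0, 1.0]],
                    [[0.7, 0.1, 0.1, 0.1], [0.6, 0.2, 0.1, 0.1]]),
    "swipe_left": ([1.0, 0.0], [[0.8, 0.2], [0.0, 1.0]],
                   [[0.1, 0.1, 0.7, 0.1], [0.1, 0.2, 0.6, 0.1]]),
}
print(classify([0, 0, 0, 0], models))  # prints swipe_right
```

Working in log space with per-step rescaling keeps long gesture sequences from underflowing to zero, which would otherwise make every model look equally unlikely.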

[0015] Preferably, the interval sampling met...
