A Real-time Video Stitching Method Based on an Improved SURF Algorithm

A real-time video stitching technique based on an improved SURF algorithm, applied in the field of video stitching, that speeds up stitching and achieves real-time stitching.


Embodiment Construction

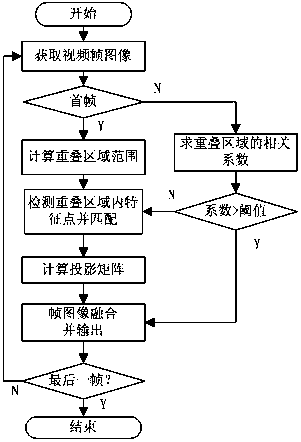

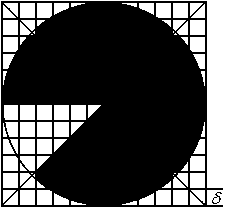

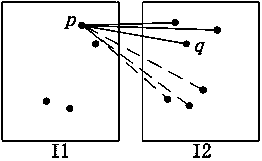

[0036] The invention provides a real-time video stitching method that addresses the strict time requirements of real-time video stitching and some deficiencies of existing video stitching methods. The method first uses the phase correlation coefficient to obtain the overlapping range of the two cameras, so that only the images within the overlapping range are processed. It then improves the SURF algorithm by changing how the feature point descriptor is generated so that the descriptor is reduced. The improved SURF algorithm is used to extract features in the overlapping area of the video frames. A block-matching feature registration method registers the video frames and yields the transformation matrix. Finally, the projection matrix is updated in real time according to the correlation coefficient between adjacent frames, which completes the stitching of the videos. In order to facilitate the public's understanding, the tec...
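The first step, estimating the overlapping range from phase correlation, can be illustrated with a minimal sketch. It is not the patent's implementation: it assumes the two camera frames differ by a pure translation, uses a naive DFT in place of an FFT so that it needs only the standard library, and the names `phase_correlation_shift` and `overlap_columns` are hypothetical.

```python
import cmath
import math


def dft(seq, inverse=False):
    """Naive O(n^2) discrete Fourier transform of a 1-D sequence."""
    n = len(seq)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        s = sum(x * cmath.exp(sign * 2j * math.pi * k * t / n)
                for t, x in enumerate(seq))
        out.append(s / n if inverse else s)
    return out


def dft2(img, inverse=False):
    """2-D DFT computed separably: transform rows first, then columns."""
    rows = [dft(r, inverse) for r in img]
    cols = [dft(list(c), inverse) for c in zip(*rows)]
    return [list(r) for r in zip(*cols)]


def phase_correlation_shift(a, b):
    """Estimate (dy, dx) such that b is approximately a shifted by (dy, dx).

    a and b are equally sized 2-D lists of grey values.
    """
    fa, fb = dft2(a), dft2(b)
    cross = []
    for ra, rb in zip(fa, fb):
        row = []
        for u, v in zip(ra, rb):
            p = v * u.conjugate()
            mag = abs(p)
            # Keep only the phase of the cross-power spectrum.
            row.append(p / mag if mag > 1e-12 else 0)
        cross.append(row)
    corr = dft2(cross, inverse=True)
    h, w = len(a), len(a[0])
    # The correlation surface peaks at the displacement.
    _, dy, dx = max((corr[y][x].real, y, x)
                    for y in range(h) for x in range(w))
    # Map wrapped indices to signed shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return dy, dx


def overlap_columns(width, dx):
    """Column ranges (start, stop) of the overlap in frame a and frame b,
    assuming a purely horizontal shift dx between the two frames."""
    n = width - abs(dx)
    if dx < 0:
        return (-dx, width), (0, n)
    return (0, n), (dx, width)
```

With a horizontal shift of `dx = -5` between two 16-column frames, `overlap_columns(16, -5)` gives columns 5 to 15 of the first frame and columns 0 to 10 of the second, so later feature extraction can be restricted to those columns. A practical system would use an FFT and windowing rather than the naive transform shown here.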
