Cache Management Method for Distributed Memory Columnar Database

A cache management method for distributed in-memory columnar databases. Applied in the field of cache management for such databases, the method improves query efficiency, saves query time and storage space, and reduces the repeated computation of identical tasks.

Embodiment

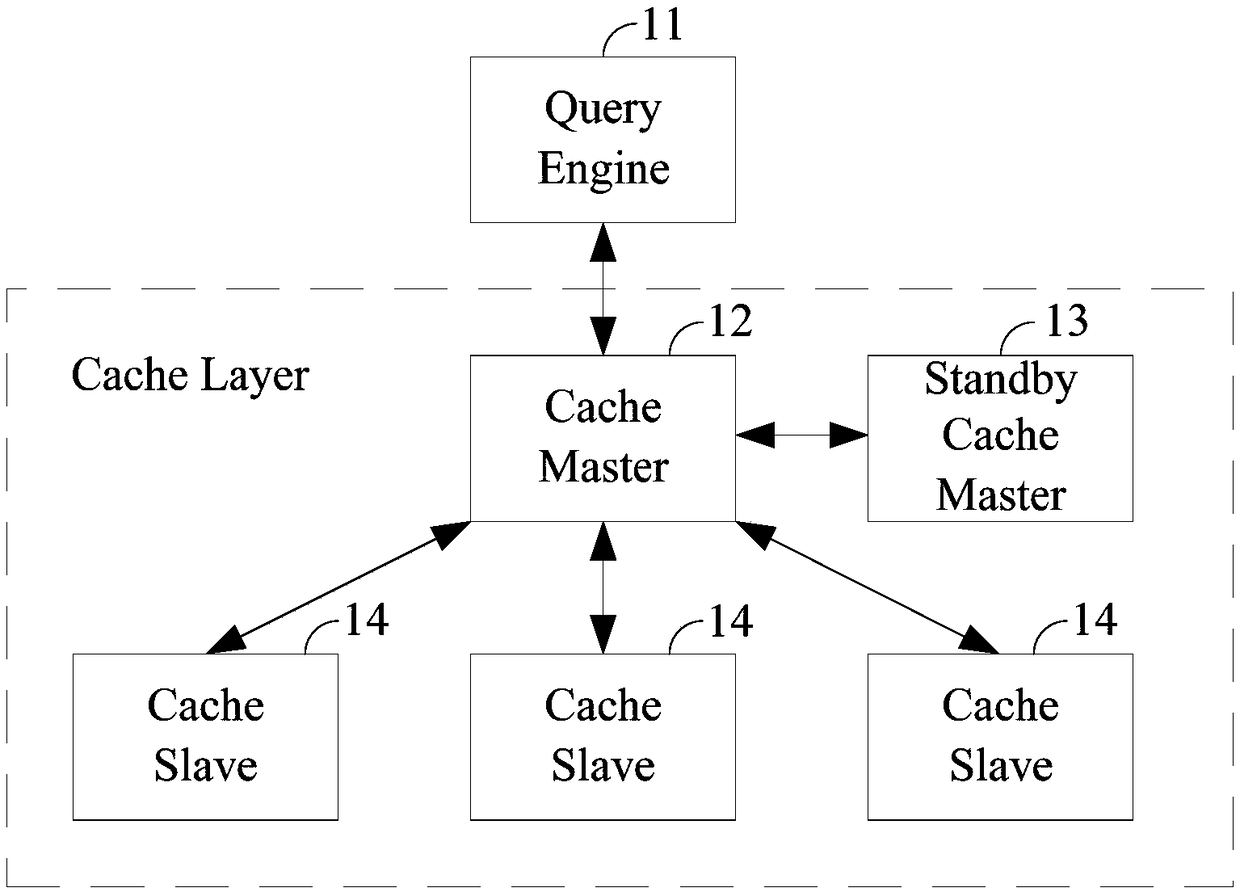

[0030] This embodiment provides a cache management method for a distributed in-memory columnar database. The structure of the cache management system for the distributed in-memory columnar database is shown in figure 1. The system includes a query execution engine, a cache master node, a standby node, and at least one cache slave node.
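The component list above can be written down as a small data structure. This is a minimal sketch only: the class name `CacheSystemTopology`, its field names, and the string node identifiers are hypothetical and do not come from the patent text, which at this point names only the four kinds of components and the "at least one cache slave node" constraint.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CacheSystemTopology:
    """Hypothetical description of the components named in paragraph [0030]."""

    query_execution_engine: str   # identifier of the query execution engine
    cache_master: str             # identifier of the cache master node
    standby: str                  # identifier of the standby node
    cache_slaves: List[str]       # identifiers of the cache slave nodes

    def __post_init__(self) -> None:
        # The text requires at least one cache slave node.
        if len(self.cache_slaves) < 1:
            raise ValueError("at least one cache slave node is required")


# Example with made-up identifiers.
topology = CacheSystemTopology(
    query_execution_engine="engine-0",
    cache_master="master-0",
    standby="standby-0",
    cache_slaves=["slave-0", "slave-1"],
)
```

The sketch records only which components exist. It makes no claim about how the nodes communicate or divide work, since that is not described in this paragraph.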

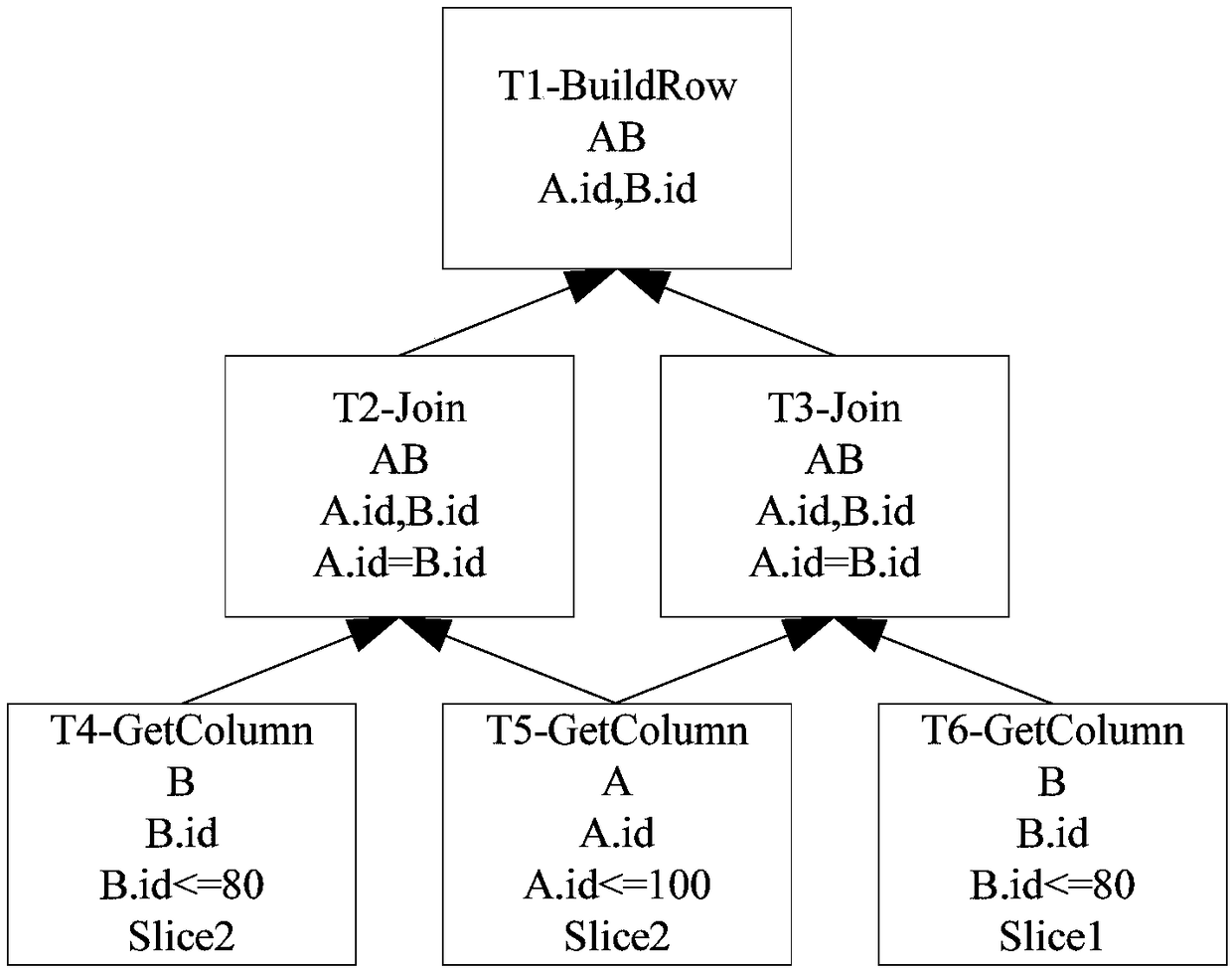

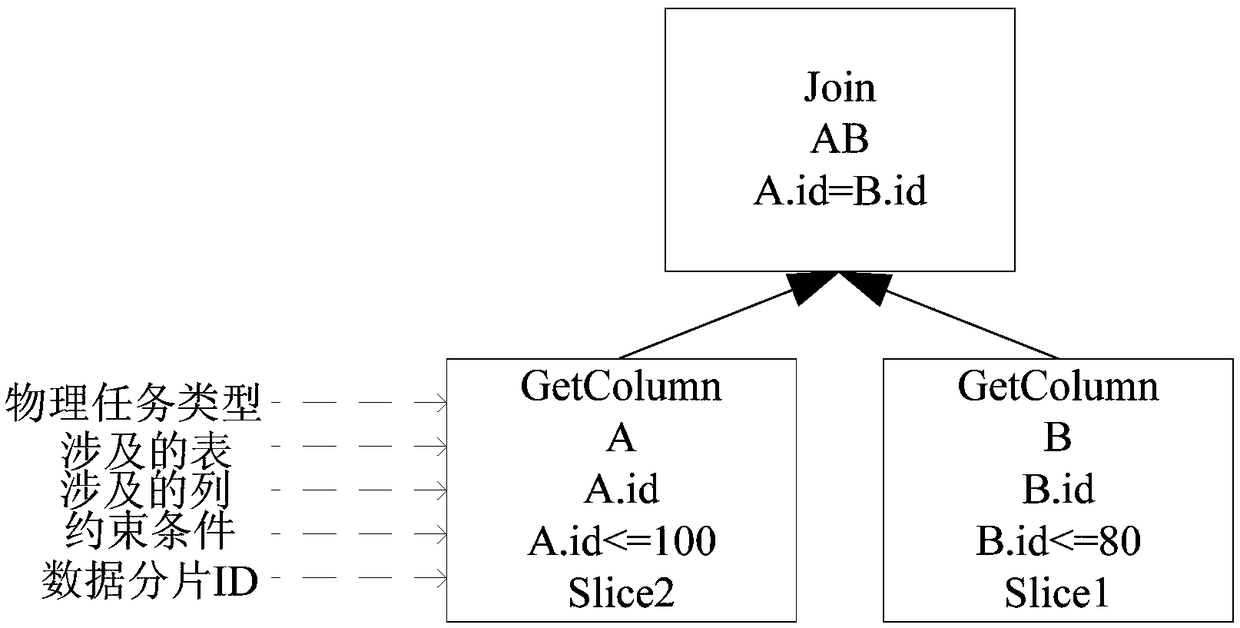

[0031] When a query request arrives, the query execution engine parses the SQL statement into a physical execution plan represented as a directed acyclic graph (DAG). Each node in the physical execution plan represents a physical task; physical task types include GetColumn, Join, Filter, Group, BuildRow, and so on. Each edge represents the transfer of calculation results between two physical tasks. The physical execution plan of a typical query statement (SELECT A.id FROM A,B WHERE A.id=B.id AND A.id…) is shown in figure 1. In the cache management system, the granularity of cached data is the calculation result of a single physical task. When the cach...
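To make the idea of a DAG of physical tasks with per-task result caching concrete, here is a minimal single-process sketch. It is not the patent's implementation. The names `PhysicalTask`, `TaskResultCache`, `signature`, `build_plan`, and `compute`, the toy `TABLES` data, and the choice of cache key (task type, parameters, and upstream signatures) are all assumptions made for illustration. The plan covers only the `A.id=B.id` join of the example query, because the second predicate is truncated in the text.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Callable, Dict, List, Tuple


class TaskType(Enum):
    # Task types named in the text.
    GET_COLUMN = "GetColumn"
    JOIN = "Join"
    FILTER = "Filter"
    GROUP = "Group"
    BUILD_ROW = "BuildRow"


@dataclass
class PhysicalTask:
    task_type: TaskType
    params: str  # hypothetical: textual form of the task's arguments
    inputs: List["PhysicalTask"] = field(default_factory=list)  # incoming DAG edges

    def signature(self) -> Tuple:
        # Assumed cache key: identical tasks over identical inputs share a key.
        return (
            self.task_type.value,
            self.params,
            tuple(t.signature() for t in self.inputs),
        )


class TaskResultCache:
    """Caches the result of each single physical task (the stated granularity)."""

    def __init__(self) -> None:
        self._store: Dict[Tuple, Any] = {}
        self.hits = 0
        self.misses = 0

    def execute(self, task: PhysicalTask, compute: Callable) -> Any:
        key = task.signature()
        if key in self._store:
            self.hits += 1
            return self._store[key]  # reuse: the whole upstream subgraph is skipped
        self.misses += 1
        inputs = [self.execute(t, compute) for t in task.inputs]
        result = compute(task, inputs)
        self._store[key] = result
        return result


# Toy column data, invented for the example.
TABLES = {"A.id": [1, 2, 3, 4], "B.id": [2, 4, 6]}


def compute(task: PhysicalTask, inputs: List[Any]) -> Any:
    if task.task_type is TaskType.GET_COLUMN:
        return list(TABLES[task.params])
    if task.task_type is TaskType.JOIN:
        left, right = inputs
        right_values = set(right)
        return [v for v in left if v in right_values]
    if task.task_type is TaskType.BUILD_ROW:
        return [(v,) for v in inputs[0]]
    raise NotImplementedError(task.task_type)


def build_plan() -> PhysicalTask:
    a_id = PhysicalTask(TaskType.GET_COLUMN, "A.id")
    b_id = PhysicalTask(TaskType.GET_COLUMN, "B.id")
    join = PhysicalTask(TaskType.JOIN, "A.id=B.id", [a_id, b_id])
    return PhysicalTask(TaskType.BUILD_ROW, "A.id", [join])


cache = TaskResultCache()
first = cache.execute(build_plan(), compute)   # every task is computed once
second = cache.execute(build_plan(), compute)  # the root result is served from the cache
```

In this sketch, running the same plan a second time hits the cache at the root task, so none of the upstream tasks are recomputed. A plan that shares only a subgraph with an earlier query, for example the same Join under a different downstream task, would reuse just that subgraph's cached result.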
