A Fast Segmentation Method for Moving Objects in Unrestricted Scenes Based on Fully Convolutional Networks

The method applies a fully convolutional network to the fast segmentation of moving targets, with the aim of achieving accurate segmentation and improving analysis accuracy.

Examples

Embodiment 1

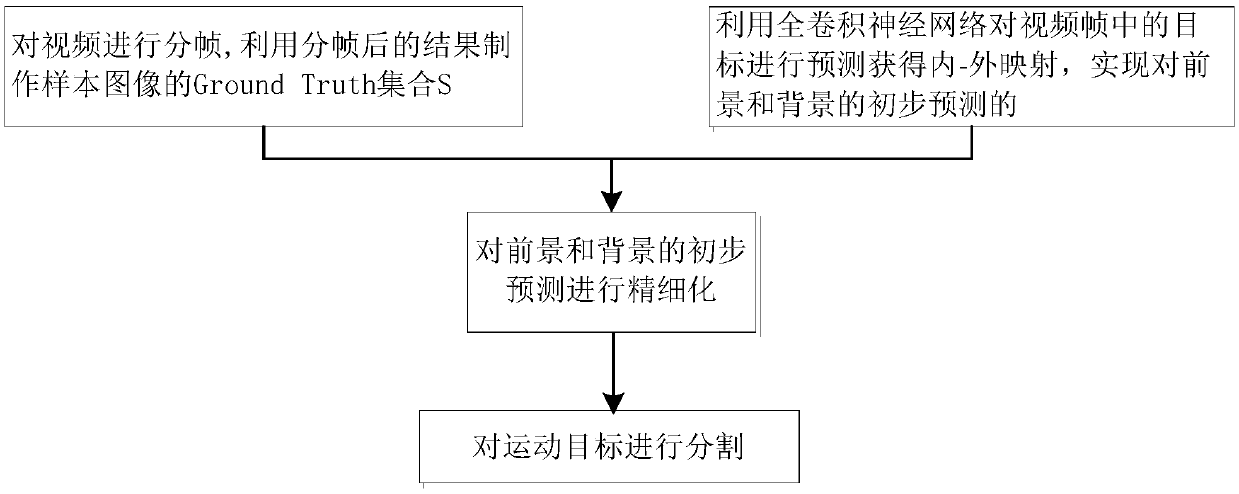

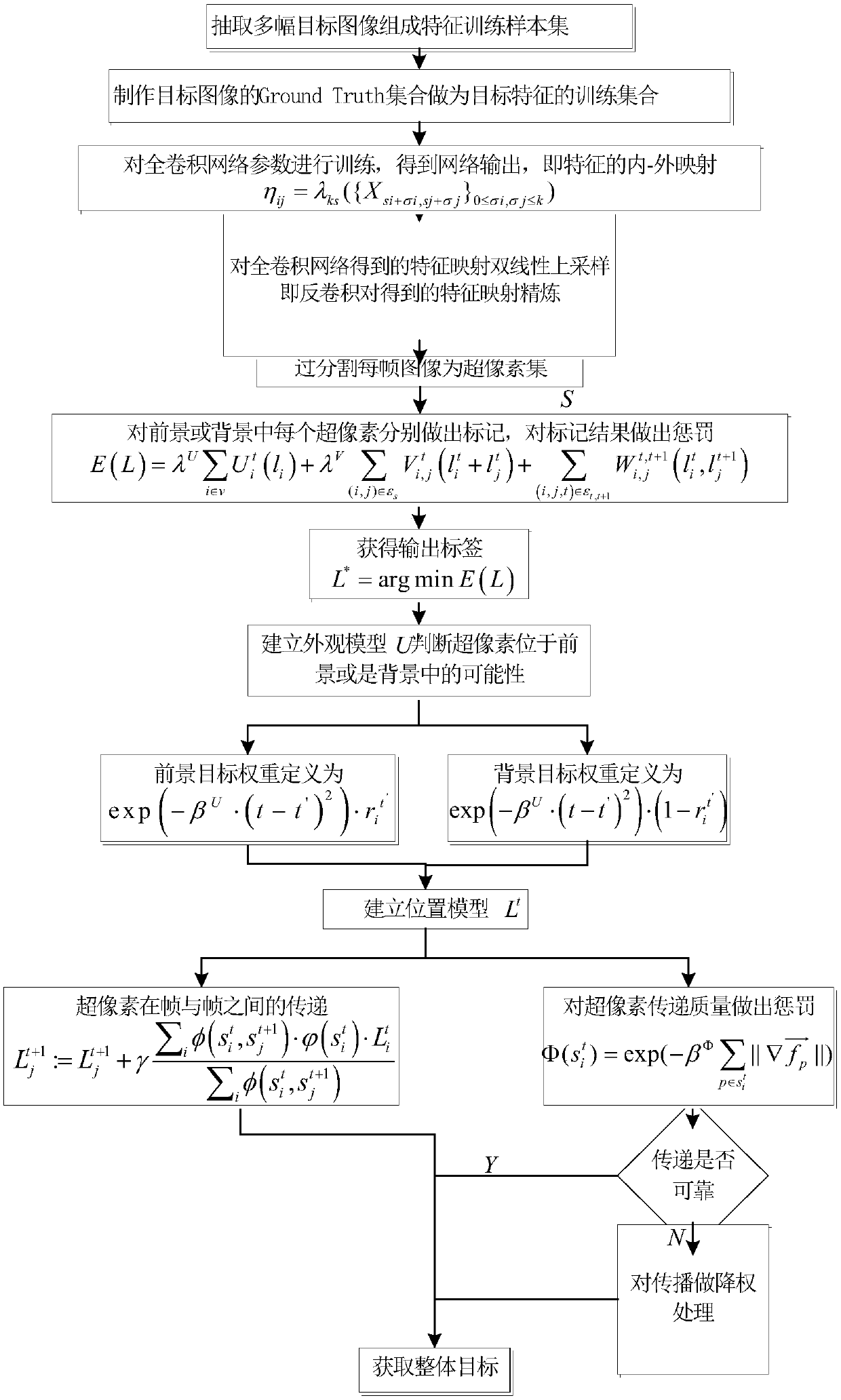

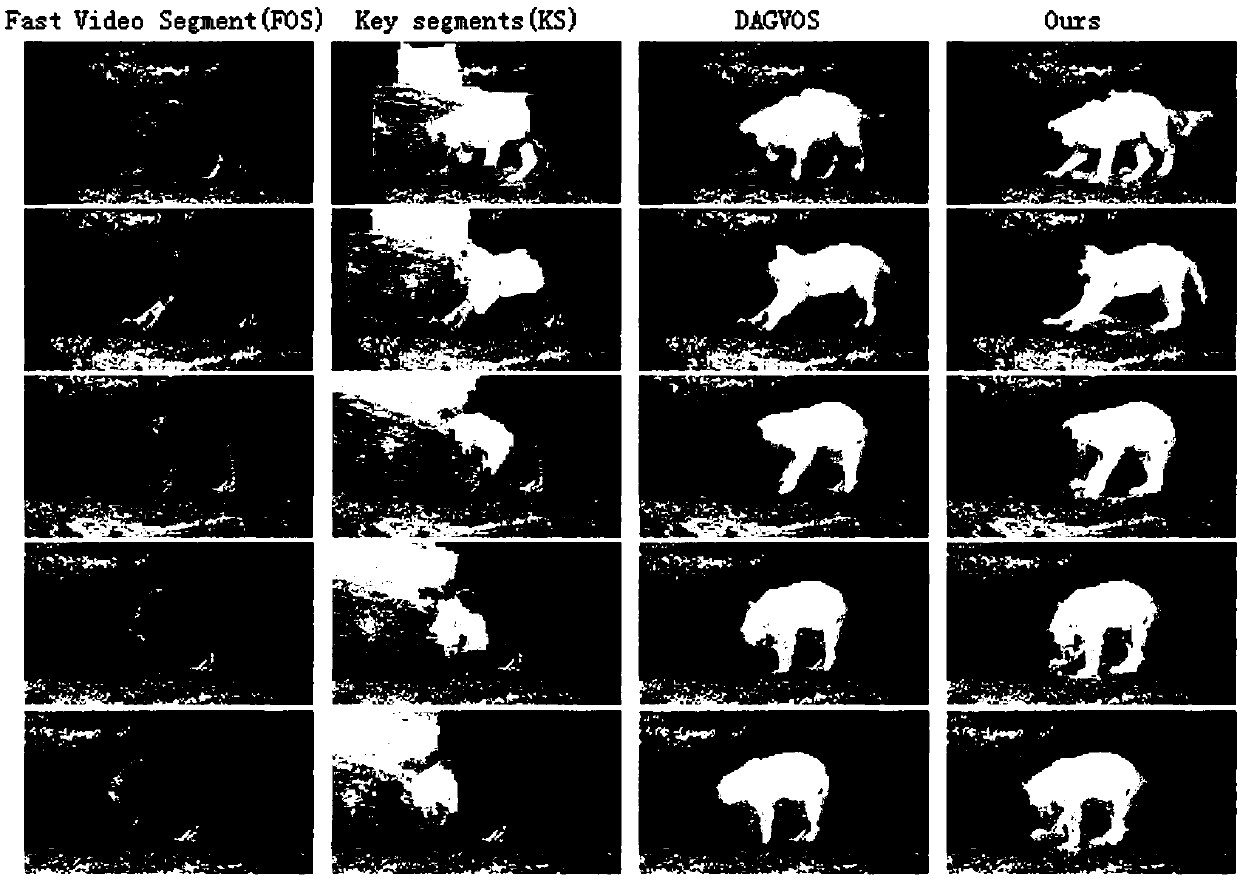

[0031] Embodiment 1: As shown in Figures 1-4, a method for fast segmentation of moving objects in unrestricted scenes based on fully convolutional networks. First, the video is split into frames, and a ground truth set S of sample images is built from the resulting frames. A fully convolutional neural network trained on the PASCAL VOC standard library then predicts the target in each frame of the video, yielding a deep feature estimator of the foreground target in the image; from this, the inside-outside mapping information of the target is obtained for all frames, giving a preliminary prediction of foreground and background in each video frame. Next, the deep feature estimators of the foreground and background are refined by a Markov random field, which realizes the segmentation of foreground moving objects in unrestricted scene videos. Finally, the performance of the method is verified against the ground truth set S.
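The refinement and verification stages described above can be illustrated with a small sketch. It assumes the fully convolutional network has already produced a per-pixel foreground probability map for one frame, and it refines that map with a simple Potts-style Markov random field solved by checkerboard iterated conditional modes (ICM). The source does not state which energy terms or inference procedure are used, so the energy, the ICM solver, the `smoothness` weight, and the function names `neighbour_sum`, `refine_with_mrf`, and `iou` are all assumptions made for illustration. Intersection over union is likewise only one plausible way to compare a result against the ground truth set S.

```python
import numpy as np


def neighbour_sum(grid):
    """Sum each pixel's 4-connected neighbours; pixels outside the image count as 0."""
    padded = np.pad(grid, 1, mode="constant", constant_values=0)
    return (padded[:-2, 1:-1] + padded[2:, 1:-1]
            + padded[1:-1, :-2] + padded[1:-1, 2:])


def refine_with_mrf(fg_prob, smoothness=1.0, n_sweeps=5, eps=1e-6):
    """Refine a 2-D foreground probability map with a Potts MRF (assumed model).

    Unary cost: negative log-probability of each label.
    Pairwise cost: `smoothness` for every 4-neighbour carrying the other label.
    Inference: checkerboard ICM, a simple stand-in for the unspecified solver.
    """
    p = np.clip(np.asarray(fg_prob, dtype=np.float64), eps, 1.0 - eps)
    cost_fg = -np.log(p)          # cost of labelling a pixel foreground
    cost_bg = -np.log(1.0 - p)    # cost of labelling a pixel background
    labels = (p > 0.5).astype(np.int64)  # preliminary prediction
    n_neighbours = neighbour_sum(np.ones_like(labels))
    rows, cols = np.indices(labels.shape)

    for _ in range(n_sweeps):
        # Pixels of one checkerboard colour share no edges, so they can be
        # updated together without invalidating each other's neighbour counts.
        for parity in (0, 1):
            fg_neighbours = neighbour_sum(labels)
            bg_neighbours = n_neighbours - fg_neighbours
            total_fg = cost_fg + smoothness * bg_neighbours
            total_bg = cost_bg + smoothness * fg_neighbours
            update = (rows + cols) % 2 == parity
            labels[update] = (total_fg < total_bg)[update].astype(np.int64)
    return labels.astype(bool)


def iou(pred, truth):
    """Intersection over union of two boolean masks (1.0 if both are empty)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred, truth).sum() / union)


# Toy frame: a 4x4 foreground square with two deliberately noisy pixels.
truth = np.zeros((8, 8), dtype=bool)
truth[2:6, 2:6] = True
prob = np.where(truth, 0.9, 0.1)
prob[3, 3] = 0.4   # weak response inside the object
prob[0, 0] = 0.6   # spurious response in the background
refined = refine_with_mrf(prob)
print("IoU before refinement:", iou(prob > 0.5, truth))
print("IoU after refinement: ", iou(refined, truth))
```

In a real pipeline the probability map would come from the trained network rather than a synthetic array, and the pairwise term would more plausibly depend on colour or motion similarity between neighbouring pixels; the uniform `smoothness` weight here only demonstrates how a neighbourhood prior removes isolated errors from the preliminary prediction.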

[0032] The concrete steps of the described method...
