Method and device for optimizing neural network

A neural network optimization method, applied in the field of artificial neural networks, that addresses problems such as high resource occupation and high computational complexity in image data processing, and shortens running time.


Examples

Embodiment 1

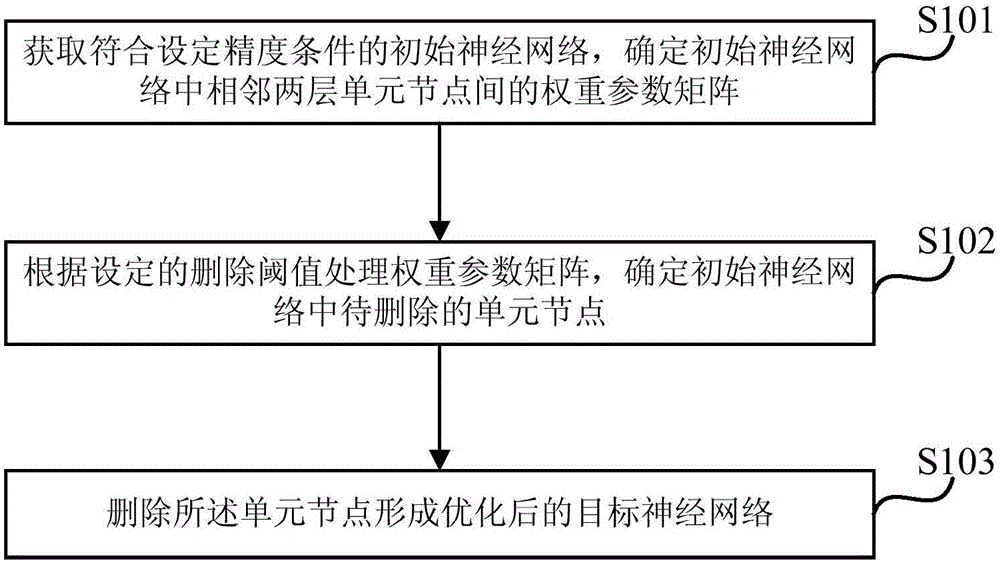

[0023] FIG. 1 is a schematic flowchart of a neural network optimization method provided in Embodiment 1 of the present invention. The method is applicable to compressing and optimizing a neural network after it has been trained, and can be executed by a neural network optimization device. The device can be implemented in software and/or hardware, and is generally integrated on the terminal device or server platform where the neural network model is located.

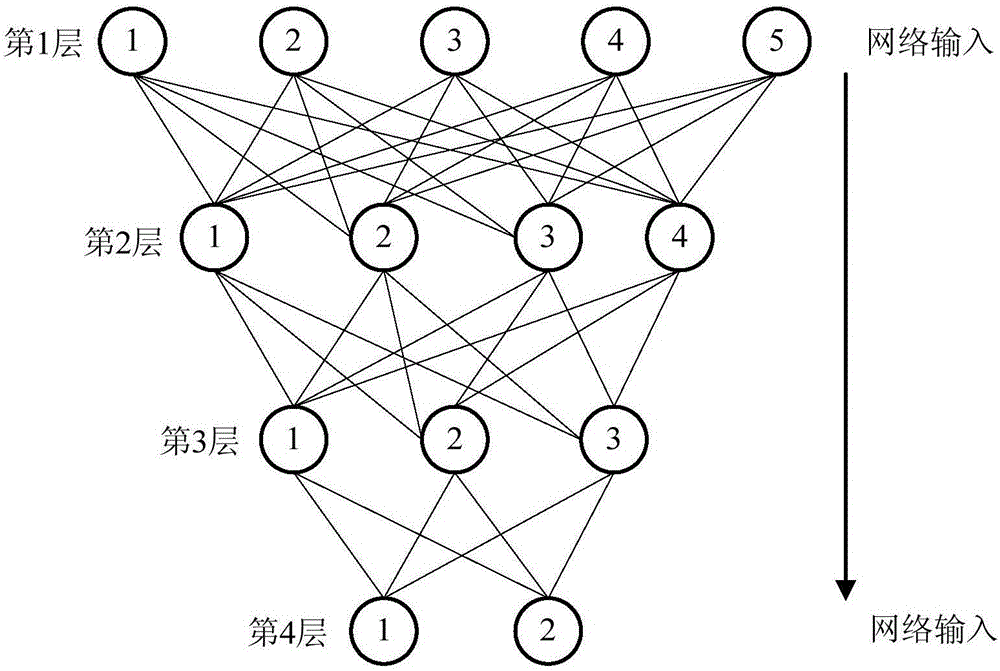

[0024] Generally speaking, a neural network mainly refers to an artificial neural network, which can be regarded as an algorithmic mathematical model that imitates the behavioral characteristics of animal neural networks and performs distributed parallel information processing. The unit nodes in a neural network are divided into at least three layers: an input layer, a hidden layer, and an output layer. The input layer and the output layer both contain only one layer of unit no...

Embodiment 2

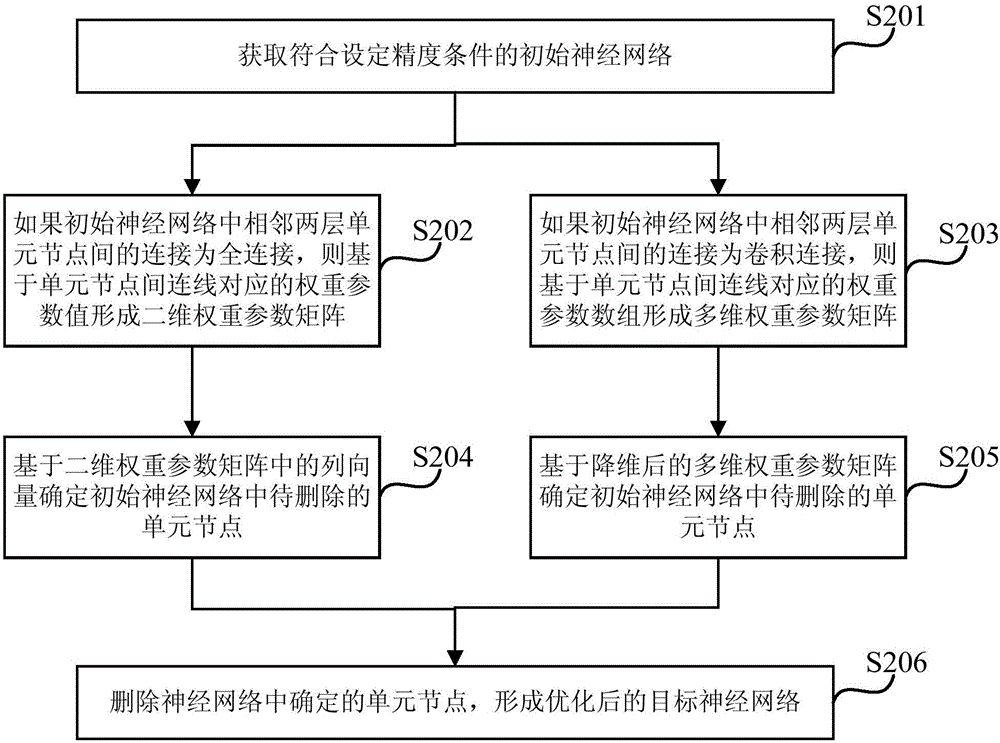

[0038] FIG. 2 is a schematic flowchart of a neural network optimization method provided by Embodiment 2 of the present invention. This embodiment refines the above-mentioned embodiments. Here, the step of determining the weight parameter matrix between two adjacent layers of unit nodes in the initial neural network is further specified as follows: if the unit nodes of two adjacent layers in the initial neural network are fully connected, a two-dimensional weight parameter matrix is formed from the weight parameter values corresponding to the connections between the unit nodes; if the unit nodes of two adjacent layers are connected by convolution, a multi-dimensional weight parameter matrix is formed from the weight parameter arrays corresponding to the connections between the unit nodes.
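The two cases in paragraph [0038] can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the patent's implementation: the function names, the dictionary keyed by `(input node, output node)`, the 3x3 kernel size, and the axis order of the multi-dimensional matrix are all hypothetical choices made for this example.

```python
from typing import Dict, List, Tuple

Connection = Tuple[int, int]  # (input node index, output node index), hypothetical keying


def fully_connected_matrix(
    weights: Dict[Connection, float], n_in: int, n_out: int
) -> List[List[float]]:
    """Fully connected case: one scalar weight per connection,
    arranged into a two-dimensional n_in x n_out matrix."""
    return [[weights[(i, j)] for j in range(n_out)] for i in range(n_in)]


def convolutional_matrix(
    kernels: Dict[Connection, List[List[float]]], n_in: int, n_out: int
) -> List[List[List[List[float]]]]:
    """Convolutional case: one weight array (kernel) per connection,
    arranged into a nested list indexed as
    [input node][output node][kernel row][kernel column] (assumed axis order)."""
    return [[kernels[(i, j)] for j in range(n_out)] for i in range(n_in)]


def shape(nested) -> Tuple[int, ...]:
    """Return the dimensions of a regular, non-empty nested list."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)


# Hypothetical pair of adjacent layers: 3 input nodes, 2 output nodes.
fc_weights = {(i, j): 0.1 * (i + 1) * (j + 1) for i in range(3) for j in range(2)}
fc = fully_connected_matrix(fc_weights, 3, 2)

# Each connection carries a 3x3 weight array instead of a single value.
conv_kernels = {
    (i, j): [[float(i + j)] * 3 for _ in range(3)]
    for i in range(3)
    for j in range(2)
}
conv = convolutional_matrix(conv_kernels, 3, 2)

print(shape(fc))    # two-dimensional
print(shape(conv))  # multi-dimensional (four axes in this sketch)
```

The only difference between the two cases is what each connection carries: a single value yields a two-dimensional matrix, while a weight array per connection adds further axes.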

[0039] Further, the weight parameter matri...

Embodiment 3

[0063] FIG. 3a is a schematic flowchart of a neural network optimization method provided by Embodiment 3 of the present invention. This embodiment refines the above-mentioned embodiments by adding a further step: determine whether the current processing accuracy of the target neural network meets the set accuracy condition, and train or deeply optimize the target neural network based on the determination result.

[0064] On this basis, training or deeply optimizing the target neural network based on the determination result is further specified as follows: if the current processing accuracy does not meet the set accuracy condition, the target neural network is trained until the set accuracy condition is met or the set number of training rounds is reached; otherwise, a self-increment operation is performed on the deletion threshold, and the target neural network is used as a ...
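The branch described in paragraph [0064] can be sketched roughly as follows. This is a hedged illustration, not the patent's procedure: `evaluate` and `train_one_round` are hypothetical callbacks, the accuracy condition is assumed to be "accuracy at or above a target", and the self-increment is assumed to add a fixed `threshold_step`. The step that follows the increment is truncated in the text above, so it is deliberately not modeled.

```python
from typing import Callable, Tuple


def train_or_deepen(
    evaluate: Callable[[], float],
    train_one_round: Callable[[], None],
    accuracy_target: float,
    max_training_rounds: int,
    deletion_threshold: float,
    threshold_step: float,
) -> Tuple[str, float, int]:
    """Return (action taken, deletion threshold afterwards, training rounds used)."""
    if evaluate() >= accuracy_target:
        # Accuracy condition met: self-increment the deletion threshold.
        # What is done with the target network next is elided in the source.
        return "deep_optimize", deletion_threshold + threshold_step, 0

    # Accuracy condition not met: train until it is met
    # or the set number of training rounds is reached.
    rounds = 0
    while rounds < max_training_rounds:
        train_one_round()
        rounds += 1
        if evaluate() >= accuracy_target:
            break
    return "train", deletion_threshold, rounds


# Toy stand-in for a real network: accuracy in percent, +5 points per round.
state = {"accuracy": 80.0}


def evaluate() -> float:
    return state["accuracy"]


def train_one_round() -> None:
    state["accuracy"] += 5.0


first = train_or_deepen(evaluate, train_one_round, 90.0, 10, 0.01, 0.01)
second = train_or_deepen(evaluate, train_one_round, 90.0, 10, 0.01, 0.01)
print(first)
print(second)
```

In this toy run the first call takes the training branch because the accuracy starts below the target, and the second call takes the threshold-increment branch because the target has been reached.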

