Panoramic video splicing method

A panoramic video technology in the field of panoramic video stitching. It addresses the high mismatch rate and low matching accuracy of feature points, and improves stitching efficiency.

Examples

Embodiment 1

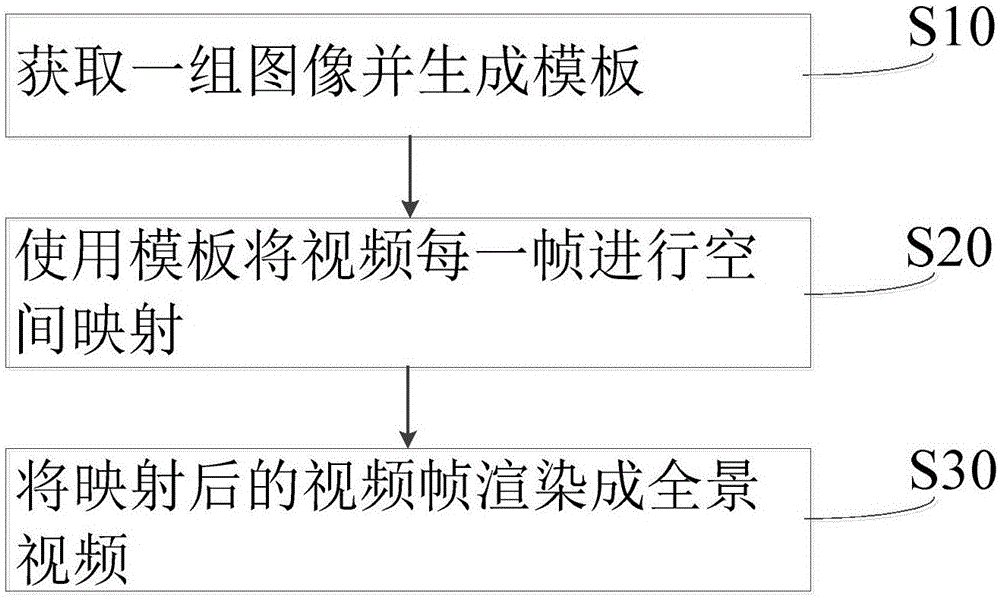

[0041] Figure 1 is a flow chart of the steps of the panoramic video stitching method in a specific embodiment of the present invention. The method comprises the following steps:

[0042] S10, acquiring a set of images and generating a template;

[0043] S20, using the template to spatially map each frame of the video;

[0044] S30, rendering the mapped video frames into a panoramic video.

[0045] Through the above steps, a template is generated from a group of images to be stitched. The template is a set of parameters: the mapping parameters that map the circular fisheye images to the final equirectangular (equidistant cylindrical) image, i.e. the panoramic image. Using the template, each frame of the video is spatially mapped and then rendered into a panoramic video. Because the generated template can be applied directly to the video images subsequently captured by the panoramic camera, the mapping parameters do not have to be re-estimated for every frame, which greatly improves stitching efficiency.
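The template idea in [0045] can be illustrated with a minimal NumPy sketch. It assumes the template is stored as a per-pixel lookup table (for each panorama pixel, the source row and column to sample), uses nearest-neighbour sampling, and for brevity reads from a single source image rather than several fisheye images. The functions `build_template`, `apply_template` and `stitch_video` and the `mapping_fn` argument are hypothetical names; in practice the mapping would come from steps such as S101 to S103, not from the placeholder mirror used below.

```python
import numpy as np

def build_template(mapping_fn, pano_h, pano_w, src_shape):
    """Evaluate a (hypothetical) pixel mapping once and store it as a lookup
    table: for every panorama pixel, the source row/column to sample."""
    ys, xs = np.mgrid[0:pano_h, 0:pano_w]      # panorama pixel grid
    src_y, src_x = mapping_fn(ys, xs)          # source coordinates
    src_h, src_w = src_shape
    # Nearest-neighbour rounding, clamped to the source image bounds.
    map_y = np.clip(np.rint(src_y), 0, src_h - 1).astype(np.intp)
    map_x = np.clip(np.rint(src_x), 0, src_w - 1).astype(np.intp)
    return map_y, map_x

def apply_template(frame, template):
    """S20: spatially map one frame with the precomputed lookup table."""
    map_y, map_x = template
    return frame[map_y, map_x]                 # works for grey or colour frames

def stitch_video(frames, template):
    """Map every frame with the same template; nothing is re-estimated."""
    for frame in frames:
        yield apply_template(frame, template)

# Usage with a placeholder mapping (a horizontal mirror of a 3x4 source).
frames = [np.arange(12).reshape(3, 4) + 100 * k for k in range(3)]
template = build_template(lambda y, x: (y, 3 - x), 3, 4, src_shape=(3, 4))
panoramas = list(stitch_video(frames, template))
```

The expensive part, evaluating `mapping_fn`, runs once in `build_template`; each later frame costs only an array gather, which is the efficiency argument made in [0045].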

Embodiment 2

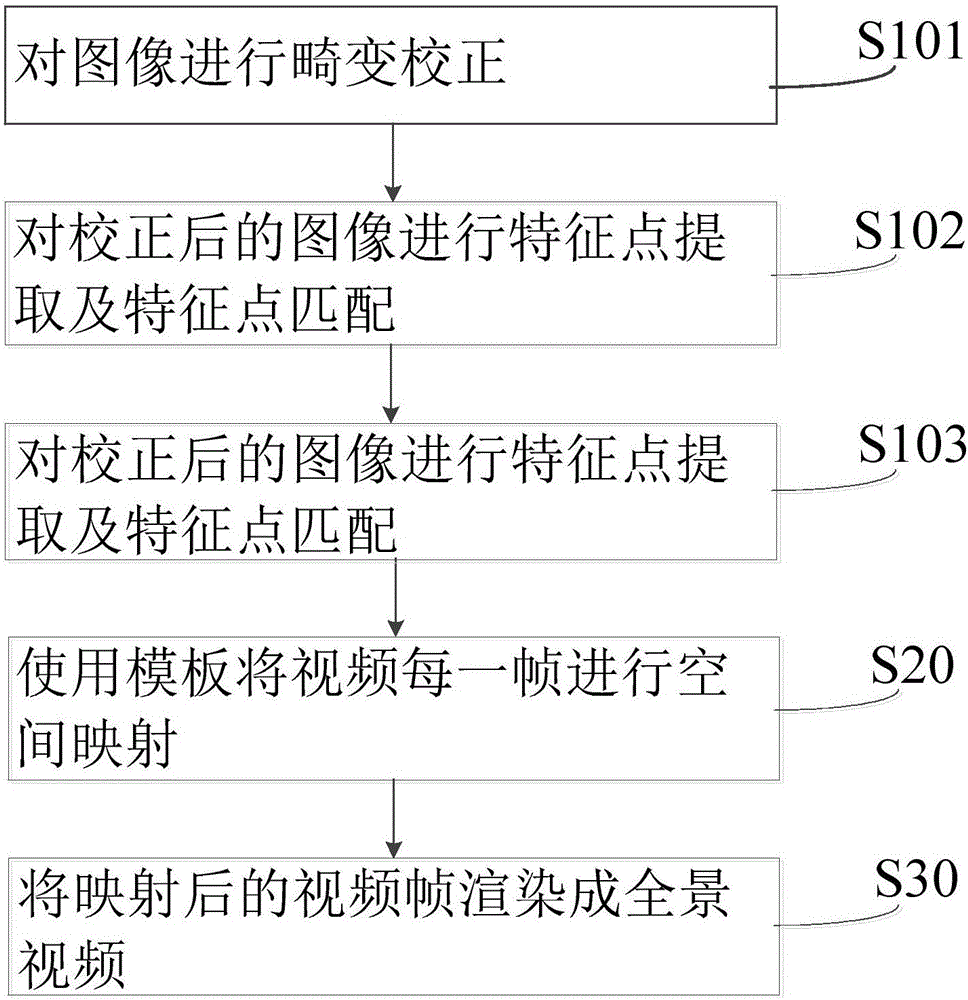

[0047] Figure 2 is a flow chart of the steps of the panoramic video stitching method in a specific embodiment of the present invention. The method comprises the following steps:

[0048] S101, performing distortion correction on the image;

[0049] S102, performing feature point extraction and feature point matching on the corrected image;

[0050] S103, estimating the spatial mapping parameters from the feature point matching results using an optimization algorithm, and saving the mapping parameters as a template;

[0051] S20, using the template to spatially map each frame of the video;

[0052] S30, rendering the mapped video frames into a panoramic video.
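Steps S102 and S103 are named here but not specified: the text does not say which feature detector, matching rule, spatial model or optimization algorithm is used. As a hedged sketch under those gaps, the NumPy code below (a) matches precomputed descriptors by nearest neighbour with a ratio test, a common way to discard ambiguous matches and one plausible response to the mismatch problem the method targets, and (b) fits a 2x3 affine transform to the matched points by linear least squares, standing in for the unspecified optimization. The affine model, the ratio of 0.75, the JSON layout and all function names are assumptions rather than the patent's method; a real fisheye rig would more likely estimate lens and camera parameters than a single affine transform.

```python
import json
import numpy as np

def match_features(desc_a, desc_b, ratio=0.75):
    """Nearest-neighbour matching with a ratio test: accept a match only if the
    closest descriptor in desc_b is clearly closer than the second closest."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dist = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dist):
        best, second = np.argsort(row)[:2]     # needs at least 2 candidates
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches

def estimate_affine(pts_a, pts_b):
    """Linear least-squares fit of M (2x3) such that pts_b ~ M @ [x, y, 1]^T."""
    A = np.hstack([pts_a, np.ones((len(pts_a), 1))])   # (n, 3) homogeneous
    X, *_ = np.linalg.lstsq(A, pts_b, rcond=None)      # solves A @ X ~ pts_b
    return X.T                                         # (2, 3)

def save_template(M, path):
    """Persist the estimated parameters so later frames can reuse them (S103)."""
    with open(path, "w") as f:
        json.dump({"affine": M.tolist()}, f)

def load_template(path):
    """Read back the parameters written by save_template."""
    with open(path) as f:
        return np.array(json.load(f)["affine"])
```

Filtering matches before the fit matters because plain least squares has no protection against outliers; a robust estimator such as RANSAC is a common addition on top of this.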

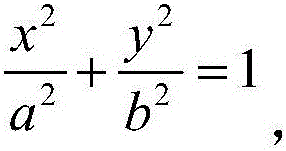

[0053] In the above steps, step S101 corrects the distortion of the image using the latitude-longitude correction method. For a point on a given longitude of the image, the ellipse equation is:

[0054]

[0055] The coordinates after latitude and longitude correction are (x1, y1), and the correction relationship ...
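The ellipse equation in [0054] and the correction relationship in [0055] are missing from this text, so the sketch below does not reproduce them. It assumes the commonly used form of latitude-longitude correction: a circular fisheye image with centre (x0, y0) and radius R, in which the points of one longitude lie on the ellipse (x - x0)^2 / a^2 + (y - y0)^2 / R^2 = 1, and the correction straightens that ellipse into the vertical line x1 = x0 ± a while keeping y1 = y. Solving the ellipse equation for a gives x1 = x0 + (x - x0) * R / sqrt(R^2 - (y - y0)^2). The function names and the nearest-neighbour resampling are illustrative choices.

```python
import numpy as np

def lat_long_correct(x, y, x0, y0, R):
    """Forward map (valid for |y - y0| < R): move a fisheye point on the
    longitude ellipse (x-x0)^2/a^2 + (y-y0)^2/R^2 = 1 to x1 = x0 +/- a, y1 = y."""
    scale = R / np.sqrt(R**2 - (y - y0) ** 2)   # equals a / |x - x0|
    return x0 + (x - x0) * scale, y

def inverse_correct(x1, y1, x0, y0, R):
    """Inverse map used for resampling: the fisheye point that lands at (x1, y1)."""
    half_width = np.sqrt(np.maximum(R**2 - (y1 - y0) ** 2, 0.0))
    return x0 + (x1 - x0) * half_width / R, y1

def correct_image(img, x0, y0, R):
    """Resample a fisheye image into its latitude-longitude corrected form
    (nearest neighbour, output grid the same size as the input)."""
    h, w = img.shape[:2]
    y1, x1 = np.mgrid[0:h, 0:w].astype(float)
    xs, ys = inverse_correct(x1, y1, x0, y0, R)
    xs = np.clip(np.rint(xs), 0, w - 1).astype(np.intp)
    ys = np.clip(np.rint(ys), 0, h - 1).astype(np.intp)
    return img[ys, xs]
```

For example, with centre (10, 10) and R = 5, the point (13, 14) lies on the outermost longitude and is moved to (15, 14), while points on the equator row y = y0 are left unchanged. Because the correction depends only on (x0, y0, R), the index arrays computed inside `correct_image` could be cached and reused for every frame, in the same spirit as the template.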
