Cloud data center energy-saving scheduling implementation method based on rolling grey prediction model

A grey prediction model and cloud data center technology, applied in the field of cloud computing energy-saving scheduling. The method addresses defects in existing virtual machine scheduling and consolidation strategies, with the effects of preserving the cloud service experience, avoiding host overload or no-load (idle) operation, and improving indicators.
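
The title names a rolling grey prediction model, but this excerpt does not give its exact form. As a minimal sketch, assuming the standard GM(1,1) grey model refitted over a sliding window of recent host-utilization samples, the one-step-ahead forecast might look like the following. The function names `gm11_next` and `rolling_forecast`, the window size, and the sample values are hypothetical illustrations, not taken from the patent.

```python
import math


def gm11_next(window):
    """One-step-ahead GM(1,1) forecast from a window of positive samples.

    Assumption: standard GM(1,1) with mean-generated background values;
    the patent's actual model may differ.
    """
    window = list(window)
    n = len(window)
    if n < 4 or min(window) <= 0:
        raise ValueError("this sketch expects at least 4 positive samples")

    # Accumulated generating sequence x1 (running sums of the raw data).
    x1, total = [], 0.0
    for value in window:
        total += value
        x1.append(total)

    # Background values: mean of consecutive accumulated points.
    z = [0.5 * (x1[k] + x1[k - 1]) for k in range(1, n)]
    y = window[1:]
    m = n - 1

    # Least-squares fit of y = -a * z + b (simple linear regression).
    sz, sy = sum(z), sum(y)
    szz = sum(v * v for v in z)
    szy = sum(zi * yi for zi, yi in zip(z, y))
    slope = (m * szy - sz * sy) / (m * szz - sz * sz)
    a, b = -slope, (sy - slope * sz) / m

    if abs(a) < 1e-12:
        # Degenerate case: no growth or decay, so the forecast is the level b.
        return b

    # Time response x1_hat(k) = (x0[0] - b/a) * exp(-a*k) + b/a;
    # differencing it at k = n and k = n - 1 restores the raw-scale forecast.
    c = window[0] - b / a
    return c * (math.exp(-a * n) - math.exp(-a * (n - 1)))


def rolling_forecast(series, size=4):
    """Refit on the latest `size` samples at each step (the rolling part)."""
    return [gm11_next(series[t - size:t]) for t in range(size, len(series) + 1)]


# Hypothetical CPU-utilization samples for one host (fractions of capacity).
load = [0.42, 0.45, 0.47, 0.52, 0.55, 0.61]
forecasts = rolling_forecast(load, size=4)
```

Each entry in `forecasts` is the prediction for the sample that follows its window, so the last entry is the forecast for the next, not yet observed, interval. A scheduler could compare such a forecast against overload and idle thresholds, although how the patent uses the prediction is not stated in this excerpt.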

Embodiment Construction

[0021] To make the technical solutions and advantages of the present invention clearer, a more detailed description is given below with reference to the accompanying drawings; the implementation and scope of protection of the present invention are not limited thereto.

[0022] 1. Strategic Framework

[0023] 1.1 Cloud computing resource intelligent scheduling framework

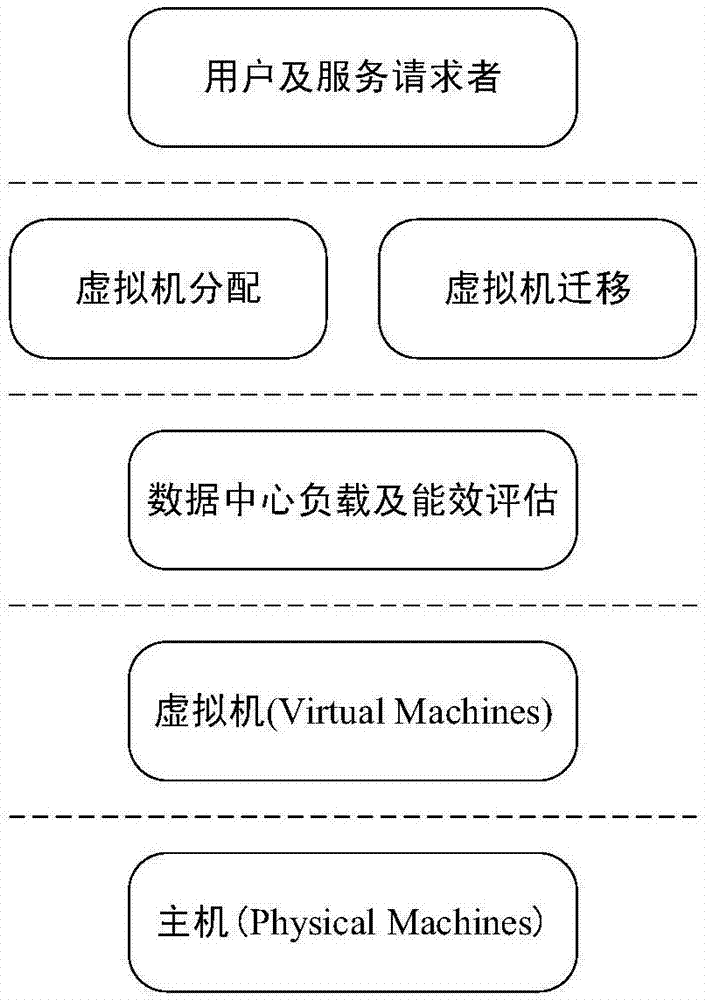

[0024] Figure 1 is the architecture diagram of the cloud computing platform's intelligent resource scheduling framework. From bottom to top, it is divided into the host layer, virtual machine layer, performance evaluation layer, scheduling layer, and user layer. The scheduling layer and the performance evaluation layer are the core of the entire energy-saving strategy framework. Each layer is explained below.

[0025] The host layer comprises all servers in the cloud data center, that is, all physical host nodes. These hardware devices are the underlying infrastructure of the cloud environment and provide us wi...
