Human face shape classification method and system

A face shape classification technology, applied in the field of face shape classification methods and systems, that addresses the low accuracy of existing face shape classification and achieves the effects of overcoming poor robustness and improving accuracy and precision.



Examples

Embodiment 1

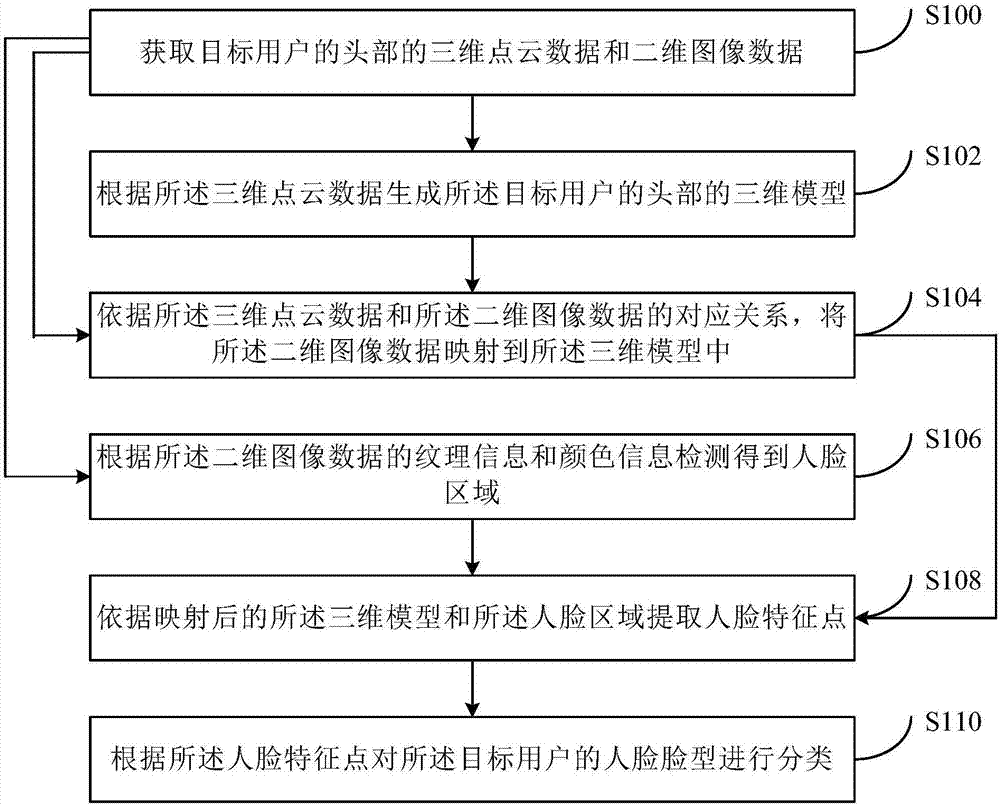

[0030] Figure 1 shows a flow chart of the steps of a face shape classification method according to Embodiment 1 of the present invention.

[0031] Referring to Figure 1, the face shape classification method of this embodiment comprises the following steps:

[0032] Step S100, acquiring 3D point cloud data and 2D image data of the target user's head.

[0033] In this step, point cloud data refers to the set of points on the outer surface of a scanned object, as obtained by a measuring instrument. Three-dimensional point cloud data is such a set obtained by a three-dimensional image acquisition device such as a lidar. In this embodiment, the scanned object is a human head. The 3D point cloud data includes three-dimensional coordinate (XYZ) information.
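As an illustration of the data described in this step, the following is a minimal sketch of a point cloud held as a list of XYZ coordinates. The type alias `Point3D`, the helper functions `bounding_box` and `centroid`, and the sample values in `head_cloud` are hypothetical and are not taken from the patent; a real acquisition device would return many thousands of points.

```python
from typing import List, Tuple

# One surface point of the scanned object, as (X, Y, Z) coordinates.
Point3D = Tuple[float, float, float]


def bounding_box(cloud: List[Point3D]) -> Tuple[Point3D, Point3D]:
    """Return the (min, max) corners of the axis-aligned box enclosing the cloud."""
    if not cloud:
        raise ValueError("point cloud is empty")
    xs, ys, zs = zip(*cloud)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def centroid(cloud: List[Point3D]) -> Point3D:
    """Return the mean position of all points in the cloud."""
    if not cloud:
        raise ValueError("point cloud is empty")
    n = len(cloud)
    xs, ys, zs = zip(*cloud)
    return (sum(xs) / n, sum(ys) / n, sum(zs) / n)


# Hypothetical sample data standing in for a scanned head (units are arbitrary).
head_cloud: List[Point3D] = [
    (0.0, 0.0, 0.0),
    (0.2, 0.0, 0.1),
    (0.0, 0.3, 0.1),
    (0.2, 0.3, 0.0),
]

box_min, box_max = bounding_box(head_cloud)
center = centroid(head_cloud)
```

Simple summaries such as the bounding box and centroid are a common first check that a scan actually covers the expected region before any model is built from it.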

[0034] In this embodiment, the 3D point cloud technology is applied to the field of face shape classification, and the model corresponding to the user's h...

Embodiment 2

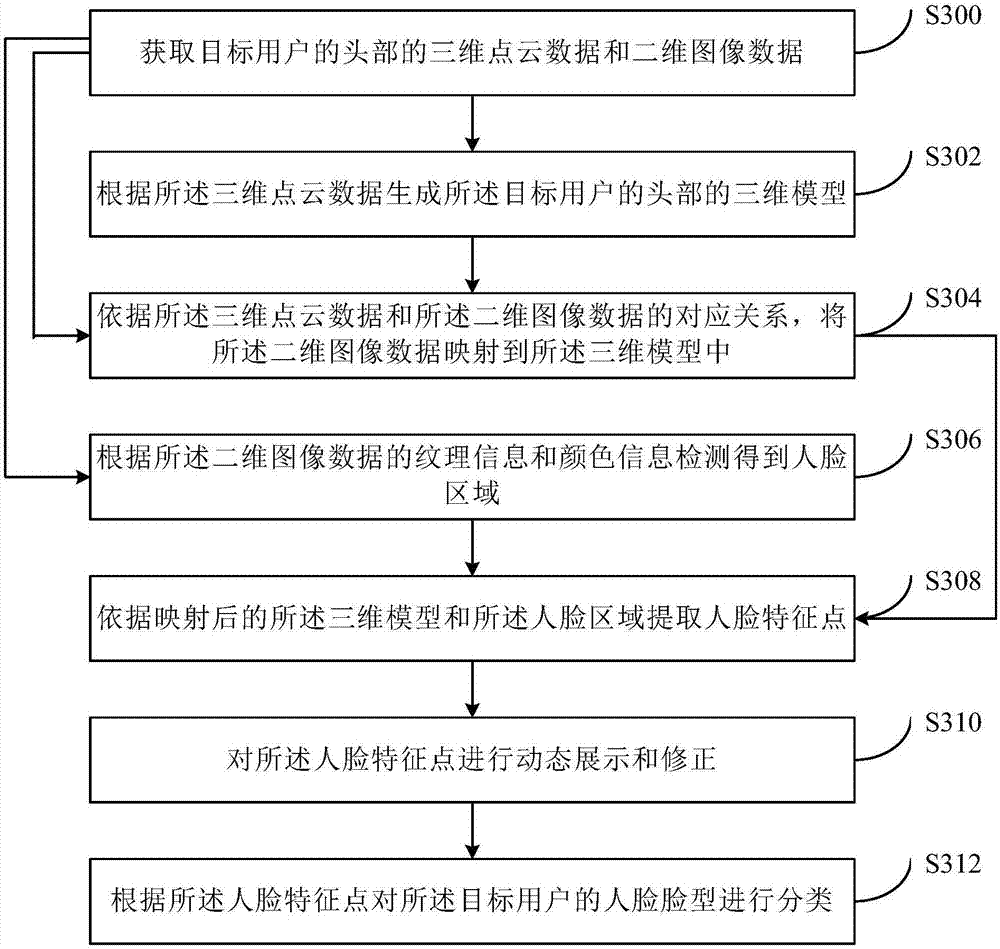

[0049] Figure 3 shows a flow chart of the steps of a face shape classification method according to Embodiment 2 of the present invention.

[0050] Referring to Figure 3, the face shape classification method of this embodiment comprises the following steps:

[0051] Step S300, acquiring 3D point cloud data and 2D image data of the target user's head.

[0052] Specifically, the 3D point cloud data and 2D image data of the target user's head are acquired through an image acquisition device.

[0053] In this embodiment, 3D point cloud data of the target user's head is obtained by scanning from multiple viewpoints. The 3D point cloud data includes multiple frames of data of the target user's head, and each frame includes at least point cloud data of the target user's head. The Hough forest model detection method is used to perform three-dimensional detection on the multi-frame 3D point cloud data, and multiple initial head three-d...

Embodiment 3

[0100] Figure 6 shows a structural block diagram of a face shape classification system according to Embodiment 3 of the present invention.

[0101] The face shape classification system in this embodiment includes: a data acquisition module 600, configured to acquire 3D point cloud data and 2D image data of the target user's head; a model generation module 602, configured to generate a 3D model of the target user's head; a data mapping module 604, configured to map the 2D image data onto the 3D model according to the correspondence between the 3D point cloud data and the 2D image data; a region detection module 606, configured to detect the face region according to the texture information and color information of the 2D image data; a feature point extraction module 608, configured to extract face feature points according to the mapped 3D model and the face region; a face classification module 610, configured to classify the target user's fac...
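The role of the data mapping module 604 can be illustrated with a minimal sketch. It assumes the correspondence between the 3D point cloud data and the 2D image data is already available as a lookup from point index to pixel position; the patent text shown here does not specify how that correspondence is stored, so the function `map_image_to_model`, its parameters, and the sample data are all hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Point3D = Tuple[float, float, float]   # (X, Y, Z) coordinates of a model point
Pixel = Tuple[int, int]                # (row, column) position in the 2D image
Color = Tuple[int, int, int]           # (R, G, B) value of one pixel


def map_image_to_model(
    points: List[Point3D],
    correspondence: Dict[int, Pixel],
    image: List[List[Color]],
) -> List[Tuple[Point3D, Optional[Color]]]:
    """Attach to each 3D point the color of its corresponding 2D pixel.

    Points with no entry in `correspondence` keep a color of None.
    """
    textured = []
    for index, point in enumerate(points):
        pixel = correspondence.get(index)
        if pixel is None:
            textured.append((point, None))
            continue
        row, col = pixel
        textured.append((point, image[row][col]))
    return textured


# Hypothetical 2x2 image and three model points; point 2 has no matching pixel.
sample_image: List[List[Color]] = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
sample_points: List[Point3D] = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0)]
sample_correspondence: Dict[int, Pixel] = {0: (0, 0), 1: (1, 1)}

textured_model = map_image_to_model(sample_points, sample_correspondence, sample_image)
```

Keeping unmatched points with a `None` color, rather than dropping them, preserves the geometry of the 3D model so that later stages can still use points that were not visible in the 2D image.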
