Kinect-based Darwin robot joint mapping analysis method

A robot joint analysis technology, applied to Kinect-based joint mapping analysis for the Darwin robot

Embodiment

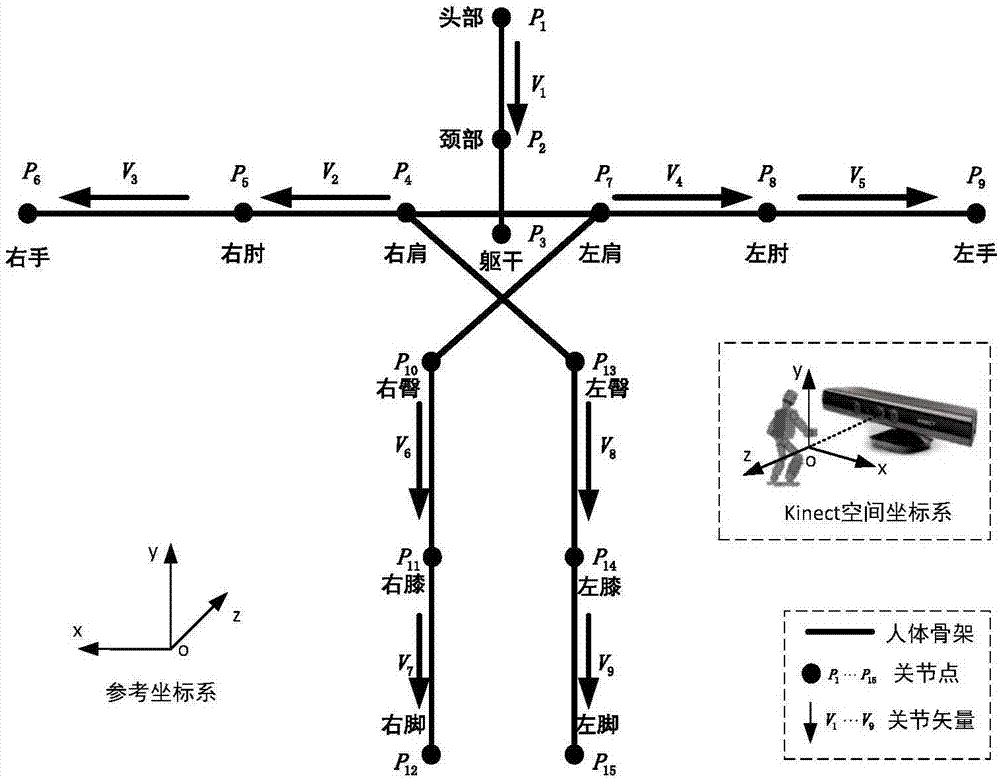

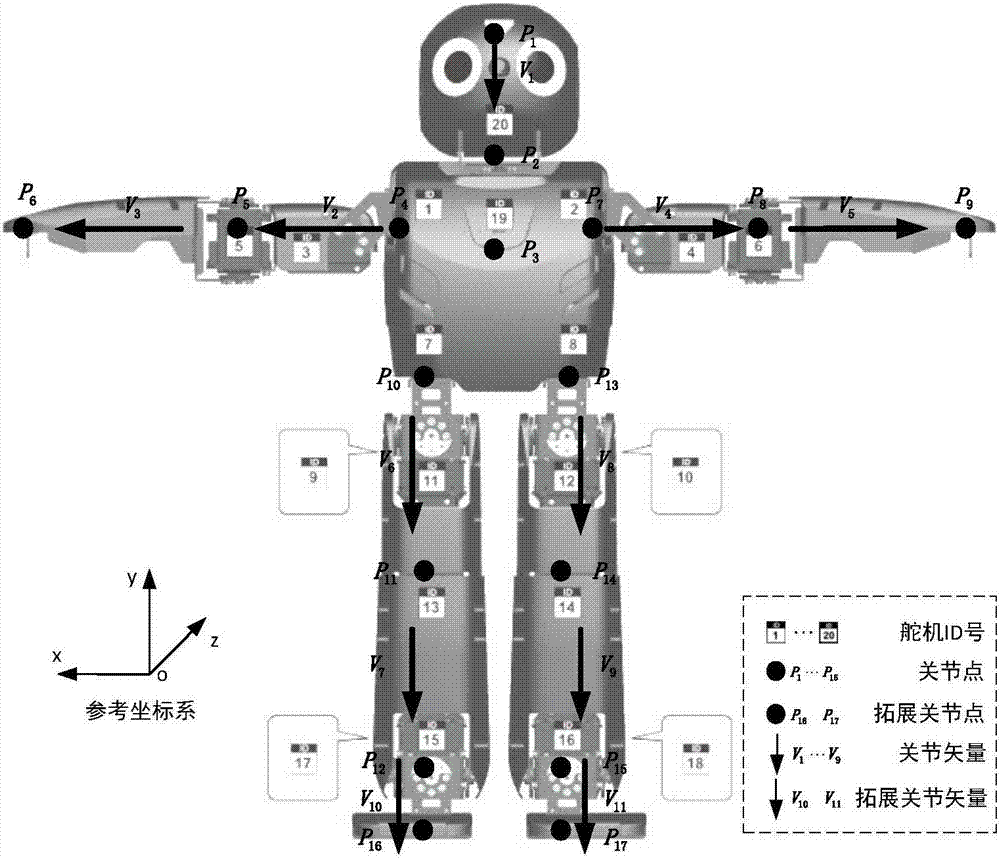

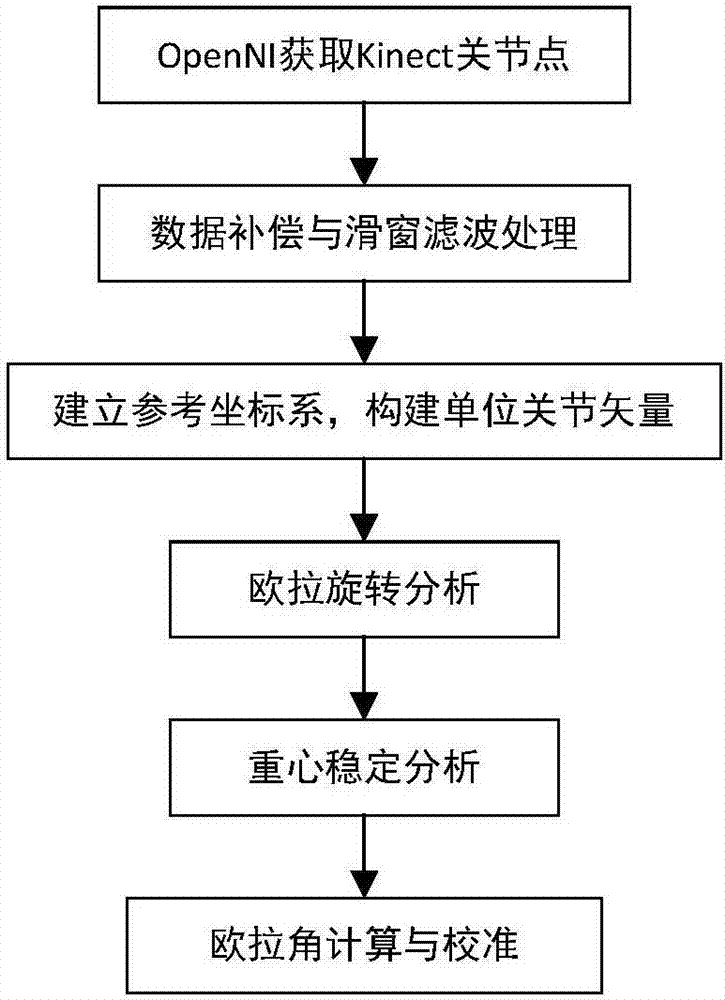

[0075] This embodiment discloses a Kinect-based Darwin robot joint mapping analysis method. The method first uses Kinect to collect human joint skeleton data, then analyzes the joints and maps the Kinect skeleton data onto the joints of the Darwin robot, so that the Darwin robot imitates human actions as closely as possible. The method specifically includes the following steps:

[0076] S1: use Kinect to collect the skeleton data of the human body's joint points P;

[0077] In this step, OpenNI can be used to read the 24 joint points reported by Kinect: head, neck, torso, waist, left collar, left shoulder, left elbow, left wrist, left hand, left fingertip, right collar, right shoulder, right elbow, right wrist, right hand, right fingertip, left hip, left knee, left ankle, left foot, right hip, right knee, right ankle, and right foot. In practical applications only 15 of these joints carry valid data: head, neck, torso, left shoulder, ...
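As a minimal sketch of how the joint set in step S1 might be represented, the Python below enumerates the 24 joint names in the order listed above and filters a frame down to a chosen subset. It does not call OpenNI: the `Joint` enum values, the `keep_valid` helper, and the dummy `frame` are hypothetical and serve only as illustration. The valid set contains only the four joints named before the text is cut off; the rest of the 15-joint set is not given here and is left out.

```python
from enum import IntEnum

# The 24 joint names in the order the text lists them.
# The numeric values (1..24) are illustrative, not read from OpenNI headers.
Joint = IntEnum("Joint", [
    "HEAD", "NECK", "TORSO", "WAIST",
    "LEFT_COLLAR", "LEFT_SHOULDER", "LEFT_ELBOW",
    "LEFT_WRIST", "LEFT_HAND", "LEFT_FINGERTIP",
    "RIGHT_COLLAR", "RIGHT_SHOULDER", "RIGHT_ELBOW",
    "RIGHT_WRIST", "RIGHT_HAND", "RIGHT_FINGERTIP",
    "LEFT_HIP", "LEFT_KNEE", "LEFT_ANKLE", "LEFT_FOOT",
    "RIGHT_HIP", "RIGHT_KNEE", "RIGHT_ANKLE", "RIGHT_FOOT",
])

# Partial set: only the valid joints named in the text before it is truncated.
NAMED_VALID = frozenset(
    {Joint.HEAD, Joint.NECK, Joint.TORSO, Joint.LEFT_SHOULDER}
)


def keep_valid(frame, valid=NAMED_VALID):
    """Return only the entries of `frame` whose joint is in `valid`."""
    return {joint: pos for joint, pos in frame.items() if joint in valid}


# Hypothetical frame: every joint mapped to a dummy (x, y, z) position.
frame = {joint: (0.0, 0.0, float(joint)) for joint in Joint}
valid_frame = keep_valid(frame)
```

Keeping the valid set as a parameter means the full 15-joint list can be supplied by the caller once it is known, without changing the filter itself.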
