Deep learning-based target tracking method, device and storage medium

A deep learning-based target tracking method, device, and storage medium in the field of target tracking. The technology addresses problems such as poor real-time performance, which make existing methods difficult to apply in practice, and achieves high tracking speed, improved target tracking accuracy, and a high degree of target tracking coincidence.

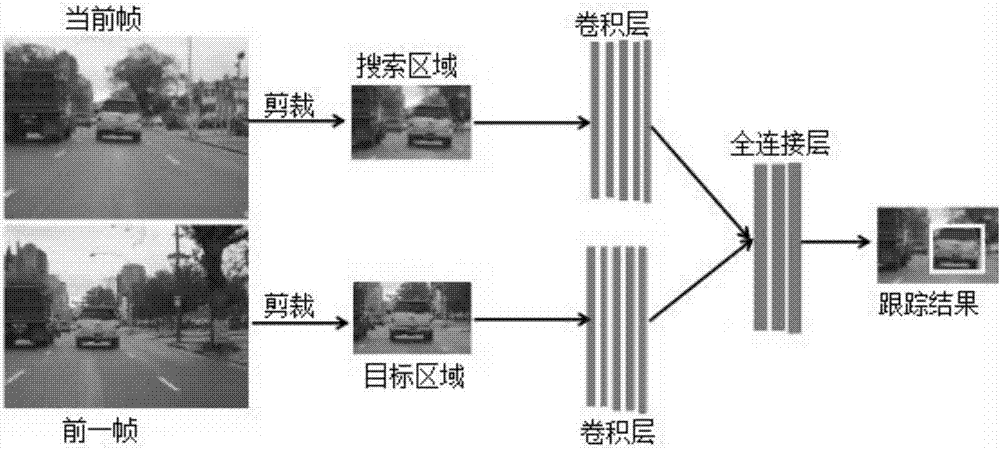

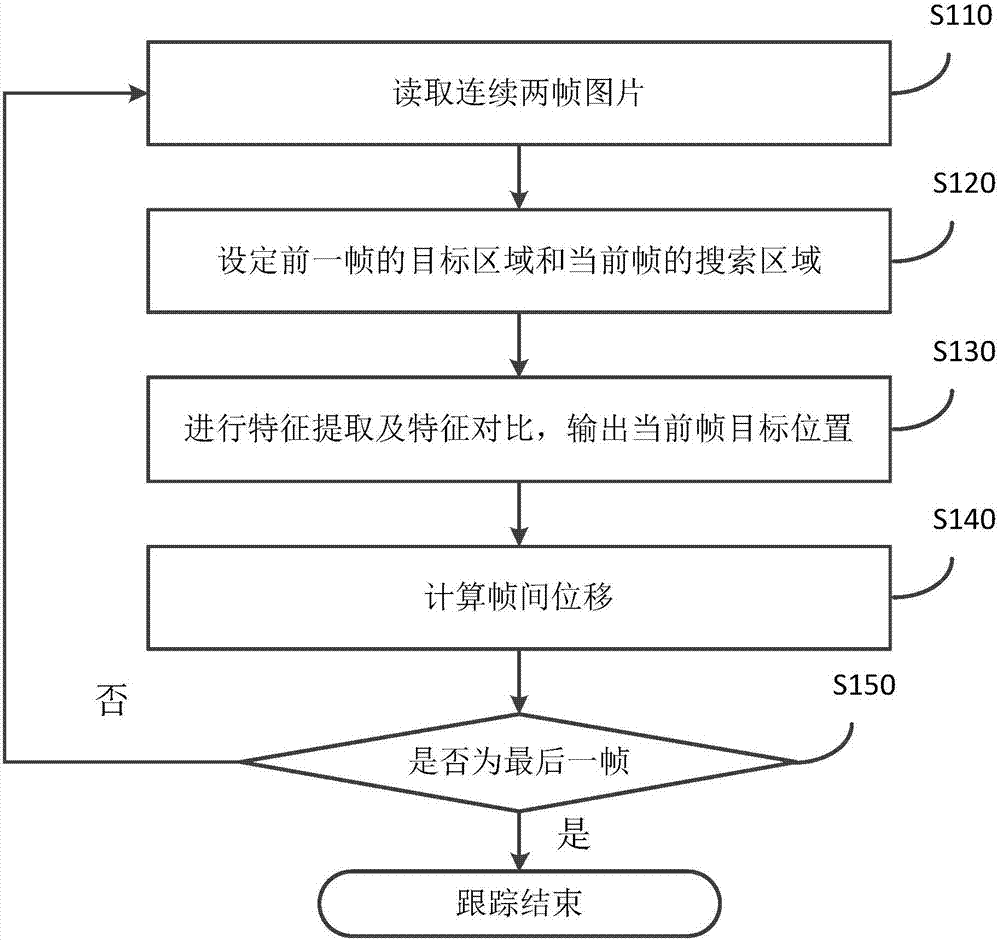

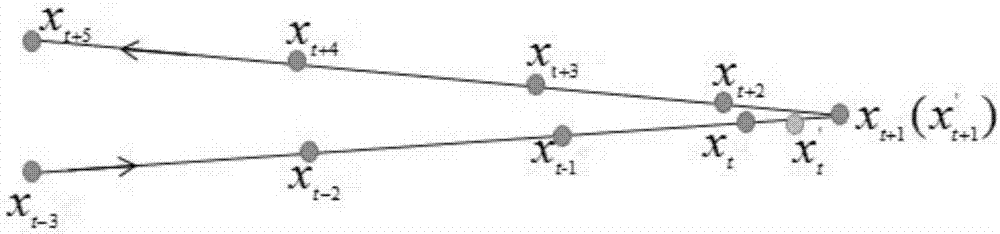

Examples

Embodiment 1

[0092] This embodiment compares the method of the present invention with other target tracking methods.

[0093] At present, most algorithms that apply deep learning to the object tracking problem are relatively slow. The fastest is GOTURN (Generic Object Tracking Using Regression Networks), a general object tracking algorithm based on a regression network that was proposed in 2016. To evaluate the algorithm of the present invention more accurately and objectively, multiple groups of comparative experiments against the GOTURN algorithm were designed. The evaluation covers three aspects of the target tracking algorithm: accuracy, real-time performance, and robustness. Tracking accuracy and coincidence are used to quantify accuracy, and tracking speed is used to quantify real-time performance. Robustness is evaluated by qualitative analysis....
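The three quantitative measures named above can be illustrated with a minimal Python sketch. The excerpt does not give the patent's exact definitions, so the sketch rests on assumptions: "coincidence" is taken to be the intersection-over-union overlap between predicted and ground-truth boxes, "tracking accuracy" is taken to be the fraction of frames whose center location error falls below a pixel threshold, and "tracking speed" is frames per second. The function names, the `(x, y, width, height)` box layout, and the 20-pixel default threshold are all hypothetical choices made for illustration.

```python
import math
from typing import Dict, Sequence, Tuple

# Assumed box layout: (x, y, width, height), with (x, y) the top-left corner.
Box = Tuple[float, float, float, float]


def overlap_ratio(a: Box, b: Box) -> float:
    """Intersection over union of two boxes (assumed meaning of 'coincidence')."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance in pixels between the centers of two boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return math.hypot((ax + aw / 2) - (bx + bw / 2), (ay + ah / 2) - (by + bh / 2))


def evaluate(
    predicted: Sequence[Box],
    ground_truth: Sequence[Box],
    elapsed_seconds: float,
    error_threshold: float = 20.0,  # hypothetical default, not from the patent
) -> Dict[str, float]:
    """Summarize accuracy, coincidence, and speed for one tracked sequence."""
    if not predicted or len(predicted) != len(ground_truth):
        raise ValueError("need equally sized, non-empty box sequences")
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")

    n = len(predicted)
    pairs = list(zip(predicted, ground_truth))
    hits = sum(1 for p, g in pairs if center_error(p, g) <= error_threshold)
    return {
        "precision": hits / n,
        "mean_overlap": sum(overlap_ratio(p, g) for p, g in pairs) / n,
        "fps": n / elapsed_seconds,
    }
```

Running `evaluate` on the output of two trackers over the same sequences would give comparable numbers for each of the three measures. Robustness, which the text says is assessed qualitatively, is not covered by this sketch.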
