Anthropomorphic oral translation method and system with man-machine communication function

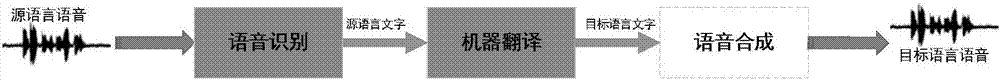

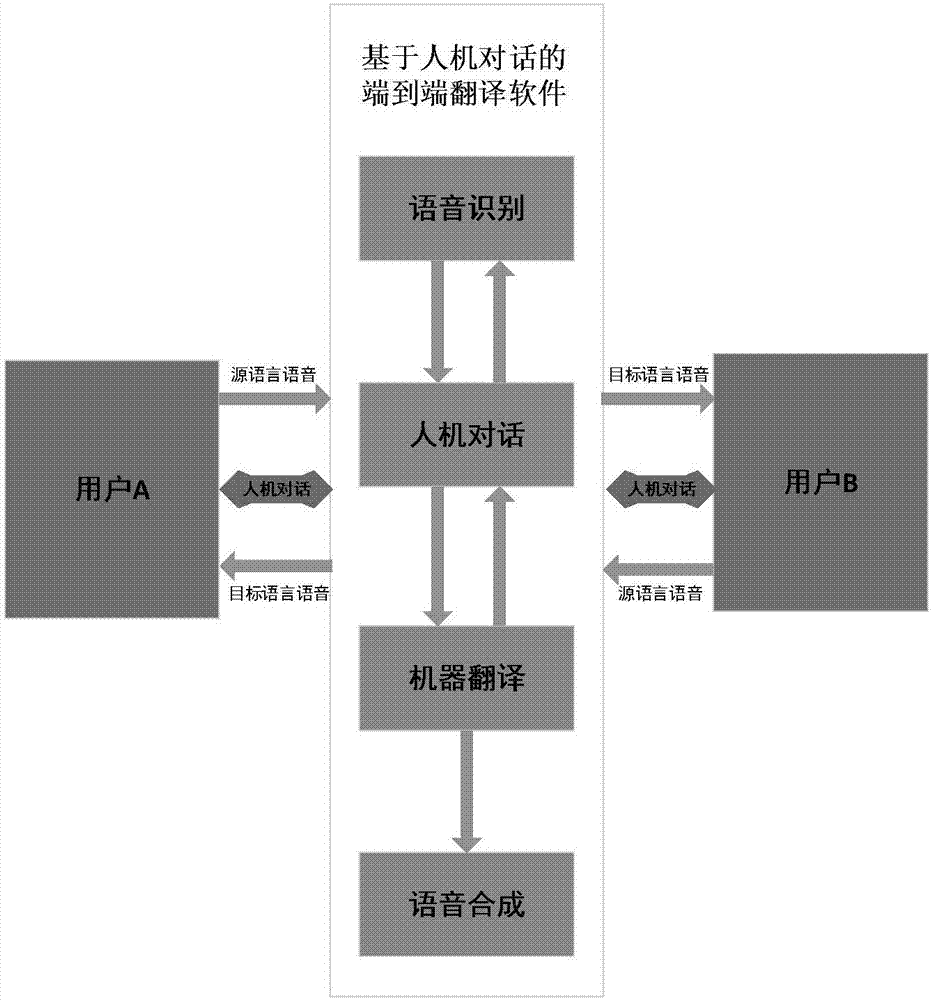

A technology for oral translation and human-computer dialogue, applied in the field of oral translation methods and corresponding systems. It addresses problems such as the difficulty of meeting scene-friendliness and accuracy requirements and the lack of communication between user and machine, with the effect of improving accuracy and providing an intelligent, easy-to-use translation and interaction experience.


Examples

Example 1

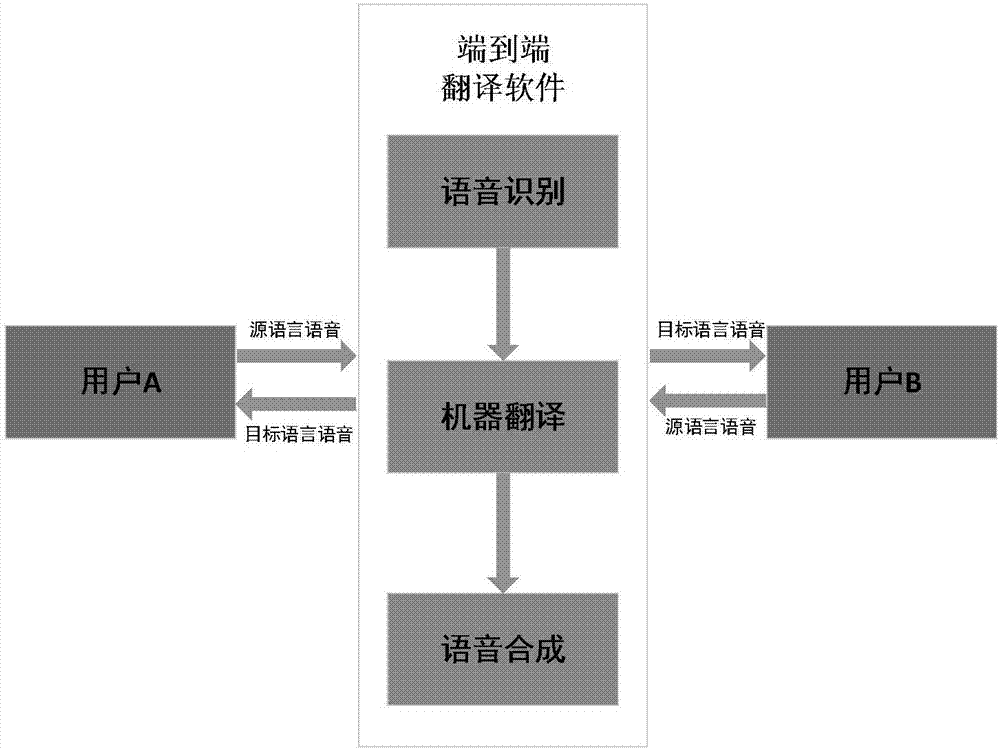

[0038] The first embodiment: a two-party oral translation dialogue system on a mobile phone

[0039] In this embodiment, a two-party oral translation dialogue system on a mobile phone is provided. The system provides an end-to-end translation dialogue function for both parties in the conversation, and initiates a man-machine dialogue with the user when necessary to improve the user's translation experience.
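The source does not say what makes a man-machine dialogue "necessary". As a purely illustrative sketch, assume the trigger is an empty or low-confidence speech recognition result; the `RecognitionResult` type, the `confidence` score, the `0.6` threshold and the prompt wording are all hypothetical and not taken from the patent.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical tuning value; the patent does not specify any threshold.
CONFIDENCE_THRESHOLD = 0.6


@dataclass
class RecognitionResult:
    text: str          # recognized source-language text
    confidence: float  # assumed 0.0-1.0 score from a hypothetical recognizer


def clarification_prompt(
    result: RecognitionResult, threshold: float = CONFIDENCE_THRESHOLD
) -> Optional[str]:
    """Return a question the system should ask the user, or None to translate directly."""
    if not result.text.strip():
        # Nothing usable was recognized: ask the speaker to repeat.
        return "Sorry, I didn't catch that. Could you say it again?"
    if result.confidence < threshold:
        # Uncertain recognition: confirm with the speaker before translating.
        return f'Did you mean: "{result.text}"?'
    return None  # confident enough to translate without asking
```

Returning `None` keeps the normal path (translate immediately) separate from the interactive path, so the dialogue is only started when the assumed condition is met.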

[0040] (1) Obtain the source-language speech input by the speaker. The input method can be chosen according to the speaker's environment and usage habits (as shown in Figure 6).

[0041] If the speaker's current environment is not suitable for voice input, the system provides an alternative that allows source-language text to be entered directly;

[0042] If the speaker prefers to specify the languages of the two parties in the conversation manually, the system provides a button for manually specifying the language, and at the same time allows ma...
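A minimal sketch of the input choice in step (1), assuming a turn carries either speech or typed text plus an optional manually chosen language. The `SpeakerInput` type and the injected `recognize` and `detect_lang` callables are hypothetical stand-ins for the system's speech recognition and language identification, which the source does not detail.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class SpeakerInput:
    """One turn of speaker input (hypothetical structure)."""
    audio: Optional[bytes] = None      # raw speech, when voice input is used
    text: Optional[str] = None         # typed source-language text, when voice is inconvenient
    source_lang: Optional[str] = None  # language set via the manual language button


def resolve_source(
    turn: SpeakerInput,
    recognize: Callable[[bytes], str],  # hypothetical speech recognizer
    detect_lang: Callable[[str], str],  # hypothetical language identifier
) -> Tuple[str, str]:
    """Return (source_text, source_lang) for one turn."""
    if turn.text is not None:
        # Typed text bypasses speech recognition entirely.
        source_text = turn.text
    elif turn.audio is not None:
        source_text = recognize(turn.audio)
    else:
        raise ValueError("turn has neither speech nor text input")
    # A manually specified language takes precedence over automatic detection.
    source_lang = turn.source_lang or detect_lang(source_text)
    return source_text, source_lang
```

For example, `resolve_source(SpeakerInput(text="hola", source_lang="es"), asr, lid)` never calls either stub, while an audio-only turn goes through both.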

Example 2

[0070] The second embodiment: a multi-party oral translation conference system on a mobile phone

[0071] In this embodiment, a multi-party spoken-language translation conference system on a mobile phone is provided. The system provides participants with an end-to-end multi-party spoken-language conference translation function and an intelligent conference moderator function, and initiates a man-machine dialogue with participants when necessary to improve the translation experience of the meeting.

[0072] (1) Obtain meeting information and create a meeting (as shown in Figure 10). The meeting creator specifies the meeting identification code, which serves as the unique identifier of the meeting; other participants join the meeting by entering this code. The meeting creator also specifies the meeting name, and the meeting name is the content summary or participation of the meeting The informat...
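The create/join flow in step (1) amounts to a registry keyed by the meeting identification code. The in-memory sketch below is illustrative only: the `Meeting` and `MeetingRegistry` names are hypothetical, and a real deployment would presumably keep this state on a shared server rather than in one process.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Meeting:
    code: str                # meeting identification code, unique per meeting
    name: str                # meeting name chosen by the creator
    creator: str
    participants: Set[str] = field(default_factory=set)


class MeetingRegistry:
    """In-memory registry of meetings keyed by identification code (hypothetical)."""

    def __init__(self) -> None:
        self._meetings: Dict[str, Meeting] = {}

    def create(self, code: str, name: str, creator: str) -> Meeting:
        # The code must be unique because it is what identifies the meeting.
        if code in self._meetings:
            raise ValueError(f"meeting code {code!r} is already in use")
        meeting = Meeting(code=code, name=name, creator=creator, participants={creator})
        self._meetings[code] = meeting
        return meeting

    def join(self, code: str, participant: str) -> Meeting:
        # Other participants join by entering the code.
        try:
            meeting = self._meetings[code]
        except KeyError:
            raise KeyError(f"no meeting with code {code!r}") from None
        meeting.participants.add(participant)
        return meeting
```

Rejecting a duplicate code at creation time is what guarantees that entering a code always leads to exactly one meeting.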

Example 3

[0099] The third embodiment: an anthropomorphic spoken-language translation system without a screen display

[0100] In this embodiment, an anthropomorphic spoken-language translation system without a screen display is provided. The system provides end-to-end anthropomorphic translation services to users without a screen and adopts the following technical solution (as shown in Figure 13):

[0101] (1) Obtain information about the speaker

[0102] Without a screen display, the system acquires relevant information about the speaker through intelligent processing of the speaker's input voice. This information includes but is not limited to:

[0103] Optionally, at start-up the system asks each speaker in turn to say a common phrase, so as to obtain the language information of the dialogue participants;

[0104] The system automatically identifies the speaker, and uses the identification result as an important basis for language ide...
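One way to picture paragraphs [0103] and [0104] is to enroll each speaker's voice together with a language when they say the start-up phrase, then map later utterances to the closest enrolled speaker and reuse that speaker's language. The sketch assumes a hypothetical voice-embedding extractor (not shown) and uses nearest-neighbour cosine similarity as a stand-in technique; the patent does not state how speaker identification is done.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class SpeakerLanguageMap:
    """Maps enrolled speakers to their languages (hypothetical helper)."""

    def __init__(self) -> None:
        self._profiles: Dict[str, Tuple[List[float], str]] = {}

    def enroll(self, speaker_id: str, voice_embedding: List[float], language: str) -> None:
        # Called at start-up after the speaker says the common phrase.
        self._profiles[speaker_id] = (voice_embedding, language)

    def identify(self, voice_embedding: List[float]) -> Tuple[str, str]:
        """Return (speaker_id, language) of the most similar enrolled speaker."""
        if not self._profiles:
            raise LookupError("no speakers enrolled")
        best_id = max(
            self._profiles,
            key=lambda sid: cosine(self._profiles[sid][0], voice_embedding),
        )
        return best_id, self._profiles[best_id][1]
```

The identified speaker's enrolled language then serves as a strong prior for language identification, in the spirit of [0104].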


© 2025 PatSnap. All rights reserved.